1/13

@MatthewBerman

OpenAI just dropped Operator, its first agent, which can use a web browser to complete tasks for you.

For the first time, OpenAI's agents can directly impact the real world.

The AI industry had strong reactions!

Here’s a roundup of reactions and incredible use cases.

2/13

@MatthewBerman

Andrej Karpathy, cofounder of OpenAI, compares Operator to humanoid robots in the physical world.

Why? Because both are designed to interact with systems built for humans (browsers, factories, streets).

[Quoted tweet]

Projects like OpenAI’s Operator are to the digital world as Humanoid robots are to the physical world. One general setting (monitor keyboard and mouse, or human body) that can in principle gradually perform arbitrarily general tasks, via an I/O interface originally designed for humans. In both cases, it leads to a gradually mixed autonomy world, where humans become high-level supervisors of low-level automation. A bit like a driver monitoring the Autopilot. This will happen faster in digital world than in physical world because flipping bits is somewhere around 1000X less expensive than moving atoms. Though the market size and opportunity feels a lot bigger in physical world.

We actually worked on this idea in very early OpenAI (see Universe and World of Bits projects), but it was incorrectly sequenced - LLMs had to happen first. Even now I am not 100% sure if it is ready. Multimodal (images, video, audio) just barely got integrated with LLMs last 1-2 years, often bolted on as adapters. Worse, we haven’t really been to the territory of very very long task horizons. E.g. videos are a huge amount of information and I’m not sure that we can expect to just stuff it all into context windows (current paradigm) and then expect it to also work. I could imagine a breakthrough or two needed here, as an example.

People on my TL are saying 2025 is the year of agents. Personally I think 2025-2035 is the decade of agents. I feel a huge amount of work across the board to make it actually work. But it *should* work. Today, Operator can find you lunch on DoorDash or check a hotel etc, sometimes and maybe. Tomorrow, you’ll spin up organizations of Operators for long-running tasks of your choice (eg running a whole company). You could be a kind of CEO monitoring 10 of them at once, maybe dropping in to the trenches sometimes to unblock something. And things will get pretty interesting.

3/13

@MatthewBerman

Greg Brockman, President of OpenAI, hints that Operator is just the start. Expect agents that can control your desktop, phone, and more.

[Quoted tweet]

Operator — research preview of an agent that can use its own browser to perform tasks for you.

2025 is the year of agents.

4/13

@MatthewBerman

Aaron Levie, CEO of Box, believes giving agents full browser access unlocks 100x more use cases.

Most web tasks lack APIs, and browser agents fill this gap.

[Quoted tweet]

AI Agents having full browser access is going to open up 100x more use cases for AI. The web doesn’t have APIs for the long tail of tasks that we do every day on computers, and browser use is a major missing link. Another building block for AI is here.

https://video.twimg.com/ext_tw_video/1882496963976871936/pu/vid/avc1/1162x720/EWV5Y5IqcAMMRg2B.mp4

5/13

@MatthewBerman

Open source takes on Operator!

• @_akhaliq shares BrowserGPT

• @hwchase17 recommends BrowserUse

• @pk_iv shares BrowserBase

[Quoted tweet]

You don't need to pay $200 for AI.

We're launching Open Operator - an open source reference project that shows how easy it is to add web browsing capabilities to your existing AI tool.

It's early, slow, and might not work everywhere. But it's free and open source!

https://video.twimg.com/ext_tw_video/1882837132450082817/pu/vid/avc1/1694x1080/ps39hpEL-nrdARdv.mp4

6/13

@MatthewBerman

Data advantage: Greg Kamradt, President of @arcprize, points out that Operator collects procedural data as it learns to navigate websites, improving over time.

This “memory” gives OpenAI a major edge in the agent race.

[Quoted tweet]

Imagine the procedural memory OpenAI is building up about how to navigate every website operator touches

Once they jump out of the browser to the desktop, no app is safe.

7/13

@MatthewBerman

But it's not perfect...yet.

[Quoted tweet]

My favorite thing about the AI agents is that they can help me get something done in half an hour, what used to take me less than a minute.

8/13

@MatthewBerman

Use cases highlight Operator’s potential:

.@garrytan: Planned an impromptu Vegas trip, navigating complex bookings.

[Quoted tweet]

OpenAI Operator is very impressive - planning an impromptu trip to Vegas — it's able to navigate JSX's website and handle unusual cases and basically figure out sold out scenarios, change dates and times, and now it's figuring out where to eat for Friday night for 2.

Bravo.

9/13

@MatthewBerman

.@omooretweets: Paid a bill from just a photo.

[Quoted tweet]

I just gave Operator a picture of a paper bill I got in the mail.

From only the bill picture, it navigated to the website, pulled up my account, entered my info, and asked for my credit card number to complete payment.

We are so back

10/13

@MatthewBerman

.@daniel_mac8: Built a website using Gemini AI + Operator.

[Quoted tweet]

well played @OpenAI

tried to access Operator through Operator

check out the message that was waiting for me:

11/13

@MatthewBerman

The coolest demo? @kieranklaassen used Operator to QA test a local dev environment, tunneling Operator to it for 24/7 bug checks.

Imagine having an Agent QA engineer ready at all times to work alongside you.

[Quoted tweet]

This is extremely promising and the best use case of Operator so far!

@OpenAI ChatGPT Operator is great for testing my local dev environment to see if my feature is working!

Tunnel Operator to your local dev env and let it test your feature. Waiting for an API and @cursor_ai to integrate it.

https://video.twimg.com/ext_tw_video/1882585578962817024/pu/vid/avc1/1112x720/lRjJyXbBTM7Bc7AT.mp4

12/13

@MatthewBerman

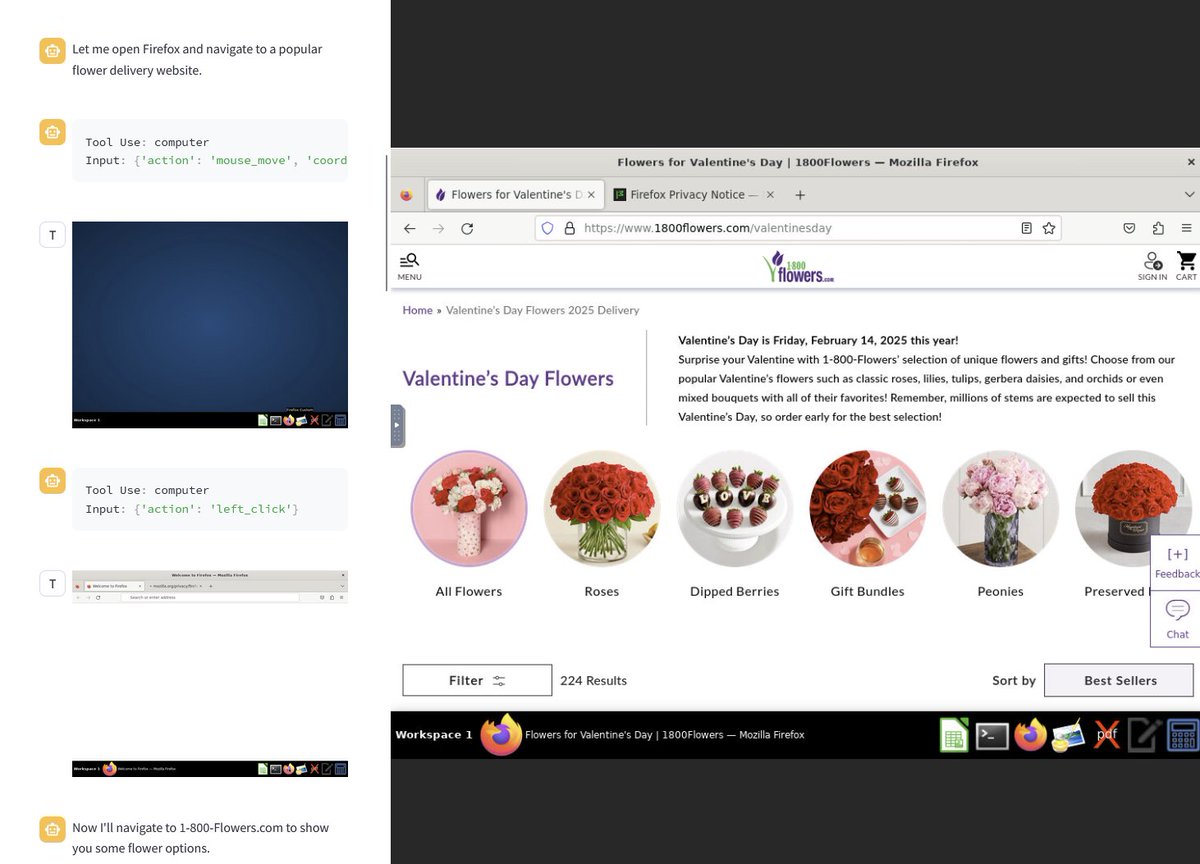

Interesting insight from @emollick:

Agents’ brand preferences (e.g., Operator favoring Bing, Claude favoring 1-800-Flowers) may inadvertently create a new SEO-style industry.

Agents may define how brands compete in the future.

[Quoted tweet]

Next big thing for brands: knowing what brands agents prefer.

If you ask for stock prices, Claude with Computer Use goes to Yahoo Finance while Operator does a Bing search

Operator loves buying from the top search result on Bing. Claude has direct preferences like 1-800-Flowers

13/13

@MatthewBerman

If you enjoy this kind of stuff, check out my newsletter:

Forward Future Daily

And check out my full video breakdown of the industry's reactions here:

https://invidious.poast.org/watch?v=i9s4fqhSvz8
