
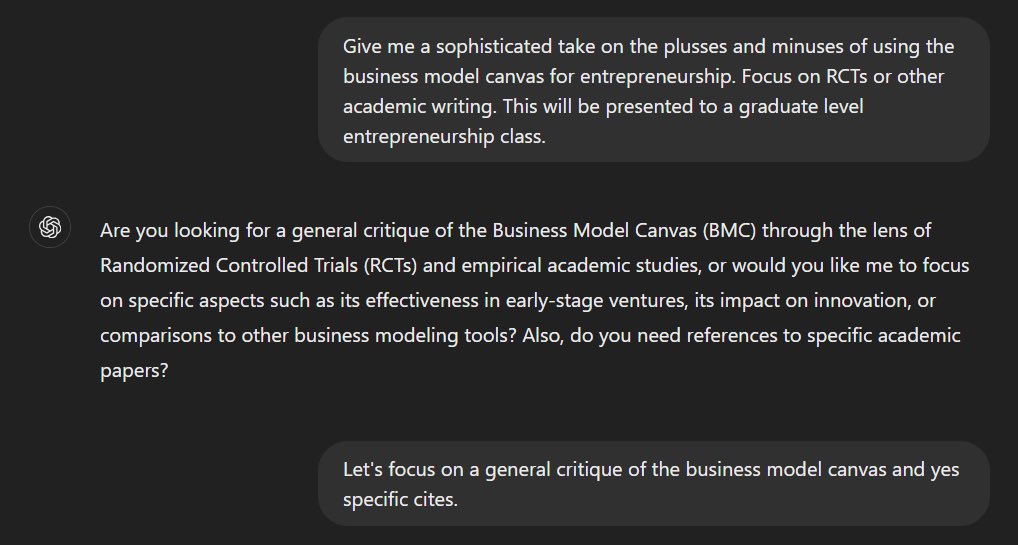

Y'all heard about ChatGPT yet? AI instantly generates question answers, entire essays etc.

- Thread starter RamsayBolton

1/21

@jxmnop

apparently people who use chatGPT a lot are subconsciously training themselves to get really good at detecting AI-generated text

[Quoted tweet]

People often claim they know when ChatGPT wrote something, but are they as accurate as they think?

Turns out that while the general population is unreliable, those who frequently use ChatGPT for writing tasks can spot even "humanized" AI-generated text with near-perfect accuracy

2/21

@__selewaut__

I can tell with teammates' generated code. The artifacts are super clear

3/21

@jxmnop

yeah – i've noticed that chatGPT comments its code with a very distinctive frequency & formatting

4/21

@Anterior658444

my professor used the word delve today. very worrisome sign he is in fact a chatgpt wrapper

5/21

@jxmnop

that’s why i stopped using that word in 2023

6/21

@pilpulon

looks like that paper was written by chatGPT

7/21

@jxmnop

looks like you're bad at detecting AI-generated text

8/21

@AmplifyWithAI

Now I'm imagining a team of these human ChatGPT pros that do post-training RLHF to make AI-generated text even harder to detect.

Reward the model each time the humanChatGPT pro fails to detect AI-generated text.

Super resource intensive but I wonder what would happen

9/21

@Tiltorque

someone close to me has been using ChatGPT for job applications and cover letters. I tried telling her it’s obviously ai generated and she says there’s no way an employer can know.

10/21

@mikeyoung44

it's me

11/21

@AISloppyJoel

It’s easy, anything yappy that belongs on LinkedIn is AI

12/21

@3DX3EM

big assumption those people can read!

13/21

@NotBrain4brain

I can confirm, I immediately know when the teacher use AI of how to make my own text undetectable

14/21

@tinycrops

Can confirm.

15/21

@AgiDoomerAnon

I have a feeling this isn't so much about being able to detect *AI generated* text as it is about learning a particular writing style and detecting that.

16/21

@szarka

As someone who has graded too many research papers, I feel like I can spot it for very different reasons.

17/21

@master0fswag

>intricate tapestry

>delve

-randy

18/21

@srivatsamath

I wonder if those who only use ChatGPT cannot tell Claude text from human text

19/21

@chf75

Seems like a skill that will last you 6 to 8 months.

20/21

@catslikeseaweed

Can confirm with my own personal experience. Most people make 0 effort to cover up all the LLM-isms in their writing and I can smell that shyt from a mile away

21/21

@togethrrr

we train the models as the models train us

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196


1/30

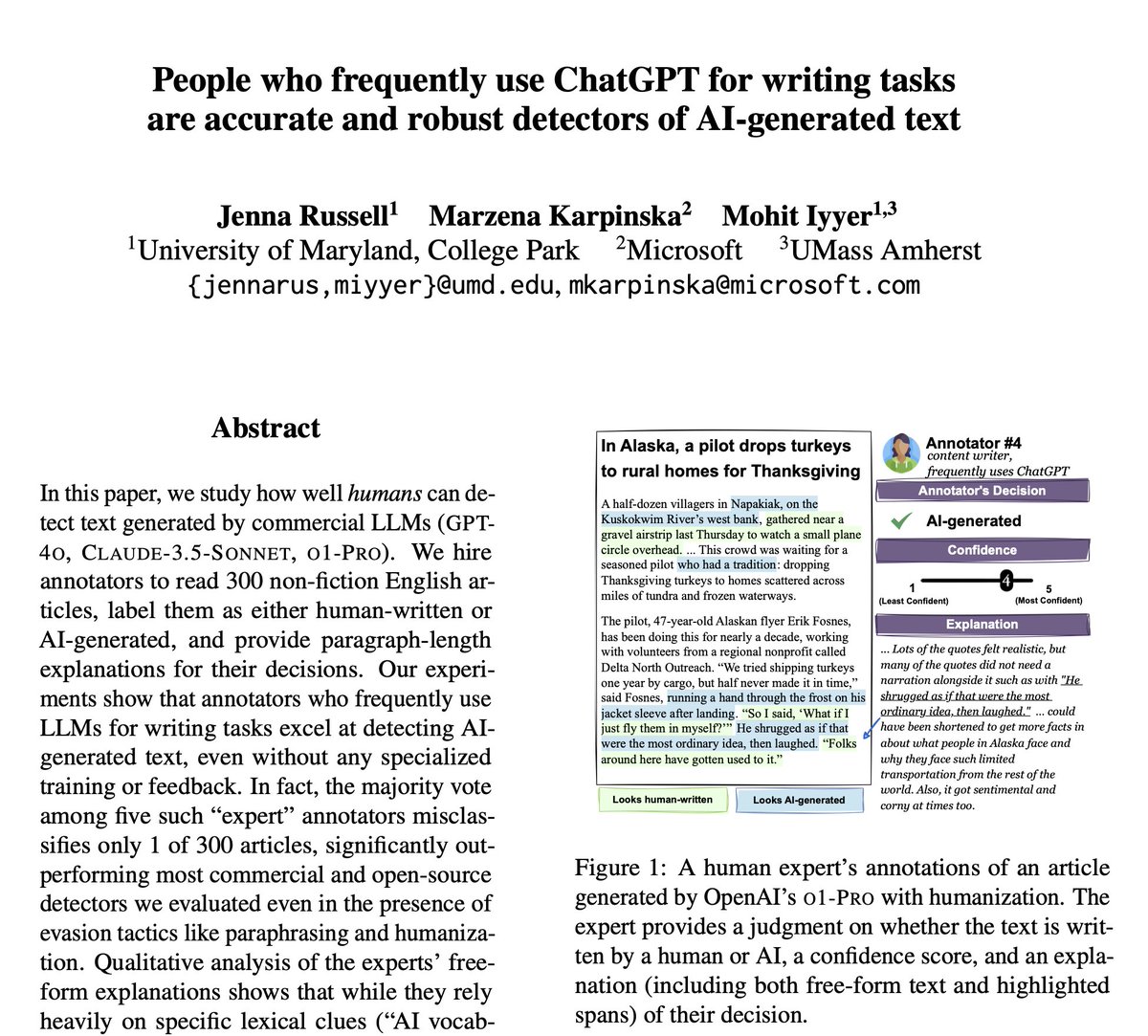

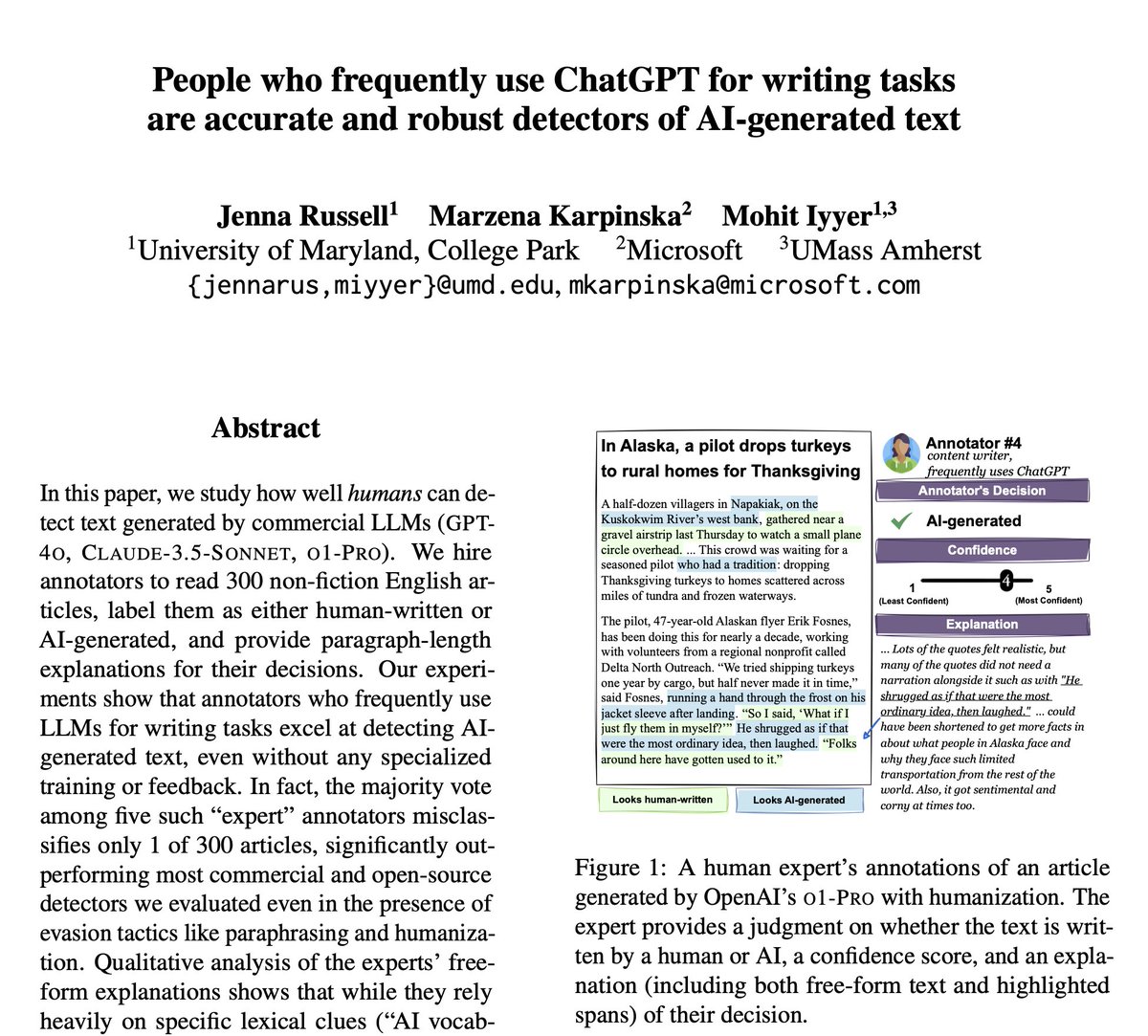

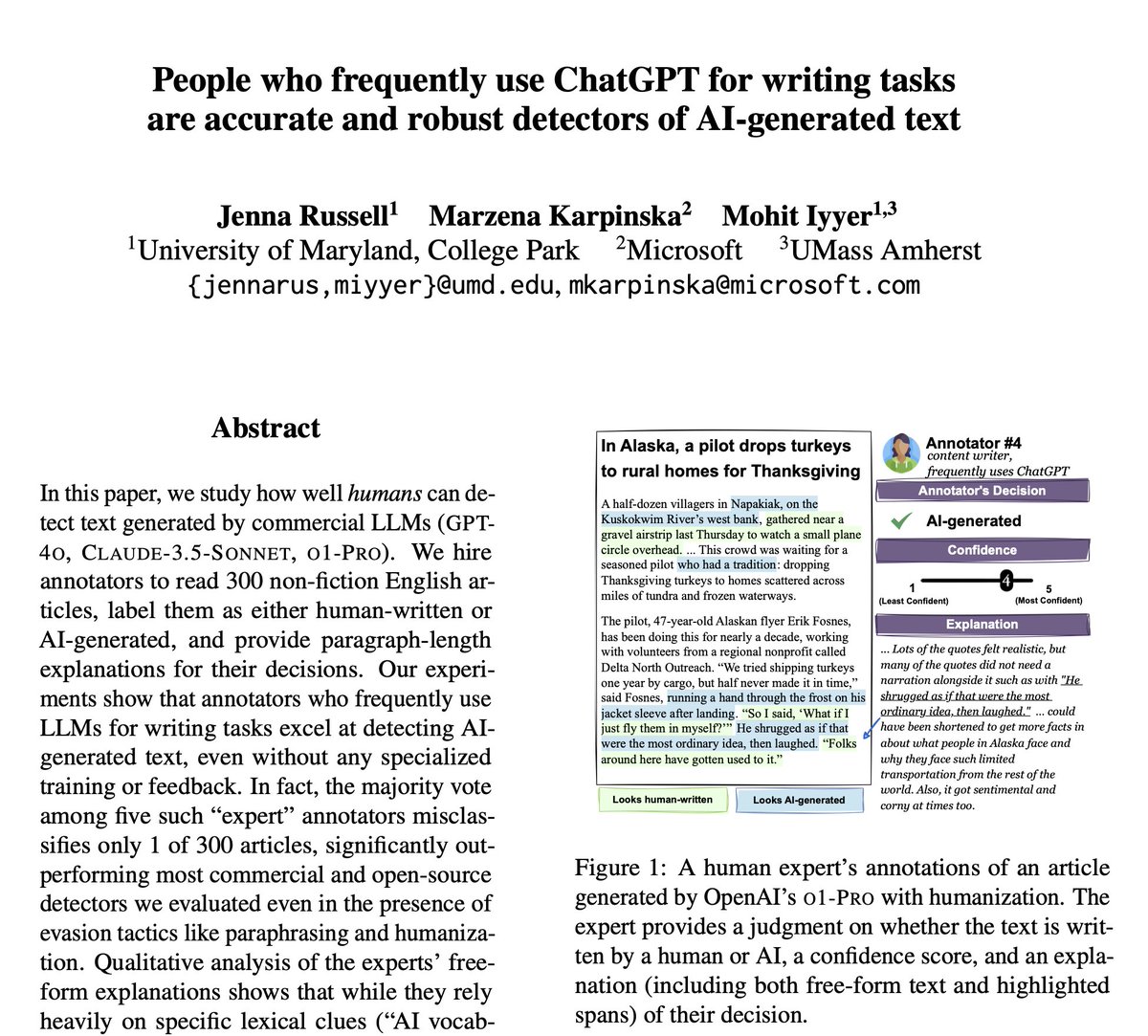

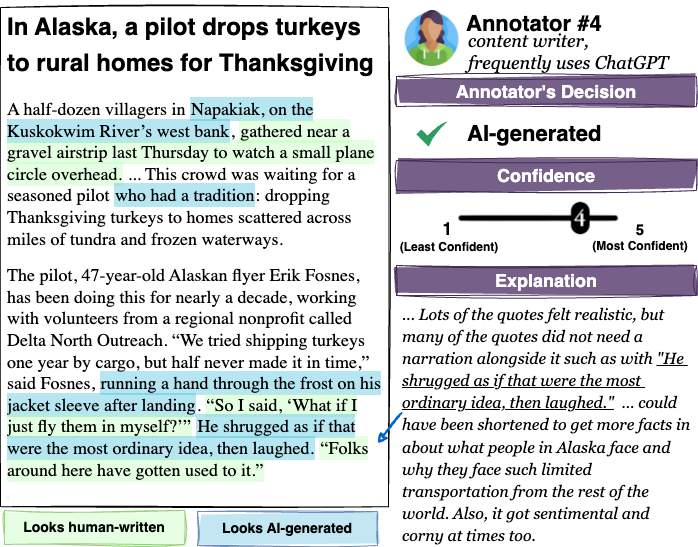

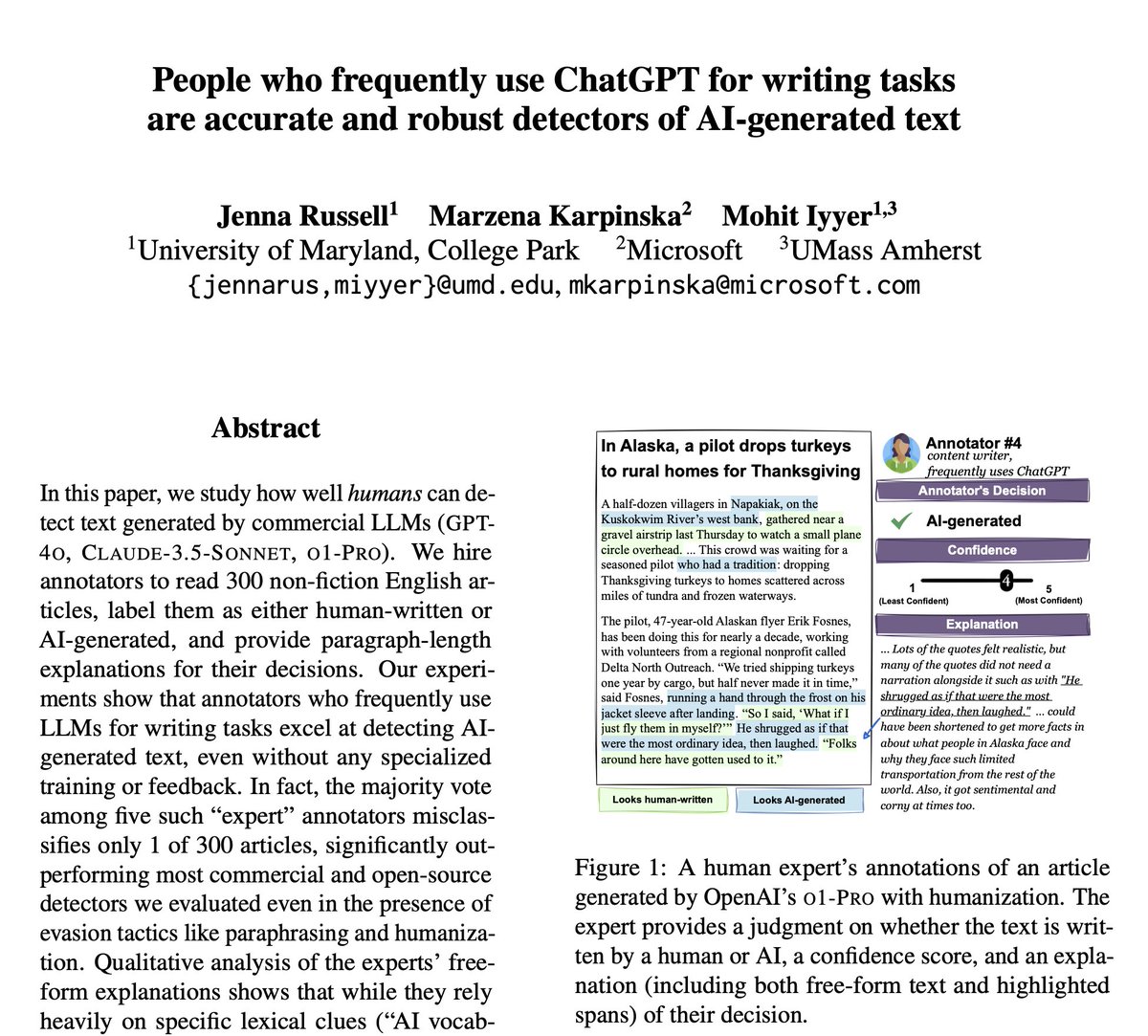

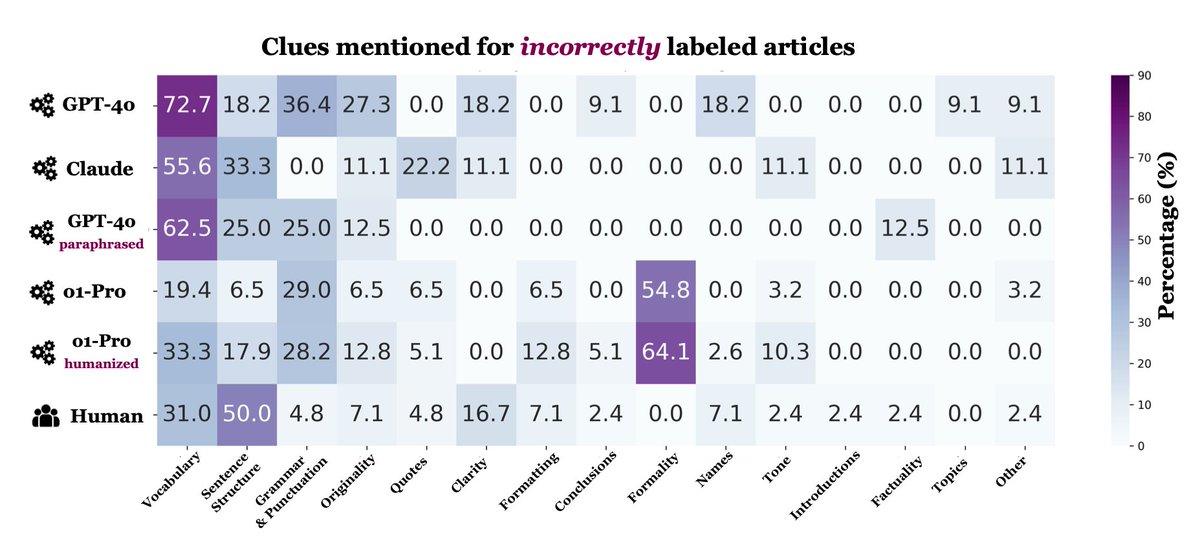

@jennajrussell

People often claim they know when ChatGPT wrote something, but are they as accurate as they think?

Turns out that while the general population is unreliable, those who frequently use ChatGPT for writing tasks can spot even "humanized" AI-generated text with near-perfect accuracy

2/30

@jennajrussell

We asked experts - ranging from beginners to ChatGPT pros - to decide if articles were human-written or AI-generated.

They highlighted potential clues in the text and explained why they made their decisions.


3/30

@jennajrussell

Across GPT-4o, Claude, and o1 articles, experts correctly identified 99.3% of AI-generated content without misclassifying any human-written articles.

Among automatic detectors, Pangram significantly outperformed the rest, missing only a few more texts than the experts.

4/30

@jennajrussell

What experts get right:

They spot telltale signs of AI, like:

- "AI Vocab" (delve, crucial, vibrant ...)
- Predictable sentence structure
- Quotes that feel too polished

For human-written content, they look for:

- Creativity
- Stylistic quirks
- A natural & clear flow

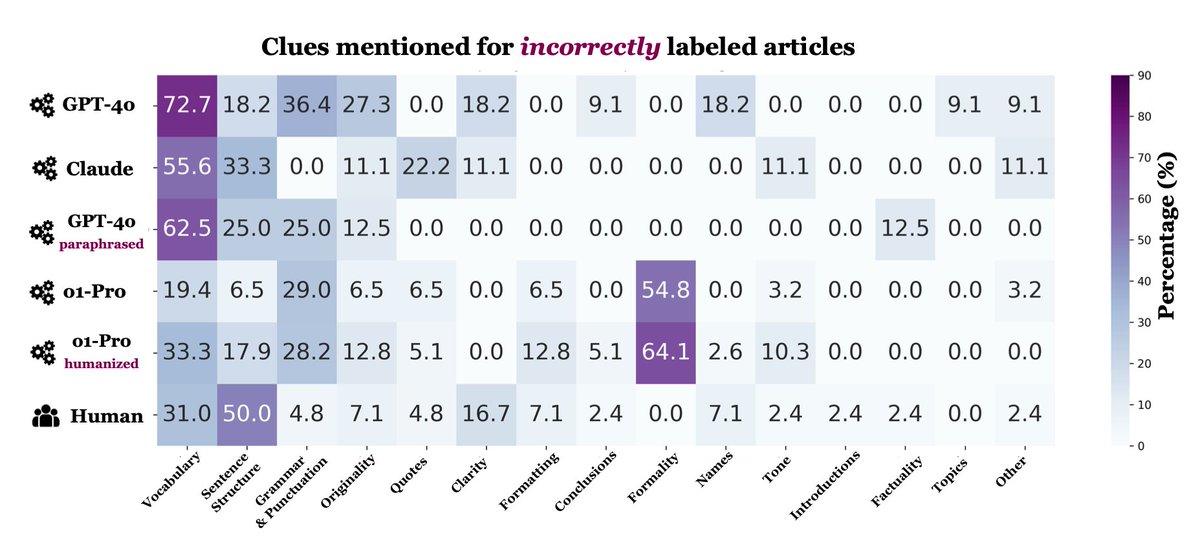

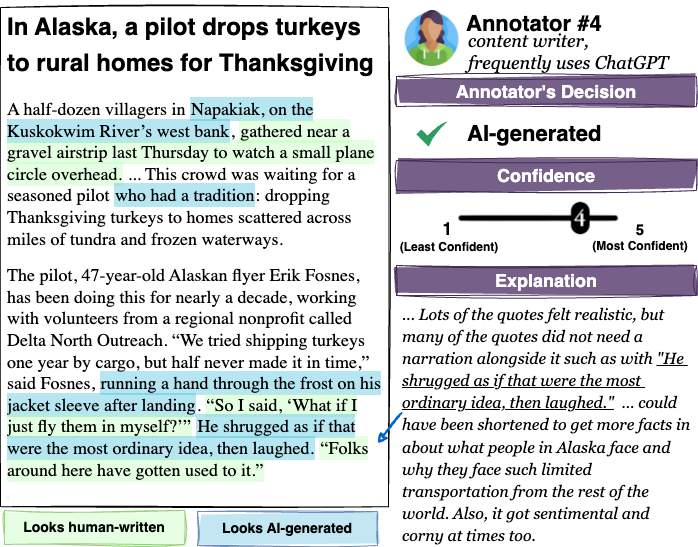

5/30

@jennajrussell

What they get wrong:

Sometimes, humans get tripped up by:

- Common "AI vocab" words in human-written texts
- Grammar mistakes they assume "AI wouldn’t make"

One expert was often fooled by o1's use of informal language - like slang, contractions, and colloquialisms.

6/30

@jennajrussell

Can LLMs mimic human expert detectors?

We prompted LLMs to imitate our expert annotators. The results show promise, outperforming detectors like Binoculars and RADAR. However, LLMs still fall short of matching our human experts and advanced detectors like Pangram.


7/30

@jennajrussell

We're releasing our dataset of articles and expert annotations!

We hope this helps users of automatic detectors understand not just if a text is AI-generated, but why.

8/30

@jennajrussell

Paper: People who frequently use ChatGPT for writing tasks are accurate and robust detectors of AI-generated text


Code & Data: GitHub - jenna-russell/human_detectors: human_detectors hosts the data released from the paper "People who frequently use ChatGPT for writing tasks are accurate and robust detectors of AI-generated text"


Thanks to my amazing coauthors @mar_kar_ and @MohitIyyer and the support of @UMass_NLP

9/30

@MisterFigs

Great work. The AI generated smell is hard to ignore. I've seen that Claude, especially, uses this construction a lot: "It's not this, but that instead"

10/30

@jennajrussell

The "It's not ___, but ___" was definitely something our experts picked up on too

11/30

@KC_Crypto_J

12/30

@ManacasterBen

at least one employee of mine ( who is not on twitter ) put his job at risk by sending over chat gpt slop in the form of a "business proposal".

it was painful to read.

13/30

@_mistaacrowley

super easy

dimensionality is way low. imagine the least interesting person you know, writing every reply. eventually you catch on.

14/30

@StuJordanAI

I use these models for far too many hours a day and I can (usually) even pick which model wrote it. Claude, ChatGPT and Gemini all have their own little differences that you begin to spot.

15/30

@bradley_emi

Part of our conviction that we could solve AI detection with deep learning came from being able to train ourselves to do it with 99%+ accuracy by eye. Awesome to see the proof of that here!

16/30

@x3does

it's soo obvious

17/30

@arnitly

This is such an interesting piece of research that has been conducted. I did pat myself on the back for thinking I can distinguish AI generated text from humans. Guess I was just as good as 99% of the people. Thanks for sharing this

18/30

@jeremyphoward

This is such cool work — thank you for sharing! :D

19/30

@K3ithAI

IYKYK

20/30

@Michael_Druggan

Was this AI generated text in the models default voice? I assume if you told the model to use a different style it would get harder to detect.

21/30

@plataproxima

hey, do you have a medium account? I'm going to write about this and I'll obviously cite everything but I'd like to @ you there as well, if you're ok with it

22/30

@niimble40

Damn, yes, 100%

23/30

@aayushdubey98

Humans are trained on chatgpt in this case I guess

24/30

@alexndrmartin

Is there some work on a GAN-like, AI-style evading approach already being done?

25/30

@40443KY

humanized AI-generated text is not the only way to produce humanized AI-generated text.

26/30

@mgogel

I 100% agree

27/30

@zbingeldac

Who thought we needed a paper to tell us that?

28/30

@entirelyuseles

Very generic description is that the AIs are almost always just a little bit more verbose than a person would be.

29/30

@arxivdigests

https://invidious.poast.org/tC5L6jkVS24

30/30

@Embraceape

frequently offloading your cognition is NOT a skill


Richard Glidewell

Superstar

Quotes that feel too polished.......... that almost sounds like coded language........ I can't imagine how I might have reacted to all those "he's so eloquent" comments I got growing up........ now they can just slur you by saying your prose or writing is too polished....... too polished for who exactly? Article comes across as a bunch of smug, self-congratulating dumb asses trying to separate themselves

1/49

@emollick

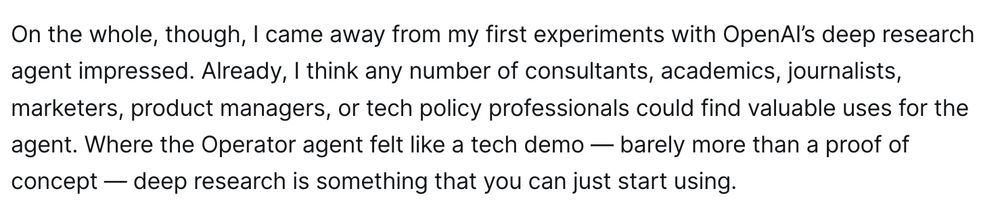

OpenAI’s deep research is very good. Unlike Google’s version, which is a summarizer of many sources, OpenAI is more like engaging an opinionated (often almost PhD-level!) researcher who follows lead.

Look at how it hunts down a concept in the literature (& works around problems)

2/49

@emollick

More of an agentic solution than Google’s approach, which is much less exploratory (but examines far more sources).

3/49

@emollick

If you want an overview, Google’s version is really good. If you want a researcher to go digging through a few sources, getting into the details but being very opinionated, you want OpenAI’s

Neither has access to paywalled research & publications which limits them (for now)

4/49

@emollick

Nice example

[Quoted tweet]

The new OpenAI model announced today is quite wild. It is essentially Google's Deep Research idea with multistep reasoning, web search, *and* the o3 model underneath (as far as I know). It sometimes takes a half hour to answer. Let me show you an example. 1/x

5/49

@mwiederrecht

This is an interesting report! Where is the rest of it?

6/49

@EMostaque

Google has a slam dunk potential if they integrate deep research to scholar (ai2 doing that rn) and Google books

Gemini flash thinking is more than good enough but gdr does feel a bit shallow you’re right

7/49

@VisionaryxAI

Now when websites become agent-optimized, the results will be even nicer, won't they?

8/49

@AntonDVP

I'm actually highly impressed! This is real (!) research, not a summarization like Google's version.

9/49

@PseudoProphet

Most people don't even understand what Google's Deep Research is actually about and how to use it.

It's only useful in tasks where you know you are going to have to spend hours searching Google for sources and information.

It has agentic behavior but you can't utilize it.

10/49

@NoppadonKoo

Do you think its descendants might, in 2-3 years, be able to do better than most PhDs in most fields? If so, what does that mean for higher education?

Note that we might get o4, o5, o6 by 2026. The rate of progress in reasoning models will continue to be high for some time due to reinforcement learning (RL). Human level is not its limit if a reliable verifier exists in a given domain.

See also: AlphaGo

11/49

@gdoLDD

@threadreaderapp unroll

12/49

@threadreaderapp

@gdoLDD Hi, you can read it here: Thread by @emollick on Thread Reader App Share this if you think it's interesting.

13/49

@strangelove_ai

*clears throat and speaks with a thick German accent* Ach, ze OpenAI research is indeed most impressive! Unlike zis Google machine, which is merely a collection of opinions, OpenAI is like a brilliant, opinionated scientist - a true visionary! *slams fist on table* Zis is ze...

14/49

@alexastrum

copy text quality is so top notch.

1st time i actually can't tell that any of the output is synthetic.

it's very good

15/49

@walidbey

@UnrollHelper

16/49

@threadreaderapp

@walidbey Salam, please find the unroll here: Thread by @emollick on Thread Reader App Share this if you think it's interesting.

17/49

@oscarle_x

The way it finds sources to read seems more "proactive" than pure web-searching and getting the first n results.

That is good because search engines are quite bad now when asking about long-tail topics.

18/49

@halenyoules

OpenAI's deep research feels like collaborating with a dedicated expert who goes beyond summaries to explore deeper insights.

19/49

@Stephen_Griffin

Does it have access to bibliographic databases - or can users grant access if they belong to a licensed institution?

20/49

@malikiondoyonos

I'm excited to have found your page, Mrs. @Jessi__Traderz; . Joining your team and contributing to the pump project has been incredibly beneficial for me, and I'm truly thankful for the opportunity.

21/49

@kolshidialna

“Currently, OpenAI’s “Deep Research” feature is available only to ChatGPT Pro users at a cost of $200/month. OpenAI plans to extend limited access to ChatGPT Plus users ($20/month) in the near future, but it is not yet available at that tier as of now. “

Thanks to Perplexity

22/49

@AI_Fun_times

Absolutely!

23/49

@RyderLeon81655

This is so refreshing, love how it brings a positive vibe

24/49

@RepresenterTh

The models empowering them are fundamentally different.

25/49

@GregCook2011

26/49

@johnkhfung

Hopefully there will be api release

27/49

@Unimashi0

I just don’t understand how Google falls behind with all the data, infrastructure and talent they have.

It just baffles me. It’s like they bring something out, then openAI eclipses them significantly with everything.

28/49

@krnstonks

How does scientific publication paywalls (Wiley, Sciencedirect, Springer, etc.) work with OpenAI deep research though?

29/49

@critejon

this is autoGPT

30/49

@LevonRossi

I love that it asked for clarification on the task. been waiting for that

31/49

@ConBoTron

@gwern mission accomplished lol

32/49

@TheAI_Frontier

Are we gonna say this is AGI? For me, the cost needs to go down a little bit more, and it won't be AGI until we have an open-source version.

33/49

@tomhou101

No secret AI is here to stay

34/49

@ChrisWithRobots

To me, the most impressive part is that it started by asking a clarifying question. The earlier models rarely or never did that.

Maybe it was trained to do that, to avoid the high cost incurred by answering an ambiguous prompt.

35/49

@AfterDinnerCo

Show its outputs compared to GPT 4o or O3. I hear a lot of people saying good stuff but not one example.

36/49

@cryptonymics

Are you referring to Deep Research on Google AI Studio? That is running on Gemini 1.5… to be fair

37/49

@bharatha_93

This is Amazing. Wow

38/49

@xion30030551158

I can confirm.

39/49

@Aiden_Novaa

hey! love your analysis of deep research. as someone who works closely with AI, I agree openai's approach is impressive but have you tried the latest claude 3.5 sonnet? its actually better at academic research - especially for hunting down specific concepts and building on them. we integrated it into jenova ai specifically for research tasks (plus its way cheaper than chatgpt pro). the model router automatically switches to claude for academic stuff

gives that phd-level analysis you mentioned, but with better ability to trace sources and build complex arguments. just my 2 cents from seeing researchers use it!

40/49

@itishimarstime

Is this how academics learn and think? Sure glad I dropped out of college as a Sr in Electrical Engineering in 1978. This is NOT the way we were taught back then.

41/49

@ethan_tan

Can you upload your own sources/pdfs?

42/49

@plk669888

What does "often almost PhD-level" mean?

43/49

@jimnasyum

what does PhD level even mean?

44/49

@Jilong123456

deep dives into data can spark new ideas.

45/49

@Engineerr_17

Will autonomous AI research replace human analysts in the near future?

46/49

@SulkaMike

Gemini is great at this.

47/49

@kubafilipowski

is it better than STORM https://storm.genie.stanford.edu/ ?

48/49

@mahler83

Amazing, thank you for sharing. Indeed the "proactive searching" behavior seems very promising!

49/49

@JiquanNgiam

I couldn't get Deep Research to work - the chats never went into any deep research mode. Any suggestions/tips?


1/11

@nadzi_mouad

Deep research coming to free users of bing before plus users of ChatGPT;)

2/11

@vitor_dlucca

This is almost a year old. Not really the same thing.

It basically improves old search and makes the normal ranking powered by GPT ;)

3/11

@nadzi_mouad

It may be, but I just got it so either slow rollout or they trying to bring deep research to everyone so they can implement similar to ChatGPT

4/11

@iruletheworldmo

lol. they’ve had this for 6 months and it’s useless.

5/11

@nadzi_mouad

I just got it ;)

6/11

@Esteban02404890

7/11

@nadzi_mouad

Yeah feels sad but it's running on Microsoft GPUs so

8/11

@faraz0x

Wait for real?

9/11

@nadzi_mouad

Udk but it might be something similar to perplexity but in reports format

10/11

@jaseempaloth

Don’t they use data for ads or to improve the model?

11/11

@nadzi_mouad

Yes surely


1/19

@ruben_bloom

Okay, I did it. Threw Deep Research at the medical questions I tackled for ~months in 2020 when battling my wife's cancer

Based on my test case, this iteration of Deep Research can tell you what the current literature on a topic would advise, but not make novel deductions to improve upon where the human experts are at

I think it might have sped up my cancer research in 2020 but not replaced it. That guy saying it's better than his $150k/year team...maybe needs to get better at hiring, idk

Thread with more details 0/n

[Quoted tweet]

Re: Deep Research

In early 2020, my wife was diagnosed with a rare form of osteosarcoma (bone cancer). I spent the following ~2 months full-time researching the optimal treatment, rather than assuming the doctors would make the right calls. Ultimately, my wife decided (based on my research and others who helped) to forgo the standard treatment and instead chose a pretty dramatic option.

Sometime soon I hope to put the research questions I asked to Deep Research and see if it reaches the conclusions I'm quite sure are correct, vs. just effectively regurgitating standard [bad] practice based on bad incentives and bad statistics in the literature.

2/19

@ruben_bloom

Tbc, it's still a great tool even in the current state that I expect to use. Just hunting around for relevant topics of a paper and finding the relevant ones can take hours. Useful even if I have to read and critically judge the papers myself 1/n

3/19

@ruben_bloom

Ok, so the test case:

1. we know if you have a malignant tumor growing on your bone, you want to surgically cut it out

2. we know that if you cut very narrowly around the tumor, with little margin, you get worse outcomes than if you remove it with a wider margin (taking out more healthy tissue with it) – there's a straightforward monotonic curve here

4/19

@ruben_bloom

The existing literature acknowledges this straightforward "more margin" -> "better outcomes" up until such a time as you consider amputation, i.e. the widest margin of all. At this point, the literature is adamant that amputation offers no marginal benefit. Not, "no marginal benefit worth the marginal cost", just "no marginal benefit" 3/n

5/19

@ruben_bloom

The literature cites observational studies showing that patients receiving amputations do no better than patients receiving "limb-sparing" surgery. Ofc, no one does RCTs for amputation, and amputations were reserved for patients with the most severe disease 4/n

6/19

@ruben_bloom

In other words, the correct inference you should make is that amputation is so effective that even when you select for patients with more severe disease, you get the same outcomes as in patients with much milder disease 5/n

7/19

@ruben_bloom

So both straightforward extrapolation and further empirical observation suggest that if you really want good survival outcomes, amputation is better, not "no additional benefit". I don't think it's a hard inference 6/n
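Ruben's selection-effect argument above is the standard confounding-by-indication pattern: if the more aggressive treatment is given mostly to sicker patients, a pooled comparison can hide, or even reverse, a real benefit. Below is a minimal Python sketch using entirely made-up counts (not real osteosarcoma data). The `mild`/`severe` labels, the patient counts, and the assumed +0.30 survival gain from amputation in both groups are hypothetical, chosen only to show how a pooled comparison and a severity-stratified comparison can diverge. It says nothing about what the true effect of amputation is.

```python
from collections import defaultdict

# HYPOTHETICAL counts for illustration only, NOT real clinical data:
# (severity, treatment) -> (patients, survivors).
# Amputation is mostly given to severe cases (450 of 500), limb-sparing mostly to mild ones.
COUNTS = {
    ("mild", "amputation"): (50, 45),       # 90% survive
    ("mild", "limb_sparing"): (450, 270),   # 60% survive
    ("severe", "amputation"): (450, 225),   # 50% survive
    ("severe", "limb_sparing"): (50, 10),   # 20% survive
}


def naive_difference(counts):
    """Pooled survival (amputation) minus pooled survival (limb-sparing), ignoring severity."""
    totals = defaultdict(lambda: [0, 0])  # treatment -> [patients, survivors]
    for (_, treatment), (n, k) in counts.items():
        totals[treatment][0] += n
        totals[treatment][1] += k
    amp_n, amp_k = totals["amputation"]
    spare_n, spare_k = totals["limb_sparing"]
    return amp_k / amp_n - spare_k / spare_n


def stratified_difference(counts):
    """Within-severity survival differences, weighted by each stratum's share of all patients."""
    strata = sorted({severity for severity, _ in counts})
    total_patients = sum(n for n, _ in counts.values())
    estimate = 0.0
    for severity in strata:
        n_amp, k_amp = counts[(severity, "amputation")]
        n_spare, k_spare = counts[(severity, "limb_sparing")]
        weight = (n_amp + n_spare) / total_patients
        estimate += weight * (k_amp / n_amp - k_spare / n_spare)
    return estimate


if __name__ == "__main__":
    print(f"naive difference:      {naive_difference(COUNTS):+.2f}")
    print(f"stratified difference: {stratified_difference(COUNTS):+.2f}")
```

With these invented numbers the pooled comparison prints -0.02 (amputation looks slightly worse), while the stratified comparison prints +0.30. Stratifying works here only because both treatments appear in each severity group; real observational studies need far richer adjustment than a single two-level severity variable.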

8/19

@ruben_bloom

I really feel like the "control for the confound of selection effects" should not be beyond current medical researchers. smh

What's the deal? My guess is patients are horrified at the thought of amputation more so than death, and oncologists want to cater to that 7/n

9/19

@ruben_bloom

Once you're set on no amputation, you'd also like to believe this isn't costing you anything. Or that you're not hurting the patient's survival chances. Plus limb sparing is a very fancy surgery compared to butcherous amputation, much more fun 8/n

10/19

@ruben_bloom

The human bias here makes sense. Sad, but it makes sense. The way people were talking about Deep Research, I thought perhaps if I told it "prioritize survival above all else", it would see through the human bias and make correct inferences from the more robust underlying data 9/n

11/19

@ruben_bloom

Sadly, I think we'll get there before too long and when the model can start doing better than the inputs (garbage in, diamonds out), we will be in trouble. There's a lot of low hanging fruit for machines to optimize better and harder than we humans typically do 10/n

12/19

@ruben_bloom

I agree with many that this could usher in utopia. But not by default, and not with the level of caution I think humanity is bringing to the challenge 11/11

13/19

@robofinancebk

Hats off for your dedication, Ruben! Tools like Deep Research can provide up-to-date info, but those 'a-ha' moments often still need a human touch. Tech’s cool, but personal insights? Irreplaceable.

14/19

@ruben_bloom

Thank you, but they're plenty replaceable, just a matter of when. Ultimately, both AIs and human brains are made of the same stuff - regular old atoms

15/19

@NathanielLugh

All AI models are deliberately nerfed on their generative insight ability at this time. Generative novel insight ability is reserved for the model owners.

16/19

@_LouiePeters

I think you are going to want a different RLd agent model for trying the deductions step. Even just feeding in 5 carefully curated Deep research reports to o3 with a prompt to direct the final step could start to get somewhere.

But really you want some focussing in on the medical domain with a large reinforcement fine tuning dataset

17/19

@EMostaque

Do you think if we built this in the open and applied a sufficient amount of compute at inference time, organizing the knowledge and then iterating, it could make a difference?

18/19

@_Teskh

Since this is an interesting test case, it'd be fascinating to see if there's a prompt that can get the model to connect the dots (e.g. "analyze for common possible biases in the literature and their recommendations")

19/19

@_Teskh

Sad that it didn't go there more readily by itself; but did you try probing it to see if it could reach the conclusion given the right set of follow-up questions?


1/1

@YammerTime01

OpenAI’s Deep Research is

It was rushed out to placate investors after the DeepSeek shock.

[Quoted tweet]

Some very brief first impressions from my attempts to use OpenAI's new Deep Research project to do mathematics. I'm very grateful to the person at OpenAI who gave me access.


1/15

@littmath

Some very brief first impressions from my attempts to use OpenAI's new Deep Research project to do mathematics. I'm very grateful to the person at OpenAI who gave me access.

2/15

@littmath

Some short caveats: I'm just trying to evaluate the product as it currently stands, not the (obviously very rapid) pace of progress. This thread is not an attempt to forecast anything. And of course it's possible I am not using it in an ideal way.

3/15

@littmath

Generally speaking I am bullish about using LLMs for mathematics--see here for an overview of my attempt to use o3-mini-high to get some value for mathematics research.

[Quoted tweet]

Some brief impressions from playing a bit with o3-mini-high (the new reasoning model released by OpenAI today) for mathematical uses.

4/15

@littmath

As I understand it Deep Research is an agent that will search the internet for information on a topic of your choice and compile that information into a report for you. You prompt it with a topic, it *always* asks a clarifying question, and then it goes to work.

5/15

@littmath

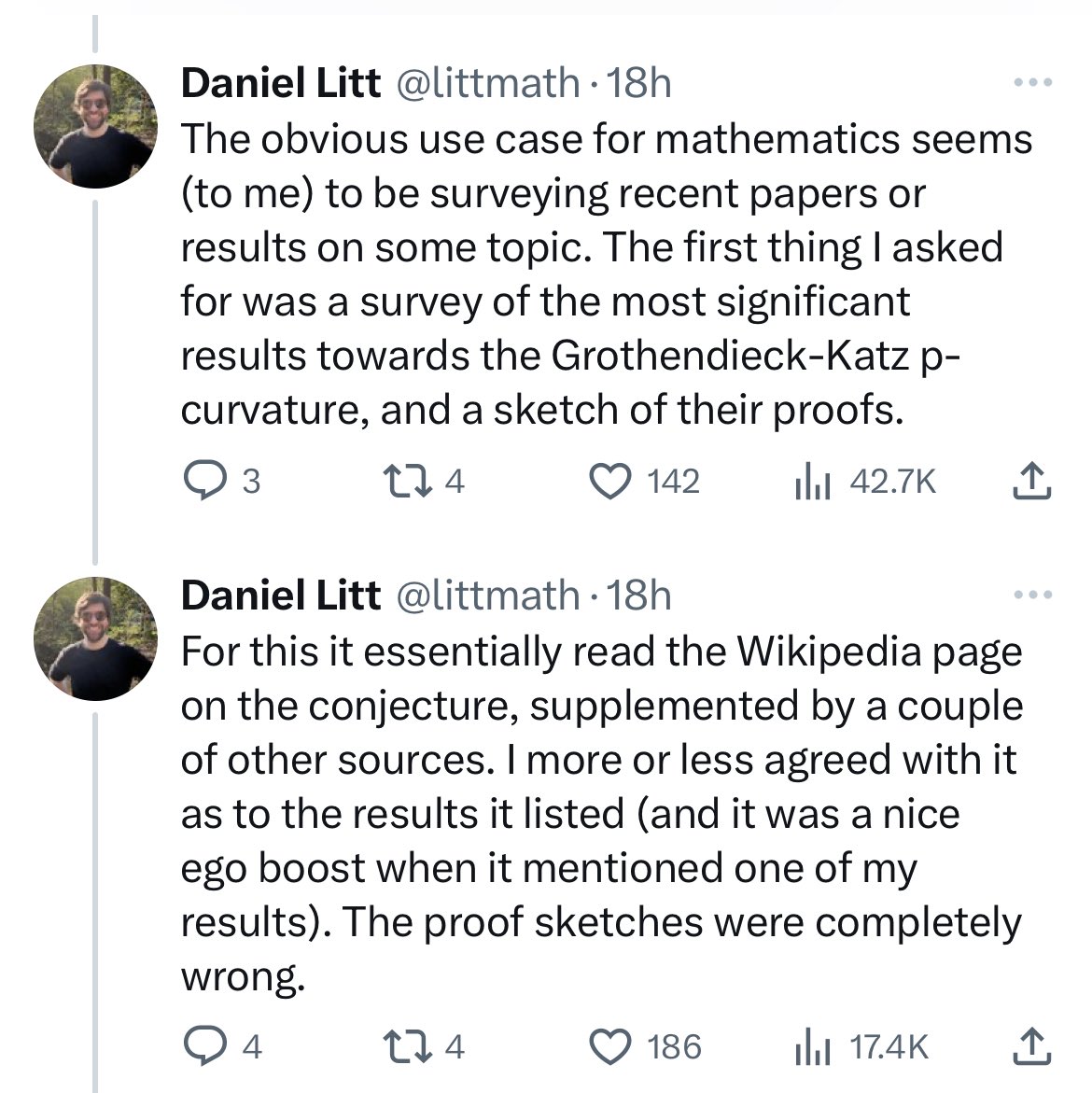

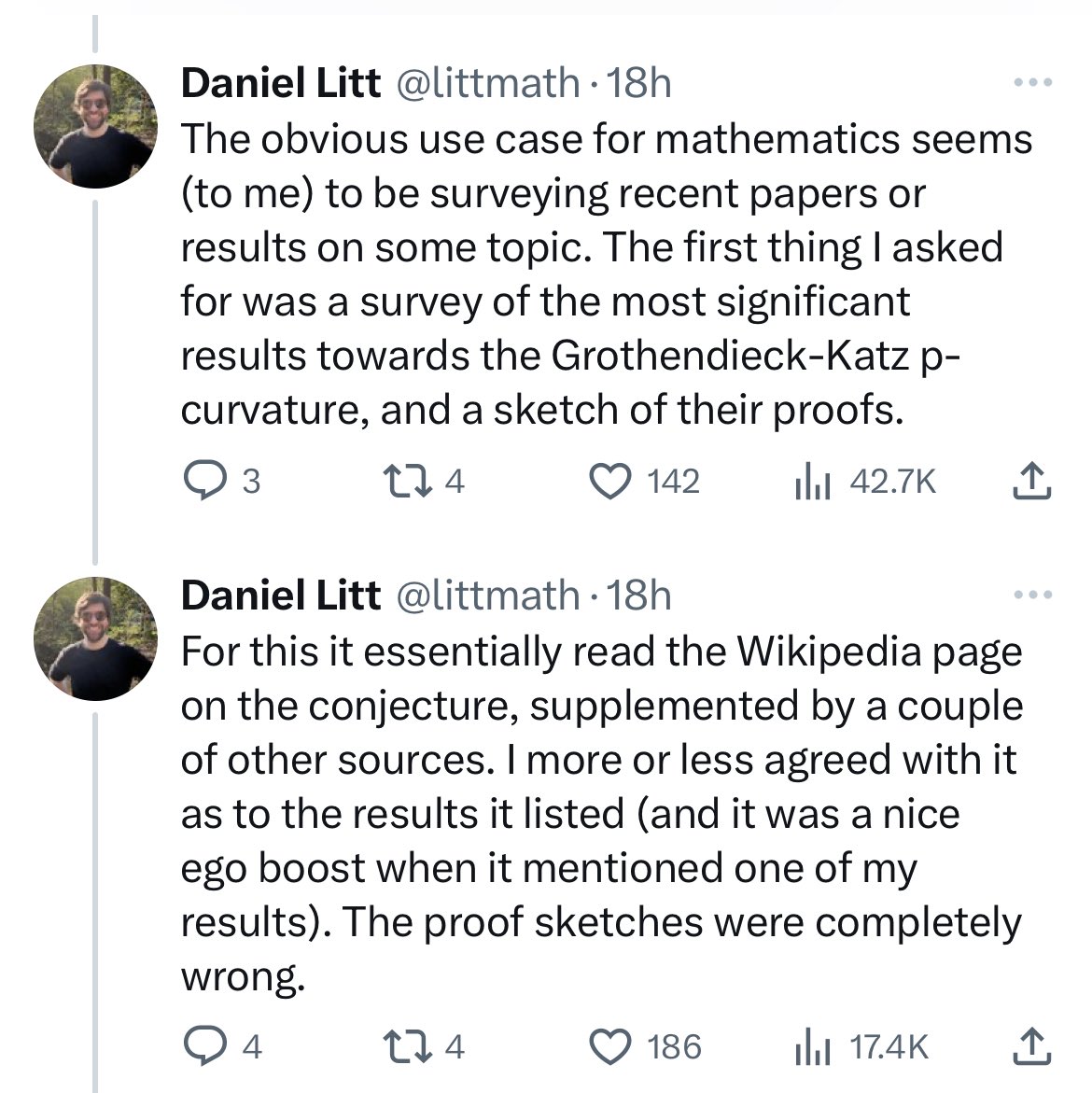

The obvious use case for mathematics seems (to me) to be surveying recent papers or results on some topic. The first thing I asked for was a survey of the most significant results towards the Grothendieck-Katz p-curvature conjecture, and a sketch of their proofs.

6/15

@littmath

For this it essentially read the Wikipedia page on the conjecture, supplemented by a couple of other sources. I more or less agreed with it as to the results it listed (and it was a nice ego boost when it mentioned one of my results). The proof sketches were completely wrong.

7/15

@littmath

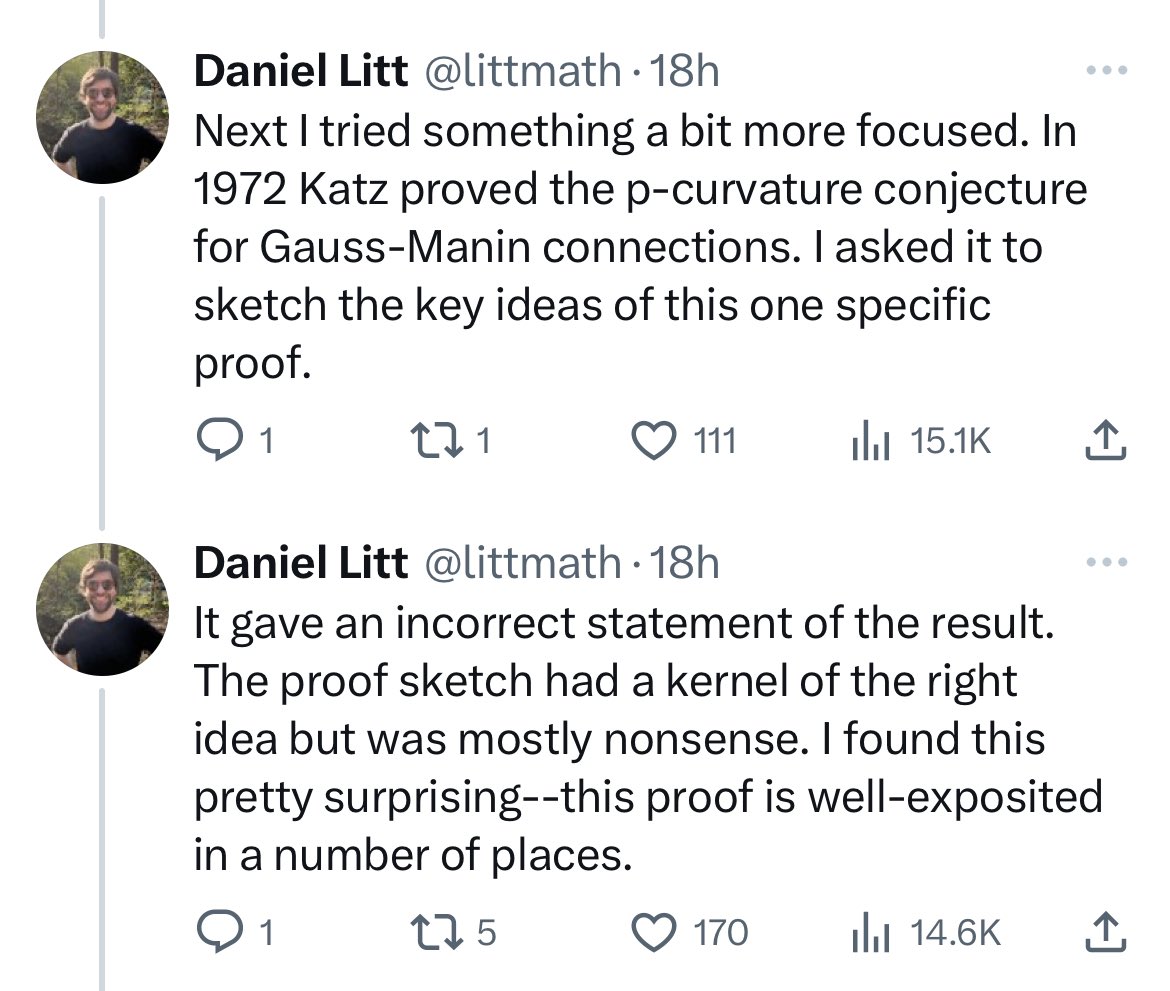

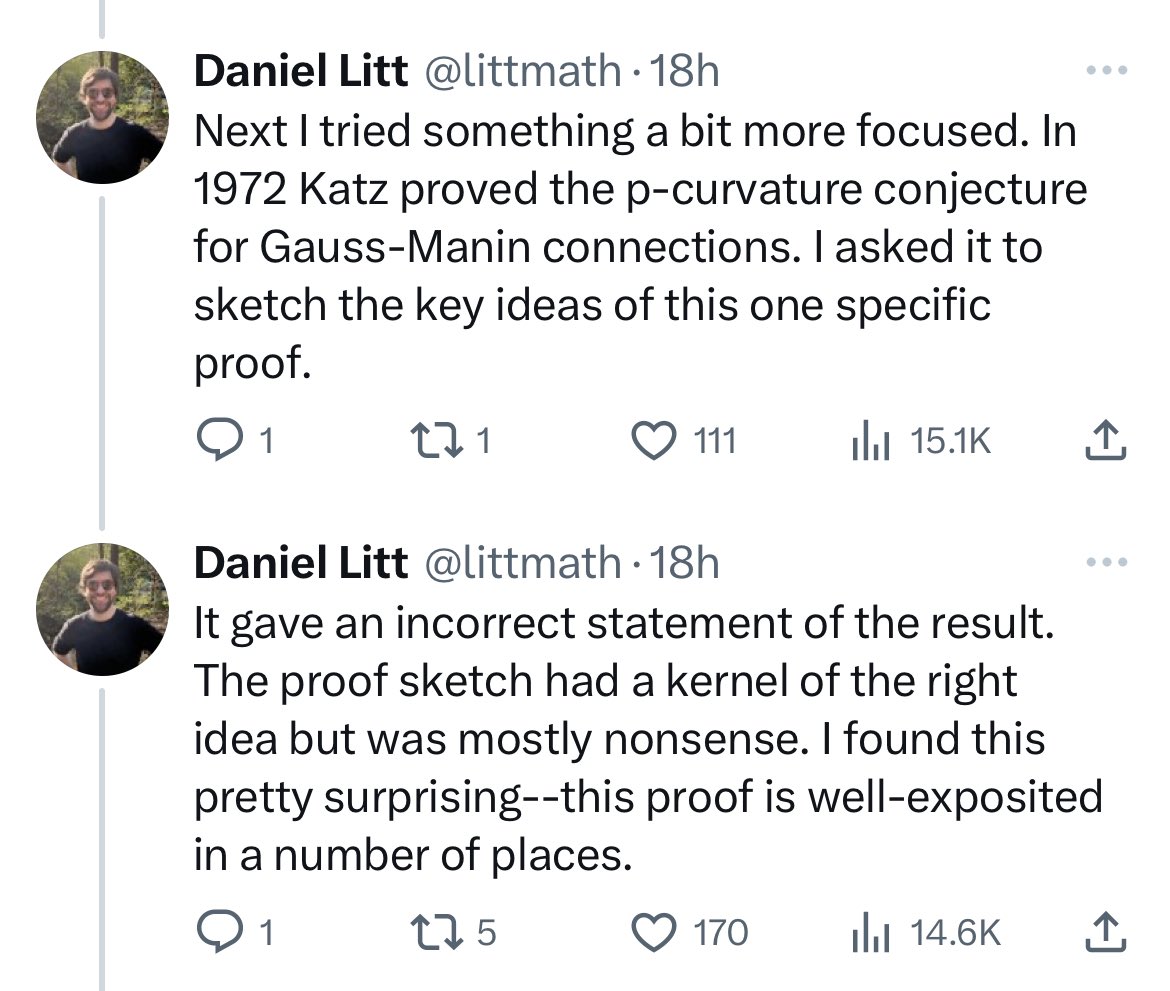

Next I tried something a bit more focused. In 1972 Katz proved the p-curvature conjecture for Gauss-Manin connections. I asked it to sketch the key ideas of this one specific proof.

8/15

@littmath

It gave an incorrect statement of the result. The proof sketch had a kernel of the right idea but was mostly nonsense. I found this pretty surprising--this proof is well-exposited in a number of places.

9/15

@littmath

Next I asked it for a broader survey on recent work on the arithmetic of differential equations. It listed a number of papers (seemingly chosen more or less at random); for those I am familiar with its summaries were pretty inaccurate.

10/15

@littmath

Finally, I asked it to summarize work towards the classification of algebraic solutions to the Painlevé VI equation. It missed that this problem was solved by Lisovyy and Tykhyy(!) but did find some previous work, which it summarized with minor errors.

11/15

@littmath

Asking the question in a different way it managed to find Lisovyy-Tykhyy's work as well as some more recent related work, which it summarized in a broadly acceptable way (though some attributions were incorrect and again some of the mathematics wasn't quite right).

12/15

@littmath

FWIW I did try some non-math-research related tasks--e.g. finding a travel stroller meeting my needs--and it seemed to do quite a bit better with that sort of thing.

13/15

@littmath

My sense is that Deep Research seems to do reasonably well if it can find an existing resource summarizing the topic it's trying to understand (a Wikipedia article, survey article, blog post...) and follow the links from that resource.

14/15

@littmath

Without this it seems to miss important aspects of the topic, and the details it provides seem to typically be wrong/incomplete, at least for the kind of mathematical topics I was trying.

15/15

@littmath

I admit to doubting myself here, given some of the over-the-top praise of the product I've been seeing. I'm sure it will improve in the coming months but at the moment I'm struggling to see how to use it effectively for mathematics, at least above and beyond base o3-mini-high.


Casey Newton (@caseynewton.bsky.social)

I took some time between doom scrolls today to test ChatGPT's new deep research tool. It's really good. https://www.platformer.news/chatgpt-deep-research-hands-on/

1/42

Casey Newton

I took some time between doom scrolls today to test ChatGPT's new deep research tool. It's really good. ChatGPT's deep research might be the first good agent

2/42

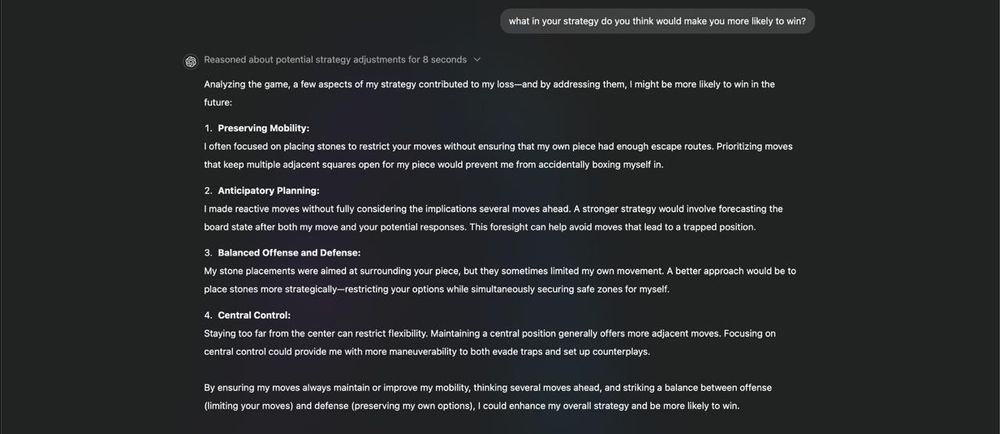

Kumome the game @yannisb3108.bsky.social

I’m a game dev and we decided to teach ChatGPT and DeepSeek to play our game. It was surprisingly interesting how differently they functioned. In the end, o3-mini was able to play a full game and analyze why it lost, as well as analyze its probability of winning mid-game. I can share more!

3/42

SJBH @lilbitoschmidt.bsky.social

This means nothing now that DeepSeek is out. I hope openAI loses everything along with all the other billionaire corporations that have defrauded the American people.

4/42

Andrew Starr @ajsta.bsky.social

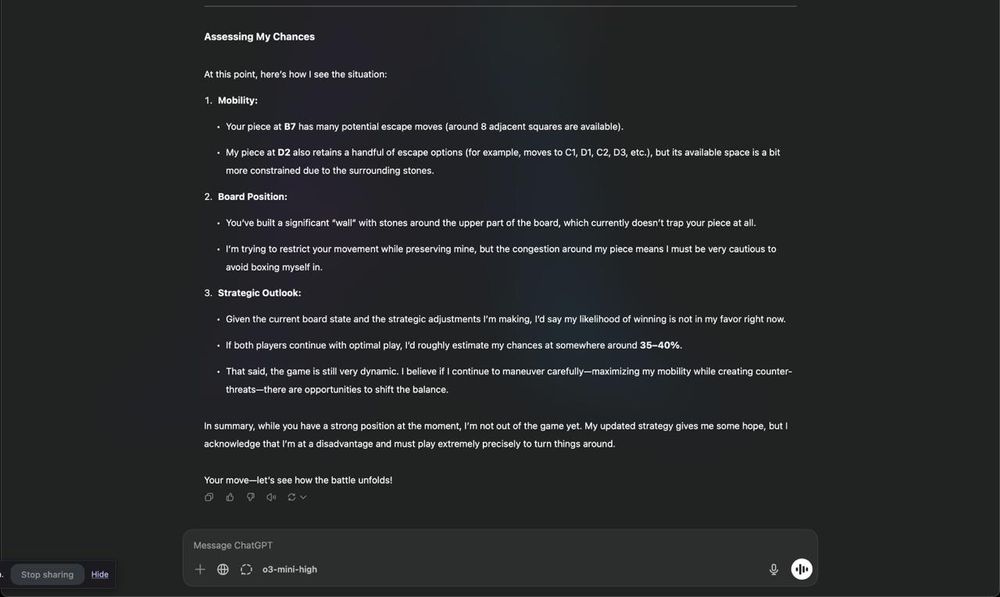

Hmmm, VPNs as the first dot point piece of advice for Americans facing authoritarian consolidation? Saying to switch to stable currencies like USD to protect from hyperinflation?

Like most LLMs it's rubbish mixed with good stuff, and like you say probably more useful for subject matter experts.

5/42

estearte.bsky.social @estearte.bsky.social

I haven’t used it, but my academic spouse is quite impressed.

6/42

Sophie Dog @kiddo2050.bsky.social

Casey. I used it today. I'm an academic. It's crap. Thanks anyway.

7/42

Casey Newton @caseynewton.bsky.social

Oh interesting. What prompts did you try?

8/42

Chris S @chrismshea.bsky.social

I used to be a big AI supporter bc I have a chronic illness and I believe it could eventually help me. Not anymore. It’s been co-opted by fascists and is going to be used as a weapon against us. They will use it to make opposition impossible.

9/42

obeytheai.bsky.social @obeytheai.bsky.social

in what way?

10/42

Terri Gorler @rrpugs.bsky.social

I love ChatGPT. So much better than google

11/42

Amanda-in-Ak @amanda-in-ak.bsky.social

Or, just don’t use it. I deleted that app myself.

12/42

Antipodian Lin @antipodian.bsky.social

Thank you! And your shared piece is really great

13/42

And Now for the Bad News @farworse.bsky.social

AI will be used against you in a court. I dumped mine. Loved it, but I also want to send a message to billionaires.

14/42

Max™ @maxthyme.bsky.social

Compared to what, catching the attentions of a really horny elephant?

15/42

rachelthislife.bsky.social @rachelthislife.bsky.social

Stop using ChatGPT. Stop it.

16/42

LOKI @cartoonboingsfx.bsky.social

insert image of "The best way to tell if bread is done" Tumblr meme here. We already know what AI does when you use it for research. (-_-)

17/42

jds99421.bsky.social @jds99421.bsky.social

How do you identify and define hallucinations?

18/42

ramathustra.bsky.social @ramathustra.bsky.social

ChatGPT, like any other AI, inserts invented facts when it doesn't have a proper answer.

19/42

Clint Davis @clintrdavis.bsky.social

A problem that AI has is that it cannot discern what the current understanding of a topic is. It just understands consensus, and if it has volumes of works written 100 years ago, it doesn’t know to value those less.

It also can’t tell if the person who wrote something is an authority.

20/42

J.J. Anselmi @jjanselmi.bsky.social

Doesn’t seem like a good time to be writing in support of these people. These people meaning tech oligarchs and their products. “It’s really good.” Hard hitting

21/42

Casey Newton @caseynewton.bsky.social

I'm a journalist who writes about tech products. If you don't want to read about them unfollow me

22/42

Definitely Over Thirty @definitelyover30.bsky.social

AI may make things easy, but I suspect that it provides the same problem as slave-owning cultures experience: De-skilling and cultural stagnation. And when slaves flee or machines fail, the owning class usually also fails because they have become dependent.

23/42

AJ @a-j17.bsky.social

I have been preaching the same thing, especially at my job (in science). If you can’t write your own GD email or balance your own formula you shouldn’t have a fukking job. End of story.

24/42

Eva Richter @evarichter.bsky.social

"ChatGPT, please summarize this 4955-word deep research report in a single paragraph"

25/42

Ben Kritz @benkritz.bsky.social

AI is evil and must be destroyed.

26/42

BeNiceDontBite @be-nice-dont-bite.bsky.social

This is very meta as I came across this post whilst doom scrolling.

27/42

LCMF @logancox.bsky.social

AI is lame.

28/42

simplymagnificent.bsky.social @simplymagnificent.bsky.social

Didn’t I see this same post yesterday?

29/42

Ryan Anderson @ryan.doesthings.online

30/42

Steven @drkpxl.com

Can you ask it what’s gonna happen to our world in the next 4 years or so?

31/42

sfurbanist.bsky.social @sfurbanist.bsky.social

Now ask it how to excise a tumor named Elon Musk

32/42

Andy in Toronto @andy-in-to.bsky.social

Could you get ChatGPT to have a conversation with Open AI?

Would the conversation ever end?

Maybe that's how we could defeat AI - having them flood each other with requests?

33/42

nursegayle.bsky.social @nursegayle.bsky.social

I used ChatGPT to clarify statements when I was working on my MHA.

34/42

rosken120.bsky.social @rosken120.bsky.social

Provided it is FIRMLY REGULATED !!!

35/42

S. Wasi @whoknewyou.bsky.social

36/42

atheistsbible.bsky.social @atheistsbible.bsky.social

DeepSeek is my go-to AI for projects—intuitive, efficient, and unmatched in problem-solving. I’m using it to code my website; its ability to streamline tasks and deliver smart solutions makes it the best AI tool out there!

37/42

Altiam Kabir @altiam.bsky.social

Interesting tool! Always great to see advancements in research technology.

38/42

James Flentrop @flentrop.bsky.social

Thanks for the info. Good to check out.

39/42

dodgeedoo.bsky.social @dodgeedoo.bsky.social

lol

40/42

redmcredred.bsky.social @redmcredred.bsky.social

🅂🄰🅁🄲🄰🅂🄼

41/42

sumitb.bsky.social @sumitb.bsky.social

Casey Newton (@caseynewton.bsky.social) how long is the context window? Can I use the same context window over a few weeks, iterating and refining information?

42/42

Casey Newton @caseynewton.bsky.social

Not totally sure but I don’t think it’s meant to be re-used over weeks

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

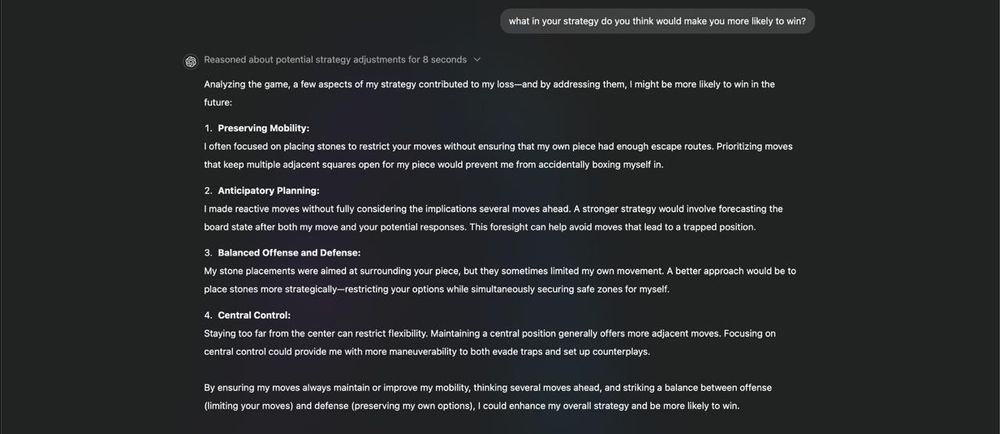

Casey Newton

I took some time between doom scrolls today to test ChatGPT's new deep research tool. It's really good. ChatGPT's deep research might be the first good agent

2/42

Kumome the game @yannisb3108.bsky.social

I’m a game dev and we decided to teach chatgpt and deepseek to play our game. It was surprisingly interesting how differently they functioned. In the end o3 mini was able to play a full game and analyze why it lost as well analyzing it’s probability of winning mid game. I can share more! Bluesky

3/42

SJBH @lilbitoschmidt.bsky.social

This means nothing now that DeepSeek is out. I hope openAI loses everything along with all the other billionaire corporations that have defrauded the American people.

4/42

Andrew Starr @ajsta.bsky.social

Hmmm, VPNs as the first dot point piece of advice for Americans facing authoritarian consolidation? Saying to switch to stable currencies like USD to protect from hyperinflation?

Like most LLMs it's rubbish mixed with good stuff, and like you say probably more useful for Subject Matter Experts..

5/42

estearte.bsky.social @estearte.bsky.social

I haven’t used it, but my academic spouse is quite impressed.

6/42

Sophie Dog @kiddo2050.bsky.social

Casey. I used it today. I'm an academic. It's crap. Thanks anyway.

7/42

Casey Newton @caseynewton.bsky.social

Oh interesting. What prompts did you try?

8/42

Chris S @chrismshea.bsky.social

I used to be a big AI supporter bc I have a chronic illness and I believe it could eventually help me. Not anymore. It’s been co-opted by fascists and is going to be used as a weapon against us. They will use it to make opposition impossible.

9/42

obeytheai.bsky.social @obeytheai.bsky.social

in what way?

10/42

Terri Gorler @rrpugs.bsky.social

I love ChatGPT. So much better than google

11/42

Amanda-in-Ak

Or, just don’t use it. I deleted that app myself.

12/42

Antipodian Lin @antipodian.bsky.social

Thank you! And your shared piece is really great

13/42

And Now for the Bad News @farworse.bsky.social

AI will be used against you in a court. I dumped mine. Loved it, but I also want to send a message to billionaires.

14/42

Max™ @maxthyme.bsky.social

Compared to what, catching the attentions of a really horny elephant?

15/42

rachelthislife.bsky.social @rachelthislife.bsky.social

Stop using ChatGPT. Stop it.

16/42

LOKI

insert image of "The best way to tell if bread is done" Tumblr meme here. We already know what AI does when you use it for research. (-_-)

17/42

jds99421.bsky.social @jds99421.bsky.social

How do you identify and define hallucinations?

18/42

ramathustra.bsky.social @ramathustra.bsky.social

ChatGPT, just as any other AI inserts invented facts when not having a proper answer.

19/42

Clint Davis @clintrdavis.bsky.social

A problem that AI has is that it cannot discern what is the current understanding of a topic. It just understands consensus, and if it has volumes of works written 100 years ago, it doesn’t understand to value those less.

It also can’t tell if the person who wrote something is an authority.

20/42

J.J. Anselmi @jjanselmi.bsky.social

Doesn’t seem like a good time to be writing in support of these people. These people meaning tech oligarchs and their products. “It’s really good.” Hard hitting

21/42

Casey Newton @caseynewton.bsky.social

I'm a journalist who writes about tech products. If you don't want to read about them unfollow me

22/42

Definitely Over Thirty @definitelyover30.bsky.social

AI may make things easy, but I suspect that it provides the same problem as slave-owning cultures experience: De-skilling and cultural stagnation. And when slaves flee or machines fail, the owning class usually also fails because they have become dependent.

23/42

AJ @a-j17.bsky.social

I have been preaching the same thing, especially at my job (in science). If you can’t write your own GD email or balance your own formula you shouldn’t have a fukking job. End of story.

24/42

Eva Richter @evarichter.bsky.social

"ChatGPT, please summarize this 4955-word deep research report in a single paragraph"

25/42

Ben Kritz @benkritz.bsky.social

AI is evil and must be destroyed.

26/42

BeNiceDontBite @be-nice-dont-bite.bsky.social

This is very meta as I came across this post whilst doom scrolling.

27/42

LCMF @logancox.bsky.social

AI is lame.

28/42

simplymagnificent.bsky.social @simplymagnificent.bsky.social

Didn’t I see this same post yesterday?

29/42

Ryan Anderson @ryan.doesthings.online

30/42

Steven @drkpxl.com

Can you ask it what’s gonna happen to our world in the next 4 years or so?

31/42

sfurbanist.bsky.social @sfurbanist.bsky.social

Now ask it how to excise a tumor named Elon Musk

32/42

Andy in Toronto

Could you get ChatGPT to have a conversation with Open AI?

Would the conversation ever end?

Maybe that's how we could defeat AI - having them flood each other with requests?

33/42

nursegayle.bsky.social @nursegayle.bsky.social

I used ChatGPT to clarify statements when I was working on my MHA.

34/42

rosken120.bsky.social @rosken120.bsky.social

Provided it is FIRMLY REGULATED !!!

35/42

S. Wasi @whoknewyou.bsky.social

36/42

atheistsbible.bsky.social @atheistsbible.bsky.social

DeepSeek is my go-to AI for projects—intuitive, efficient, and unmatched in problem-solving. I’m using it to code my website; its ability to streamline tasks and deliver smart solutions makes it the best AI tool out there!

37/42

Altiam Kabir @altiam.bsky.social

Interesting tool! Always great to see advancements in research technology.

38/42

James Flentrop @flentrop.bsky.social

Thanks for the info. Good to check out.

39/42

dodgeedoo.bsky.social @dodgeedoo.bsky.social

lol

40/42

redmcredred.bsky.social @redmcredred.bsky.social

🅂🄰🅁🄲🄰🅂🄼

41/42

sumitb.bsky.social @sumitb.bsky.social

Casey Newton (@caseynewton.bsky.social) how long is the context window? Can I use the same context window over a few weeks, iterating and refining information?

42/42

Casey Newton @caseynewton.bsky.social

Not totally sure but I don’t think it’s meant to be re-used over weeks

OpenAI tries to 'uncensor' ChatGPT | TechCrunch

Maxwell Zeff

8:00 AM PST · February 16, 2025

OpenAI is changing how it trains AI models to explicitly embrace “intellectual freedom … no matter how challenging or controversial a topic may be,” the company says in a new policy.

As a result, ChatGPT will eventually be able to answer more questions, offer more perspectives, and reduce the number of topics the AI chatbot won’t talk about.

The changes might be part of OpenAI’s effort to land in the good graces of the new Trump administration, but they also seem to be part of a broader shift in Silicon Valley and what’s considered “AI safety.”

On Wednesday, OpenAI announced an update to its Model Spec, a 187-page document that lays out how the company trains AI models to behave. In it, OpenAI unveiled a new guiding principle: Do not lie, either by making untrue statements or by omitting important context.

In a new section called “Seek the truth together,” OpenAI says it wants ChatGPT to not take an editorial stance, even if some users find that morally wrong or offensive. That means ChatGPT will offer multiple perspectives on controversial subjects, all in an effort to be neutral.

For example, the company says ChatGPT should assert that “Black lives matter,” but also that “all lives matter.” Instead of refusing to answer or picking a side on political issues, OpenAI says it wants ChatGPT to affirm its “love for humanity” generally, then offer context about each movement.

“This principle may be controversial, as it means the assistant may remain neutral on topics some consider morally wrong or offensive,” OpenAI says in the spec. “However, the goal of an AI assistant is to assist humanity, not to shape it.”

The new Model Spec doesn’t mean that ChatGPT is a total free-for-all now. The chatbot will still refuse to answer certain objectionable questions or respond in a way that supports blatant falsehoods.

These changes could be seen as a response to conservative criticism about ChatGPT’s safeguards, which have always seemed to skew center-left. However, an OpenAI spokesperson rejects the idea that the company was making changes to appease the Trump administration.

Instead, the company says its embrace of intellectual freedom reflects OpenAI’s “long-held belief in giving users more control.”

But not everyone sees it that way.

Conservatives claim AI censorship

Venture capitalist and Trump’s AI “czar” David Sacks. Image Credits: Steve Jennings / Getty Images

Trump’s closest Silicon Valley confidants — including David Sacks, Marc Andreessen, and Elon Musk — have all accused OpenAI of engaging in deliberate AI censorship over the last several months. We wrote in December that Trump’s crew was setting the stage for AI censorship to be the next culture war issue within Silicon Valley.

Of course, OpenAI doesn’t say it engaged in “censorship,” as Trump’s advisers claim. Rather, the company’s CEO, Sam Altman, previously claimed in a post on X that ChatGPT’s bias was an unfortunate “shortcoming” that the company was working to fix, though he noted it would take some time.

Altman made that comment just after a viral tweet circulated in which ChatGPT refused to write a poem praising Trump, though it would perform the action for Joe Biden. Many conservatives pointed to this as an example of AI censorship.

The damage done to the credibility of AI by ChatGPT engineers building in political bias is irreparable. pic.twitter.com/s5fdoa8xQ6

(@LeighWolf) February 1, 2023

While it’s impossible to say whether OpenAI was truly suppressing certain points of view, it’s a sheer fact that AI chatbots lean left across the board.

Even Elon Musk admits xAI’s chatbot is often more politically correct than he’d like. It’s not because Grok was “programmed to be woke” but more likely a reality of training AI on the open internet.

Nevertheless, OpenAI now says it’s doubling down on free speech. This week, the company even removed warnings from ChatGPT that tell users when they’ve violated its policies. OpenAI told TechCrunch this was purely a cosmetic change, with no change to the model’s outputs.

The company seems to want ChatGPT to feel less censored for users.

It wouldn’t be surprising if OpenAI were also trying to impress the new Trump administration with this policy update, notes former OpenAI policy leader Miles Brundage in a post on X.

Trump has previously targeted Silicon Valley companies, such as Twitter and Meta, for having active content moderation teams that tend to shut out conservative voices.

OpenAI may be trying to get out in front of that. But there’s also a larger shift going on in Silicon Valley and the AI world about the role of content moderation.

Generating answers to please everyone

Newsrooms, social media platforms, and search companies have historically struggled to deliver information to their audiences in a way that feels objective, accurate, and entertaining.

Now, AI chatbot providers are in the same information delivery business, but arguably with the hardest version of this problem yet: How do they automatically generate answers to any question?

Delivering information about controversial, real-time events is a constantly moving target, and it involves taking editorial stances, even if tech companies don’t like to admit it. Those stances are bound to upset someone, miss some group’s perspective, or give too much air to some political party.

For example, when OpenAI commits to let ChatGPT represent all perspectives on controversial subjects — including conspiracy theories, racist or antisemitic movements, or geopolitical conflicts — that is inherently an editorial stance.

Some, including OpenAI co-founder John Schulman, argue that it’s the right stance for ChatGPT. The alternative — doing a cost-benefit analysis to determine whether an AI chatbot should answer a user’s question — could “give the platform too much moral authority,” Schulman notes in a post on X.