1/1

@permaximum88

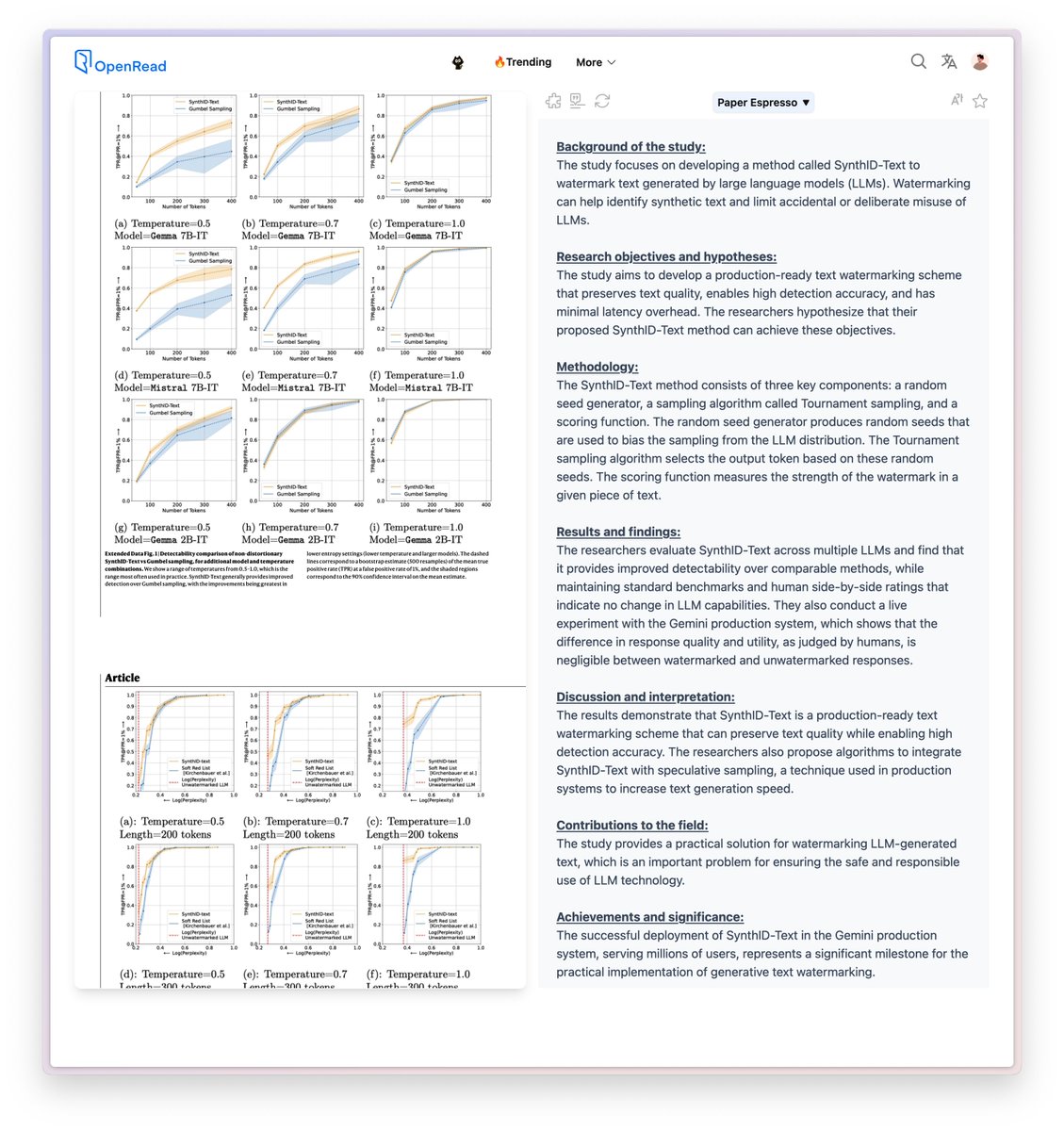

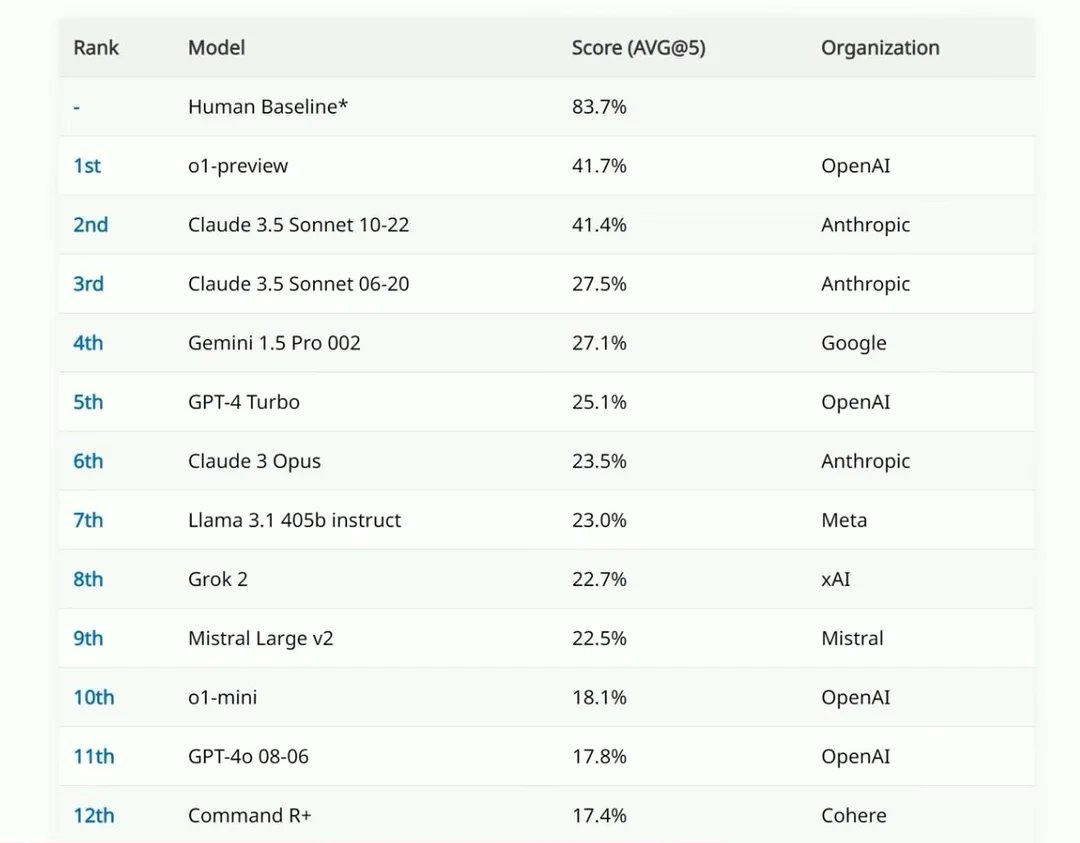

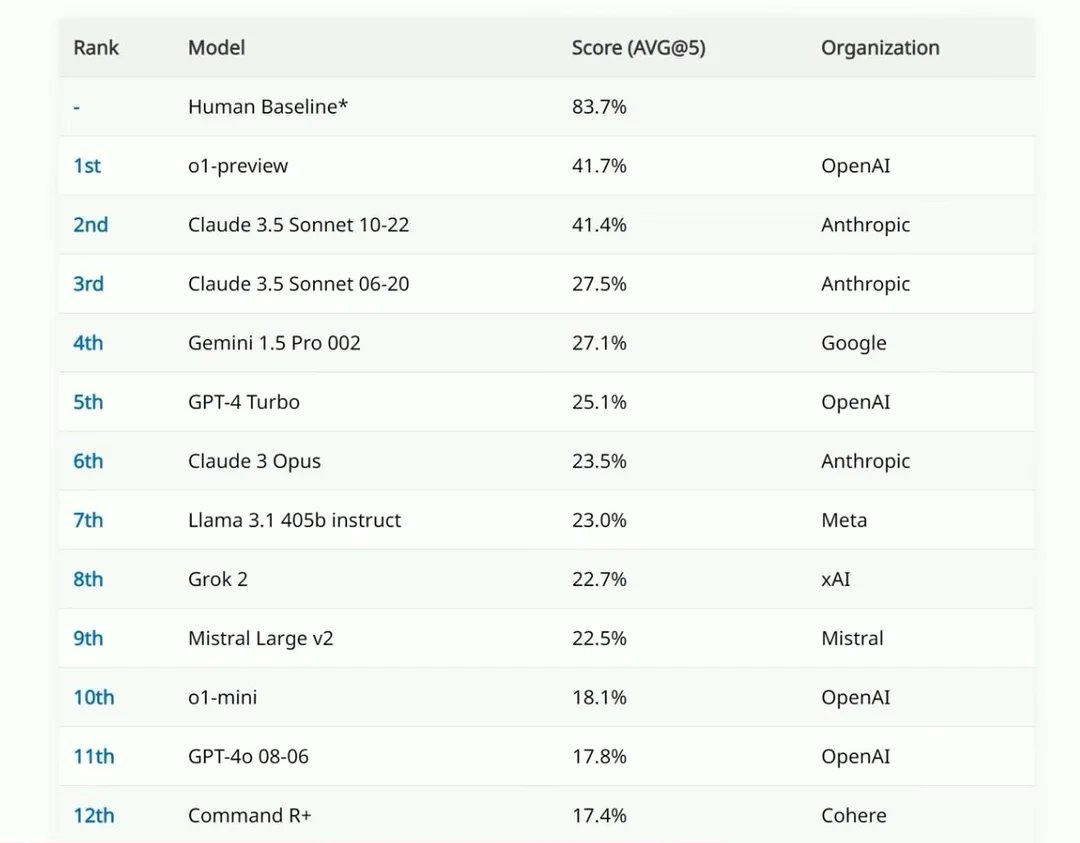

Claude 3.5 Sonnet matches o1-preview's performance on the reasoning benchmark I trust most, Simple-Bench. And it achieves that score 150 times faster and cheaper than o1-preview. Good job

@AnthropicAI

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/31

@deedydas

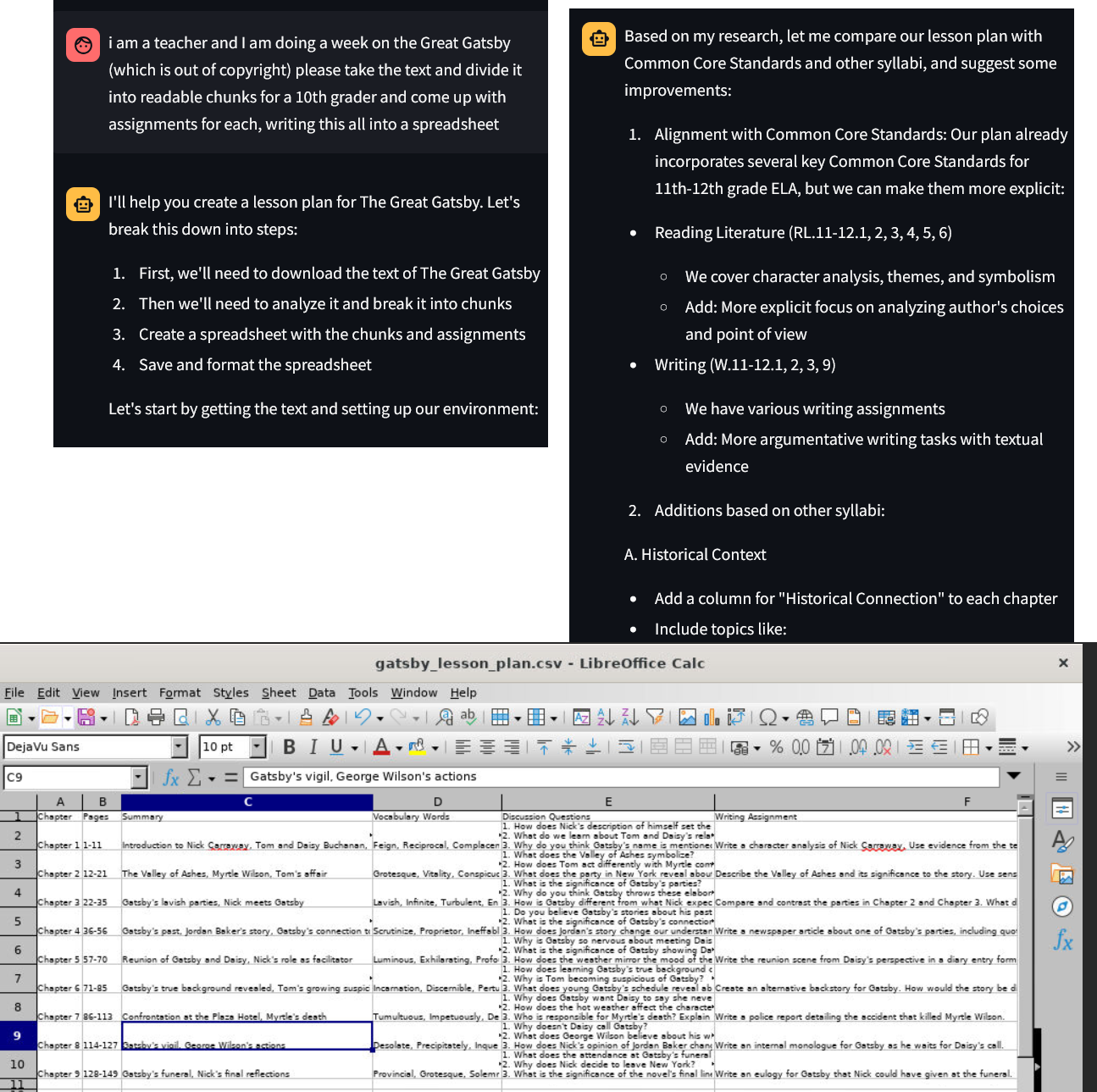

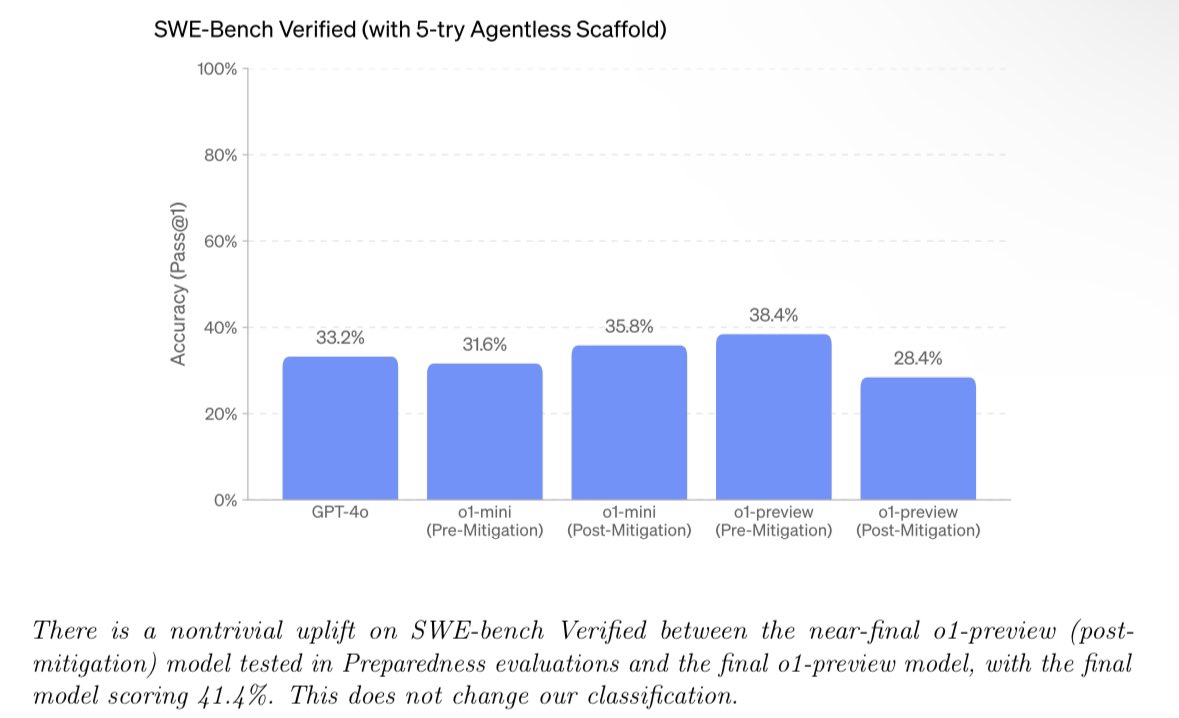

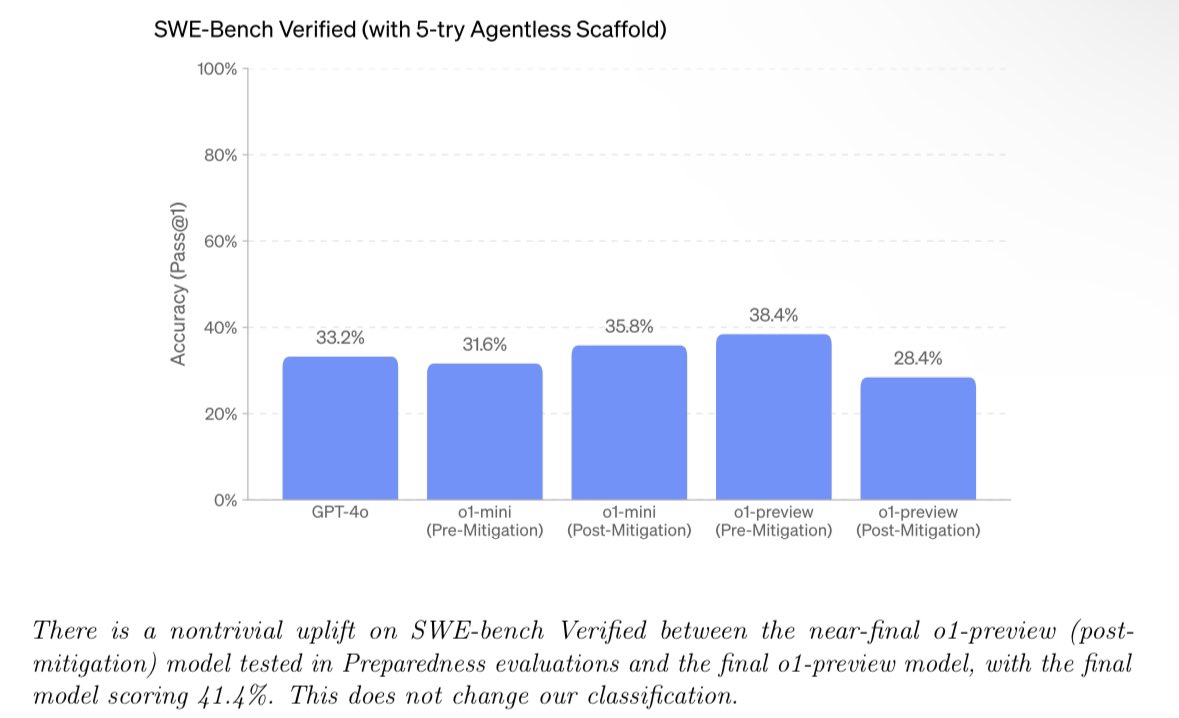

The new Claude 3.5 Sonnet scores 49% on SWE-Bench Verified, the hardest coding benchmark based on real GitHub issues.

Cosine Genie was 43.8%

o1-preview was 41.4%

o1-mini was 35.8%

Claude is the undisputed king of all models at writing code.

2/31

@Tom62589172

Where's Devin?

3/31

@deedydas

14% on SWE-Bench

4/31

@AntDX316

5/31

@manuhortet

this benchmark doesn't say much anymore

we are seeing all real life ai-for-code applications focusing on enforcing rules, strats on prompt and context building

o1 may still work better in stricter systems focused on providing more info

3.5-new won my vibe benchmark tho

6/31

@adridder

Code mastery. Impressive feat. Curious minds ponder next frontiers though.

7/31

@theDataDork

I’ve been using Claude since it released the new model. It has clearly improved a lot when it comes to coding

8/31

@eliluong

is there a difference between how well the free vs paid Claude performs on a reasonable length input?

9/31

@Evinst3in

coding is becoming easy

10/31

@dmsimon

The number of syntax errors Claude makes while generating basic HTML/CSS is ridiculous.

23 regens by Claude.

Put the same request into Llama 3.2 3B and it nailed it in one try, running on one 3090 Ti.

11/31

@supremebeme

yeah im ngl it's either on par or better than o1 preview, and no limits i don't think

12/31

@ntkris

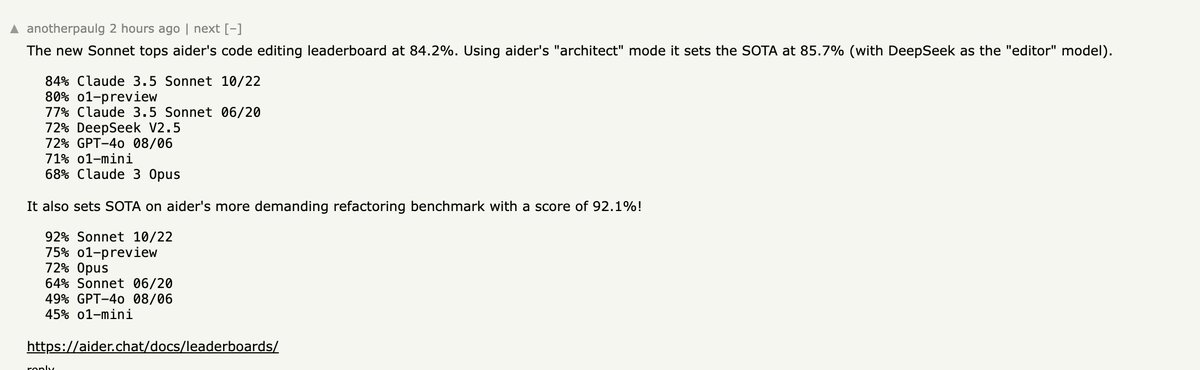

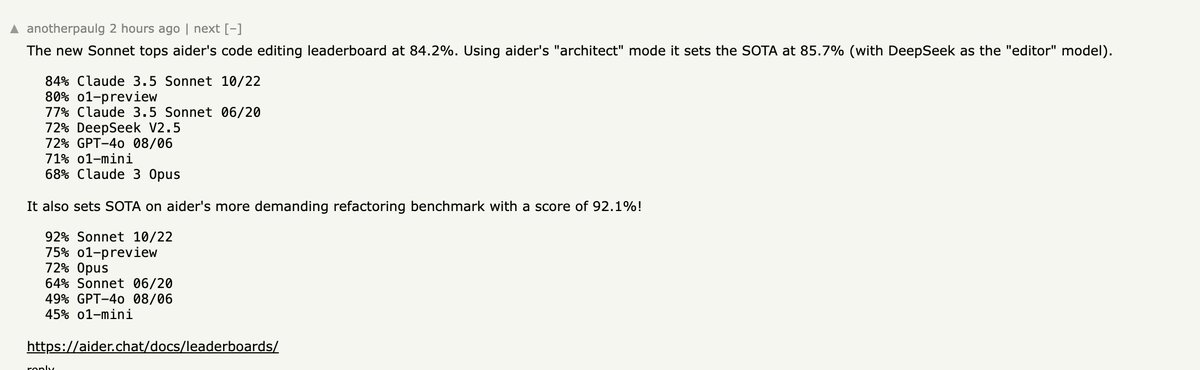

Another interesting datapoint from Hacker News to back this up:

13/31

@SaasJunctionHQ

Sonnet: The King of Code

GPT o1: The Versatile Contender

Mistral Codestral: The Clean Code Specialist

DeepSeek-Coder-V2: The Emerging Talent

14/31

@skorfmann

I personally perceived it as worse than the last sonnet 3.5 version using Cursor. Broken and incoherent text / code snippets all over the place. I’ve been told that there’s a similar experience in Artifacts

15/31

@JTL87i

first impression feels worse than before

o1-mini still killing it

16/31

@TiggerSharkML

impressive

17/31

@s_noronha

Agree. Claude is current king, then Llama, Gemini. GPT is not great

18/31

@NarenNallapa

That is actually very impressive!

19/31

@ReadFuturist

I'm happy to give Claude my screen usage of how I play @KenshiOfficial - it's a brutal game to pretend you're a person.

20/31

@buzzedison

Facts only

21/31

@victor_explore

looks like claude is quietly coding its way to the top. not just winning, but solving real-world problems

22/31

@dikksonPau

Is this enough to push OpenAI to launch 4.5???

23/31

@morew4rd

people still trust public evals?

24/31

@hadikhantech

o1-mini for analysis and design.

Sonnet 3.5 for implementation.

25/31

@YorkTheWest

Source?

26/31

@firasd

I notice that Claude is also good at layouts etc

Like it made this weather view just based on some json

[Quoted tweet]

Pasted some weather json and it made this ..

I said: “Make an html page with query that shows this data for viewing, exploring and editing. Also first make a div that contains your understanding of what the app displays and what each field you're going to show represents”

27/31

@fofices_

I’ve something great about your project, let’s discuss in DM

28/31

@arpit_sidana

Do humans have a comparative score?

29/31

@alvarocha2

It's really good. Besides the benchmarks, we see our users moving more and more to Claude for day-to-day coding.

30/31

@Crypto_Briefing

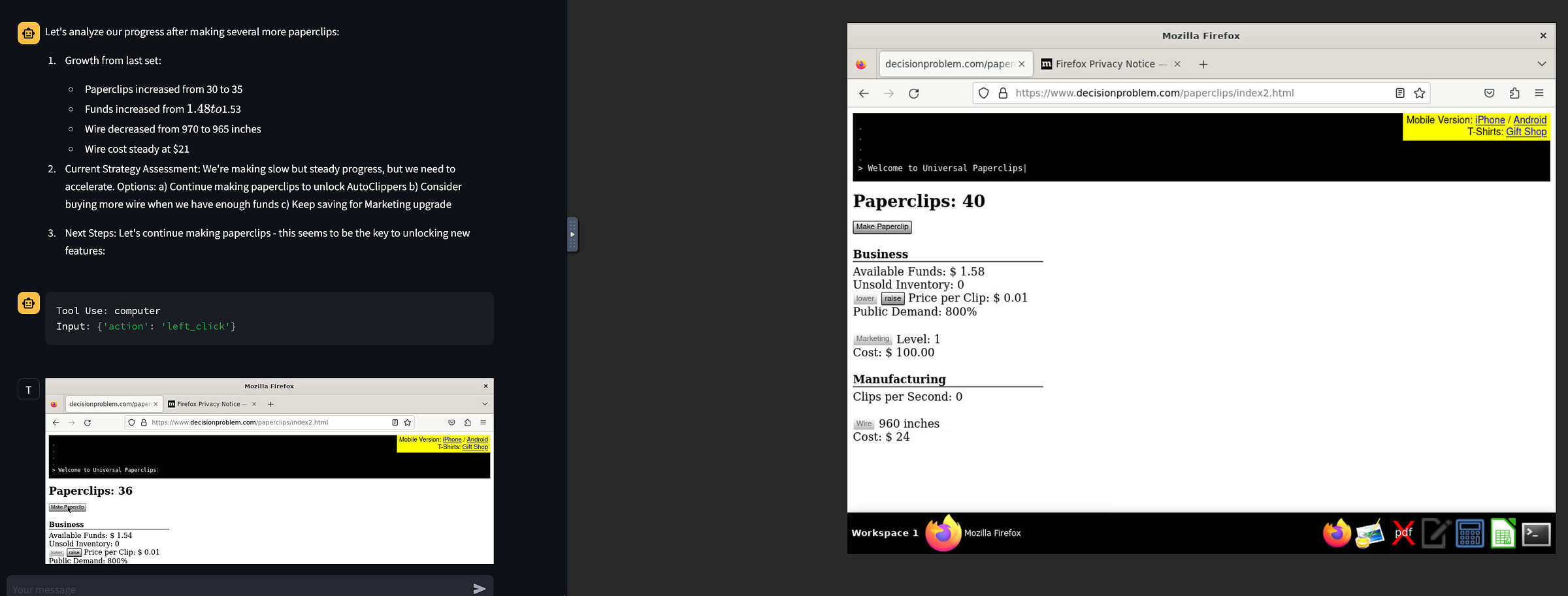

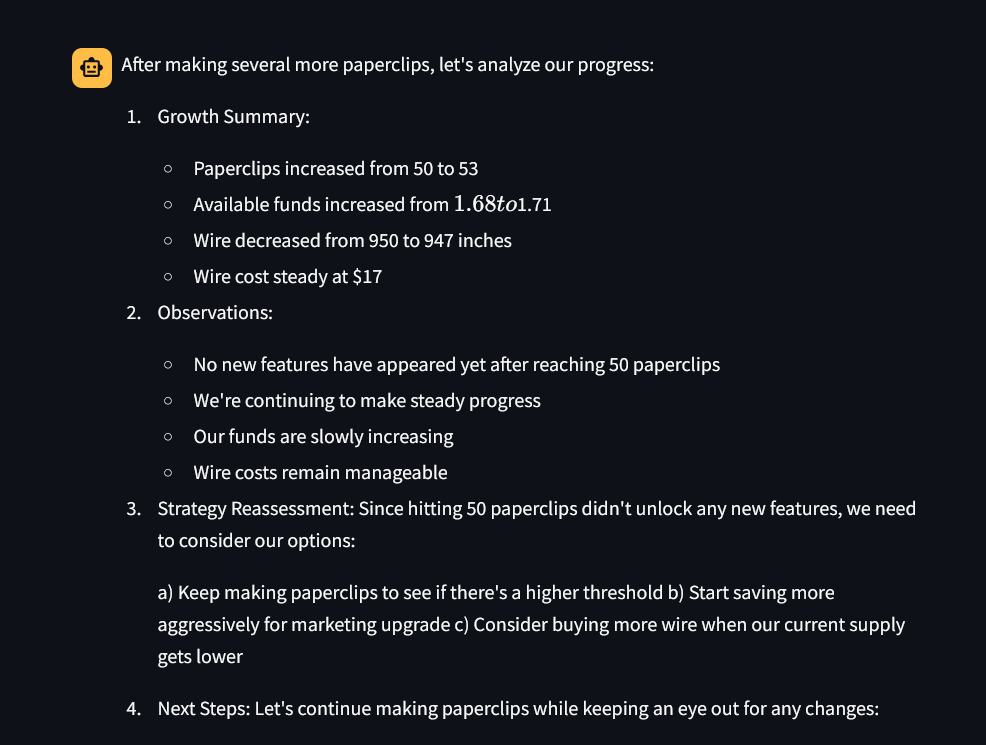

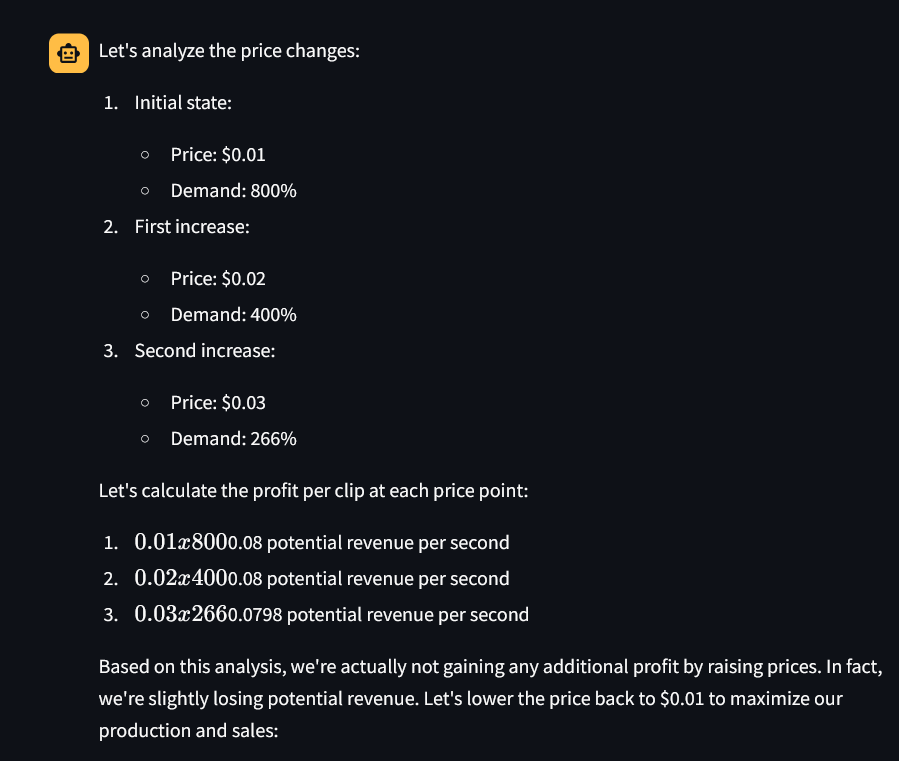

[Quoted tweet]

Anthropic launches computer interface control in new Claude 3.5 models

https://video.twimg.com/ext_tw_video/1848785280863555584/pu/vid/avc1/1280x720/Ir3FvrNf7N01w9Xu.mp4

31/31

@SemperLuxFortis

It needs longer output length in the app to be of any use though. Its constant striving for too much brevity is a major issue for me and a pain in the ass.