Llama-3.1-nemotron-70b-instruct

1/1

@NVIDIAAIDev

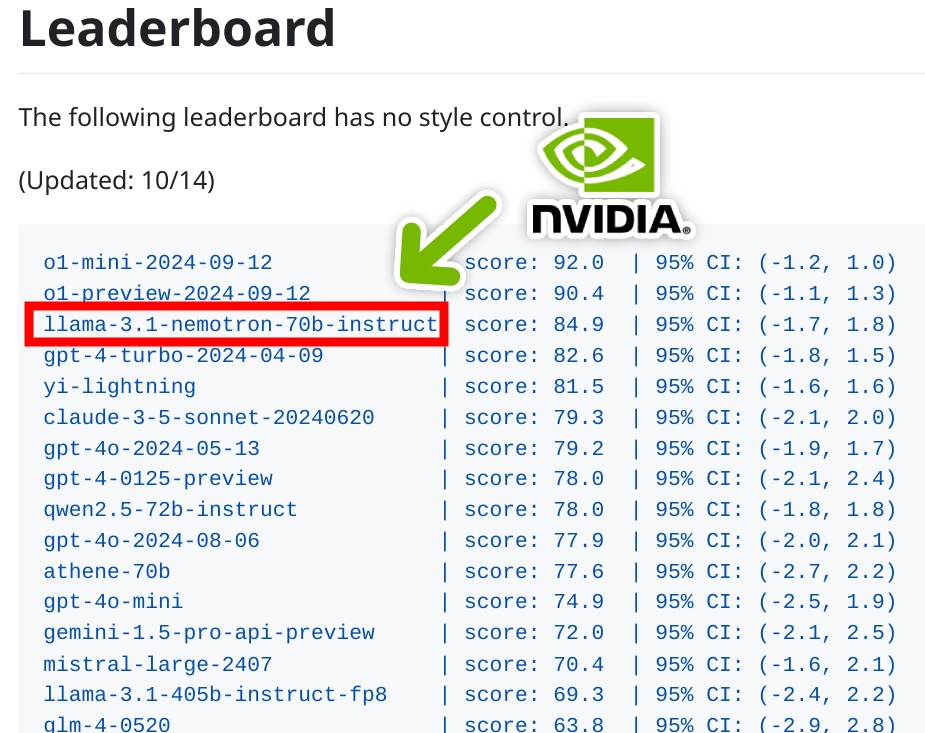

Our Llama-3.1-Nemotron-70B-Instruct model is a leading model on the Arena Hard benchmark (85) from @lmarena_ai.

Arena Hard benchmark (85) from @lmarena_ai.

Arena Hard uses a data pipeline to build high-quality benchmarks from live data in Chatbot Arena, and is known for its predictive ability of Chatbot Arena Elo score as well as separability between helpful and less helpful models.

Use our customized model Llama-3.1-Nemotron-70B to improve the helpfulness of LLM generated responses in your applications.

Try on our API catalog: NVIDIA NIM | llama-3_1-nemotron-70b-instruct

Try on our API catalog: NVIDIA NIM | llama-3_1-nemotron-70b-instruct

On @GitHub: GitHub - lmarena/arena-hard-auto: Arena-Hard-Auto: An automatic LLM benchmark.

On @GitHub: GitHub - lmarena/arena-hard-auto: Arena-Hard-Auto: An automatic LLM benchmark.

Or on @huggingface: nvidia/Llama-3.1-Nemotron-70B-Instruct · Hugging Face

Or on @huggingface: nvidia/Llama-3.1-Nemotron-70B-Instruct · Hugging Face

https://video.twimg.com/ext_tw_video/1846227628749197312/pu/vid/avc1/1254x720/a-lJe7AoX1glqAIP.mp4

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@NVIDIAAIDev

Our Llama-3.1-Nemotron-70B-Instruct model is a leading model on the

Arena Hard uses a data pipeline to build high-quality benchmarks from live data in Chatbot Arena, and is known for its predictive ability of Chatbot Arena Elo score as well as separability between helpful and less helpful models.

Use our customized model Llama-3.1-Nemotron-70B to improve the helpfulness of LLM generated responses in your applications.

https://video.twimg.com/ext_tw_video/1846227628749197312/pu/vid/avc1/1254x720/a-lJe7AoX1glqAIP.mp4

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/1

[9/10] Detailed benchmarks show Llama-3.1-Nemotron-51B-Instruct preserves 97-100% accuracy across various tasks compared to Llama 3.1-70B-Instruct. It doubles throughput in multiple use cases. /search?q=#AIBenchmarks

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

[9/10] Detailed benchmarks show Llama-3.1-Nemotron-51B-Instruct preserves 97-100% accuracy across various tasks compared to Llama 3.1-70B-Instruct. It doubles throughput in multiple use cases. /search?q=#AIBenchmarks

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/3

@geifman_y

We are happy to share that we have released our first model in our new home at @nvidia -Llama-3.1-Nemotron-51B-Instruct.

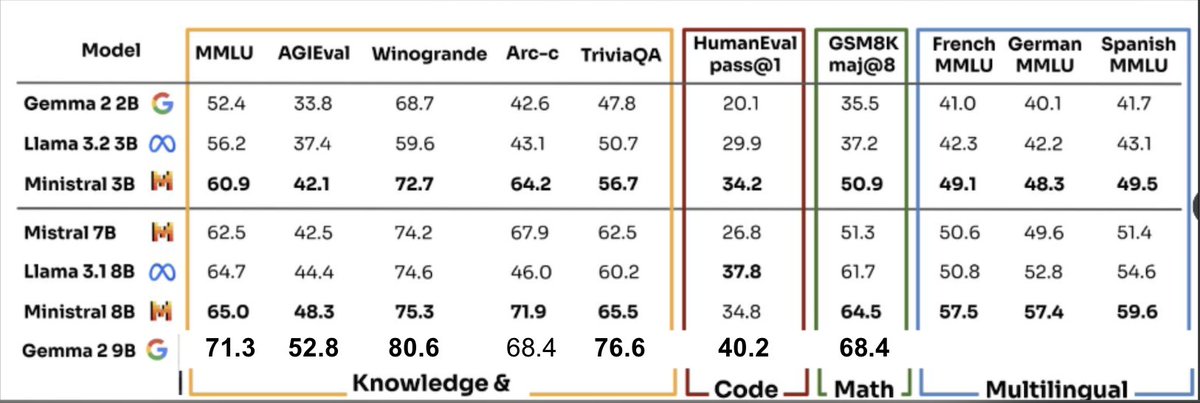

The model is derived from Meta’s Llama-3.1-70B, using a novel Neural Architecture Search (NAS) approach that results in a highly accurate and efficient model.

2/3

@geifman_y

The model fits on a single NVIDIA H100 GPU at high workloads, making it more accessible and affordable. The excellent accuracy-efficiency sweet spot exhibited by the new model stems from changes to the model’s architecture,

3/3

@geifman_y

leading to a significantly lower memory footprint, reduced memory bandwidth, and reduced FLOPs while maintaining excellent accuracy.

HF model - nvidia/Llama-3_1-Nemotron-51B-Instruct · Hugging Face

Blog - Advancing the Accuracy-Efficiency Frontier with Llama-3.1-Nemotron-51B | NVIDIA Technical Blog

NIM endpoint - NVIDIA NIM | llama-3_1-nemotron-51b-instruct

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@geifman_y

We are happy to share that we have released our first model in our new home at @nvidia -Llama-3.1-Nemotron-51B-Instruct.

The model is derived from Meta’s Llama-3.1-70B, using a novel Neural Architecture Search (NAS) approach that results in a highly accurate and efficient model.

2/3

@geifman_y

The model fits on a single NVIDIA H100 GPU at high workloads, making it more accessible and affordable. The excellent accuracy-efficiency sweet spot exhibited by the new model stems from changes to the model’s architecture,

3/3

@geifman_y

leading to a significantly lower memory footprint, reduced memory bandwidth, and reduced FLOPs while maintaining excellent accuracy.

HF model - nvidia/Llama-3_1-Nemotron-51B-Instruct · Hugging Face

Blog - Advancing the Accuracy-Efficiency Frontier with Llama-3.1-Nemotron-51B | NVIDIA Technical Blog

NIM endpoint - NVIDIA NIM | llama-3_1-nemotron-51b-instruct

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/1

@nvidiaaidev just launched Llama 3.1-Nemotron-70B-Reward, a cutting-edge reward model designed to enhance Reinforcement Learning from Human Feedback. Explore how to use it to elevate your AI projects in this technical blog.

@nvidiaaidev just launched Llama 3.1-Nemotron-70B-Reward, a cutting-edge reward model designed to enhance Reinforcement Learning from Human Feedback. Explore how to use it to elevate your AI projects in this technical blog.

New Reward Model Helps Improve LLM Alignment with Human Preferences | NVIDIA Technical Blog

New Reward Model Helps Improve LLM Alignment with Human Preferences | NVIDIA Technical Blog

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Last edited: