
@rohanpaul_ai

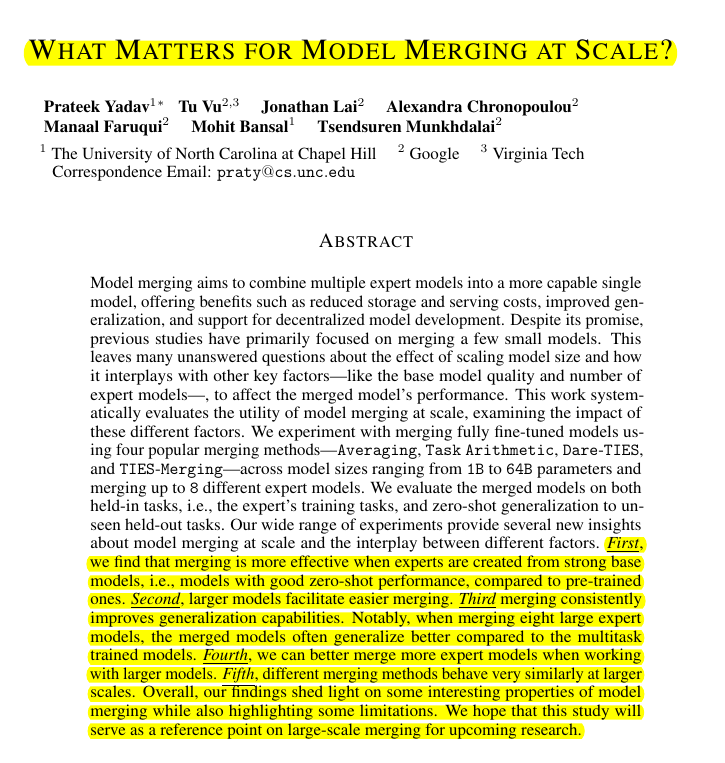

Merging instruction-tuned models at scale yields superior performance and generalization across diverse tasks.

• Instruction-tuned base models facilitate easier merging

• Larger models merge more effectively

• Merged models show improved zero-shot generalization

• Merging methods perform similarly for large instruction-tuned models

------

Generated this podcast with Google's Illuminate.

[Quoted tweet]

Merging instruction-tuned models at scale yields superior performance and generalization across diverse tasks.

**Original Problem**

Model merging combines expert models to create a unified model with enhanced capabilities. Previous studies focused on small models and limited merging scenarios, leaving questions about scalability unanswered.

-----

**Solution in this Paper**

• Systematic evaluation of model merging at scale (1B to 64B parameters)

• Used PaLM-2 and PaLM-2-IT models as base models

• Created expert models via fine-tuning on 8 held-in task categories

• Tested 4 merging methods: Averaging, Task Arithmetic, Dare-TIES, TIES-Merging

• Varied number of expert models merged (2 to 8)

• Evaluated on held-in tasks and 4 held-out task categories for zero-shot generalization
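For readers who have not seen these methods, here is a rough sketch of three of them (Averaging, Task Arithmetic, TIES-Merging) on toy weights. This is not the paper's code. The `Weights` layout, the function names, and the `density` and `scale` defaults are all made up for illustration. Trimming is done per parameter here for simplicity, and the DARE step of Dare-TIES (random drop and rescale) is left out.

```python
from typing import Dict, List

# Hypothetical toy layout: parameter name -> flat list of values.
Weights = Dict[str, List[float]]


def average_merge(experts: List[Weights]) -> Weights:
    """Averaging: element-wise mean of the expert weights."""
    n = len(experts)
    return {
        k: [sum(col) / n for col in zip(*(e[k] for e in experts))]
        for k in experts[0]
    }


def task_vectors(base: Weights, experts: List[Weights]) -> List[Weights]:
    """Task vector = expert weights minus base weights."""
    return [
        {k: [w - b for w, b in zip(e[k], base[k])] for k in base}
        for e in experts
    ]


def task_arithmetic_merge(base: Weights, experts: List[Weights],
                          scale: float = 1.0) -> Weights:
    """Task Arithmetic: add the scaled sum of task vectors to the base."""
    tvs = task_vectors(base, experts)
    return {
        k: [b + scale * sum(col)
            for b, col in zip(base[k], zip(*(tv[k] for tv in tvs)))]
        for k in base
    }


def trim(vec: List[float], density: float) -> List[float]:
    """Keep the largest-magnitude `density` fraction of entries, zero the rest.
    Entries tied with the threshold are all kept."""
    k = max(1, int(density * len(vec)))
    threshold = sorted((abs(v) for v in vec), reverse=True)[k - 1]
    return [v if abs(v) >= threshold else 0.0 for v in vec]


def ties_merge(base: Weights, experts: List[Weights],
               density: float = 0.2, scale: float = 1.0) -> Weights:
    """TIES-style merge: trim, elect a sign per entry, average agreeing values."""
    tvs = task_vectors(base, experts)
    merged: Weights = {}
    for k in base:
        trimmed = [trim(tv[k], density) for tv in tvs]
        out = []
        for b, col in zip(base[k], zip(*trimmed)):
            # Elected sign is the sign of the summed trimmed values
            # (an exact zero sum is treated as positive in this sketch).
            sign = 1.0 if sum(col) >= 0 else -1.0
            agreeing = [v for v in col if v * sign > 0]
            delta = sum(agreeing) / len(agreeing) if agreeing else 0.0
            out.append(b + scale * delta)
        merged[k] = out
    return merged


# Toy example with made-up numbers (not from the paper).
base = {"w": [0.0, 0.0, 0.0, 0.0]}
expert_a = {"w": [1.0, 0.1, -1.5, 0.0]}
expert_b = {"w": [3.0, -0.1, 2.0, 0.5]}

print(average_merge([expert_a, expert_b]))
print(task_arithmetic_merge(base, [expert_a, expert_b]))
print(ties_merge(base, [expert_a, expert_b], density=0.5))
```

In the third slot the two experts disagree on sign. Averaging and Task Arithmetic let them partly cancel, while the TIES-style merge keeps only the value matching the elected sign.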

-----

**Key Insights from this Paper**

• Instruction-tuned base models facilitate easier merging

• Larger models merge more effectively

• Merged models show improved zero-shot generalization

• Merging methods perform similarly for large instruction-tuned models

-----

**Results**

• PaLM-2-IT consistently outperformed PaLM-2 across all settings

• 64B merged models approached task-specific expert performance (normalized score: 0.97)

• Merged 24B+ PaLM-2-IT models surpassed multitask baselines on held-out tasks

• 64B PaLM-2-IT merged model improved held-out performance by 18% over base model
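The tweet does not say how the normalized score or the 18% figure are computed. One plausible reading, offered only as an assumption, is that each task's merged-model score is divided by a reference score (the task-specific expert for held-in tasks) and the ratios are averaged, and that the 18% is a relative gain over the base model. The function names and the numbers below are hypothetical and are not results from the paper.

```python
from typing import Dict


def normalized_score(merged: Dict[str, float],
                     reference: Dict[str, float]) -> float:
    """Mean over tasks of merged score / reference score (assumed definition)."""
    ratios = [merged[task] / reference[task] for task in reference]
    return sum(ratios) / len(ratios)


def relative_improvement(new: float, old: float) -> float:
    """Relative gain of `new` over `old`, e.g. 0.18 means 18% (assumed reading)."""
    return (new - old) / old


# Made-up per-task scores, purely for illustration.
expert_scores = {"task_a": 0.5, "task_b": 1.0}
merged_scores = {"task_a": 0.5, "task_b": 0.75}

print(normalized_score(merged_scores, expert_scores))
print(relative_improvement(1.5, 1.25))
```

Under this reading, a normalized score of 0.97 would mean the merged model recovers on average 97% of each expert's performance on that expert's own task.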

https://video.twimg.com/ext_tw_video/1844913538378174478/pu/vid/avc1/1080x1080/E-22zf5PWVxGtLpm.mp4

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196
