1/11

@reach_vb

Let's goo! F5-TTS

> Trained on 100K hours of data

> Zero-shot voice cloning

> Speed control (based on total duration)

> Emotion-based synthesis

> Long-form synthesis

> Supports code-switching

> Best part: CC-BY license (commercially permissive)
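The "Speed control (based on total duration)" bullet means the model is told up front how long the output should be, and speed simply rescales that length. Below is a minimal sketch that assumes the total is extrapolated from the reference clip's frames-per-text ratio. The helper names `seconds_to_frames` and `estimate_total_frames`, the 24 kHz / 256-hop mel settings, and measuring text length in UTF-8 bytes are all illustrative assumptions, not F5-TTS's confirmed code.

```python
def seconds_to_frames(seconds: float, sample_rate: int = 24_000, hop_length: int = 256) -> int:
    """Convert an audio length to mel frames (sample rate and hop length are assumptions)."""
    return int(round(seconds * sample_rate)) // hop_length


def estimate_total_frames(ref_frames: int, ref_text: str, gen_text: str, speed: float = 1.0) -> int:
    """Hypothetical helper: reference frames plus frames extrapolated for the new text.

    Text length is measured in UTF-8 bytes (an assumption). Dividing by `speed`
    shortens the target duration, so the same text is spoken faster.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    ref_len = len(ref_text.encode("utf-8"))
    gen_len = len(gen_text.encode("utf-8"))
    if ref_len == 0:
        raise ValueError("reference text must be non-empty")
    # How many frames the reference speaker spends per byte of text.
    frames_per_unit = ref_frames / ref_len
    gen_frames = int(frames_per_unit * gen_len / speed)
    return ref_frames + gen_frames
```

For example, calling `estimate_total_frames(seconds_to_frames(3.0), ref_text, gen_text, speed=1.2)` asks for roughly 17% fewer generated frames than `speed=1.0`. The speaker's pacing in the reference clip sets the baseline.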

Diffusion-based architecture:

> Non-Autoregressive + Flow Matching with DiT

> Uses ConvNeXt to refine the text representation and its alignment with speech
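The design is non-autoregressive and has no duration model, so text and speech must line up by length alone. The notes further down describe padding the text with filler tokens up to the speech length, after which ConvNeXt refines the padded text. Below is a minimal sketch of just that padding step. It assumes a character-level vocabulary, and `build_vocab`, `pad_text_to_frames`, and the filler id `0` are hypothetical names chosen for illustration.

```python
from typing import Dict, List

FILLER_ID = 0  # hypothetical id reserved for the filler token


def build_vocab(texts: List[str]) -> Dict[str, int]:
    """Hypothetical character vocabulary; ids start at 1 so 0 stays free for the filler."""
    chars = sorted({ch for text in texts for ch in text})
    return {ch: i + 1 for i, ch in enumerate(chars)}


def pad_text_to_frames(text: str, vocab: Dict[str, int], num_frames: int) -> List[int]:
    """Map characters to ids, then right-pad with filler tokens to exactly num_frames."""
    ids = [vocab[ch] for ch in text]
    if len(ids) > num_frames:
        # This sketch refuses rather than silently truncating the text.
        raise ValueError("text has more characters than target mel frames")
    return ids + [FILLER_ID] * (num_frames - len(ids))
```

The padded sequence has one entry per mel frame, so it can sit alongside the speech frames on the same time axis. No separate duration or alignment model is needed to decide which character goes where.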

Synthesised: I was, like, talking to my friend, and she’s all, um, excited about her, uh, trip to Europe, and I’m just, like, so jealous, right? (Happy emotion)

The TTS scene is on fire!

https://video.twimg.com/ext_tw_video/1845154255683919887/pu/vid/avc1/480x300/mzGDLl_iiw5TUzGg.mp4

2/11

@reach_vb

Check out the open model weights here:

SWivid/F5-TTS · Hugging Face

3/11

@reach_vb

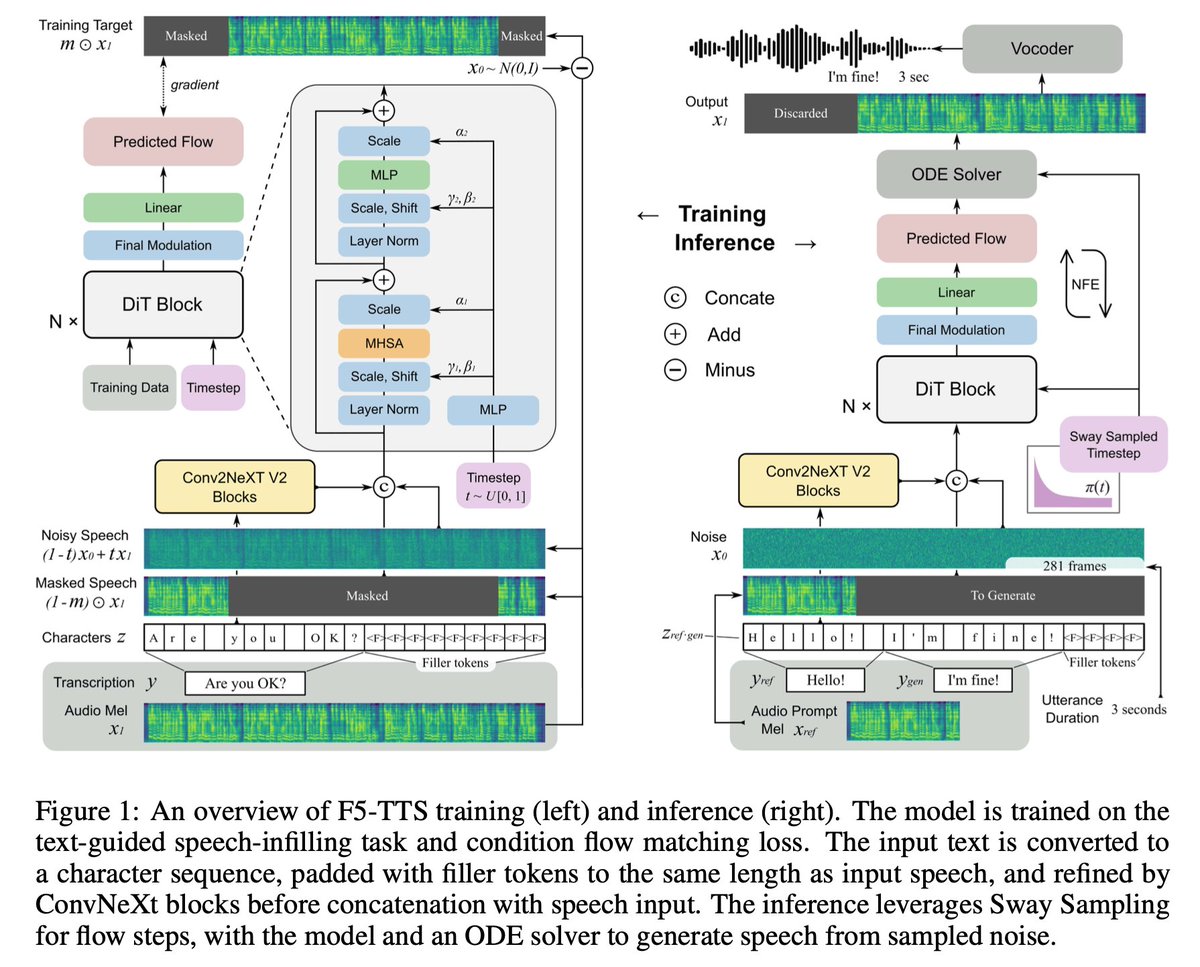

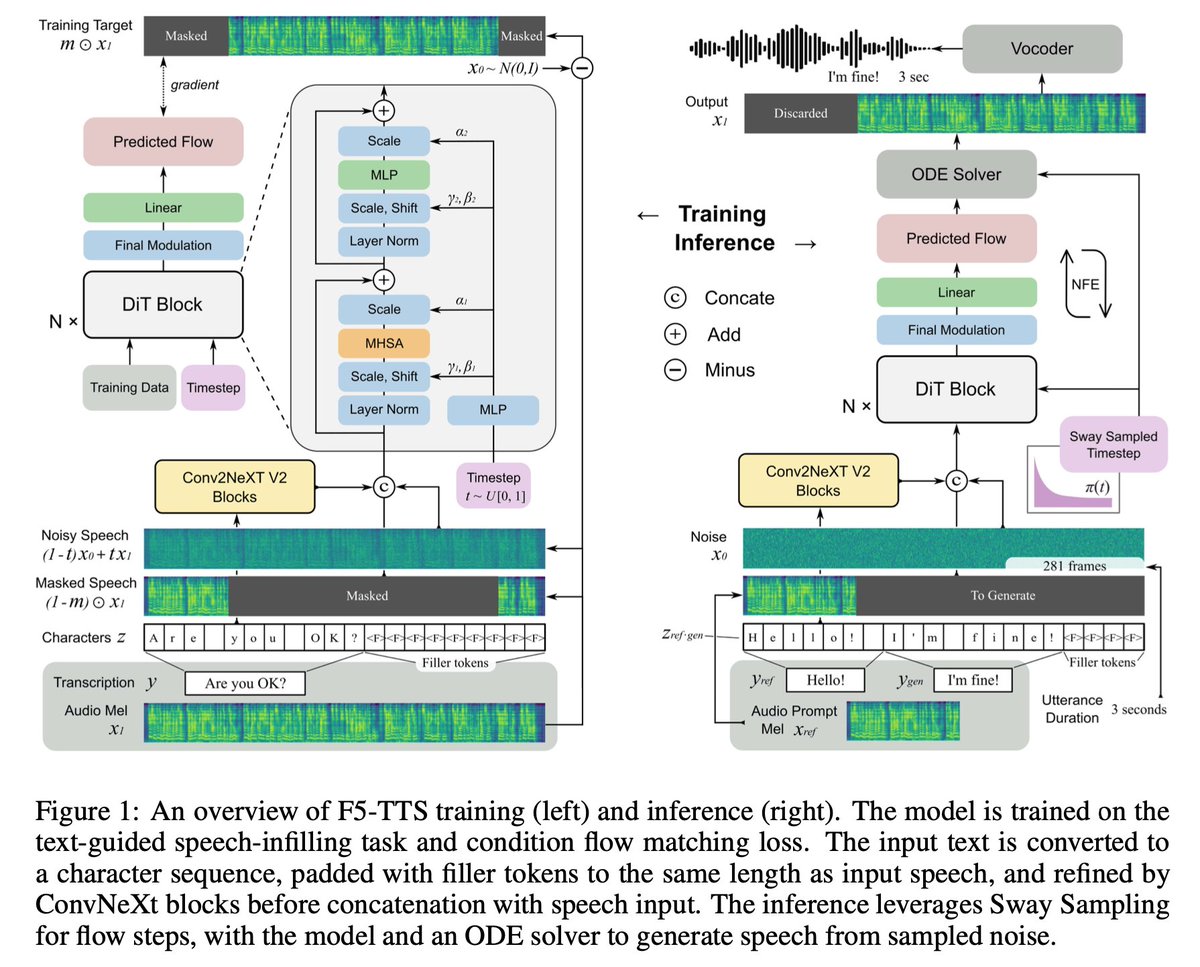

Overall architecture:

4/11

@reach_vb

Also, @realmrfakename made a dope space to play with the model:

E2/F5 TTS - a Hugging Face Space by mrfakename

5/11

@reach_vb

Some notes on the model:

1. Non-Autoregressive Design: Uses filler tokens to pad the text to the same length as the speech, eliminating the need for extra components such as a duration model and a text encoder.

2. Flow Matching with DiT: Employs flow matching with a Diffusion Transformer (DiT) for denoising and speech generation.

3. ConvNeXt for Text: Used to refine the text representation, improving its alignment with speech.

4. Sway Sampling: Introduces an inference-time Sway Sampling strategy that improves performance and efficiency and can be applied to existing models without retraining.

5. Fast Inference: Achieves an inference Real-Time Factor (RTF) of 0.15, i.e. roughly 0.15 s of compute per second of generated audio, faster than state-of-the-art diffusion-based TTS models.

6. Multilingual Zero-Shot: Trained on a 100K-hour multilingual dataset, it produces natural, expressive zero-shot speech with seamless code-switching and efficient speed control.
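Items 2 and 4 fit together at inference time. Flow matching trains a network to predict a velocity that carries noise (t = 0) to speech (t = 1), and sampling integrates that velocity over a grid of time steps. Sway Sampling changes only where those steps are placed, using t = u + s·(cos(πu/2) - 1 + u) with u uniform on [0, 1], which is why it needs no retraining. Below is a minimal sketch that assumes a plain Euler solver and a toy analytic velocity field standing in for the DiT. The names `sway_schedule`, `euler_sample`, and `toy_velocity` are illustrative and are not F5-TTS's API.

```python
import numpy as np


def sway_schedule(nfe: int, s: float = -1.0) -> np.ndarray:
    """Sway Sampling time steps: t = u + s * (cos(pi/2 * u) - 1 + u), u uniform on [0, 1].

    s < 0 packs more steps near t = 0 (the noisy start); s = 0 gives a uniform grid.
    """
    if not -1.0 <= s <= 2.0 / (np.pi - 2.0):
        raise ValueError("s is outside the range where the schedule stays monotonic")
    u = np.linspace(0.0, 1.0, nfe + 1)
    return u + s * (np.cos(np.pi / 2 * u) - 1.0 + u)


def euler_sample(velocity_fn, x0: np.ndarray, t_steps: np.ndarray) -> np.ndarray:
    """Integrate dx/dt = v(x, t) from noise x0 at t=0 towards data at t=1 with explicit Euler steps."""
    x = x0.copy()
    for t_cur, t_next in zip(t_steps[:-1], t_steps[1:]):
        x = x + (t_next - t_cur) * velocity_fn(x, t_cur)
    return x


# Toy stand-in for the DiT: the exact flow-matching velocity towards one fixed target
# under the linear path x_t = (1 - t) * x0 + t * x1, which gives v = (x1 - x_t) / (1 - t).
target = np.array([0.5, -1.0, 2.0])


def toy_velocity(x: np.ndarray, t: float) -> np.ndarray:
    return (target - x) / (1.0 - t)
```

With `s = -1` the schedule reduces to t = 1 - cos(πu/2), so the early, noisy steps are small and the later ones larger, and `s = 0` recovers the uniform grid. In this toy case `euler_sample(toy_velocity, noise, sway_schedule(16))` lands on `target` for any monotonic schedule. A learned velocity field has no such guarantee, which is where step placement matters.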

6/11

@TommyFalkowski

This model is pretty good indeed! I haven't tried long-form generation yet, but I'm really excited to have a model that could replace the online Edge TTS I'm currently using.

[Quoted tweet]

I think we might finally have an ElevenLabs-level text-to-speech model at home! I got the demo to run on a machine with an RTX 3070 with 8 GB of VRAM!

https://video.twimg.com/ext_tw_video/1844477815166500885/pu/vid/avc1/1108x720/ULTpniql5_9M761K.mp4

7/11

@realkieranlewis

Can this be run on Replicate etc.? Any indication of cost vs. ElevenLabs?

8/11

@j6sp5r

nice

Wen in open NotebookLM

9/11

@lalopenguin

it sounds great!!

10/11

@BhanuKonepalli

This one's a game changer!!

11/11

@modeless

Ooh, this looks really great!

