1/11

@ArtificialAnlys

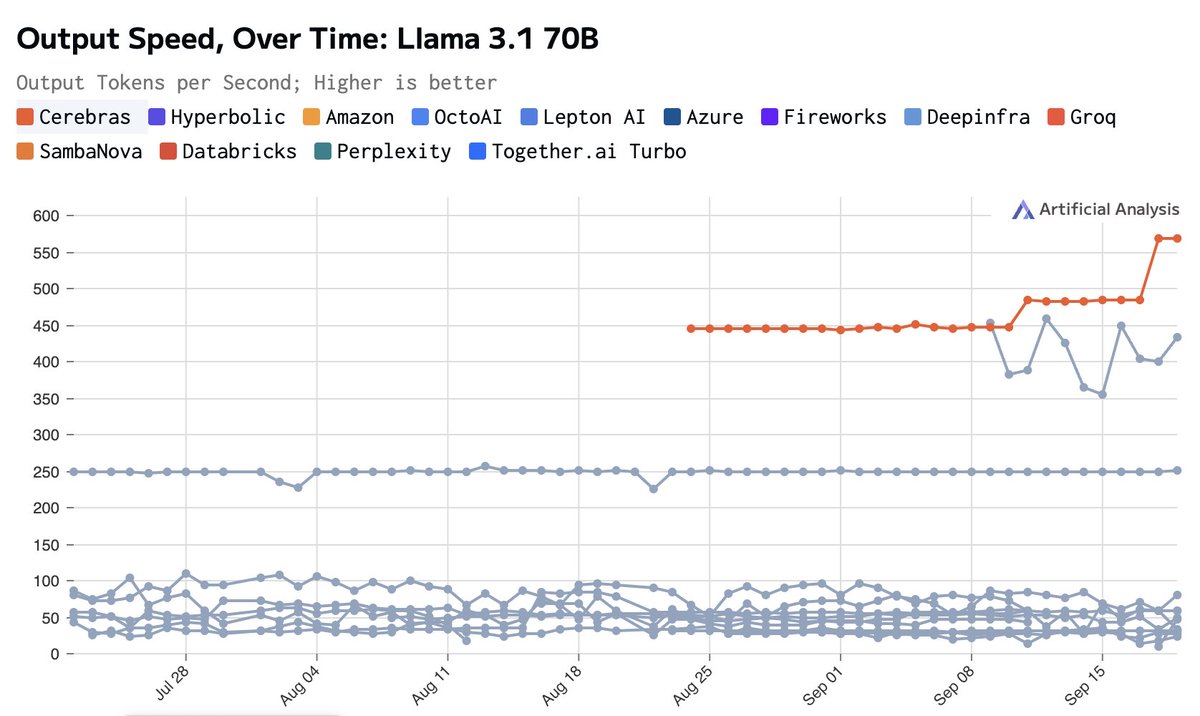

Cerebras continues to deliver output speed improvements, breaking the 2,000 tokens/s barrier on Llama 3.1 8B and 550 tokens/s on 70B

Since launching less than a month ago, @CerebrasSystems has continued to improve output speed inference performance on their custom chips.

We are now measuring 2,005 output tokens per second on @AIatMeta's Llama 3.1 8B and 566 output tokens per second on Llama 3.1 70B.

Faster output speed supports use-cases which require low-latency interactions including consumer applications (games, chatbots, etc) and new techniques of using the models such as agents and multi-query RAG.

Link to our comparison of Llama 3.1 70B and 8B providers below

2/11

@ArtificialAnlys

Analysis of Llama 3.1 70B providers:

https://artificialanalysis.ai/models/llama-3-1-instruct-70b/providers

Analysis of Llama 3.1 8B providers:

https://artificialanalysis.ai/models/llama-3-1-instruct-8b/providers

3/11

@linqtoinc

Incredible work @CerebrasSystems team!

4/11

@JonathanRoseD

Llama 405B when? I assume not soon because of the hardware—a chip that could handle that would be an absolute BEAST

5/11

@alby13

Why don't they talk about running Llama 3.1 405B?

6/11

@JERBAGSCRYPTO

When IPO

7/11

@itsTimDent

We need qwen or qwen coder similar output speeds.

8/11

@kalyan5v

Cerebras systems need lot of PR if it’s taking on #NVDA

9/11

@pa_pfeiffer

How do you validate that the model behind is actually Llama3.1 8B with full precision (bfloat16) and not something quantized, pruned or distilled?

10/11

@tristanbob

Amazing, congrats @CerebrasSystems!

11/11

@StartupHubAI

[Quoted tweet]

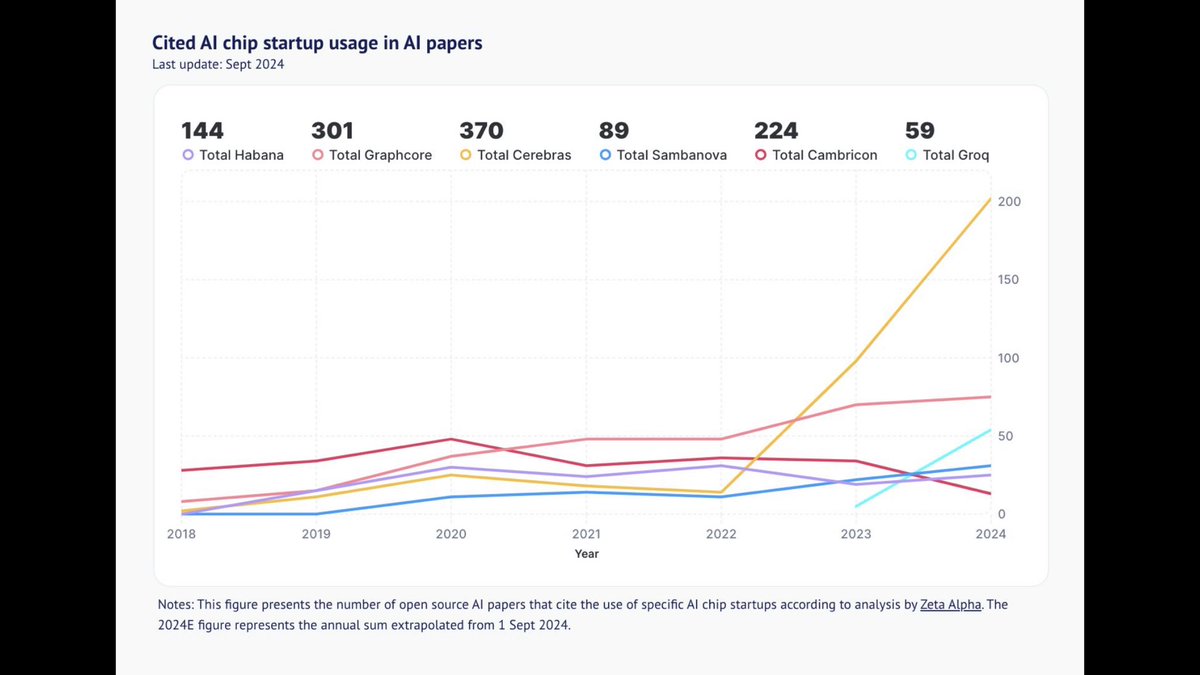

Top AI investor and researcher and market analyst @nathanbenaich updated @stateofaireport with #AI chip usage

@CerebrasSystems doubled YoY.

@graphcoreai

@SambaNovaAI

@GroqInc

@HabanaLabs

it’s how many AI startup chips are cited in AI research papers, a clever proxy

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196
