1/11

@denny_zhou

What is the performance limit when scaling LLM inference? Sky's the limit.

We have mathematically proven that transformers can solve any problem, provided they are allowed to generate as many intermediate reasoning tokens as needed. Remarkably, constant depth is sufficient.

[2402.12875] Chain of Thought Empowers Transformers to Solve Inherently Serial Problems (ICLR 2024)

2/11

@denny_zhou

Just noticed a fun youtube video for explaining this paper. LoL. Pointed by @laion_ai http://invidious.poast.org/4JNe-cOTgkY

3/11

@ctjlewis

hey Denny, curious if you have any thoughts. i reached the same conclusion:

[Quoted tweet]

x.com/i/article/178554774683…

4/11

@denny_zhou

Impressive! You would be interested at seeing this: [2301.04589] Memory Augmented Large Language Models are Computationally Universal

5/11

@nearcyan

what should one conclude from such a proof if it’s not also accompanied by a proof that we can train a transformer into the state (of solving a given arbitrary problem), possibly even with gradient descent and common post training techniques?

6/11

@QuintusActual

“We have mathematically proven that transformers can solve any problem, provided they are allowed to generate as many intermediate reasoning tokens as needed.”

I’m guessing this is only true because as a problem grows in difficulty, the # of required tokens approaches

7/11

@Shawnryan96

How do they solve novel problems without a way to update the world model?

8/11

@Justin_Halford_

Makes sense for verifiable domains (e.g. math and coding).

Does this generalize to more ambiguous domains with competing values/incentives without relying on human feedback?

9/11

@ohadasor

Don't fall into it!!

[Quoted tweet]

"can solve any problem"? Really?? Let's read the abstract in the image attached to the post, and see if the quote is correct. Ah wow! Somehow he forgot to quote the rest of the sentence! How is that possible?

The full quote is "can solve any problem solvable by boolean circuits of size T". This changes a lot. All problems solvable by Boolean circuits, of any size, is called the Circuit Evaluation Problem, and is known to cover precisely polynomial time (P) calculations. So it cannot solve the most basic logical problems which are at least exponential. Now here we don't even have P, we have only circuits of size T, which validates my old mantra: it can solve only constant-time problems. The lowest possible complexity class.

And it also validates my claim about the bubble of machine learning promoted by people who have no idea what they're talking about.

10/11

@CompSciFutures

Thx, refreshingly straightforward notation too, I might take the time to read this one properly.

I'm just catching up and have a dumb Q... that is an interestingly narrow subset of symbolic operands. Have you considered what happens if you add more?

11/11

@BatAndrew314

Noob question- how is it related to universal approximation theorem? Meaning does transformer can solve any problem because it is neural net? Or it’s some different property of transformers and CoT?

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@denny_zhou

What is the performance limit when scaling LLM inference? Sky's the limit.

We have mathematically proven that transformers can solve any problem, provided they are allowed to generate as many intermediate reasoning tokens as needed. Remarkably, constant depth is sufficient.

[2402.12875] Chain of Thought Empowers Transformers to Solve Inherently Serial Problems (ICLR 2024)

2/11

@denny_zhou

Just noticed a fun youtube video for explaining this paper. LoL. Pointed by @laion_ai http://invidious.poast.org/4JNe-cOTgkY

3/11

@ctjlewis

hey Denny, curious if you have any thoughts. i reached the same conclusion:

[Quoted tweet]

x.com/i/article/178554774683…

4/11

@denny_zhou

Impressive! You would be interested at seeing this: [2301.04589] Memory Augmented Large Language Models are Computationally Universal

5/11

@nearcyan

what should one conclude from such a proof if it’s not also accompanied by a proof that we can train a transformer into the state (of solving a given arbitrary problem), possibly even with gradient descent and common post training techniques?

6/11

@QuintusActual

“We have mathematically proven that transformers can solve any problem, provided they are allowed to generate as many intermediate reasoning tokens as needed.”

I’m guessing this is only true because as a problem grows in difficulty, the # of required tokens approaches

7/11

@Shawnryan96

How do they solve novel problems without a way to update the world model?

8/11

@Justin_Halford_

Makes sense for verifiable domains (e.g. math and coding).

Does this generalize to more ambiguous domains with competing values/incentives without relying on human feedback?

9/11

@ohadasor

Don't fall into it!!

[Quoted tweet]

"can solve any problem"? Really?? Let's read the abstract in the image attached to the post, and see if the quote is correct. Ah wow! Somehow he forgot to quote the rest of the sentence! How is that possible?

The full quote is "can solve any problem solvable by boolean circuits of size T". This changes a lot. All problems solvable by Boolean circuits, of any size, is called the Circuit Evaluation Problem, and is known to cover precisely polynomial time (P) calculations. So it cannot solve the most basic logical problems which are at least exponential. Now here we don't even have P, we have only circuits of size T, which validates my old mantra: it can solve only constant-time problems. The lowest possible complexity class.

And it also validates my claim about the bubble of machine learning promoted by people who have no idea what they're talking about.

10/11

@CompSciFutures

Thx, refreshingly straightforward notation too, I might take the time to read this one properly.

I'm just catching up and have a dumb Q... that is an interestingly narrow subset of symbolic operands. Have you considered what happens if you add more?

11/11

@BatAndrew314

Noob question- how is it related to universal approximation theorem? Meaning does transformer can solve any problem because it is neural net? Or it’s some different property of transformers and CoT?

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

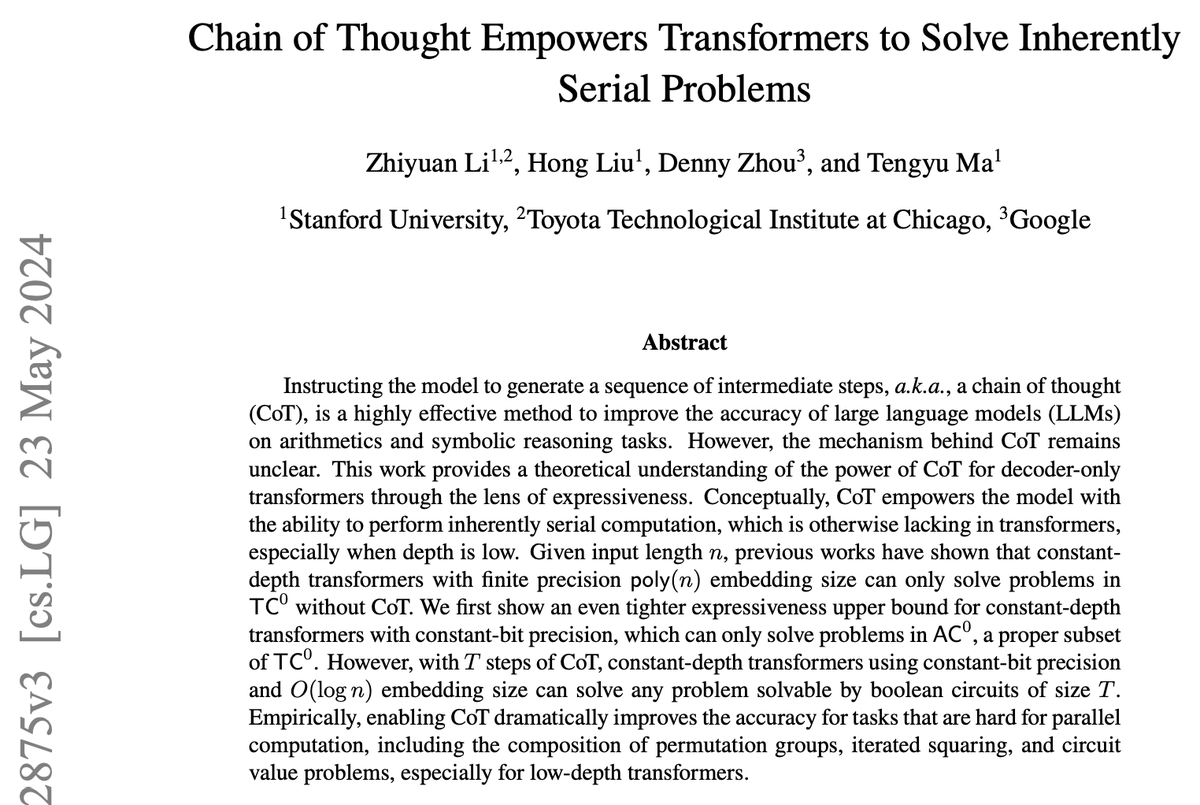

Chain of Thought Empowers Transformers to Solve Inherently Serial Problems

Instructing the model to generate a sequence of intermediate steps, a.k.a., a chain of thought (CoT), is a highly effective method to improve the accuracy of large language models (LLMs) on arithmetics and symbolic reasoning tasks. However, the mechanism behind CoT remains unclear. This work...

[Submitted on 20 Feb 2024 (v1), last revised 23 May 2024 (this version, v3)]

Chain of Thought Empowers Transformers to Solve Inherently Serial Problems

Zhiyuan Li, Hong Liu, Denny Zhou, Tengyu Ma

Instructing the model to generate a sequence of intermediate steps, a.k.a., a chain of thought (CoT), is a highly effective method to improve the accuracy of large language models (LLMs) on arithmetics and symbolic reasoning tasks. However, the mechanism behind CoT remains unclear. This work provides a theoretical understanding of the power of CoT for decoder-only transformers through the lens of expressiveness. Conceptually, CoT empowers the model with the ability to perform inherently serial computation, which is otherwise lacking in transformers, especially when depth is low. Given input length n, previous works have shown that constant-depth transformers with finite precision poly(n) embedding size can only solve problems in TC0 without CoT. We first show an even tighter expressiveness upper bound for constant-depth transformers with constant-bit precision, which can only solve problems in AC0, a proper subset of TC0. However, with T steps of CoT, constant-depth transformers using constant-bit precision and O(logn) embedding size can solve any problem solvable by boolean circuits of size T. Empirically, enabling CoT dramatically improves the accuracy for tasks that are hard for parallel computation, including the composition of permutation groups, iterated squaring, and circuit value problems, especially for low-depth transformers.

| Comments: | 38 pages, 10 figures. Accepted by ICLR 2024 |

| Subjects: | Machine Learning (cs.LG); Computational Complexity (cs.CC); Machine Learning (stat.ML) |

| Cite as: | arXiv:2402.12875 [cs.LG] |

| (or arXiv:2402.12875v3 [cs.LG] for this version) | |

| [2402.12875] Chain of Thought Empowers Transformers to Solve Inherently Serial Problems |

Submission history

From: Zhiyuan Li [view email][v1] Tue, 20 Feb 2024 10:11:03 UTC (3,184 KB)

[v2] Tue, 7 May 2024 17:00:27 UTC (5,555 KB)

[v3] Thu, 23 May 2024 17:10:39 UTC (5,555 KB)