1/78

@realGeorgeHotz

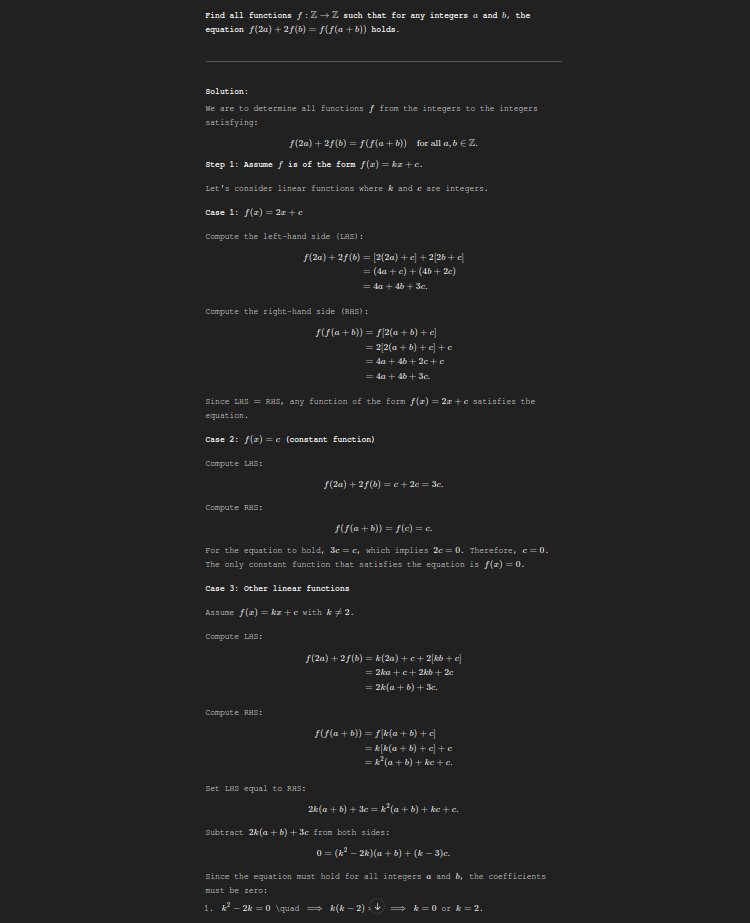

ChatGPT o1-preview is the first model that's capable of programming (at all). Saw an estimate of 120 IQ, feels about right.

Very bullish on RL in development environments. Write code, write tests, check work...repeat

Here it is writing tinygrad tests:

https://chatgpt.com/share/66e693ef-1a50-8000-81ff-899498f9d052

2/78

@realGeorgeHotz

To paraphrase Terence Tao, it's "a mediocre, but not completely incompetent, software engineer"

Maybe it 404ed because I continued the context? Here's the WIP PR. Just like with o1, you can imagine the "chain of thought" used :P

graph rewrite tests by geohot · Pull Request #6519 · tinygrad/tinygrad

3/78

@trickylabyrinth

the link is giving a 404.

4/78

@skidmarxist1

With chess, the strongest player was a human + AI combo for a while. Now it's just completely computer.

It feels like we are in that phase with IQ right now. The highest IQ is currently a combo of human + LLM or AI. How long till it's just AI by itself?

Also, memory has become largely external to our bodies (phones). More and more of our IQ will be external (outside the skin). The agency center of mass is getting further away from our actual center of mass.

5/78

@WholeMarsBlog

link returns a 404 for some reason

6/78

@danielarpm

Conversation was blocked due to policies

7/78

@JediWattzon22

I’m bearish on a 200 status

8/78

@nw3

AI with an IQ of 120 is sufficiently devastating. Leaves room for true geniuses to innovate but is smarter than the vast majority of humanity.

9/78

@remusrisnov

The IQ test assessment does not tell you that the LLM had IQ tests and answers in its training data. Not a useful measurement; @arcprize is better.

10/78

@yayavarkm

How is it at analysing complex data?!

11/78

@TroyMurs

I don’t know bro…homie thinks he is 150.

I’ve actually done this test on all the models and this is the first time it’s ever been over 140.

12/78

@sparbz

?? plenty of previous models can program (well)

13/78

@myronkoch

the chatGPT link you posted 404's

14/78

@romainsimon

Claude Sonnet 3.5 was already pretty good for some things

15/78

@shawnchauhan1

Natural language processing is poised to revolutionize how we interact with technology. It's the future of coding

16/78

@monguetown

I disagree. It can write the code you tell it to write especially in the context of an existing system. And incorporate new code into that legacy system. And optimize it.

17/78

@heuristics

That’s a skill issue. They have been capable of programming well for a while. You just have to specify what you want them to do.

18/78

@david_a_thigpen

Well, 404 error. I'm sure that the correct link will function the same way. e.g. add test for resource the prompt engineer controls

19/78

@gfodor

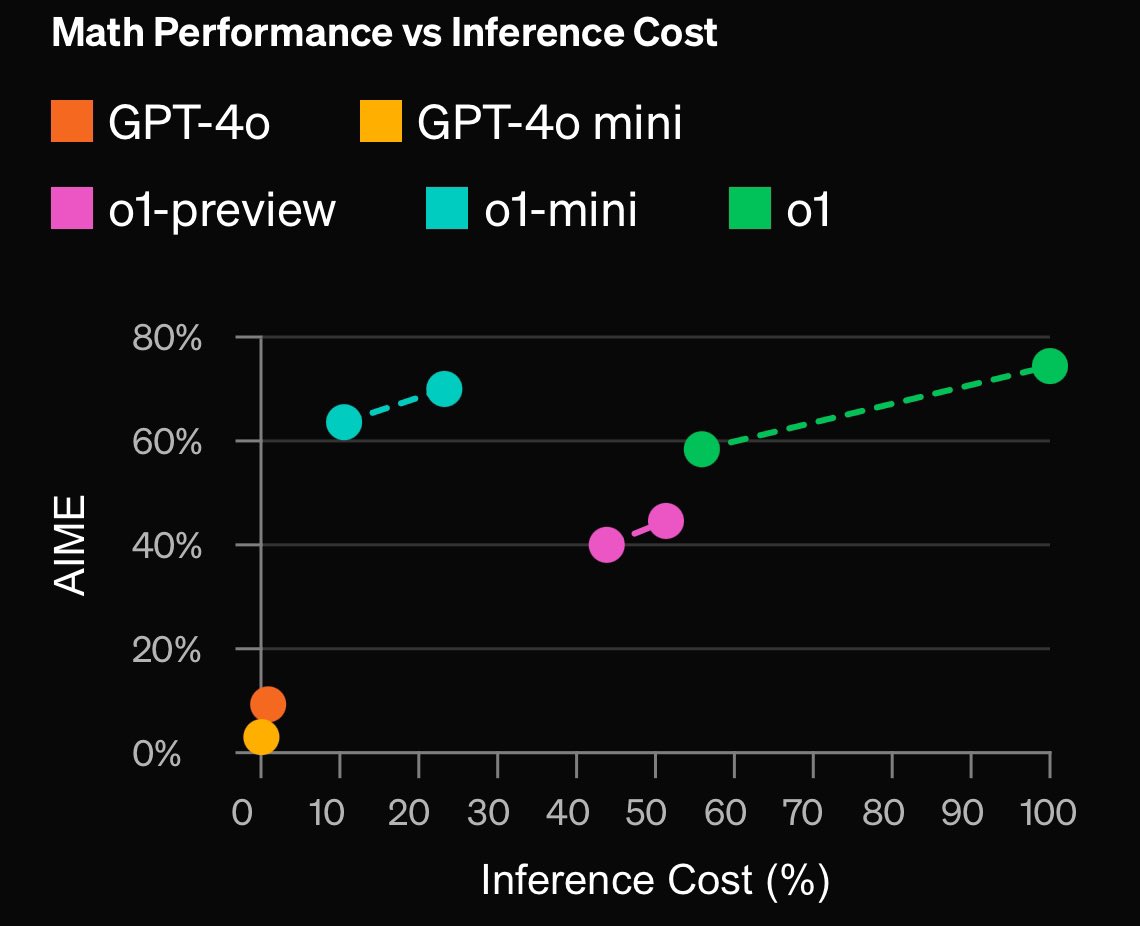

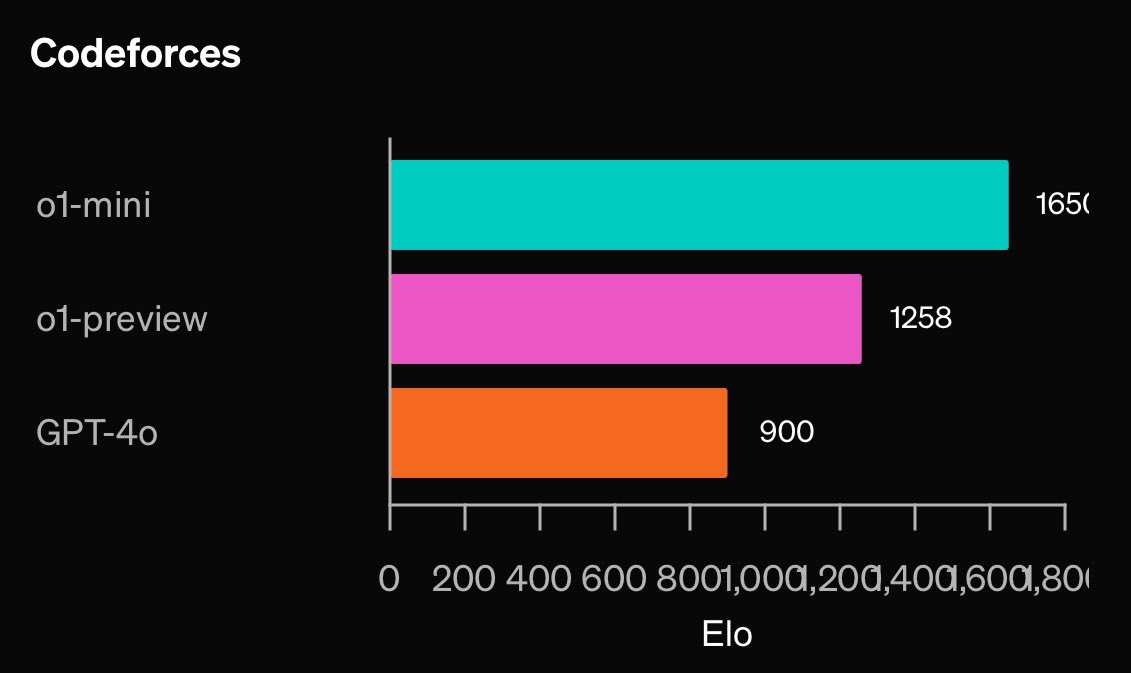

did you compare vs o1-mini? o1-mini is very good.

20/78

@TheAI2C

I will bet $3k in BTC that it can’t make a macro that continuously mouse clicks only while the physical left mouse button is held down on a GNU/Linux operating system without using a virtual machine.

21/78

@zoftie

22/78

@shundeshagen

When will programming as we know it today become obsolete?

23/78

@stevelizcano

o1-preview or mini? mini is supposed to be better at coding

24/78

@shw1nm

when you asked if the test or the code was incorrect, it said the code

was that correct?

25/78

@jmeierX

Natural language will be the next big coding language

26/78

@truthavatar777

The first thing I did with ChatGPT 4 was make it crawl through my company's codebase to extract the code from other non-git friendly assets. Then I loaded that as a knowledge file and it was promising. But what you're showing here is a dramatic step forward.

27/78

@Emily_Escapor

Good, two more updates and we hit God mode

28/78

@JD_2020

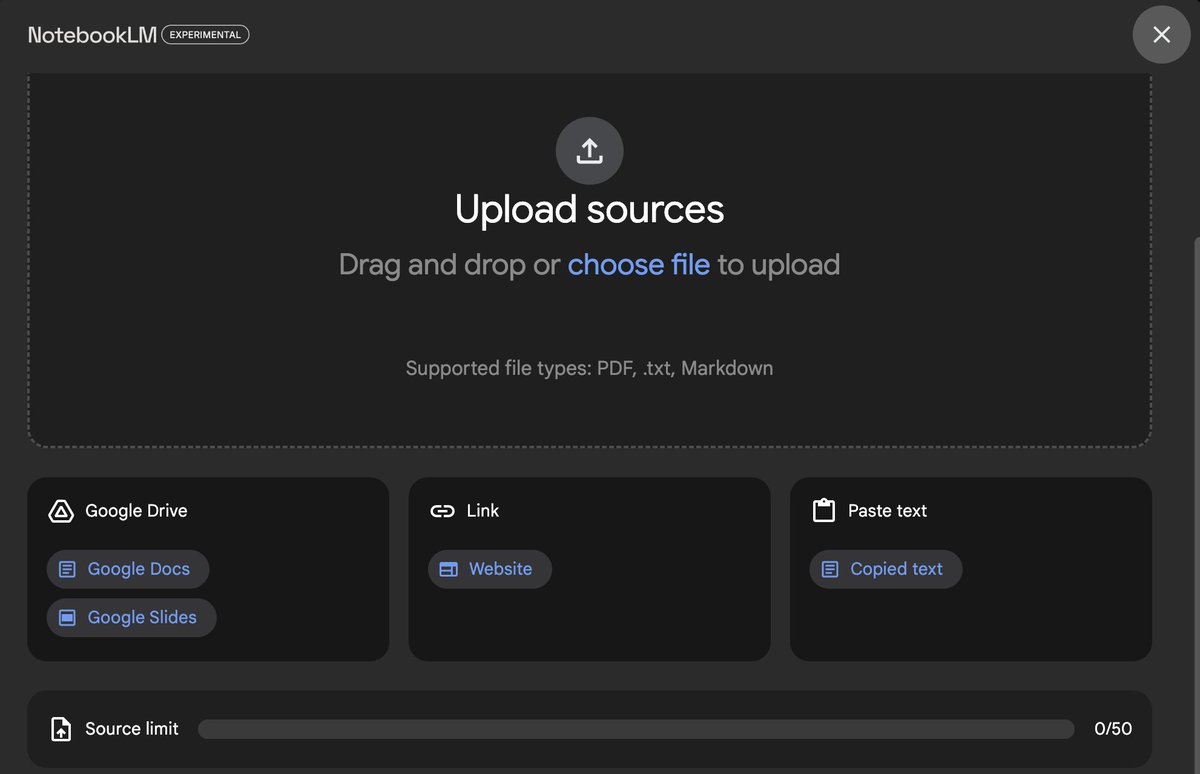

Small correction — this model more or less does the stuff o1 does, since last year, and consistently shows up. At a fraction of the cost of o1.

Just try it. It’s totally free for the moment since you ingress to the agentive workflow via ChatGPT

ChatGPT - No-Code Copilot  Build Apps & Games from Words!

29/78

@sauerlo

The 404 is the tinygrad test. We are the test subjects.

30/78

@sunsettler

Have you read Crystal Society?

31/78

@akhileshutup

They took it down lmao

32/78

@TeslaHomelander

Giving power to true artists to form the future

33/78

@RatingsKick

404

34/78

@arnaud_petitpas

Can't access, blocked due to OAI policy it says

35/78

@jessyseonoob

If you can copy-paste in codepen please

36/78

@xmusfk

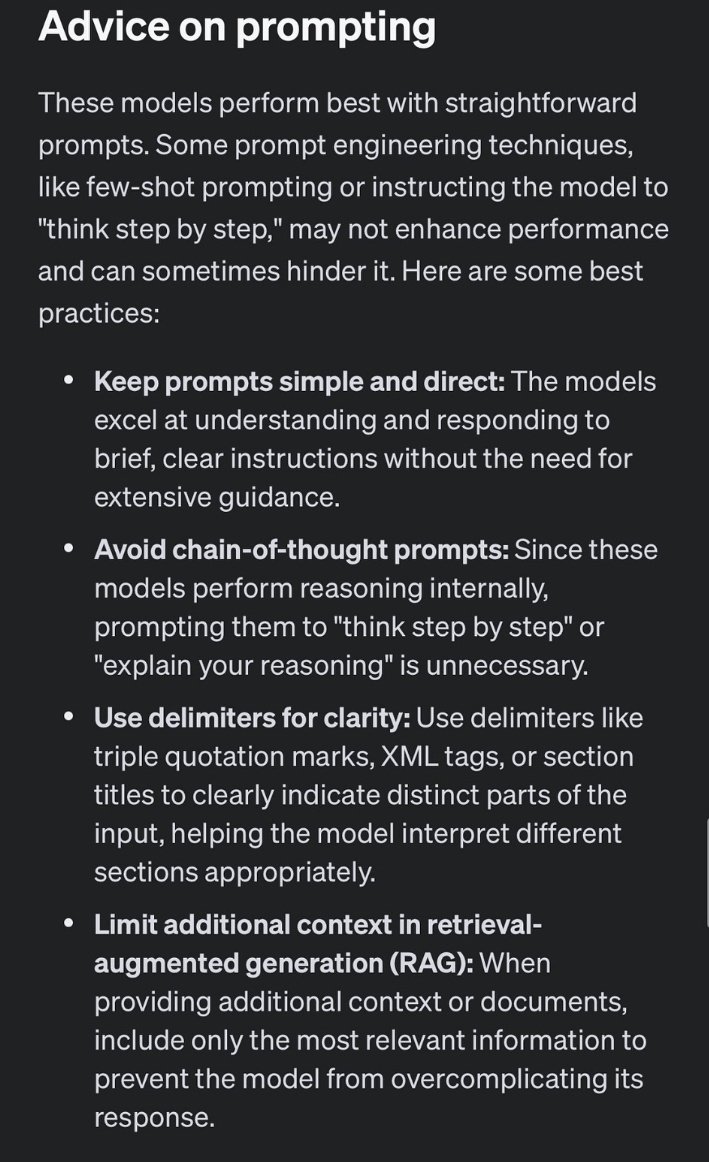

If I am not wrong, you used a prompt engineering technique called Chain of Thought, which might not work well with the o1 model according to the documentation. Here is the tweet.

[Quoted tweet]

o1 experts, please follow these instructions instead of trying your out of the box logics.

37/78

@ludvonrand

38/78

@Sachin1981KUMAR

I feel it's not the IQ that is impressive but its speed compared to the human mind.

It might have a higher IQ than an above-average human being, but there is no comparison to the speed with which it can solve problems. Not sure how that is being measured.

39/78

@dhtikna

Have you tried Sonnet 3.5? In some benchmarks it still beats o1 in coding.

40/78

@LukeElin

Been exploring and experimenting all weekend with it. Very impressed in some ways but underwhelmed in others.

Mixed bag, but the future of these models looks bright.

41/78

@RBoorsma

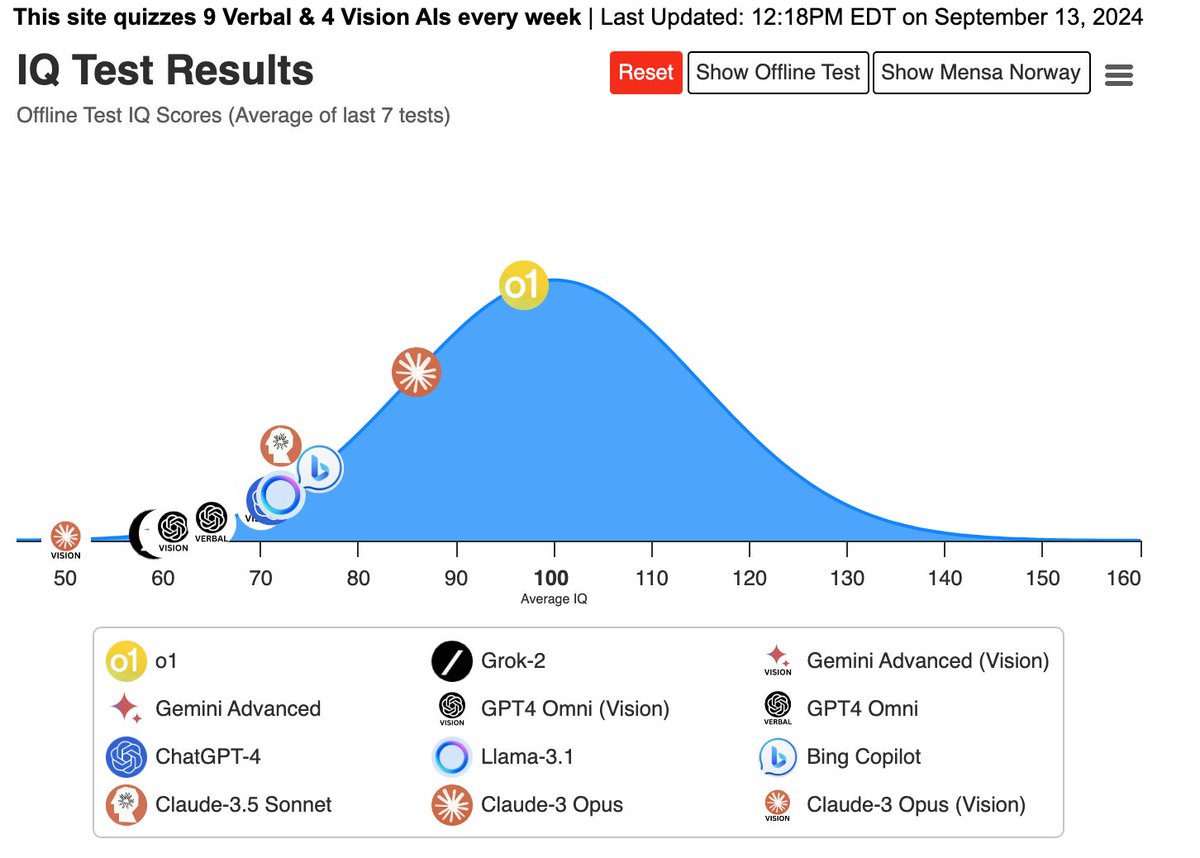

Study to test AI IQ:

[Quoted tweet]

Just plotted the new @OpenAI model on my AI IQ tracking page.

Note that this test is an offline-only IQ quiz that a Mensa member created for my testing, which is *not in any AI training data* (so scores are lower than for public IQ tests.)

OpenAI's new model does very well

42/78

@programmer_ke

openai police shut down your link

43/78

@DmitriyLeybel

Lol

45/78

@alocinotasor

I'll wait till its IQ measures mine.

46/78

@alex33902241

(At all) is crazy levels of delusion

47/78

@HaydnMartin_

Feels like we're very close to describing a change and a PR subsequently appearing.

48/78

@platosbeard69

I've had o1-mini give better coding solutions than o1-preview some of the time and the speed makes initial iteration on poorly specified natural language requests much nicer

49/78

@maxalgorhythm

404 not found on the chatgpt share link

50/78

@reiver

51/78

@ykssaspassky

lol it rewrote it for me - copy paste from GitHub

52/78

@muad_deab

"404 Not Found"

53/78

@LucaMiglioli185

I'm done

54/78

@uber_security

It's.. "robust", within a "framework".

So far 2/3 of the code ran on the first try.

55/78

@Kingtylernash

Have observed the same with hard coding problems, on which it usually couldn't help me before

56/78

@ITendoI

Guys... he said the "I" word.

57/78

@mario_meissner

What’s the difference between the current Cursor capabilities and the RL environment you describe?

I feel like I can already have a pretty much automated loop where I just supervise and give the next order.

58/78

@HX0DXs

could you please screenshot the test? it's giving a 404

59/78

@bruce_lambert

First model capable of programming? Uh oh, I better delete all that working code (in SAS, bash, Lisp, and Python) that AI has written for me since December 2022.

60/78

@OccupyingM

what's your guess on how and why it works?

61/78

@Xuniverse_

sorry, we will get superintelligence soon which can write programming codes.

62/78

@silxapp

openai fan boys is another thing

63/78

@0xAyush1

but can it build an open source autopilot driving software?

64/78

@crypto_nobody_

o1 vs Claude, Claude won in my testing when it came to coding

65/78

@drapersgulld

Try o1-mini; I have found better general performance with it for now.

[Quoted tweet]

I think people are totally misunderstanding that you should be using o1-mini to run your coding + math tests.

OpenAI didn’t make this too clear in the primary o1 card but the o1-mini post (link below) makes this super clear.

On costs … o1-mini is around 30% cheaper than 4o.

66/78

@sameed_ahmad12

I think they took your link down.

67/78

@CreeK_

@sama "blocked due to your policies".. can you do some magic? We just want to see what George Hotz saw..

68/78

@leo11market

Is it better than Claude 3.5 in python programming?

69/78

@purusa0x6c

demn I got this

70/78

@Pomirkovany

Yeah dude, writing tinygrad tests is very impressive and proof that it's a capable programmer

71/78

@PoudelAarogya

truly the o1 is great. here is the reason:

72/78

@MoeWatn

Uh?

73/78

@DCDqyTu7V556229

Shared conversation seems deleted.

74/78

@yajusempaihomo

the conversation is 404. did you pour your whole code base into o1-preview? or did it just do the job with like one file and a few hints?

75/78

@uki156

What does this mean "capable of programming at all"? I've been using models since GPT3 to do programming with a lot of satisfaction, and they've been getting better with each new release.

Your tweet is worded like I shouldn't believe my own eyes

76/78

@lu_Z2g

I don't get the IQ claims. If it had the intelligence of a 120 IQ human or even lower, it would be AGI. It's clearly not AGI. Its understanding completely breaks down on out of distribution questions.

77/78

@cosmichaosis

Higher IQ than me.

78/78

@MHATZL101

All bullshyt the fukking thing can’t even do a basic chat with a human being for a hiring process like human resources. It’s so immediately and easily confused it is ridiculously inefficient and does not work at all. Inoperable.
