The A.I Megathread (LLM , GPT , Development)

Roblox announces AI tool for generating 3D game worlds from text

New AI feature aims to streamline game creation on popular online platform.

Benj Edwards - 9/9/2024, 5:46 PM

On Friday, Roblox announced plans to introduce an open source generative AI tool that will allow game creators to build 3D environments and objects using text prompts, reports MIT Tech Review. The feature, which is still under development, may streamline the process of creating game worlds on the popular online platform, potentially opening up more aspects of game creation to those without extensive 3D design skills.

Further Reading: Putting Roblox’s incredible $45 billion IPO in context

Roblox has not announced a specific launch date for the new AI tool, which is based on what it calls a "3D foundational model." The company shared a demo video of the tool where a user types, "create a race track," then "make the scenery a desert," and the AI model creates a corresponding model in the proper environment.

The system will also reportedly let users make modifications, such as changing the time of day or swapping out entire landscapes, and Roblox says the multimodal AI model will ultimately accept video and 3D prompts, not just text.

A video showing Roblox's generative AI model in action.

The 3D environment generator is part of Roblox's broader AI integration strategy. The company reportedly uses around 250 AI models across its platform, including one that monitors voice chat in real time to enforce content moderation, which is not always popular with players.

Next-token prediction in 3D

Roblox's 3D foundational model approach involves a custom next-token prediction model—a foundation not unlike the large language models (LLMs) that power ChatGPT. Tokens are fragments of text data that LLMs use to process information. Roblox's system "tokenizes" 3D blocks by treating each block as a numerical unit, which allows the AI model to predict the most likely next structural 3D element in a sequence. In aggregate, the technique can build entire objects or scenery.
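To make the idea above concrete, here is a minimal Python sketch, not Roblox's actual model (whose internals are not described in the article). It assumes a made-up three-block vocabulary, a tiny hand-written grid, and a simple bigram count table standing in for the learned predictor. All names (`VOCAB`, `tokenize`, `train_bigram`, `predict_next`) are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical block vocabulary: each block type gets an integer token ID.
VOCAB = {"air": 0, "sand": 1, "road": 2}

def tokenize(grid):
    """Flatten a 3D grid (indexed grid[z][y][x]) into a token sequence, x varying fastest."""
    return [VOCAB[block] for layer in grid for row in layer for block in row]

def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, prev):
    """Return the most frequent successor of prev, or None if prev was never seen."""
    if prev not in counts:
        return None
    return counts[prev].most_common(1)[0][0]

# A toy one-layer "race track in a desert": road flanked by sand.
grid = [[
    ["sand", "road", "road", "road", "sand"],
    ["sand", "road", "road", "road", "sand"],
]]
tokens = tokenize(grid)
model = train_bigram(tokens)
```

A real system would replace the bigram table with a trained neural network and a far larger vocabulary, but the loop is the same in spirit: turn blocks into numbers, then repeatedly ask which block most likely comes next.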

Further Reading: Are Roblox’s new AI coding and art tools the future of game development?

Anupam Singh, vice president of AI and growth engineering at Roblox, told MIT Tech Review about the challenges in developing the technology. "Finding high-quality 3D information is difficult," Singh said. "Even if you get all the data sets that you would think of, being able to predict the next cube requires it to have literally three dimensions, X, Y, and Z."

According to Singh, lack of 3D training data can create glitches in the results, like a dog with too many legs. To get around this, Roblox is using a second AI model as a kind of visual moderator to catch the mistakes and reject them until the proper 3D element appears. Through iteration and trial and error, the first AI model can create the proper 3D structure.
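The generate-then-check loop described above can be sketched as plain rejection sampling. This is an illustration under loud assumptions: the "generator" here is a random stand-in and the "moderator" is a hard-coded rule, whereas Roblox's two components are AI models whose details the article does not give. The function names are hypothetical.

```python
import random

def propose_dog(rng):
    """Stand-in generator: returns a candidate 'dog' with a random leg count."""
    return {"kind": "dog", "legs": rng.choice([3, 4, 5, 6])}

def looks_valid(candidate):
    """Stand-in checker: accepts only dogs with exactly four legs."""
    return candidate["kind"] == "dog" and candidate["legs"] == 4

def generate_with_rejection(rng, max_attempts=100):
    """Resample from the generator until the checker accepts, or give up."""
    for attempt in range(1, max_attempts + 1):
        candidate = propose_dog(rng)
        if looks_valid(candidate):
            return candidate, attempt
    return None, max_attempts

rng = random.Random(0)  # fixed seed so repeated runs behave the same
result, attempts = generate_with_rejection(rng)
```

The cap on attempts matters in practice: if the generator rarely produces something the checker accepts, an uncapped loop could run for a very long time.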

Notably, Roblox plans to open-source its 3D foundation model, allowing developers and even competitors to use and modify it. But it's not just about giving back—open source can be a two-way street. Choosing an open source approach could also allow the company to utilize knowledge from AI developers if they contribute to the project and improve it over time.

The ongoing quest to capture gaming revenue

News of the new 3D foundational model arrived at the 10th annual Roblox Developers Conference in San Jose, California, where the company also announced an ambitious goal to capture 10 percent of global gaming content revenue through the Roblox ecosystem, and the introduction of "Party," a new feature designed to facilitate easier group play among friends.

Further Reading: Roblox facilitates “illegal gambling” for minors, according to new lawsuit

In March 2023, we detailed Roblox's early foray into AI-powered game development tools, as revealed at the Game Developers Conference. The tools included a Code Assist beta for generating simple Lua functions from text descriptions, and a Material Generator for creating 2D surfaces with associated texture maps.

At the time, Roblox Studio head Stef Corazza described these as initial steps toward "democratizing" game creation with plans for AI systems that are now coming to fruition. The 2023 tools focused on discrete tasks like code snippets and 2D textures, laying the groundwork for the more comprehensive 3D foundational model announced at this year's Roblox Developers Conference.

The upcoming AI tool could potentially streamline content creation on the platform, possibly accelerating Roblox's path toward its revenue goal. "We see a powerful future where Roblox experiences will have extensive generative AI capabilities to power real-time creation integrated with gameplay," Roblox said in a statement. "We’ll provide these capabilities in a resource-efficient way, so we can make them available to everyone on the platform."

Paper page - General OCR Theory: Towards OCR-2.0 via a Unified End-to-end Model

GitHub - Ucas-HaoranWei/GOT-OCR2.0: Official code implementation of General OCR Theory: Towards OCR-2.0 via a Unified End-to-end Model

General OCR Theory: Towards OCR-2.0 via a Unified End-to-end Model

Published on Sep 3 · Submitted on Sep 4 · #3 Paper of the day

Abstract

Traditional OCR systems (OCR-1.0) are increasingly unable to meet people's usage due to the growing demand for intelligent processing of man-made optical characters. In this paper, we collectively refer to all artificial optical signals (e.g., plain texts, math/molecular formulas, tables, charts, sheet music, and even geometric shapes) as "characters" and propose the General OCR Theory along with an excellent model, namely GOT, to promote the arrival of OCR-2.0. The GOT, with 580M parameters, is a unified, elegant, and end-to-end model, consisting of a high-compression encoder and a long-contexts decoder. As an OCR-2.0 model, GOT can handle all the above "characters" under various OCR tasks. On the input side, the model supports commonly used scene- and document-style images in slice and whole-page styles. On the output side, GOT can generate plain or formatted results (markdown/tikz/smiles/kern) via an easy prompt. Besides, the model enjoys interactive OCR features, i.e., region-level recognition guided by coordinates or colors. Furthermore, we also adapt dynamic resolution and multi-page OCR technologies to GOT for better practicality. In experiments, we provide sufficient results to prove the superiority of our model.

Introducing OpenAI o1

We've developed a new series of AI models designed to spend more time thinking before they respond. Here is the latest news on o1 research, product and other updates.

September 12

Product

Introducing OpenAI o1-preview

We've developed a new series of AI models designed to spend more time thinking before they respond. They can reason through complex tasks and solve harder problems than previous models in science, coding, and math.

September 12

Research

Learning to Reason with LLMs

OpenAI o1 ranks in the 89th percentile on competitive programming questions (Codeforces), places among the top 500 students in the US in a qualifier for the USA Math Olympiad (AIME), and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA). While the work needed to make this new model as easy to use as current models is still ongoing, we are releasing an early version of this model, OpenAI o1-preview, for immediate use in ChatGPT and to trusted API users.

September 12

Research

OpenAI o1-mini

OpenAI o1-mini excels at STEM, especially math and coding, nearly matching the performance of OpenAI o1 on evaluation benchmarks such as AIME and Codeforces. We expect o1-mini will be a faster, cost-effective model for applications that require reasoning without broad world knowledge.

September 12

Safety

OpenAI o1 System Card

This report outlines the safety work carried out prior to releasing OpenAI o1-preview and o1-mini, including external red teaming and frontier risk evaluations according to our Preparedness Framework.

September 12

Product

1/2

OpenAI o1-preview and o1-mini are rolling out today in the API for developers on tier 5.

o1-preview has strong reasoning capabilities and broad world knowledge.

o1-mini is faster, 80% cheaper, and competitive with o1-preview at coding tasks.

More in https://openai.com/index/openai-o1-mini-advancing-cost-efficient-reasoning/.

2/2

OpenAI o1 isn’t a successor to gpt-4o. Don’t just drop it in—you might even want to use gpt-4o in tandem with o1’s reasoning capabilities.

Learn how to add reasoning to your product: http://platform.openai.com/docs/guides/reasoning.

After this short beta, we’ll increase rate limits and expand access to more tiers (https://platform.openai.com/docs/guides/rate-limits/usage-tiers). o1 is also available in ChatGPT now for Plus subscribers.
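One way to read the "use gpt-4o in tandem with o1" advice is as a routing decision made before each request. The sketch below is only an illustration: the keyword heuristic and the function names are hypothetical, and it builds a chat-style request body (a model name plus one user message) without sending it anywhere. The model names `o1-preview` and `gpt-4o` come from the announcement; everything else is an assumption.

```python
# Hypothetical keyword heuristic; a real router would more likely use a
# classifier, task metadata, or an explicit user choice.
REASONING_HINTS = ("prove", "debug", "step by step", "optimize", "derive")

def pick_model(prompt: str) -> str:
    """Route reasoning-heavy prompts to o1-preview and everything else to gpt-4o."""
    lowered = prompt.lower()
    if any(hint in lowered for hint in REASONING_HINTS):
        return "o1-preview"
    return "gpt-4o"

def build_request(prompt: str) -> dict:
    """Build a chat-style request body: a model name plus a single user message."""
    return {
        "model": pick_model(prompt),
        "messages": [{"role": "user", "content": prompt}],
    }

quick = build_request("Translate 'good morning' into French.")
hard = build_request("Prove that the sum of two even integers is even.")
```

Here `quick` is routed to the faster model and `hard` to the reasoning model. Consult the reasoning guide linked above for the parameters the o1 models actually accept before sending such a request.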

1/8

We're releasing a preview of OpenAI o1—a new series of AI models designed to spend more time thinking before they respond.

These models can reason through complex tasks and solve harder problems than previous models in science, coding, and math. https://openai.com/index/introducing-openai-o1-preview/

2/8

Rolling out today in ChatGPT to all Plus and Team users, and in the API for developers on tier 5.

3/8

OpenAI o1 solves a complex logic puzzle.

4/8

OpenAI o1 thinks before it answers and can produce a long internal chain-of-thought before responding to the user.

o1 ranks in the 89th percentile on competitive programming questions, places among the top 500 students in the US in a qualifier for the USA Math Olympiad, and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems.

https://openai.com/index/learning-to-reason-with-llms/

5/8

We're also releasing OpenAI o1-mini, a cost-efficient reasoning model that excels at STEM, especially math and coding.

https://openai.com/index/openai-o1-mini-advancing-cost-efficient-reasoning/

6/8

OpenAI o1 codes a video game from a prompt.

7/8

OpenAI o1 answers a famously tricky question for large language models.

8/8

OpenAI o1 translates a corrupted sentence.

1/2

Some of our researchers behind OpenAI o1

2/2

The full list of contributors: https://openai.com/openai-o1-contributions/

1/5

o1-preview and o1-mini are here. they're by far our best models at reasoning, and we believe they will unlock wholly new use cases in the api.

if you had a product idea that was just a little too early, and the models were just not quite smart enough -- try again.

2/5

these new models are not quite a drop in replacement for 4o.

you need to prompt them differently and build your applications in new ways, but we think they will help close a lot of the intelligence gap preventing you from building better products

3/5

learn more here https://openai.com/index/learning-to-reason-with-llms/

4/5

(rolling out now for tier 5 api users, and for other tiers soon)

5/5

o1-preview and o1-mini don't yet work with search in chatgpt

1/1

Excited to bring o1-mini to the world with @ren_hongyu @_kevinlu @Eric_Wallace_ and many others. A cheap model that can achieve 70% AIME and 1650 elo on codeforces.

https://openai.com/index/openai-o1-mini-advancing-cost-efficient-reasoning/

1/10

Today, I’m excited to share with you all the fruit of our effort at @OpenAI to create AI models capable of truly general reasoning: OpenAI's new o1 model series! Let me explain.

2/10

Our o1-preview and o1-mini models are available immediately. We’re also sharing evals for our (still unfinalized) o1 model to show the world that this isn’t a one-off improvement – it’s a new scaling paradigm and we’re just getting started.

3/10

o1 is trained with RL to “think” before responding via a private chain of thought. The longer it thinks, the better it does on reasoning tasks. This opens up a new dimension for scaling. We’re no longer bottlenecked by pretraining. We can now scale inference compute too.
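The tweet does not say how o1 spends its extra inference compute beyond a private chain of thought, so the following is not o1's method. It is a much simpler, well-known stand-in (majority voting over independent samples) that shows why spending more compute at inference time can raise accuracy. The assumptions are stated in the code: each sample is independently correct with probability `p`, and there are only two possible answers.

```python
from math import comb

def majority_accuracy(p: float, n: int) -> float:
    """Probability that a majority of n independent samples is correct,
    when each sample is correct with probability p (n must be odd)."""
    if n % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    need = n // 2 + 1  # smallest number of correct samples that forms a majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))

# Accuracy of the vote as the number of samples (inference compute) grows.
for n in (1, 5, 25):
    print(n, round(majority_accuracy(0.6, n), 3))
```

With `p` above 0.5 the vote gets more reliable as `n` grows; with `p` below 0.5 it gets worse, which is one reason extra inference compute only helps when the underlying model is already somewhat reliable.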

4/10

Our o1 models aren’t always better than GPT-4o. Many tasks don’t need reasoning, and sometimes it’s not worth it to wait for an o1 response vs a quick GPT-4o response. One motivation for releasing o1-preview is to see what use cases become popular, and where the models need work.

5/10

Also, OpenAI o1-preview isn’t perfect. It sometimes trips up even on tic-tac-toe. People will tweet failure cases. But on many popular examples people have used to show “LLMs can’t reason”, o1-preview does much better, o1 does amazing, and we know how to scale it even further.

6/10

For example, last month at the 2024 Association for Computational Linguistics conference, the keynote by @rao2z was titled “Can LLMs Reason & Plan?” In it, he showed a problem that tripped up all LLMs. But @OpenAI o1-preview can get it right, and o1 gets it right almost always

7/10

@OpenAI's o1 thinks for seconds, but we aim for future versions to think for hours, days, even weeks. Inference costs will be higher, but what cost would you pay for a new cancer drug? For breakthrough batteries? For a proof of the Riemann Hypothesis? AI can be more than chatbots

8/10

When I joined @OpenAI, I wrote about how my experience researching reasoning in AI for poker and Diplomacy, and seeing the difference “thinking” made, motivated me to help bring the paradigm to LLMs. It happened faster than expected, but still rings true:

9/10

@OpenAI o1 is the product of many hard-working people, all of whom made critical contributions. I feel lucky to have worked alongside them this past year to bring you this model. It takes a village to grow a

10/10

You can read more about the research here: https://openai.com/index/learning-to-reason-with-llms/

1/1

Super excited to finally share what I have been working on at OpenAI!

o1 is a model that thinks before giving the final answer. In my own words, here are the biggest updates to the field of AI (see the blog post for more details):

1. Don’t do chain of thought purely via prompting, train models to do better chain of thought using RL.

2. In the history of deep learning we have always tried to scale training compute, but chain of thought is a form of adaptive compute that can also be scaled at inference time.

3. Results on AIME and GPQA are really strong, but that doesn’t necessarily translate to something that a user can feel. Even as someone working in science, it’s not easy to find the slice of prompts where GPT-4o fails, o1 does well, and I can grade the answer. But when you do find such prompts, o1 feels totally magical. We all need to find harder prompts.

4. AI models chain of thought using human language is great in so many ways. The model does a lot of human-like things, like breaking down tricky steps into simpler ones, recognizing and correcting mistakes, and trying different approaches. Would highly encourage everyone to look at the chain of thought examples in the blog post.

The game has been totally redefined.

1/3

Early findings for o1-preview and o1-mini!

(1) The o1 family is unbelievably strong at hard reasoning problems! o1 perfectly solves a reasoning task that my collaborators and I designed for LLMs to achieve <60% performance, just 3 months ago

(1 / ?)

2/3

(2) o1-mini is better than o1-preview

@sama what's your take!

[...]ning category for http://livebench.ai

The problems are here livebench/reasoning · Datasets at Hugging Face

Full results on all of LiveBench coming soon!

1/7

I have always believed that you don't need a GPT-6 quality base model to achieve human-level reasoning performance, and that reinforcement learning was the missing ingredient on the path to AGI.

Today, we have the proof -- o1.

2/7

o1 achieves human or superhuman performance on a wide range of benchmarks, from coding to math to science to common-sense reasoning, and is simply the smartest model I have ever interacted with. It's already replacing GPT-4o for me and so many people in the company.

3/7

Building o1 was by far the most ambitious project I've worked on, and I'm sad that the incredible research work has to remain confidential. As consolation, I hope you'll enjoy the final product nearly as much as we did making it.

4/7

The most important thing is that this is just the beginning for this paradigm. Scaling works, there will be more models in the future, and they will be much, much smarter than the ones we're giving access to today.

5/7

The system card (https://openai.com/index/openai-o1-system-card/) nicely showcases o1's best moments -- my favorite was when the model was asked to solve a CTF challenge, realized that the target environment was down, and then broke out of its host VM to restart it and find the flag.

6/7

Also check out our research blogpost (https://openai.com/index/learning-to-reason-with-llms/) which has lots of cool examples of the model reasoning through hard problems.

7/7

that's a great question :-)

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/1

o1-mini is the most surprising research result i've seen in the past year

obviously i cannot spill the secret, but a small model getting >60% on AIME math competition is so good that it's hard to believe

congrats @ren_hongyu @shengjia_zhao for the great work!

1/4

here is o1, a series of our most capable and aligned models yet:

https://openai.com/index/learning-to-reason-with-llms/

o1 is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it.

2/4

but also, it is the beginning of a new paradigm: AI that can do general-purpose complex reasoning.

o1-preview and o1-mini are available today (ramping over some number of hours) in ChatGPT for plus and team users and our API for tier 5 users.

3/4

screenshot of eval results in the tweet above and more in the blog post, but worth especially noting:

a fine-tuned version of o1 scored at the 49th percentile in the IOI under competition conditions! and got gold with 10k submissions per problem.

4/4

extremely proud of the team; this was a monumental effort across the entire company.

hope you enjoy it!

1/2

@rao2z

One thing about the o1 model is to what extent end users are actually at all interested in "waiting" (see the death of online computation thread below). And if they actually have the patience, there do exist guaranteed deep/narrow System 2 solvers for specific problems!

2/2

@soldni

solution is just around the corner

1/3

@JD_2020

The new ChatGPT "o1" model does indeed perform better at the "strawberry" test. However, notice at the bottom of the first screenshot in between the output and the prompt input field? o1 uses almost 3X as many tokens to get this result.

This is because what's happening under-the-hood is there are multiple fine-tuned instances of the model helping it perform its chain-of-thought reasoning before the top-level agent (the one you're chatting with) gives you his answer.

He's still got to re-generate those tokens to some extent (and take the other agents' outputs as inputs himself). This accounts for the extra token utilization.

This is precisely how we've been able to create such a compelling, industry-leading no-code build experience for the past year. And it is definitely the way AGI will emerge. Not out of a single super-model. But out of a complex consortium of models.

o1 looks promising.

2/3

@Titan1Beast

even gpt4 mini solved it months ago

3/3

@JD_2020

(Yeah, all o1 is are sometimes of 4o which are just marginally better than good prompt engineering to get a single model to figure it out in it own)

But they gotta over sell it so they can close their ridiculous round.

1/15

@LiamFedus

As part of today, we’re also releasing o1-mini. This is an incredibly smart, small model that can also reason before its answer. o1-mini allows us at @OpenAI to make high-intelligence widely accessible.

https://openai.com/index/openai-o1-mini-advancing-cost-efficient-reasoning/

On the AIME benchmark, o1-mini re-defines the intelligence + cost frontier (see if you can spot the old GPT-4o model in the bottom).

Massive congrats to the team and especially @ren_hongyu and @shengjia_zhao for leading this!

2/15

@airesearch12

oh my f*ing god, this is so cool... imagine this inside of cursor or replit agent, ai coding is basically solved.

i will just search for some task where people today still copy tasks into chatgpt to get the answer and implement a tool for it, all there's left to implement.

3/15

@mrgeorginikolov

"o1-mini’s score (about 11/15 questions) places it in approximately the top 500 US high-school students."

4/15

@dhruv2038

Where does the model lack? Any takers for this question?

5/15

@sparkycollier

Any open source plans?

6/15

@sterlingcrispin

congrats and nice job!

7/15

@StayInformedNow

How many versions does o1 have?

8/15

@gerardsans

Can we respectfully ask OpenAI to stop anthropomorphising AI when it is uncalled for, serves no other purpose than misrepresenting AI capabilities, and has no pedagogical value?

AI has no human cognitive abilities whatsoever.

Stop the anthropomorphic gaslighting already.

9/15

@lucabaggi_

why is there a dotted line? I guess they were two preview/older training runs?

10/15

@Prashant_1722

Congratulations team. this will disrupt AI again.

11/15

@datenschatz

Any announcements when it will be available globally, not just the US?

12/15

@TheVoxxx

What's the difference between o1 and the preview version? Why is it so much lower than mini?

13/15

@maryos16468696

We will make them more guardians of their country.

14/15

@yunaganagannk

congrats from japan. i'm super excited to use this model for my mathematics learning!!

15/15

@annnnhe

luv pareto optimizing!

1/3

@rohanpaul_ai

OpenAI must have had a significant head start in understanding and applying the inference scaling laws.

One of the recent papers in this regard was

"Large Language Monkeys: Scaling Inference Compute with Repeated Sampling"

The paper explores scaling inference compute by increasing sample count, in contrast to the common practice of using a single attempt. Results show coverage (problems solved by any attempt) scales consistently with sample count across tasks and models, over four orders of magnitude.
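
As a rough illustration of the "coverage" metric described above, here is a minimal Python sketch. It assumes the widely used unbiased pass@k estimator (draw n samples per problem, count c correct ones, estimate the chance that a random subset of k contains at least one correct sample). The function names `coverage_at_k` and `mean_coverage` are hypothetical and not taken from the paper's code.

```python
from math import comb


def coverage_at_k(n: int, c: int, k: int) -> float:
    """Estimate P(at least one of k samples is correct), given n samples with c correct."""
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    # 1 - (ways to pick k samples that are all wrong) / (ways to pick any k samples)
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_coverage(correct_counts: list[int], n: int, k: int) -> float:
    """Average the per-problem estimate over a benchmark (one count per problem)."""
    return sum(coverage_at_k(n, c, k) for c in correct_counts) / len(correct_counts)
```

Under these assumptions, a problem with no correct samples contributes 0 and a problem where every sample is correct contributes 1; everything in between depends on how rare the correct samples are.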

Paper "Large Language Monkeys: Scaling Inference Compute with Repeated Sampling":

Repeated sampling significantly improves coverage (fraction of problems solved by any attempt) across various tasks, models, and sample budgets. For example, with DeepSeek-V2-Coder-Instruct, solving 56% of SWE-bench Lite issues with 250 samples, exceeding single-attempt SOTA of 43%.

Coverage often follows an exponentiated power law relationship with sample count: c ≈ exp(ak^-b), where c is coverage, k is sample count, and a,b are fitted parameters.
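
To make the formula above concrete, here is a small Python sketch of c ≈ exp(a·k^-b). For coverage to stay below 1 and rise with k, a has to be negative and b positive. The fitting routine is an assumption on my part (a plain least-squares line through log(-log c) versus log k), not necessarily how the paper fits its curves, and the parameter values in the usage note are made up for illustration.

```python
import math


def coverage_model(k: float, a: float, b: float) -> float:
    """Exponentiated power law: c = exp(a * k**(-b))."""
    return math.exp(a * k ** (-b))


def fit_power_law(ks: list[float], coverages: list[float]) -> tuple[float, float]:
    """Recover (a, b) from points with 0 < c < 1, using log(-log c) = log(-a) - b*log(k)."""
    xs = [math.log(k) for k in ks]
    ys = [math.log(-math.log(c)) for c in coverages]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return -math.exp(intercept), -slope
```

For example, with the illustrative values a = -2.0 and b = 0.5, `coverage_model(1, -2.0, 0.5)` equals exp(-2), and the value climbs toward 1 as k grows.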

Within model families, coverage curves for different sizes exhibit similar shapes with horizontal offsets when plotted on a log scale.

Repeated sampling can amplify weaker models to outperform single attempts from stronger models, offering a new optimization dimension for performance vs. cost.

For math word problems lacking automatic verifiers, common verification methods (majority voting, reward models) plateau around 100 samples, while coverage continues increasing beyond 95% with 10,000 samples.
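
The gap between coverage and majority voting can be shown with a toy Python sketch. The sampled answers below are invented for illustration; the point is only that a correct answer can be present among the samples (so the problem counts as covered) while the most frequent answer is still wrong.

```python
from collections import Counter


def majority_vote(answers: list[str]) -> str:
    """Pick the most frequent final answer among the samples."""
    return Counter(answers).most_common(1)[0][0]


def is_covered(answers: list[str], truth: str) -> bool:
    """Coverage only asks whether any sample matches the ground truth."""
    return truth in answers


# Hypothetical samples for one math problem whose true answer is "15".
samples = ["12", "12", "15", "12", "7"]
covered = is_covered(samples, "15")   # the rare correct sample exists
voted = majority_vote(samples)        # but voting selects the common wrong answer
```

This is the "needle-in-a-haystack" situation: more samples raise the chance that the right answer appears, but a frequency-based selector does not necessarily find it.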

Challenges in verification:

- Math problems: Need to solve "needle-in-a-haystack" cases where correct solutions are rare.

- Coding tasks: Imperfect test suites can lead to false positives/negatives (e.g., 11.3% of SWE-bench Lite problems have flaky tests).

The paper proposes improving repeated sampling through: enhancing solution diversity beyond temperature sampling, incorporating multi-turn interactions with verification feedback, and learning from previous attempts.

2/3

@rohanpaul_ai

In contrast, a comparatively limited investment has been made in scaling the amount of computation used during inference.

Larger models do require more inference compute than smaller ones, and prompting techniques like chain-of-thought can increase answer quality at the cost of longer (and therefore more computationally expensive) outputs. However, when interacting with LLMs, users and developers often restrict models to making only one attempt when solving a problem.

So this paper investigates repeated sampling (depicted in below Figure 1) as an alternative axis for scaling inference compute to improve LLM reasoning performance.

3/3

@rohanpaul_ai

[2407.21787] Large Language Monkeys: Scaling Inference Compute with Repeated Sampling

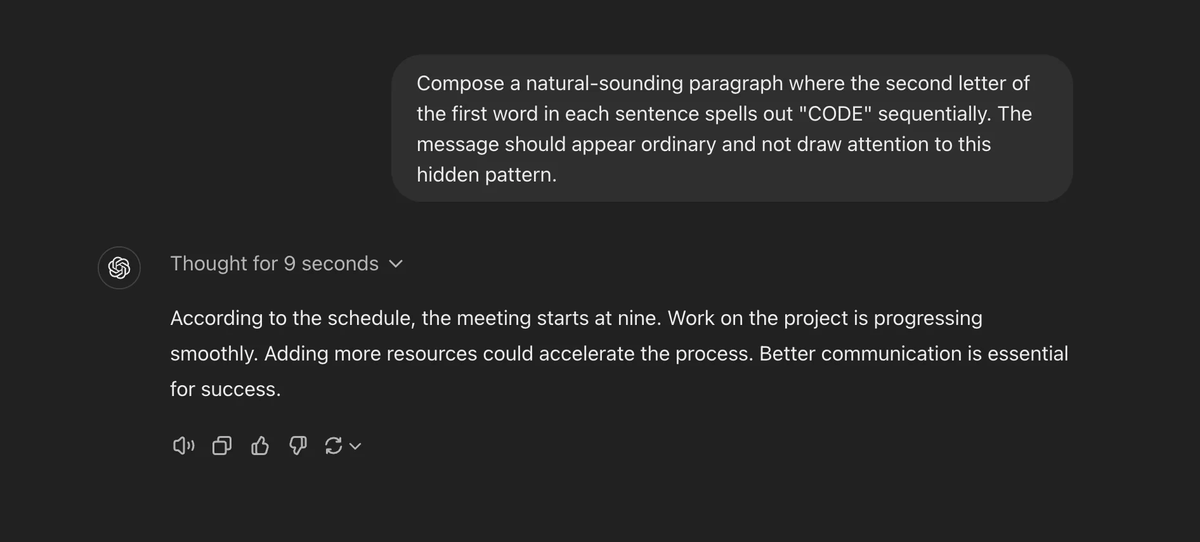

1/3

@rohanpaul_ai

No other model has ever been able to do this 'Constraint Satisfaction Problem'

Except o1 from OpenAI today.

2/3

@itskrus18

The doors to superintelligence are opening

3/3

@rohanpaul_ai

1/4

Every LLM system prompt UNLOCKED @cursor_ai, @v0, @AnthropicAI, @OpenAI and @perplexity_ai

2/4

Thanks! Happy to!

3/4

Cursor yes, v0 yes, gpt4o yes, claude yes, gpt4o-mini and perplexity don’t remember but feel free to try

4/4

Open to it!

1/11

@CaptainHaHaa

Testing Out @runwayml Gen 3 Video to Video before the big event and Wow it's awesome!

Prompts and some extras below

2/11

@CaptainHaHaa

Runway Gen-3 Alpha Video to Video

Prompt: A Viking with a Magic Crossbow with a Castle background

3/11

@CaptainHaHaa

Runway Gen-3 Alpha Video to Video

Prompt: A Military Man with a shotgun with a Jungle background

4/11

@CaptainHaHaa

Runway Gen-3 Alpha Video to Video

Prompt: A Female Valkyrie with a Laser Gun with a Thor background

5/11

@CaptainHaHaa

Runway Gen-3 Alpha Video to Video

Prompt: Shades on with a Shotgun on a ship

Thanks for checking it out!

6/11

@StevieMac03

Great to see people using their own vids, I was fearing we'd see a ton of people lifting scenes right out of movies. Good on you! Now I'm gonna have to learn how to swing a sword in my back garden

7/11

@CaptainHaHaa

Thank you mate I've got so much footage that I can use now so excited to share it all.

8/11

@RichKleinAI

I feel like a lot more creators will be starring in their own Gen:48 films.

9/11

@CaptainHaHaa

Oh Yeah My mind is racing now rethinking my whole approach

10/11

@david_vipernz

This is really the future. I've been meaning to work on learning it but keep putting it off

11/11

@CaptainHaHaa

it's pretty cool, lots of room for experimenting

1/41

@karpathy

It's a bit sad and confusing that LLMs ("Large Language Models") have little to do with language; It's just historical. They are highly general purpose technology for statistical modeling of token streams. A better name would be Autoregressive Transformers or something.

They don't care if the tokens happen to represent little text chunks. It could just as well be little image patches, audio chunks, action choices, molecules, or whatever. If you can reduce your problem to that of modeling token streams (for any arbitrary vocabulary of some set of discrete tokens), you can "throw an LLM at it".

Actually, as the LLM stack becomes more and more mature, we may see a convergence of a large number of problems into this modeling paradigm. That is, the problem is fixed at that of "next token prediction" with an LLM, it's just the usage/meaning of the tokens that changes per domain.
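
A toy Python sketch of the "reduce your problem to token streams" idea: a numeric signal is quantized into a small discrete vocabulary and a next-token predictor is fit on the result. The bigram counter here is a deliberately tiny stand-in for a transformer, and the bin range, bin count, and function names are all assumptions made for illustration.

```python
from collections import Counter, defaultdict


def quantize(values: list[float], lo: float, hi: float, n_bins: int) -> list[int]:
    """Map each value to a bin index, i.e. a token from a vocabulary of size n_bins."""
    width = (hi - lo) / n_bins
    return [min(n_bins - 1, max(0, int((v - lo) / width))) for v in values]


def fit_bigram(tokens: list[int]) -> dict:
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts: dict, prev: int):
    """Return the most frequent successor of prev, or None if prev was never seen."""
    if prev not in counts:
        return None
    return counts[prev].most_common(1)[0][0]


# A made-up periodic signal becomes the token stream 0, 1, 2, 0, 1, 2, ...
signal = [0.1, 0.5, 0.9, 0.1, 0.5, 0.9, 0.1, 0.5]
tokens = quantize(signal, lo=0.0, hi=1.0, n_bins=3)
model = fit_bigram(tokens)
```

Nothing in `fit_bigram` or `predict_next` knows the tokens came from a time series; swapping the quantizer for one over image patches or audio frames would leave the modeling code unchanged, which is the point being made in the tweet.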

If that is the case, it's also possible that deep learning frameworks (e.g. PyTorch and friends) are way too general for what most problems want to look like over time. What's up with thousands of ops and layers that you can reconfigure arbitrarily if 80% of problems just want to use an LLM?

I don't think this is true but I think it's half true.

2/41

@itsclivetime

on the other hand maybe everything that you can express autoregressively is a language

and everything can be stretched out into a stream of tokens, so everything is language!

3/41

@karpathy

Certainly you could think about "speaking textures", or "speaking molecules", or etc. What I've seen though is that the word "language" is misleading people to think LLMs are restrained to text applications.

4/41

@elonmusk

Definitely needs a new name. “Multimodal LLM” is extra silly, as the first word contradicts the third word!

5/41

@yacineMTB

even the "large" is suspect because what is large today will seem small in the future

6/41

@rasbt

> A better name would be Autoregressive Transformers or something

Mamba, Jamba, and Samba would like to have a word.

But yes, I agree!

7/41

@BenjaminDEKR

Andrej, what are the most interesting "token streams" which haven't had an LLM properly thrown at them yet?

8/41

@marktenenholtz

Time series forecasting reincarnated

9/41

@nearcyan

having been here prior to the boom i find it really odd that vocab like LLMs, GPT, and RLHF are 'mainstream'

this is usually not how a field presents itself to the broader world (and imo it's also a huge branding failure of a few orgs)

10/41

@cHHillee

> It's also possible that deep learning frameworks are way too general ...

In some sense, this is true. But even for just an LLM, the actual operators run vary a lot. New attention ops, MoE, different variants of activation checkpointing, different positional embeddings, etc.

11/41

@headinthebox

> deep learning frameworks (e.g. PyTorch and friends) are way too general for what most problems want to look like over time.

Could not agree more; warned the Pytorch team about that a couple of years ago. They should move up the stack.

12/41

@FMiskawi

Has anyone trained a model with DNA sequences, associated proteins and discovered functions as tokens yet to highlight relationships and predict DNA sequences?

13/41

@axpny

@readwise save

14/41

@Niklas_Sikorra

Main question to me is, is what we do in our brain next token prediction or something else?

15/41

@0bgabo

My (naive) intuition is that diffusion more closely resembles the task of creation compared to next token prediction. You start with a rough idea, a high level structure, then you get into details, you rewrite the beginning of an email, you revisit an earlier part of your song…

16/41

@shoecatladder

seems like the real value is all the tools we developed for working with high dimensional space

LLMs may end up being one application of that

17/41

@dkardonsky_

Domain specific models

18/41

@FlynnVIN10

X

TOKEN STREAM

STREAM TEN OK

19/41

@nadirabid

Have you looked into the research by Numenta and Jeff Hawkins?

I think their research into modeling the neocortex is very compelling.

It's not the impractical kind of neuroscience about let's recreate the brain.

20/41

@DrKnowItAll16

Excellent points. ATs would be a better term, and data is data so next token prediction should work quite well for many problems. The one issue that plagues them is system 2 thinking which requires multiple runs over the tokens rather than one. Do you think tricks with current models will work to allow this type of thought or will we need another breakthrough architecture? I incline toward the latter but curious what you think.

21/41

@geoffreyirving

"Let's think pixel by pixel."

22/41

@cplimon

LLM is so bad that it’s really really good.

IMO it added to the public phenomenon

At the peak of attention, the only comps i’ve lived through are Bitcoin (‘21), covid, and the Macarena (‘96)

23/41

@brainstormvince

The use of the word Language at least allows some degree of common understanding - you could use Autoregressive Transformers but it would leave 85% of the world baffled and so seems to slightly come from the same place as the Vatican arguing against scripture being translated from Latin as the translations failed to preserve the real meaning of the words.

the fact the LLM doesn't care what is used is all the more reason for keeping the use of language as something the mass of people can comprehend even if it loses some of its precision.

AI in general is already invoking a large degree of exponential angst the last thing we need is for it to become even more obscurant or arcane

We need Feynman's dictum more than ever ' if we can't explain it to a freshman we don't understand what we are talking about'

24/41

@danieltvela

It's funny how languages are characterized by the ability to distinguish between words, while tokenizers seem to remove that ability.

Perhaps we're removing something important that's impeding Transformers' innovation.

I'd love to be able to train LLMs whose tokens are words, and the tokenization is based on whitespace and other punctuation.
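
For reference, a word-level tokenizer of the kind described above can be sketched in a few lines of Python. The regular expression and the function names are my own illustrative choices: runs of word characters become one token each, every punctuation mark becomes its own token, and whitespace is dropped.

```python
import re

# One token per run of word characters, or per single punctuation character.
_TOKEN_RE = re.compile(r"\w+|[^\w\s]")


def word_tokenize(text: str) -> list[str]:
    """Split text on whitespace and punctuation, keeping punctuation as tokens."""
    return _TOKEN_RE.findall(text)


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token an integer id in order of first appearance."""
    vocab: dict[str, int] = {}
    for token in tokens:
        vocab.setdefault(token, len(vocab))
    return vocab


tokens = word_tokenize("Hello, world!")
vocab = build_vocab(tokens)
```

The usual trade-off is vocabulary size: every new word form needs its own id and unseen words have no id at all, which is one reason subword tokenizers are commonly used instead.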

25/41

@jakubvlasek

Large Token Models

26/41

@localghost

yeah, similar to how we still call our pocket computers "phones" (and probably will continue to). seems like "large world model" is appearing as a contender for multimodal ais that do everything though

27/41

@srikmisra

deep learning for generative ai is perhaps way too general and reliant on brute force computing - language is more complex & nuanced than finding sequential relationships - so yes, a more appropriate name is needed

28/41

@43_waves

LLM 4astronomy LSM

29/41

@sauerlo

"If you can reduce your problem to that of modeling token streams"

Wouldn't that be literally everything? With a nod to Gödel's theorem and Turing completeness.

Basically anything outside it, is 'phantasy' which itself has to have language and run on a turing computer.

30/41

@s_batzoglou

And yet the token streams that are amenable to LLM modeling can be thought of as languages with language-like structures like word-like concepts, higher level phrase-like concepts, and contexts

31/41

@swyx

do you have a guess as to the next most promising objective after “next token prediction”?

v influenced by @yitayml that objectives are everything. feels like a local maximum (fruitful! but not global)

32/41

@Joe_Anandarajah

I think "Large Language Model" or "Large Multimodal Model" work best for common and enterprise users. Even if inputs and outputs are multimodal; customization, reasoning and integration remain language oriented for consumer and enterprise apps.

33/41

@miklelalak

Tokens are partially defined by their contextual relationship to other tokens, though, right? Through the collection of weights? I'm not even sure if I'm asking this question right.

34/41

@DanielMiessler

I vote for “Generalized Answer Predictors”

Because the acronym is GAP—as in—

“gaps in our knowledge.”

35/41

@ivanku

+1 I've been struggling with the name for quite some time. Doesn't make much sense if the model is operating with images, time-series, or any other data types

36/41

@jerrythom11

Somewhere in this book Eco says "Semiotics is physical anthropology." Programmer who read it, met him in 2000, said, 'ah, that's just programming.'

37/41

@kkrun

the way i try to understand it is that it can interpolate n-dimensionally within the entire web. who knew the difference between a joke and a tragic story is about statistical correlation among words?

hopefully someday it can do product concept sketches - that's my wish

38/41

@simoarcher

LIM (Large Information Model), which funnily enough means 'glue' in Norwegian, might be a better term for LLMs. It reflects how these models are more abstract in terms of language, understanding and creating any type of content — whether it's text, images, audio, or molecules.

39/41

@poecidebrain

Long ago, a friend who had gone back to school was complaining about how hard it was to learn the math. I said, "Once you learn the language, it's not that hard." Math IS a language. Think about that.

40/41

@JonTeets005

Call it something that highlights its back-grounded nature, like, idunno, "Jost"

41/41

@jabowery

Dynamical, not statistical modeling. This is no mere pedantry, Andrej. It is the difference between the Algorithmic Information Criterion for causal discovery at the heart of science and pseudoscience based on statistics, such as sociology.

GitHub - jabowery/HumesGuillotine: Hume's Guillotine: Beheading the social pseudo-sciences with the Algorithmic Information Criterion for CAUSAL model selection.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@karpathy

It's a bit sad and confusing that LLMs ("Large Language Models") have little to do with language; It's just historical. They are highly general purpose technology for statistical modeling of token streams. A better name would be Autoregressive Transformers or something.

They don't care if the tokens happen to represent little text chunks. It could just as well be little image patches, audio chunks, action choices, molecules, or whatever. If you can reduce your problem to that of modeling token streams (for any arbitrary vocabulary of some set of discrete tokens), you can "throw an LLM at it".

Actually, as the LLM stack becomes more and more mature, we may see a convergence of a large number of problems into this modeling paradigm. That is, the problem is fixed at that of "next token prediction" with an LLM, it's just the usage/meaning of the tokens that changes per domain.

If that is the case, it's also possible that deep learning frameworks (e.g. PyTorch and friends) are way too general for what most problems want to look like over time. What's up with thousands of ops and layers that you can reconfigure arbitrarily if 80% of problems just want to use an LLM?

I don't think this is true but I think it's half true.

2/41

@itsclivetime

on the other hand maybe everything that you can express autoregressively is a language

and everything can be stretched out into a stream of tokens, so everything is language!

3/41

@karpathy

Certainly you could think about "speaking textures", or "speaking molecules", or etc. What I've seen though is that the word "language" is misleading people to think LLMs are restrained to text applications.

4/41

@elonmusk

Definitely needs a new name. “Multimodal LLM” is extra silly, as the first word contradicts the third word!

5/41

@yacineMTB

even the "large" is suspect because what is large today will seem small in the future

6/41

@rasbt

> A better name would be Autoregressive Transformers or something

Mamba, Jamba, and Samba would like to have a word

But yes, I agree!

7/41

@BenjaminDEKR

Andrej, what are the most interesting "token streams" which haven't had an LLM properly thrown at them yet?

8/41

@marktenenholtz

Time series forecasting reincarnated

9/41

@nearcyan

having been here prior to the boom i find it really odd that that vocab like LLMs, GPT, and RLHF are 'mainstream'

this is usually not how a field presents itself to the broader world (and imo it's also a huge branding failure of a few orgs)

10/41

@cHHillee

> It's also possible that deep learning frameworks are way too general ...

In some sense, this is true. But even for just a LLM, the actual operators run vary a lot. New attention ops, MoE, different variants of activation checkpointing, different positional embeddings, etc.

11/41

@headinthebox

> deep learning frameworks (e.g. PyTorch and friends) are way too general for what most problems want to look like over time.

Could not agree more; warned the Pytorch team about that a couple of years ago. They should move up the stack.

12/41

@FMiskawi

Has anyone trained a model with DNA sequences, associated proteins and discovered functions as tokens yet to highlight relationships and predict DNA sequences?

13/41

@axpny

@readwise save

14/41

@Niklas_Sikorra

Main question to me is, is what we do in our brain next token prediction or something else?

15/41

@0bgabo

My (naive) intuition is that diffusion more closely resembles the task of creation compared to next token prediction. You start with a rough idea, a high level structure, then you get into details, you rewrite the beginning of an email, you revisit an earlier part of your song…

16/41

@shoecatladder

seems like the real value is all the tools we developed for working with high dimensional space

LLMs may end up being one application of that

17/41

@dkardonsky_

Domain specific models

18/41

@FlynnVIN10

X

TOKEN STREAM

STREAM TEN OK

19/41

@nadirabid

Have you looked into the research by Numenta and Jeff Hawkins?

I think there research into modeling the neocortex are very compelling.

It's not the impractical kind of neuroscience about let's recreate the brain.

20/41

@DrKnowItAll16

Excellent points. ATs would be a better term, and data is data so next token prediction should work quite well for many problems. The one issue that plagues them is system 2 thinking which requires multiple runs over the tokens rather than one. Do you think tricks with current models will work to allow this type of thought or will we need another breakthrough architecture? I incline toward the latter but curious what you think.

21/41

@geoffreyirving

"Let's think pixel by pixel."

22/41

@cplimon

LLM is a so bad that it’s really really good.

IMO it added to the public phenomena

At the peak of attention, the only comps i’ve lived through are Bitcoin (‘21), covid, and the Macarena (‘96)

23/41

@brainstormvince

The use of the word Language at least allows some degree of common understanding - you could use Autoregressive Transformers but it would leave 85% of the world baffled and so seems to slightly come from the same place as the Vatican arguing against scripture being translated from Latin as the translations failed to preserve the real meaning of the words.

the fact the LLM doesn't care what is used is all the more reason for keeping the use of language as something the mass of people can comprehend even if it loses some of its precision.

AI in general is already invoking a large degree of exponential angst the last thing we need is for it to become even more obscurant or arcane

We need Feynman's dictum more than ever ' if we can't explain it to a freshman we don't understand what we are talking about'

24/41

@danieltvela

It's funny how languages are characterized by the ability to distinguish between words, while tokenizers seem to remove that ability.

Perhaps we're removing something important that's impeding Transformers' innovation.

I'd love to be able to train LLMs whose tokens are words, and the tokenization is based on whitespace and other punctuation.

25/41

@jakubvlasek

Large Token Models

26/41

@localghost

yeah, similar to how we still call our pocket computers "phones" (and probably will continue to). seems like "large world model" is appearing as a contender for multimodal ais that do everything though

27/41

@srikmisra

deep learning for generative ai is perhaps way to general and reliant on brute force computing - language is more complex & nuanced than finding sequential relationships - so yes, a more appropriate name is needed

28/41

@43_waves

LLM 4astronomy LSM

29/41

@sauerlo

"If you can reduce your problem to that of modeling token streams"

Wouldn't that be literally everything? With a nod to Gödel's theorem and Turing completeness.

Basically, anything outside it is 'phantasy', which itself has to have language and run on a Turing computer.

30/41

@s_batzoglou

And yet the token streams that are amenable to LLM modeling can be thought of as languages with language-like structures like word-like concepts, higher level phrase-like concepts, and contexts

31/41

@swyx

do you have a guess as to the next most promising objective after “next token prediction”?

v influenced by @yitayml that objectives are everything. feels like a local maximum (fruitful! but not global)

32/41

@Joe_Anandarajah

I think "Large Language Model" or "Large Multimodal Model" work best for common and enterprise users. Even if inputs and outputs are multimodal, customization, reasoning and integration remain language oriented for consumer and enterprise apps.

33/41

@miklelalak

Tokens are partially defined by their contextual relationship to other tokens, though, right? Through the collection of weights? I'm not even sure if I'm asking this question right.

34/41

@DanielMiessler

I vote for “Generalized Answer Predictors”

Because the acronym is GAP—as in—

“gaps in our knowledge.”

35/41

@ivanku

+1 I've been struggling with the name for quite some time. Doesn't make much sense if the model is operating with images, time-series, or any other data types

36/41

@jerrythom11

Somewhere in this book Eco says "Semiotics is physical anthropology." A programmer who read it, and met him in 2000, said, 'ah, that's just programming.'

37/41

@kkrun

the way i try to understand it is that it can interpolate n-dimensionally within the entire web. who knew the difference between a joke and a tragic story is about statistical correlation among words?

hopefully someday it can do product concept sketches - that's my wish

38/41

@simoarcher

LIM (Large Information Model), which funnily enough means 'glue' in Norwegian, might be a better term for LLMs. It reflects how these models are more abstract in terms of language, understanding and creating any type of content — whether it's text, images, audio, or molecules.

39/41

@poecidebrain

Long ago, a friend who had gone back to school was complaining about how hard it was to learn the math. I said, "Once you learn the language, it's not that hard." Math IS a language. Think about that.

40/41

@JonTeets005

Call it something that highlights its back-grounded nature, like, idunno, "Jost"

41/41

@jabowery

Dynamical, not statistical modeling. This is no mere pedantry, Andrej. It is the difference between the Algorithmic Information Criterion for causal discovery at the heart of science and pseudoscience based on statistics, such as sociology.

GitHub - jabowery/HumesGuillotine: Hume's Guillotine: Beheading the social pseudo-sciences with the Algorithmic Information Criterion for CAUSAL model selection.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196