Intel unveils new AI chip to compete with Nvidia and AMD

Intel announced new details about PC, server, and AI chips on Thursday, including Gaudi3, an AI chip for generative AI software that will compete with Nvidia.

PUBLISHED THU, DEC 14 2023 11:20 AM EST

Kif Leswing @KIFLESWING

KEY POINTS

- Intel unveiled new computer chips on Thursday, including Gaudi3, a chip for generative AI software.

- Intel also announced Core Ultra chips, designed for Windows laptops and PCs, and new fifth-generation Xeon server chips.

- Intel’s server and PC processors include specialized AI parts called NPUs that can be used to run AI programs faster.

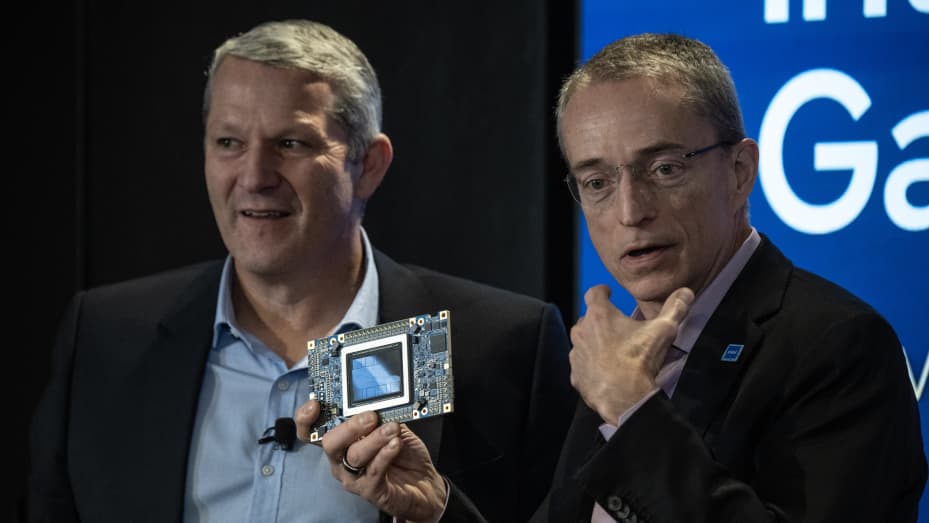

Patrick Gelsinger, chief executive officer of Intel Corp., speaks during the Intel AI Everywhere launch event in New York, US, on Thursday, Dec. 14, 2023.

Victor J. Blue | Bloomberg | Getty Images

Intel unveiled new computer chips on Thursday, including Gaudi3, an artificial intelligence chip for generative AI software. Gaudi3 will launch next year and will compete with rival chips from Nvidia and AMD that power big and power-hungry AI models.

The most prominent AI models, like OpenAI’s ChatGPT, run on Nvidia GPUs in the cloud. It’s one reason Nvidia stock has been up nearly 230% year to date while Intel shares have risen 68%. And it’s why companies like AMD and, now, Intel have announced chips that they hope will attract AI companies away from Nvidia’s dominant position in the market.

Shares of Intel were up 1% on Thursday.

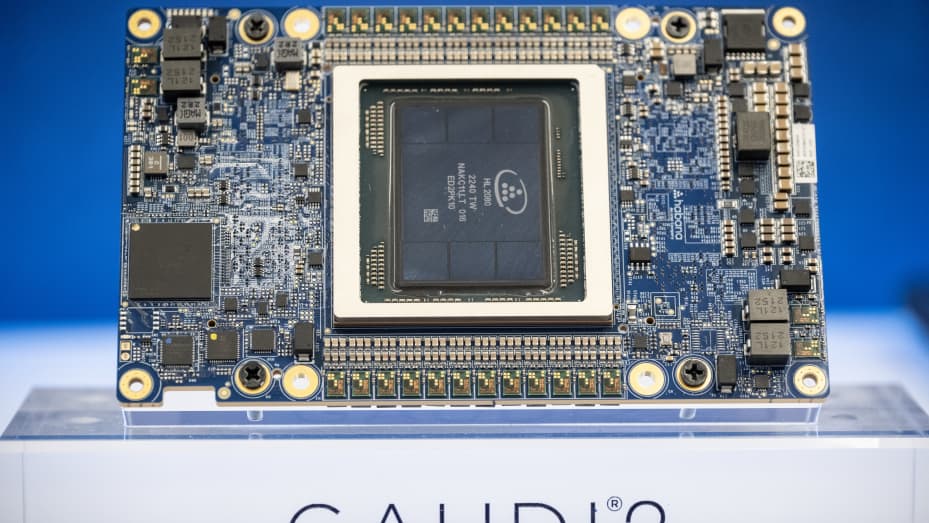

While the company was light on details, Gaudi3 will compete with Nvidia’s H100, the main choice among companies that build huge farms of the chips to power AI applications, and AMD’s forthcoming MI300X, when it starts shipping to customers in 2024.

Intel has been building Gaudi chips since 2019, when it bought a chip developer called Habana Labs.

An Intel Gaudi2 AI accelerator during the Intel AI Everywhere launch event in New York, US, on Thursday, Dec. 14, 2023. Intel Corp. announced new chips for PCs and data centers that the company hopes will give it a bigger slice of the booming market for artificial intelligence hardware. Photographer: Victor J. Blue/Bloomberg via Getty Images

Bloomberg | Bloomberg | Getty Images

“We’ve been seeing the excitement with generative AI, the star of the show for 2023,” Intel CEO Pat Gelsinger said at a launch event in New York where he announced Gaudi3 along with other chips focused on AI applications.

“We think the AI PC will be the star of the show for the upcoming year,” Gelsinger added. And that’s where Intel’s new Core Ultra processors, also announced on Thursday, will come into play.

Intel Core Ultra and new Xeon chips

Intel also announced Core Ultra chips, designed for Windows laptops and PCs, and new fifth-generation Xeon server chips. Both include a specialized AI part called an NPU that can be used to run AI programs faster. It’s the latest sign that traditional processor makers, including Intel rivals AMD and Qualcomm, are reorienting their product lines around, and alerting investors to, the possibility of AI models leading to surging demand for their chips.

The Core Ultra won’t provide the kind of power needed to run a chatbot like ChatGPT without an internet connection, but it can handle smaller tasks. For example, Intel said, Zoom runs its background-blurring feature on Intel’s chips. They’re built using the company’s 7-nanometer process, which is more power efficient than earlier chips.

But, importantly, the 7-nanometer chips show that Gelsinger’s strategy to catch up to Taiwan Semiconductor Manufacturing Co. in chip manufacturing prowess by 2026 hasn’t fallen behind.

Core Ultra chips also include more powerful gaming capabilities, and the added graphics muscle can help programs like Adobe Premiere run more than 40% faster. The lineup launched in laptops that hit stores on Thursday.

Finally, Intel’s fifth-generation Xeon processors power servers deployed by large organizations like cloud companies. Intel didn’t share pricing, but the previous Xeon cost thousands of dollars. Intel’s Xeon processors are often paired with Nvidia GPUs in the systems that are used for training and deploying generative AI. In some systems, eight GPUs are paired to one or two Xeon CPUs.

Intel said the latest Xeon processor will be particularly good for inferencing, or the process of deploying an AI model, which is less power hungry than the training process.