The world's first supercomputer capable of simulating networks at the scale of the human brain has been announced by researchers at Western Sydney University.

interestingengineering.com

Human brain-like supercomputer with 228 trillion links coming in 2024

Australians develop a supercomputer capable of simulating networks at the scale of the human brain.

Sejal Sharma

Published: Dec 13, 2023 07:27 AM EST


An artist’s impression of the DeepSouth supercomputer.

WSU

Australian scientists have their hands on a groundbreaking supercomputer that aims to simulate the synapses of a human brain at full scale.

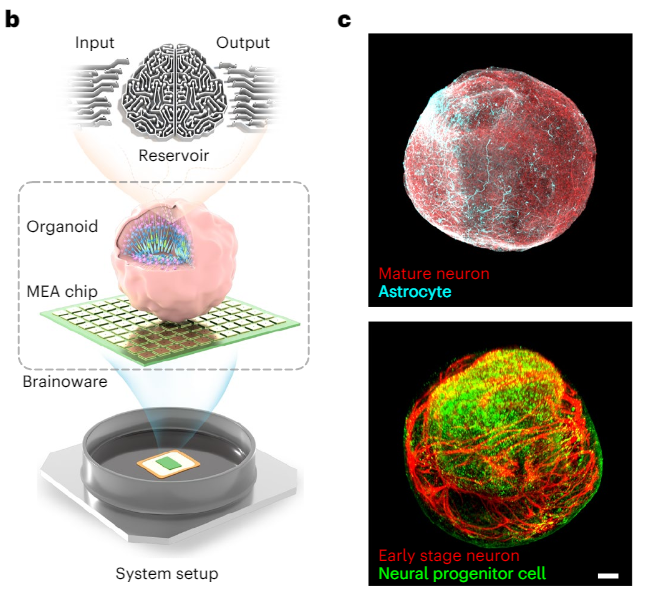

The neuromorphic supercomputer will be capable of 228 trillion synaptic operations per second, which is on par with the estimated rate of operations in the human brain.

A team of researchers at the International Centre for Neuromorphic Systems (ICNS) at Western Sydney University has named it DeepSouth.

To be operational by April 2024

The computational power of the human brain can be seen in the way it performs a billion billion (10^18) mathematical operations per second using only 20 watts of power. DeepSouth aims for similar levels of parallel processing by employing neuromorphic engineering, a design approach that mimics the brain's functioning.
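To put those figures in perspective, here is a back-of-the-envelope sketch in Python. It assumes the article's "billion-billion" means 10^18 operations per second and takes the 20-watt figure at face value; the function and variable names are illustrative only.

```python
# Figures quoted in the article; "billion-billion" is read here as 1e9 * 1e9 = 1e18.
BRAIN_OPS_PER_SECOND = 1e9 * 1e9
BRAIN_POWER_WATTS = 20.0


def ops_per_joule(ops_per_second: float, power_watts: float) -> float:
    """Operations performed per joule of energy (1 watt = 1 joule per second)."""
    return ops_per_second / power_watts


brain_efficiency = ops_per_joule(BRAIN_OPS_PER_SECOND, BRAIN_POWER_WATTS)
print(f"{brain_efficiency:.1e} operations per joule")
```

Under these assumptions the brain manages roughly 5 x 10^16 operations for every joule it consumes, which is the kind of efficiency neuromorphic designs are trying to approach.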

Highlighting the distinctive features of DeepSouth, Professor André van Schaik, the Director of the ICNS, emphasized that the supercomputer is designed with a unique purpose – to operate in a manner similar to networks of neurons, the basic units of the human brain.

Neuromorphic systems utilize interconnected artificial neurons and synapses to perform tasks. These systems attempt to emulate the brain's ability to learn, adapt, and process information in a highly parallel and distributed manner.
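For readers wondering what an "artificial neuron" might look like in practice, below is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. It is an illustration only and not a description of how DeepSouth is built; the function name, parameters, and default values are all assumptions chosen for the example.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Return the time steps at which a single leaky integrate-and-fire neuron spikes.

    input_current: sequence of input values, one per time step (arbitrary units).
    All parameter values are illustrative, not taken from any real hardware.
    """
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        # The membrane potential leaks back toward rest while integrating the input
        # (forward Euler update).
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_thresh:
            # Crossing the threshold emits a spike, then the potential is reset.
            spikes.append(step)
            v = v_reset
    return spikes


# A strong constant input makes the neuron fire repeatedly;
# a weak one never reaches the threshold.
spike_times = simulate_lif([1.5] * 100)
silent = simulate_lif([0.5] * 100)
print(len(spike_times), "spikes with strong input;", len(silent), "with weak input")
```

The neuron communicates only through the timing of its spikes, which is part of why large networks of such units can be run in parallel with little activity, and little power, when nothing is happening.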

Often applied in AI and machine learning, neuromorphic systems are built with the goal of creating more efficient, brain-like computing.

Traditional computers are typically based on the von Neumann architecture, in which the CPU and memory are separate units and both data and instructions are stored in memory.

DeepSouth can handle large amounts of data at a rapid pace while consuming significantly less power and being physically smaller than conventional supercomputers.

"Progress in our understanding of how brains compute using neurons is hampered by our inability to simulate brain-like networks at scale. Simulating spiking neural networks on standard computers using Graphics Processing Units (GPUs) and multicore Central Processing Units (CPUs) is just too slow and power intensive. Our system will change that," Professor van Schaik said.

The system is scalable

The team named the supercomputer DeepSouth based on IBM's TrueNorth system, which started the idea of building computers that act like large networks of neurons, and Deep Blue, the first computer to beat a world chess champion.

The name also gives a nod to where the supercomputer is located geographically: Australia, which is situated in the southern hemisphere.

The team believes DeepSouth will help advance diverse fields such as sensing, biomedicine, robotics, space, and large-scale AI applications.

The team believes DeepSouth will also revolutionize smart devices. This includes devices like mobile phones and sensors used in manufacturing and agriculture.
