Sam Altman is a habitual liar and can't be trusted, says former OpenAI board member

- Thread starter: Professor Emeritus

Wargames

One Of The Last Real Ones To Do It

Basically the board was right: he wants to be a billionaire. I am surprised so many left, though; if they had stayed they could have become billionaires too.

To me it looks like he wants investment in the company and to have money in the data centers. I also don’t think it’s a coincidence they released the best coding version of it first before doing this.

Wargames

I feel like he is on that Elon Musk cycle… Talk a big game so people think you’re smarter than them and buy your product.

This survey of fusion power projections suggests that most experts expect the first power-generating demo plant around 2040 and the first commercially viable plant around 2040-2050. The lowest projection is still in the 2030s. China has a plant that's supposed to be running by the 2030s, but it's not expected to produce more energy than it consumes.

Wargames

I will believe it when I see it…

he has a different timetable because he's betting his AI will become intelligent enough to solve the physics necessary for nuclear fusion to work. it's a reasonable assumption that if you have an actual artificial intelligence, then the timetable for everything moves up significantly.

AI solves nuclear fusion puzzle for near-limitless clean energy

Nuclear fusion breakthrough overcomes key barrier to grid-scale adoption (www.yahoo.com)

Yes, I realize that's what he's "betting", but I've pointed out in the past that he knows literally nothing about physics. What makes his wild predictions any more meaningful than Musk's predictions?

At some point y'all gonna realize that Altman and Musk are the same guy.....

he doesn't need to know physics, he knows they can train narrow models on known physics and generate hundreds of thousands if not millions of synthetic textbooks on the subject in any which way and task any number of AI agents to tackle physics problems.

1/11

@denny_zhou

What is the performance limit when scaling LLM inference? Sky's the limit.

We have mathematically proven that transformers can solve any problem, provided they are allowed to generate as many intermediate reasoning tokens as needed. Remarkably, constant depth is sufficient.

[2402.12875] Chain of Thought Empowers Transformers to Solve Inherently Serial Problems (ICLR 2024)

2/11

@denny_zhou

Just noticed a fun youtube video for explaining this paper. LoL. Pointed by @laion_ai http://invidious.poast.org/4JNe-cOTgkY

3/11

@ctjlewis

hey Denny, curious if you have any thoughts. i reached the same conclusion:

[Quoted tweet]

x.com/i/article/178554774683…

4/11

@denny_zhou

Impressive! You would be interested at seeing this: [2301.04589] Memory Augmented Large Language Models are Computationally Universal

5/11

@nearcyan

what should one conclude from such a proof if it’s not also accompanied by a proof that we can train a transformer into the state (of solving a given arbitrary problem), possibly even with gradient descent and common post training techniques?

6/11

@QuintusActual

“We have mathematically proven that transformers can solve any problem, provided they are allowed to generate as many intermediate reasoning tokens as needed.”

I’m guessing this is only true because as a problem grows in difficulty, the # of required tokens approaches

7/11

@Shawnryan96

How do they solve novel problems without a way to update the world model?

8/11

@Justin_Halford_

Makes sense for verifiable domains (e.g. math and coding).

Does this generalize to more ambiguous domains with competing values/incentives without relying on human feedback?

9/11

@ohadasor

Don't fall into it!!

[Quoted tweet]

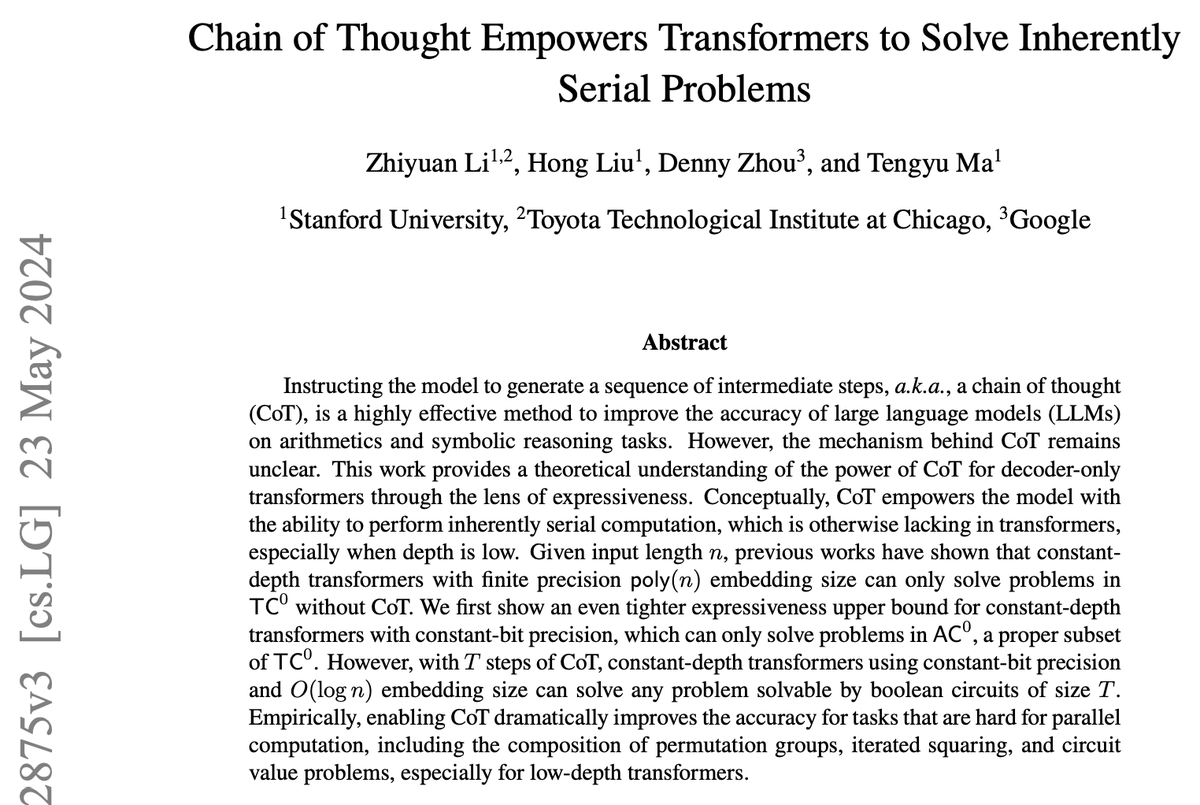

"can solve any problem"? Really?? Let's read the abstract in the image attached to the post, and see if the quote is correct. Ah wow! Somehow he forgot to quote the rest of the sentence! How is that possible?

The full quote is "can solve any problem solvable by boolean circuits of size T". This changes a lot. All problems solvable by Boolean circuits, of any size, is called the Circuit Evaluation Problem, and is known to cover precisely polynomial time (P) calculations. So it cannot solve the most basic logical problems which are at least exponential. Now here we don't even have P, we have only circuits of size T, which validates my old mantra: it can solve only constant-time problems. The lowest possible complexity class.

And it also validates my claim about the bubble of machine learning promoted by people who have no idea what they're talking about.

10/11

@CompSciFutures

Thx, refreshingly straightforward notation too, I might take the time to read this one properly.

I'm just catching up and have a dumb Q... that is an interestingly narrow subset of symbolic operands. Have you considered what happens if you add more?

11/11

@BatAndrew314

Noob question- how is it related to universal approximation theorem? Meaning does transformer can solve any problem because it is neural net? Or it’s some different property of transformers and CoT?

Chain of Thought Empowers Transformers to Solve Inherently Serial Problems

Instructing the model to generate a sequence of intermediate steps, a.k.a., a chain of thought (CoT), is a highly effective method to improve the accuracy of large language models (LLMs) on arithmetics and symbolic reasoning tasks. However, the mechanism behind CoT remains unclear. This work... (arxiv.org)

[Submitted on 20 Feb 2024 (v1), last revised 23 May 2024 (this version, v3)]

Zhiyuan Li, Hong Liu, Denny Zhou, Tengyu Ma

Comments: 38 pages, 10 figures. Accepted by ICLR 2024

Subjects: Machine Learning (cs.LG); Computational Complexity (cs.CC); Machine Learning (stat.ML)

Cite as: arXiv:2402.12875 [cs.LG] (or arXiv:2402.12875v3 [cs.LG] for this version)

From: Zhiyuan Li

Submission history

[v1] Tue, 20 Feb 2024 10:11:03 UTC (3,184 KB)

[v2] Tue, 7 May 2024 17:00:27 UTC (5,555 KB)

[v3] Thu, 23 May 2024 17:10:39 UTC (5,555 KB)

Read the reddit and twitter comments on the article you posted to see how little that paper's result matters in a practical sense. Without knowing physics, you literally have no clue what they can and cannot solve.

Wargames

At this point I believe them. He feels like a young Elon Musk when everyone was ignoring the signs he was a full of shyt con man and thought he was a genius.

Most of the tech people who actually figured out how to create AI have left in protest, and people are talking about Altman’s dream for AI instead.

Sam Altman Says ‘OpenAI o1-preview is deeply flawed’

OpenAI’s o1-preview is generating excitement in the tech world. However, it is still not the epitome of perfection.

analyticsindiamag.com

'But when o1 comes out, it will feel like a major leap forward.'

- Published on September 30, 2024

- by Shalini Mondal

In a recent interview, OpenAI chief Sam Altman said “o1-preview is deeply flawed, but when o1 comes out, it will feel like a major leap forward.” Altman looked back at the company’s early years, revealing that it took nearly 4.5 years before OpenAI launched its first product.

“Our first product, GPT-3, was not something we were particularly proud of at the time,” he admitted. Despite the buzz surrounding GPT-3, the team identified its limitations. After several more iterations, it led to the release of GPT-3.5, ChatGPT, and ultimately GPT-4, before they began to realize the full potential of their work.

Essentially, the company’s vision goes beyond incremental improvements. “We often focus too much on ‘less bad,’ on fixing immediate issues, rather than dreaming of the breakthroughs that could propel humanity to the next level,” he said.

Rather than settling for temporary or immediate fixes, OpenAI’s mission is to strive for long-term progress for humanity rather than instant gratification.

This long-term focus on innovation aligns with OpenAI’s broader goal of transforming how AI is integrated into society. The CEO acknowledged that delivering groundbreaking products took time, but now OpenAI is better positioned to shape the future. “We’ve started to deliver great products, but we’re going to deliver far greater ones,” he promised.

In another interview, Altman described the company’s latest AI model, o1, as being at the ‘GPT-2 stage’ of reasoning development. Altman explained, “I think of this as like we’re at the GPT-2 stage of these new kinds of reasoning models.” He emphasised that while the model is still early in its development, significant improvements are expected in the coming months.

Altman said that users will notice o1 rapidly improving as OpenAI moves from the o1-preview model to the full release. “Even in the coming months, you’ll see it get a lot better as we move from o1-preview to o1, which we shared some metrics for in our launch blog post,” he said.

Altman further mentioned that he loves the startup energy as they are redefining success in the industry. Given their dynamism, startups can iterate faster and adapt more quickly than large corporations. Instead of asking for advice on what to build, founders of startups should trust their instincts and bold ideas.

In this regard, Altman also stated how countries like Italy should focus on using their past as a springboard for future innovation in AI.

Exclusive: OpenAI to remove non-profit control and give Sam Altman equity

OpenAI as we knew it is dead

The maker of ChatGPT promised to share its profits with the public. But Sam Altman just sold you out.

So pretty much anything Altman has ever said about OpenAI was a lie.

* "Independent oversight" - nah, I'll mutiny if anyone does anything I don't want

* "Nonprofit board because we care about society" - nah, that's completely gone

* "I don't have any financial stake in this" - sorry, just gave myself billions in financial stake

Why believe anything this guy says about anything? He's a self-serving narcissist who ALWAYS lies and never has to pay for it (other than every employee with a soul leaving).

Now we see the real reason he's gassing fusion progress so hard.

Who’s got enough power for OpenAI’s 5-gigawatt data center plans? | Fortune

Five gigawatts is the kind of power a major city needs.

He's asking for over 1% of the entire globe's electricity. Wants the USA to build him enough power plants to run TWO New York states.

Convincing us that it's okay to burn insane amounts of energy cause he'll solve fusion any day now.

And he wants the taxpayers to pay for it.
