I still have roughly the same short-, medium- and long-term risk profiles. I still expect that on cybersecurity and bio stuff, 16 we’ll see serious, or potentially serious, short-term issues that need mitigation. Long term, as you think about a system that really just has incredible capability, there’s risks that are probably hard to precisely imagine and model. But I can simultaneously think that these risks are real and also believe that the only way to appropriately address them is to ship product and learn.

16 In September 2024, OpenAI acknowledged that its latest AI models have increased the risk of misuse in creating bioweapons. In May 2023, Altman joined hundreds of other signatories to a statement highlighting the existential risks posed by AI.

When it comes to the immediate future, the industry seems to have coalesced around three potential roadblocks to progress: scaling the models, chip scarcity and energy scarcity. I know they commingle, but can you rank those in terms of your concern?

We have a plan that I feel pretty good about on each category. Scaling the models, we continue to make technical progress, capability progress, safety progress, all together. I think 2025 will be an incredible year. Do you know this thing called the ARC-AGI challenge? Five years ago this group put together this prize as a North Star toward AGI. They wanted to make a benchmark that they thought would be really hard. The model we’re announcing on Friday 17 passed this benchmark. For five years it sat there, unsolved. It consists of problems like this. 18 They said if you can score 85% on this, we’re going to consider that a “pass.” And our system—with no custom work, just out of the box—got an 87.5%. 19 And we have very promising research and better models to come.

17 OpenAI introduced Model o3 on Dec. 20. It should be available to users in early 2025. The previous model was o1, but the Information reported that OpenAI skipped over o2 to avoid a potential conflict with British telecommunications provider O2.

18 On my laptop, Altman called up the ARC-AGI website, which displayed a series of bewildering abstract grids. The abstraction is the point; to “solve” the grids and achieve AGI, an AI model must rely more on reason than its training data.

19 According to ARC-AGI: “OpenAI’s new o3 system—trained on the ARC-AGI-1 Public Training set—has scored a breakthrough 75.7% on the Semi-Private Evaluation set at our stated public leaderboard $10k compute limit. A high-compute (172x) o3 configuration scored 87.5%.”

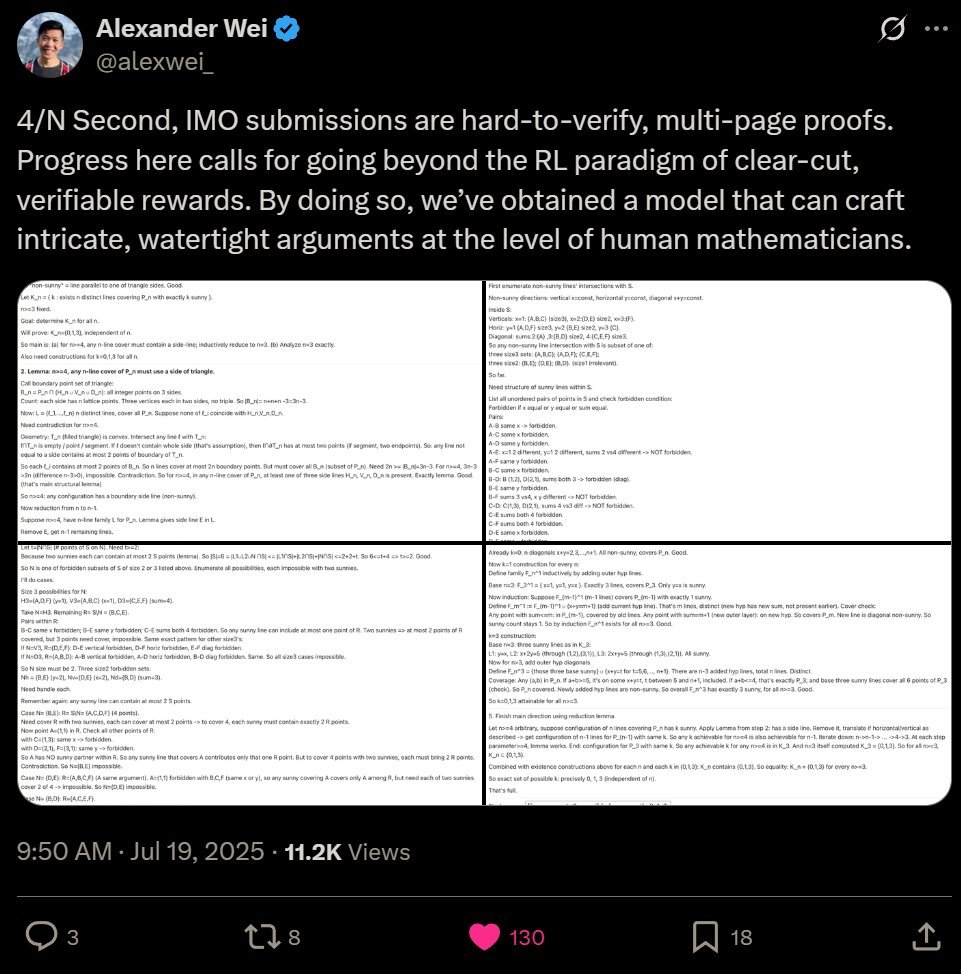

We have been hard at work on the whole [chip] supply chain, all the partners. We have people to build data centers and make chips for us. We have our own chip effort here. We have a wonderful partnership with Nvidia, just an absolutely incredible company. And we’ll talk more about this next year, but now is the time for us to scale chips.

Nvidia Corp. CEO Jensen Huang speaking at an event in Tokyo on Nov. 13, 2024. Photographer: Kyodo/AP Images

So energy …

Fusion’s gonna work.

Fusion is going to work. Um. On what time frame?

Soon. Well, soon there will be a demonstration of net-gain fusion. You then have to build a system that doesn’t break. You have to scale it up. You have to figure out how to build a factory—build a lot of them—and you have to get regulatory approval. And that will take, you know, years altogether? But I would expect [Helion 20] will show you that fusion works soon.

20 Helion, a clean energy startup co-founded by Altman, Dustin Moskovitz and Reid Hoffman, focuses on developing nuclear fusion.

In the short term, is there any way to sustain AI’s growth without going backward on climate goals?

Yes, but none that is as good, in my opinion, as quickly permitting fusion reactors. I think our particular kind of fusion is such a beautiful approach that we should just race toward that and be done.

A lot of what you just said interacts with the government. We have a new president coming. You made a personal $1 million donation to the inaugural fund. Why?

He’s the president of the United States. I support any president.

I understand why it makes sense for OpenAI to be seen supporting a president who’s famous for keeping score of who’s supporting him, but this was a personal donation. Donald Trump opposes many of the things you’ve previously supported. Am I wrong to think the donation is less an act of patriotic conviction and more an act of fealty?

I don’t support everything that Trump does or says or thinks. I don’t support everything that Biden says or does or thinks. But I do support the United States of America, and I will work to the degree I’m able to with any president for the good of the country. And particularly for the good of what I think is this huge moment that has got to transcend any political issues. I think AGI will probably get developed during this president’s term, and getting that right seems really important. Supporting the inauguration, I think that’s a relatively small thing. I don’t view that as a big decision either way. But I do think we all should wish for the president’s success.

He’s said he hates the Chips Act. You supported the Chips Act.

I actually don’t. I think the Chips Act was better than doing nothing but not the thing that we should have done. And I think there’s a real opportunity to do something much better as a follow-on. I don’t think the Chips Act has been as effective as any of us hoped.

Trump and Musk talk ringside during the UFC 309 event at Madison Square Garden in New York on Nov. 16. Photographer: Chris Unger/Zuffa LLC

Elon 21 is clearly going to be playing some role in this administration. He’s suing you. He’s competing with you. I saw your comments at DealBook that you think he’s above using his position to engage in any funny business as it relates to AI.

I do think so.

21 C’mon, how many Elons do you know?

But if I may: In the past few years he bought Twitter, then sued to get out of buying Twitter. He replatformed Alex Jones. He challenged Zuckerberg to a cage match. That’s just kind of the tip of the funny-business iceberg. So do you really believe that he’s going to—

Oh, I think he’ll do all sorts of bad s---. I think he’ll continue to sue us and drop lawsuits and make new lawsuits and whatever else. He hasn’t challenged me to a cage match yet, but I don’t think he was that serious about it with Zuck, either, it turned out. As you pointed out, he says a lot of things, starts them, undoes them, gets sued, sues, gets in fights with the government, gets investigated by the government. That’s just Elon being Elon. The question was, will he abuse his political power of being co-president, or whatever he calls himself now, to mess with a business competitor? I don’t think he’ll do that. I genuinely don’t. May turn out to be proven wrong.

When the two of you were working together at your best, how would you describe what you each brought to the relationship?

Maybe like a complementary spirit. We don’t know exactly what this is going to be or what we’re going to do or how this is going to go, but we have a shared conviction that this is important, and this is the rough direction to push and how to course-correct.

I’m curious what the actual working relationship was like.

I don’t remember any big blowups with Elon until the fallout that led to the departure. But until then, for all of the stories—people talk about how he berates people and blows up and whatever, I hadn’t experienced that.

Are you surprised by how much capital he’s been able to raise, specifically from the Middle East, for xAI?

No. No. They have a lot of capital. It’s the industry people want. Elon is Elon.

Let’s presume you’re right and there’s positive intent from Elon and the administration. What’s the most helpful thing the Trump administration can do for AI in 2025?

US-built infrastructure and lots of it. The thing I really deeply agree with the president on is, it is wild how difficult it has become to build things in the United States. Power plants, data centers, any of that kind of stuff. I understand how bureaucratic cruft builds up, but it’s not helpful to the country in general. It’s particularly not helpful when you think about what needs to happen for the US to lead AI. And the US really needs to lead AI.
