1/3

@rohanpaul_ai

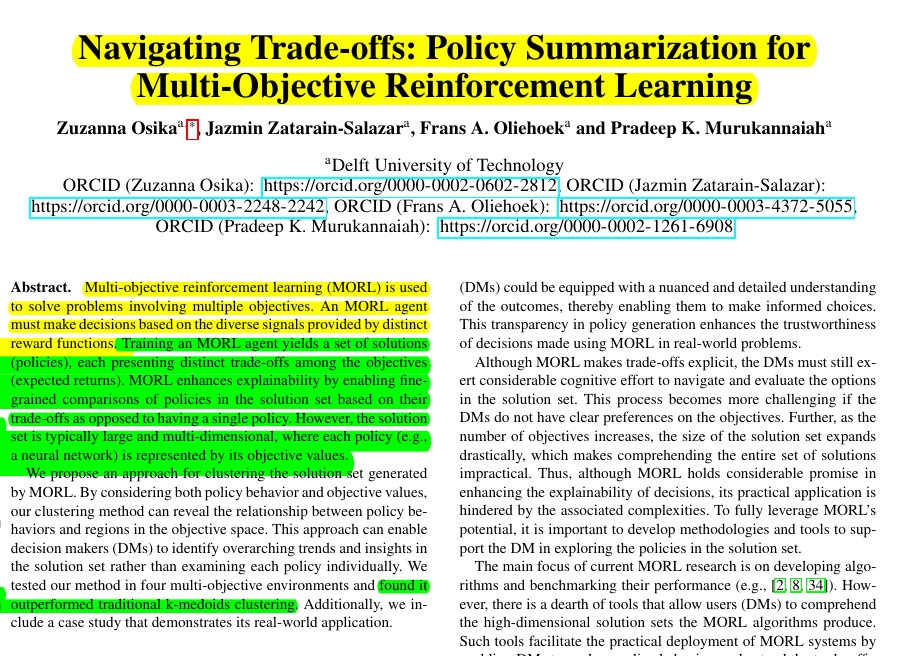

This paper makes complex multi-objective reinforcement learning (MORL) policy sets understandable by clustering policies on both their behavior and their objective outcomes

When AI gives you too many options, this clustering trick saves the day

Original Problem:

Multi-objective reinforcement learning (MORL) generates multiple policies with different trade-offs, but these solution sets are too large and complex for humans to analyze effectively. Decision makers struggle to understand the relationships between policy behaviors and their objective outcomes.

-----

Solution in this Paper:

→ Introduces a novel clustering approach that considers both objective space (expected returns) and behavior space (policy actions)

→ Uses the HIGHLIGHTS algorithm to capture 5 key states that represent each policy's behavior

→ Applies PAN (Pareto-Set Analysis) clustering to find clusters that are well defined in both spaces simultaneously

→ Employs a bi-objective evolutionary algorithm to optimize clustering quality in both spaces
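For readers unfamiliar with HIGHLIGHTS, here is a minimal sketch of its core idea, assuming a simplified version: a state is "important" when the gap between the best and worst action value is large, and the summary keeps the top-k such states (k = 5, as in the thread). It assumes each policy's vector-valued Q-values have already been scalarized into one number per action; how the paper handles that step is not stated here. The state names and Q-values in the demo are hypothetical, and the original HIGHLIGHTS also enforces spacing between summary states, which this sketch omits.

```python
from typing import Dict, List, Sequence


def state_importance(q_values: Sequence[float]) -> float:
    """HIGHLIGHTS-style importance: gap between the best and worst action value."""
    return max(q_values) - min(q_values)


def top_k_states(q_table: Dict[str, Sequence[float]], k: int = 5) -> List[str]:
    """Return the k states where the choice of action matters most (largest Q-gap).

    Ties are broken alphabetically by state name so the output is deterministic.
    """
    ranked = sorted(q_table, key=lambda s: (-state_importance(q_table[s]), s))
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical, already-scalarized Q-values for one policy: state -> [Q(s, a) per action]
    q_table = {
        "merge_lane": [1.0, 9.0, 2.0],
        "open_road": [5.0, 5.2, 5.1],
        "slow_car_ahead": [0.5, 7.5, 3.0],
        "exit_ramp": [2.0, 6.0],
        "traffic_jam": [3.0, 3.5, 3.1],
        "overtake": [8.0, 1.0, 4.0],
        "cruise": [4.0, 4.4],
    }
    print(top_k_states(q_table, k=5))
    # → ['merge_lane', 'overtake', 'slow_car_ahead', 'exit_ramp', 'traffic_jam']
```

The actions a policy takes in its summary states can then serve as that policy's coordinates in behavior space.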

-----

Key Insights:

→ The first work to tackle the explainability of MORL solution sets

→ Different policies with similar trade-offs can exhibit vastly different behaviors

→ Combining objective and behavior analysis reveals deeper policy insights

→ Makes MORL more practical for real-world applications

-----

Results:

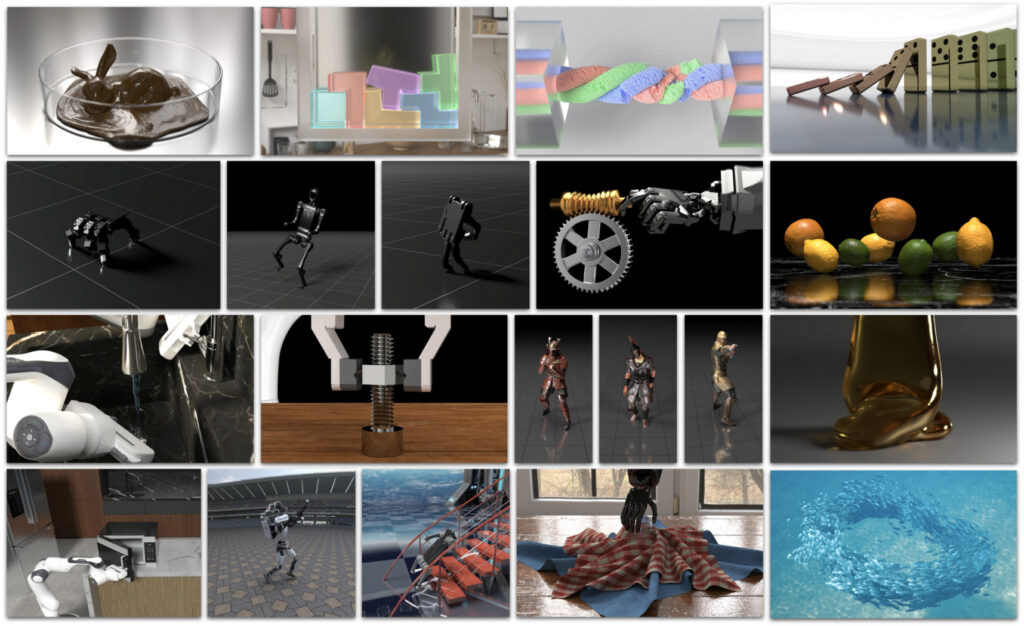

→ Outperformed traditional k-medoids clustering in the MO-Highway and MO-Lunar-lander environments

→ Performed comparably in the MO-Reacher and MO-Minecart environments

→ Demonstrated a practical application through a case study in the highway environment
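A single-space baseline such as k-medoids optimizes one distance. The two-space idea instead scores each candidate clustering twice, once in objective space and once in behavior space, and keeps the candidates that no other candidate beats on both scores. The sketch below illustrates that bi-objective view under stated assumptions. Silhouette with Euclidean distance is assumed as the quality measure in both spaces. Plain random search stands in for the paper's evolutionary algorithm. The sketch is not PAN itself, and the function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Candidate = Tuple[Tuple[float, float], List[int]]  # ((objective score, behavior score), labels)


def euclidean(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def silhouette(points: List[Vector], labels: List[int]) -> float:
    """Mean silhouette coefficient (assumed quality measure); needs at least 2 clusters."""
    if len(set(labels)) < 2:
        raise ValueError("silhouette needs at least two clusters")
    n = len(points)
    scores = []
    for i in range(n):
        same = [euclidean(points[i], points[j]) for j in range(n)
                if j != i and labels[j] == labels[i]]
        if not same:  # singleton cluster contributes 0 by convention
            scores.append(0.0)
            continue
        a = sum(same) / len(same)  # mean distance within own cluster
        b = min(  # mean distance to the nearest other cluster
            sum(euclidean(points[i], points[j]) for j in range(n) if labels[j] == c)
            / labels.count(c)
            for c in set(labels) - {labels[i]}
        )
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / n


def dominates(p: Sequence[float], q: Sequence[float]) -> bool:
    """True if p is at least as good as q everywhere and strictly better somewhere."""
    return all(x >= y for x, y in zip(p, q)) and any(x > y for x, y in zip(p, q))


def pareto_front(cands: List[Candidate]) -> List[Candidate]:
    """Keep candidates whose (objective, behavior) scores no other candidate dominates."""
    return [c for c in cands if not any(dominates(o[0], c[0]) for o in cands if o is not c)]


def search(objectives: List[Vector], behaviors: List[Vector],
           k: int, iters: int, seed: int = 0) -> List[Candidate]:
    """Random search over labelings (a stand-in for an evolutionary algorithm)."""
    rng = random.Random(seed)
    cands: List[Candidate] = []
    for _ in range(iters):
        labels = [rng.randrange(k) for _ in objectives]
        if len(set(labels)) < 2:
            continue
        scores = (silhouette(objectives, labels), silhouette(behaviors, labels))
        cands.append((scores, labels))
    return pareto_front(cands)
```

A front with several members is itself informative: it shows clusterings that look clean in return space but mix up behaviors, and vice versa. This matches the thread's point that policies with similar trade-offs can behave very differently.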

2/3

@rohanpaul_ai

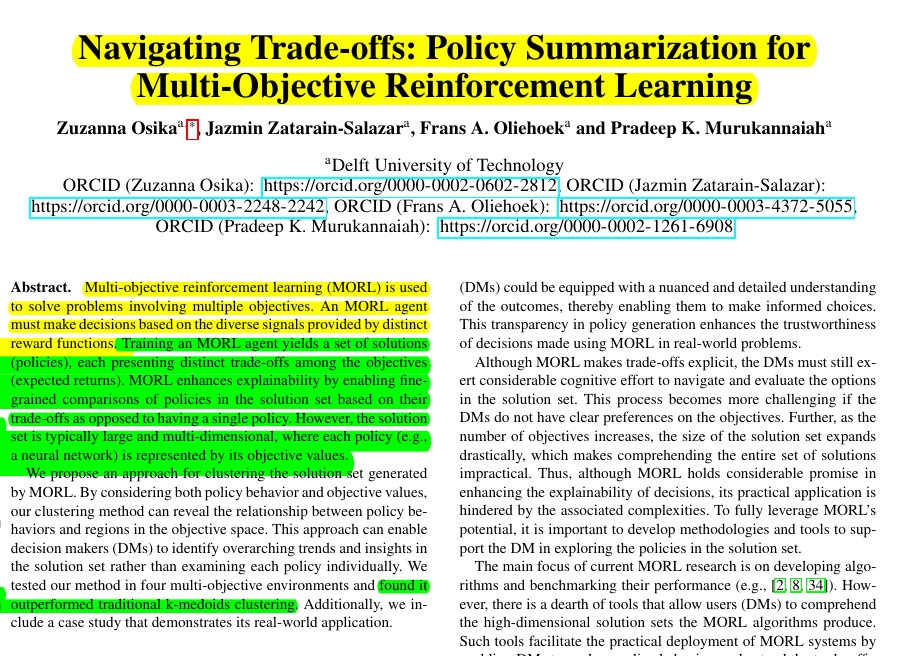

Paper Title: "Navigating Trade-offs: Policy Summarization for Multi-Objective Reinforcement Learning"

Below is a podcast on this paper, generated with Google's Illuminate.

https://video.twimg.com/ext_tw_video/1861197491238211584/pu/vid/avc1/1080x1080/56yXAj4Toyxny-Ic.mp4

3/3

@rohanpaul_ai

[2411.04784v1] Navigating Trade-offs: Policy Summarization for Multi-Objective Reinforcement Learning
