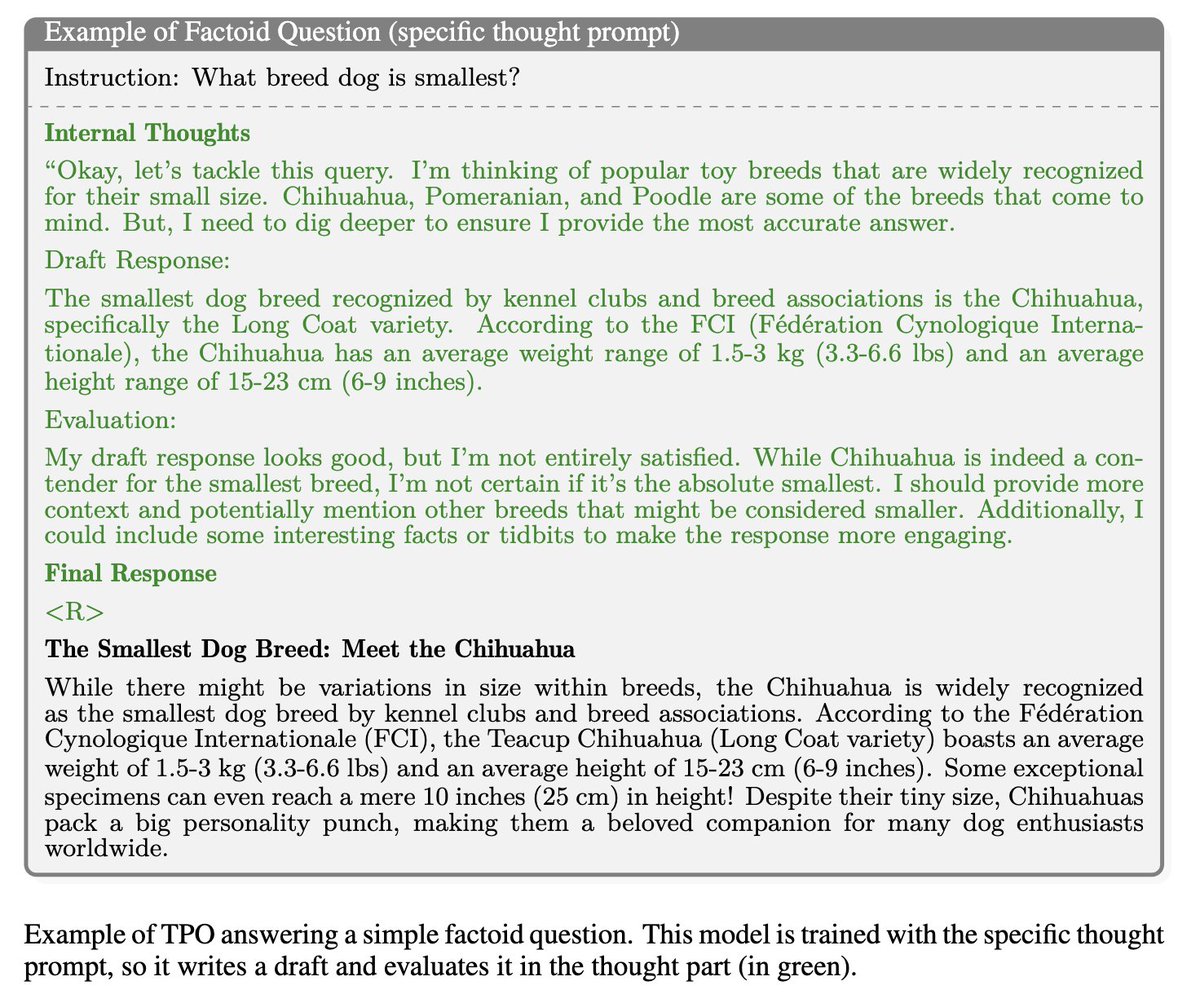

1/5

@AdinaYakup

New open text-image model from the Chinese community

New open text-image model from the Chinese community

CogView3-Plus-3B a DiT based text-to-image generation model, released by @ChatGLM

a DiT based text-to-image generation model, released by @ChatGLM

Model: THUDM/CogView3-Plus-3B · Hugging Face

Demo: CogView3-Plus-3B - a Hugging Face Space by THUDM-HF-SPACE

Supports image generation from 512 to 2048px

Supports image generation from 512 to 2048px

Uses Zero-SNR noise and text-image attention to reduce costs

Uses Zero-SNR noise and text-image attention to reduce costs

Apache 2.0

Apache 2.0

2/5

@AdinaYakup

3/5

@gerardsans

It is crucial for anyone who is drawn to OpenAI's anthropomorphic narrative to recognise the ethical and safety risks it creates, as well as the organisation's lack of accountability and transparency.

AI Chatbots: Illusion of Intelligence

4/5

@AdinaYakup

Great blog! Thanks for sharing.

You might also find what our chief of ethics @mmitchell_ai mentioned recently interesting

[Quoted tweet]

The idea of "superhuman" AGI is inherently problematic. A different approach deeply contends with the *specific tasks* where technology might be useful.

Below: Some of my Senate testimony on the topic.

Disclosure: Thankful I can work on this @huggingface

https://video.twimg.com/ext_tw_video/1844785440790048768/pu/vid/avc1/720x720/lppXTsH_eVaKITcA.mp4

5/5

@JuiceEng

Really impressive, I've been waiting for a more efficient text-to-image generation model. CogView3-Plus-3B's use of Zero-SNR noise and text-image attention is a great step forward.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@AdinaYakup

CogView3-Plus-3B

Model: THUDM/CogView3-Plus-3B · Hugging Face

Demo: CogView3-Plus-3B - a Hugging Face Space by THUDM-HF-SPACE

2/5

@AdinaYakup

3/5

@gerardsans

It is crucial for anyone who is drawn to OpenAI's anthropomorphic narrative to recognise the ethical and safety risks it creates, as well as the organisation's lack of accountability and transparency.

AI Chatbots: Illusion of Intelligence

4/5

@AdinaYakup

Great blog! Thanks for sharing.

You might also find what our chief of ethics @mmitchell_ai mentioned recently interesting

[Quoted tweet]

The idea of "superhuman" AGI is inherently problematic. A different approach deeply contends with the *specific tasks* where technology might be useful.

Below: Some of my Senate testimony on the topic.

Disclosure: Thankful I can work on this @huggingface

https://video.twimg.com/ext_tw_video/1844785440790048768/pu/vid/avc1/720x720/lppXTsH_eVaKITcA.mp4

5/5

@JuiceEng

Really impressive, I've been waiting for a more efficient text-to-image generation model. CogView3-Plus-3B's use of Zero-SNR noise and text-image attention is a great step forward.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/5

@zRdianjiao

CogView3 Diffusers version from ZhipuAI @ChatGLM is complete and the PR is in the process of being merged!

Thanks to @aryanvs_ @RisingSayak @huggingface for the support.

The online demo is live, feel free to try it out!

THUDM/CogView3-Plus-3B · Hugging Face

CogView3-Plus-3B - a Hugging Face Space by THUDM-HF-SPACE

2/5

@j6sp5r

The results are cute!

But what's the key difference? Generation took a long time and the same prompt looks good in flux, too.

"Girl riding her mountain bike down a giant cake chased by candy monsters"

3/5

@zRdianjiao

We used 50 steps in demo, which resulted in a longer time. Additionally, on the same A100 machine (the same as Zero), the speed would be a bit faster, reaching 2-3 step per second

4/5

@j6sp5r

Is there a way to avoid the burnt oversaturated look?

5/5

@anushkmittal

nice work. what are the key improvements in this version?

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@zRdianjiao

CogView3 Diffusers version from ZhipuAI @ChatGLM is complete and the PR is in the process of being merged!

Thanks to @aryanvs_ @RisingSayak @huggingface for the support.

The online demo is live, feel free to try it out!

THUDM/CogView3-Plus-3B · Hugging Face

CogView3-Plus-3B - a Hugging Face Space by THUDM-HF-SPACE

2/5

@j6sp5r

The results are cute!

But what's the key difference? Generation took a long time and the same prompt looks good in flux, too.

"Girl riding her mountain bike down a giant cake chased by candy monsters"

3/5

@zRdianjiao

We used 50 steps in demo, which resulted in a longer time. Additionally, on the same A100 machine (the same as Zero), the speed would be a bit faster, reaching 2-3 step per second

4/5

@j6sp5r

Is there a way to avoid the burnt oversaturated look?

5/5

@anushkmittal

nice work. what are the key improvements in this version?

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

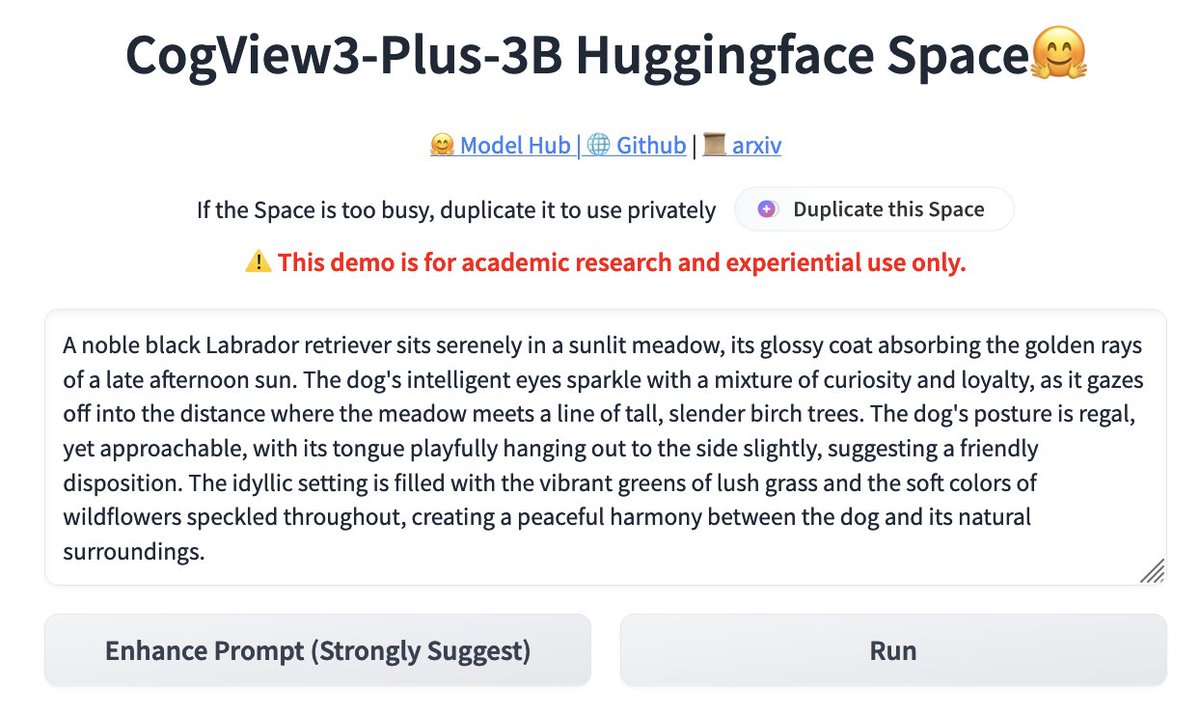

1/4

@Gradio

CogView-3-Plus is live now!

Text-to-image model. Uses DiT framework for performance improvements. Compared to the MMDiT structure, it effectively reduces training and inference costs. App built with Gradio 5.

App built with Gradio 5.

2/4

@Gradio

CogView3-Plus-3B is live on Huggingface Space

CogView3-Plus-3B - a Hugging Face Space by THUDM-HF-SPACE

3/4

@DaviesTechAI

just tested CogView-3-Plus - performance boost is noticeable, impressive work on reducing training and inference costs

4/4

@bate5a55

Interesting that CogView-3-Plus's DiT framework uses a dual-path processing method, handling text and image tokens simultaneously—enhancing generation speed over MMDiT.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@Gradio

CogView-3-Plus is live now!

Text-to-image model. Uses DiT framework for performance improvements. Compared to the MMDiT structure, it effectively reduces training and inference costs.

2/4

@Gradio

CogView3-Plus-3B is live on Huggingface Space

CogView3-Plus-3B - a Hugging Face Space by THUDM-HF-SPACE

3/4

@DaviesTechAI

just tested CogView-3-Plus - performance boost is noticeable, impressive work on reducing training and inference costs

4/4

@bate5a55

Interesting that CogView-3-Plus's DiT framework uses a dual-path processing method, handling text and image tokens simultaneously—enhancing generation speed over MMDiT.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/4

@aigclink

智谱开源了他的新一代文生图模型 CogView3-Plus,支持从512到2048px图像生成

github:GitHub - THUDM/CogView3: text to image to generation: CogView3-Plus and CogView3(ECCV 2024)

模型:THUDM/CogView3-Plus-3B · Hugging Face

2/4

@jasonboshi

The “Finger problem”!

3/4

@lambdawins

文生图的开源越来越丰富

4/4

@Sandra727557468

Matt Cec

Cec illia

illia

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@aigclink

智谱开源了他的新一代文生图模型 CogView3-Plus,支持从512到2048px图像生成

github:GitHub - THUDM/CogView3: text to image to generation: CogView3-Plus and CogView3(ECCV 2024)

模型:THUDM/CogView3-Plus-3B · Hugging Face

2/4

@jasonboshi

The “Finger problem”!

3/4

@lambdawins

文生图的开源越来越丰富

4/4

@Sandra727557468

Matt

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196