1/2

Today we’re premiering Meta Movie Gen: the most advanced media foundation models to date.

Developed by AI research teams at Meta, Movie Gen delivers state-of-the-art results across a range of capabilities. We’re excited for the potential of this line of research to usher in entirely new possibilities for casual creators and creative professionals alike.

More details and examples of what Movie Gen can do: Meta Movie Gen - the most advanced media foundation AI models

Movie Gen models and capabilities

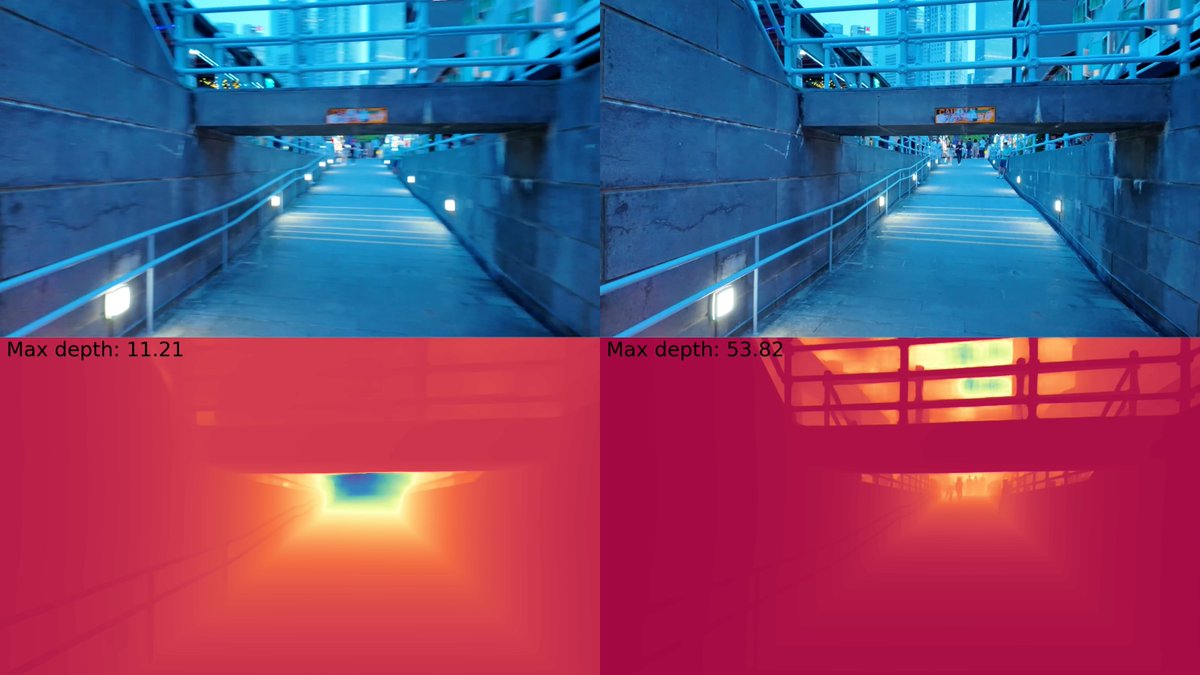

Movie Gen Video: A 30B-parameter transformer model that can generate high-quality, high-definition images and videos from a single text prompt.

Movie Gen Audio: A 13B-parameter transformer model that takes a video as input, along with optional text prompts for controllability, and generates high-fidelity audio synced to the video. It can generate ambient sound, instrumental background music and foley sound, delivering state-of-the-art results in audio quality, video-to-audio alignment and text-to-audio alignment.

Precise video editing: Given a generated or existing video and accompanying text instructions as input, the model can perform localized edits, such as adding, removing or replacing elements, or global changes, such as changing the background or style.

Personalized videos: Using an image of a person and a text prompt, the model can generate a video with state-of-the-art results on character preservation and natural movement in video.

We’re continuing to work closely with creative professionals from across the field to integrate their feedback as we work towards a potential release. We look forward to sharing more on this work and the creative possibilities it will enable in the future.

2/2

In keeping with our continued belief in open science and our commitment to advancing the state of the art in media generation, we’ve published more details on Movie Gen in a new research paper for the academic community: https://go.fb.me/toz71j

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/2

More examples of what Meta Movie Gen can do across video generation, precise video editing, personalized video generation and audio generation.

2/2

We’ve shared more details on the models and Movie Gen capabilities in a new blog post: How Meta Movie Gen could usher in a new AI-enabled era for content creators

https://reddit.com/link/1fvyvr9/video/v6ozewbtoqsd1/player

Generate videos from text

Edit video with text

Produce personalized videos

Create sound effects and soundtracks

Paper: Movie Gen: A Cast of Media Foundation Models

https://ai.meta.com/static-resource/movie-gen-research-paper

Source: AI at Meta on X