A new way to build neural networks could make AI more understandable

The simplified approach makes it easier to see how neural networks produce the outputs they do.

By Anil Ananthaswamy

August 30, 2024

Stephanie Arnett/ MIT Technology Review | Envato

A tweak to the way artificial neurons work in neural networks could make AIs easier to decipher.

Artificial neurons—the fundamental building blocks of deep neural networks—have survived almost unchanged for decades. While these networks give modern artificial intelligence its power, they are also inscrutable.

Existing artificial neurons, used in large language models like GPT-4, work by taking in a large number of inputs, adding them together, and converting the sum into an output using another mathematical operation inside the neuron. Combinations of such neurons make up neural networks, and their combined workings can be difficult to decode.

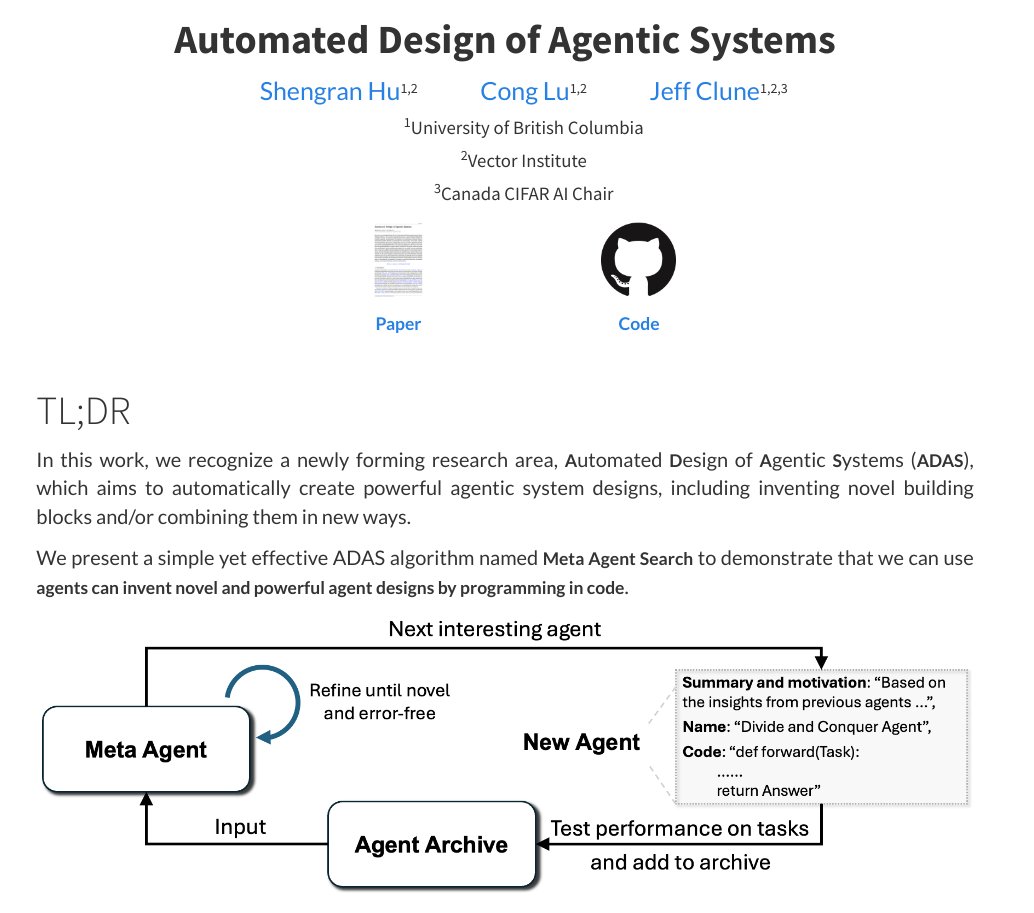

But the new way to combine neurons works a little differently. Some of the complexity of the existing neurons is both simplified and moved outside the neurons. Inside, the new neurons simply sum up their inputs and produce an output, without the need for the extra hidden operation. Networks of such neurons are called Kolmogorov-Arnold Networks (KANs), after the Russian mathematicians who inspired them.

The simplification, studied in detail by a group led by researchers at MIT, could make it easier to understand why neural networks produce certain outputs, help verify their decisions, and even probe for bias. Preliminary evidence also suggests that as KANs are made bigger, their accuracy increases faster than networks built of traditional neurons.

“It's interesting work,” says Andrew Wilson, who studies the foundations of machine learning at New York University. “It's nice that people are trying to fundamentally rethink the design of these [networks].”

The basic elements of KANs were actually proposed in the 1990s, and researchers kept building simple versions of such networks. But the MIT-led team has taken the idea further, showing how to build and train bigger KANs, performing empirical tests on them, and analyzing some KANs to demonstrate how their problem-solving ability could be interpreted by humans. “We revitalized this idea,” says team member Ziming Liu, a PhD student in Max Tegmark’s lab at MIT. “And, hopefully, with the interpretability… we [may] no longer [have to] think neural networks are black boxes.”

While it's still early days, the team’s work on KANs is attracting attention. GitHub pages have sprung up that show how to use KANs for myriad applications, such as image recognition and solving fluid dynamics problems.

Finding the formula

The current advance came when Liu and colleagues at MIT, Caltech, and other institutes were trying to understand the inner workings of standard artificial neural networks.

Today, almost all types of AI, including those used to build large language models and image recognition systems, include sub-networks known as multilayer perceptrons (MLPs). In an MLP, artificial neurons are arranged in dense, interconnected “layers.” Each neuron has within it something called an “activation function”: a mathematical operation that takes in a bunch of inputs and transforms them in some pre-specified manner into an output.

In an MLP, each artificial neuron receives inputs from all the neurons in the previous layer and multiplies each input with a corresponding “weight” (a number signifying the importance of that input). These weighted inputs are added together and fed to the activation function inside the neuron to generate an output, which is then passed on to neurons in the next layer. An MLP learns to distinguish between images of cats and dogs, for example, by choosing the correct values for the weights of the inputs for all the neurons. Crucially, the activation function is fixed and doesn’t change during training.
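
The weighted-sum-then-activate step described above can be sketched in a few lines of Python with NumPy. This is a minimal illustration, not code from the researchers: the function names `relu` and `mlp_layer`, the choice of ReLU as the fixed activation function, and the hand-picked weights are all assumptions made for the example.

```python
import numpy as np

def relu(z):
    # A fixed activation function: it never changes during training.
    return np.maximum(z, 0.0)

def mlp_layer(x, W, activation=relu):
    # Each row of W holds the weights one neuron applies to its inputs.
    # W @ x computes every neuron's weighted sum in one step.
    return activation(W @ x)

# Hypothetical numbers: three inputs feeding a layer of two neurons.
x = np.array([1.0, -2.0, 0.5])
W = np.array([[0.5, -0.25, 1.0],
              [-1.0, 0.5, 2.0]])

out = mlp_layer(x, W)
print(out)  # first neuron: 0.5 + 0.5 + 0.5 = 1.5; second: -1.0, clipped to 0.0
```

Training would adjust only the numbers in `W`; the `relu` function stays the same throughout.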

Once trained, all the neurons of an MLP and their connections taken together essentially act as another function that takes an input (say, tens of thousands of pixels in an image) and produces the desired output (say, 0 for cat and 1 for dog). Understanding what that function looks like, meaning its mathematical form, is an important part of being able to understand why it produces some output. For example, why does it tag someone as creditworthy given inputs about their financial status? But MLPs are black boxes. Reverse-engineering the network is nearly impossible for complex tasks such as image recognition.

And even when Liu and colleagues tried to reverse-engineer an MLP for simpler tasks that involved bespoke “synthetic” data, they struggled.

“If we cannot even interpret these synthetic datasets from neural networks, then it's hopeless to deal with real-world data sets,” says Liu. “We found it really hard to try to understand these neural networks. We wanted to change the architecture.”

Mapping the math

The main change was to remove the fixed activation function and introduce a much simpler learnable function to transform each incoming input before it enters the neuron.

Unlike the activation function in an MLP neuron, which takes in numerous inputs, each simple function outside the KAN neuron takes in one number and spits out another number. Now, during training, instead of learning the individual weights, as happens in an MLP, the KAN just learns how to represent each simple function. In a paper posted this year on the preprint server arXiv, Liu and colleagues showed that these simple functions outside the neurons are much easier to interpret, making it possible to reconstruct the mathematical form of the function being learned by the entire KAN.
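
To make the contrast concrete, here is a rough sketch of a KAN-style layer in Python with NumPy. The article does not say how each simple function is represented, so this example assumes the simplest option: a piecewise-linear curve defined by its values at a few fixed grid points. The names `GRID`, `edge_function`, and `kan_layer` are hypothetical, and the values are set by hand rather than learned.

```python
import numpy as np

# Assumed representation: every edge function is a piecewise-linear curve
# through five shared grid points at -2, -1, 0, 1, 2.
GRID = np.linspace(-2.0, 2.0, 5)

def edge_function(x, values):
    # One number in, one number out. `values` are the learnable parameters:
    # the height of the curve at each grid point.
    return np.interp(x, GRID, values)

def kan_layer(x, edge_values):
    # edge_values[j][i] describes the function on the edge from input i
    # to output neuron j. The neuron itself only sums what arrives.
    return np.array([
        sum(edge_function(x_i, vals) for x_i, vals in zip(x, row))
        for row in edge_values
    ])

# Hand-set example: one output neuron computing roughly x0**2 + x1.
square = GRID ** 2       # curve heights matching x**2 at the grid points
identity = GRID.copy()   # curve heights matching x itself
edge_values = np.array([[square, identity]])  # shape (1, 2, 5)

out = kan_layer(np.array([1.0, 0.5]), edge_values)
print(out)  # 1.0**2 + 0.5 = 1.5
```

Because each edge carries a curve over a single variable, it can be plotted and compared with familiar functions such as a square or a sine, which is the kind of inspection the article describes. In this sketch, training would adjust the `values` arrays rather than a table of weights.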

The team, however, has only tested the interpretability of KANs on simple, synthetic data sets, not on real-world problems, such as image recognition, which are more complicated. “[We are] slowly pushing the boundary,” says Liu. “Interpretability can be a very challenging task.”

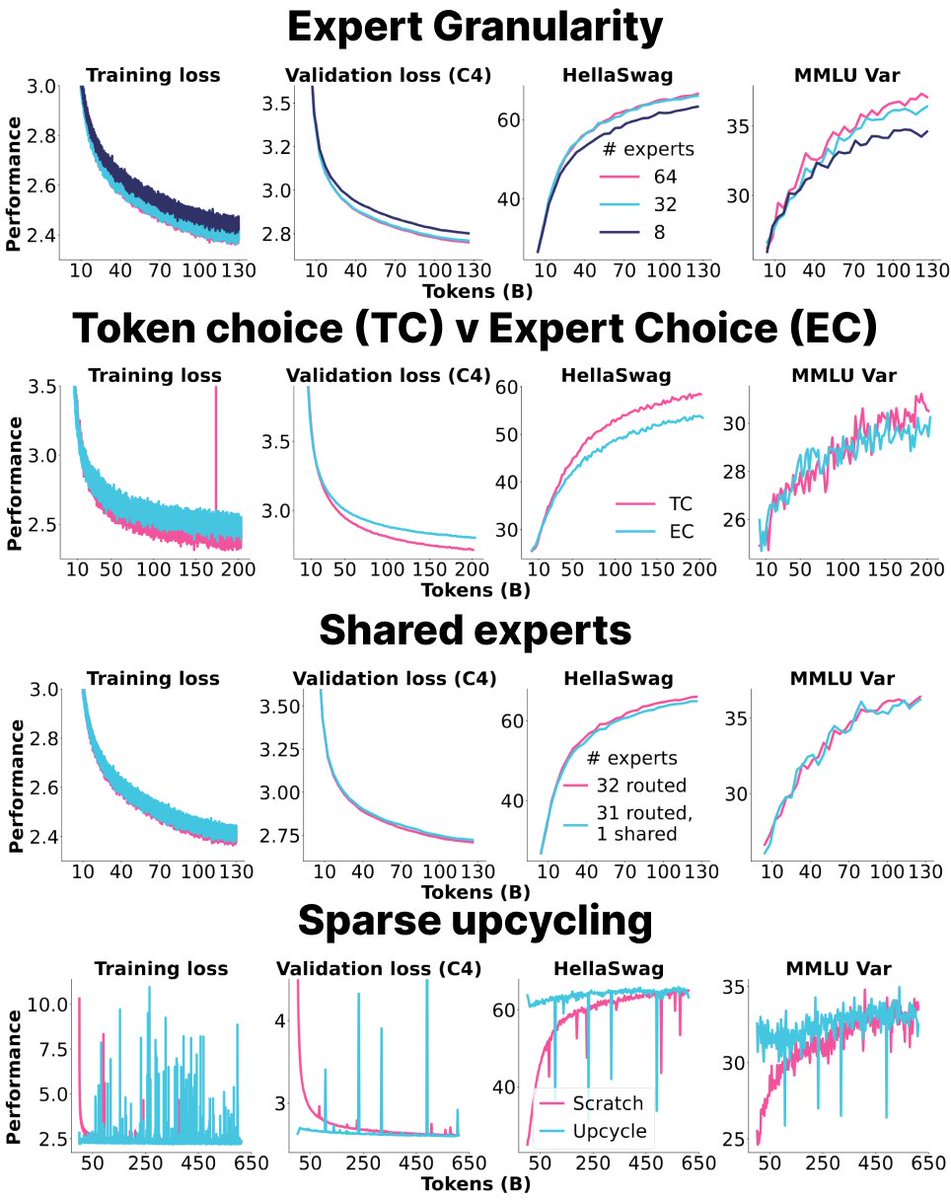

Liu and colleagues have also shown that as KANs grow in size, they become more accurate at their tasks faster than MLPs do. The team proved the result theoretically and showed it empirically for science-related tasks (such as learning to approximate functions relevant to physics). “It's still unclear whether this observation will extend to standard machine learning tasks, but at least for science-related tasks, it seems promising,” Liu says.

Liu acknowledges that KANs come with one important downside: training a KAN takes more time and compute power than training an MLP.

“This limits the application efficiency of KANs on large-scale data sets and complex tasks,” says Di Zhang, of Xi’an Jiaotong-Liverpool University in Suzhou, China. But he suggests that more efficient algorithms and hardware accelerators could help.

Anil Ananthaswamy is a science journalist and author who writes about physics, computational neuroscience, and machine learning. His new book, WHY MACHINES LEARN: The Elegant Math Behind Modern AI, was published by Dutton (Penguin Random House US) in July.