RealFill: Reference-Driven Generation for Authentic Image Completion

RealFill: Reference-Driven Generation for Authentic Image Completion

realfill.github.io

RealFill

Reference-Driven Generation for Authentic Image Completion

Luming Tang1,2, Nataniel Ruiz1, Qinghao Chu1, Yuanzhen Li1, Aleksander Holynski1, David E. Jacobs1,Bharath Hariharan2, Yael Pritch1, Neal Wadhwa1, Kfir Aberman1, Michael Rubinstein1

1Google Research, 2Cornell University

arXiv

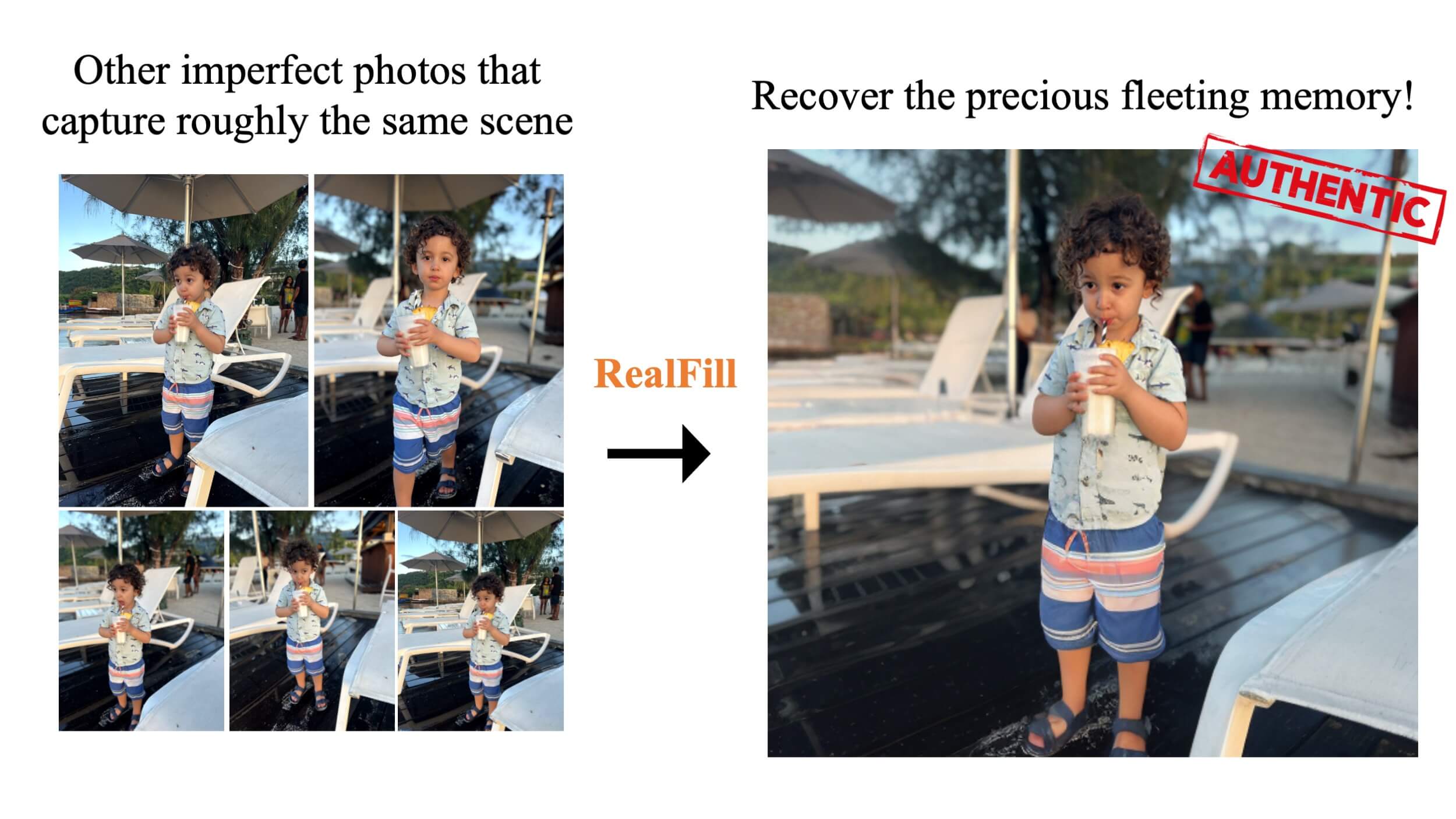

RealFill is able to complete the image with what should have been there.

Abstract

Recent advances in generative imagery have brought forth outpainting and inpainting models that can produce high-quality, plausible image content in unknown regions, but the content these models hallucinate is necessarily inauthentic, since the models lack sufficient context about the true scene. In this work, we propose RealFill, a novel generative approach for image completion that fills in missing regions of an image with the content that should have been there. RealFill is a generative inpainting model that is personalized using only a few reference images of a scene. These reference images do not have to be aligned with the target image, and can be taken with drastically varying viewpoints, lighting conditions, camera apertures, or image styles. Once personalized, RealFill is able to complete a target image with visually compelling contents that are faithful to the original scene. We evaluate RealFill on a new image completion benchmark that covers a set of diverse and challenging scenarios, and find that it outperforms existing approaches by a large margin.Method

Authentic Image Completion: Given a few reference images (up to five) and one target image that captures roughly the same scene (but in a different arrangement or appearance), we aim to fill missing regions of the target image with high-quality image content that is faithful to the originally captured scene. Note that for the sake of practical benefit, we focus particularly on the more challenging, unconstrained setting in which the target and reference images may have very different viewpoints, environmental conditions, camera apertures, image styles, or even moving objects.RealFill: For a given scene, we first create a personalized generative model by fine-tuning a pre-trained inpainting diffusion model on the reference and target images. This fine-tuning process is designed such that the adapted model not only maintains a good image prior, but also learns the contents, lighting, and style of the scene in the input images. We then use this fine-tuned model to fill the missing regions in the target image through a standard diffusion sampling process.

Results

Given the reference images on the left, RealFill is able to either uncrop or inpaint the target image on the right, resulting in high-quality images that are both visually compelling and also faithful to the references, even when there are large differences between references and targets including viewpoint, aperture, lighting, image style, and object motion.Comparison with Baselines

A comparison of RealFill and baseline methods. Transparent white masks are overlayed on the unaltered known regions of the target images.- Paint-by-Example does not achieve high scene fidelity because it relies on CLIP embeddings, which only capture high-level semantic information.

- Stable Diffusion Inpainting produces plausible results, they are inconsistent with the reference images because prompts have limited expressiveness.

In contrast, RealFill generates high-quality results that have high fidelity with respect to the reference images.

Limitations

- RealFill needs to go through a gradient-based fine-tuning process on input images, rendering it relatively slow.

- When viewpoint change between reference and target images is very large, RealFill tends to fail at recovering the 3D scene, especially when there is only a single reference image.

- Because RealFill mainly relies on the image prior inherited from the base pre-trained model, it also fails to handle cases where that are challenging for the base model, such as text for Stable Diffusion.