Stable Diffusion XL 1.0 Base on a Raspberry Pi Zero 2 (or in 298MB of RAM) - GitHub - vitoplantamura/OnnxStream at 846da873570a737b49154e8f835704264864b0fe


UPDATE (OCTOBER 2023)

Added support for Stable Diffusion XL 1.0 Base! And it runs on a RPI Zero 2! Please see the section below

The challenge is to run Stable Diffusion 1.5, which includes a large transformer model with almost 1 billion parameters, on a Raspberry Pi Zero 2, which is a microcomputer with 512MB of RAM, without adding more swap space and without offloading intermediate results on disk. The recommended minimum RAM/VRAM for Stable Diffusion is typically 8GB.

Generally, major machine learning frameworks and libraries focus on minimizing inference latency and/or maximizing throughput, both at the cost of RAM usage. So I decided to write a super small and hackable inference library specifically focused on minimizing memory consumption: OnnxStream.

OnnxStream is based on the idea of decoupling the inference engine from the component responsible for providing the model weights, which is a class derived from WeightsProvider. A WeightsProvider specialization can implement any type of loading, caching and prefetching of the model parameters. For example, a custom WeightsProvider can download its data directly from an HTTP server, without loading or writing anything to disk (hence the word "Stream" in "OnnxStream"). Two default WeightsProviders are available: DiskNoCache and DiskPrefetch.
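To make the decoupling concrete, here is a minimal Python sketch of the idea. It is not the OnnxStream API (the library is C++): the `get` method, the `InMemoryStreamProvider` class and the toy `run_model` engine are all hypothetical names invented for illustration. The point is only that the engine asks a provider for each weight just before using it, so the provider alone decides how loading, caching or prefetching happens.

```python
from abc import ABC, abstractmethod


class WeightsProvider(ABC):
    """Hypothetical interface: returns the raw bytes of one named tensor."""

    @abstractmethod
    def get(self, name: str) -> bytes:
        ...


class InMemoryStreamProvider(WeightsProvider):
    """Stand-in for a streaming source such as an HTTP server: no caching."""

    def __init__(self, source):
        self._source = source
        self.requests = []          # records the order in which weights were asked for

    def get(self, name: str) -> bytes:
        self.requests.append(name)
        return bytes(self._source[name])


def run_model(op_names, provider):
    """Toy engine: runs ops in order, holding only one weight blob at a time."""
    result = 1
    peak_bytes = 0
    for name in op_names:
        weights = provider.get(name)        # fetched just in time
        peak_bytes = max(peak_bytes, len(weights))
        result *= sum(weights)              # placeholder for the real computation
        del weights                         # released before the next op
    return result, peak_bytes


provider = InMemoryStreamProvider({"conv1": [1, 2], "conv2": [3]})
result, peak_bytes = run_model(["conv1", "conv2"], provider)
```

In this sketch the peak weight memory is the size of the largest single blob rather than the sum of all of them, which is the property that a disk-backed or network-backed provider would exploit.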

OnnxStream can consume up to 55x less memory than OnnxRuntime while being only 0.5-2x slower (on CPU, see the Performance section below).

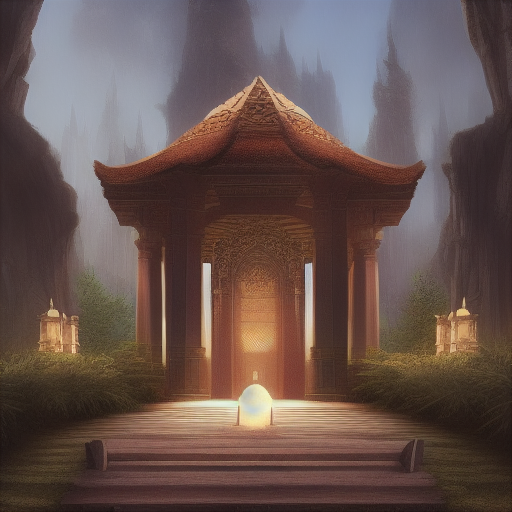

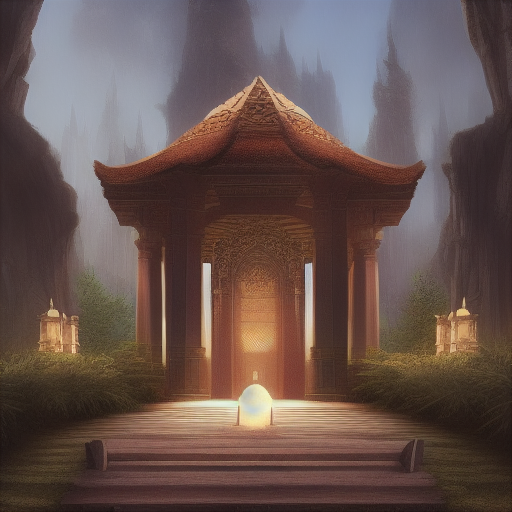

These images were generated by the Stable Diffusion example implementation included in this repo, using OnnxStream, at different precisions of the VAE decoder. The VAE decoder is the only model of Stable Diffusion that could not fit into the RAM of the Raspberry Pi Zero 2 in single or half precision. This is caused by the presence of residual connections and very big tensors and convolutions in the model. The only solution was static quantization (8 bit). The third image was generated by my RPI Zero 2 in about ~~3 hours~~ 1.5 hours (using the MAX_SPEED option when compiling). The first image was generated on my PC using the same latents generated by the RPI Zero 2, for comparison:

VAE decoder in W16A16 precision:

VAE decoder in W8A32 precision:

VAE decoder in W8A8 precision, generated by my RPI Zero 2 in about ~~3 hours~~ 1.5 hours (using the MAX_SPEED option when compiling):

The OnnxStream Stable Diffusion example implementation now supports SDXL 1.0 (without the Refiner). The ONNX files were exported from the SDXL 1.0 implementation of Hugging Face's Diffusers library (version 0.19.3).

SDXL 1.0 is significantly more computationally expensive than SD 1.5. The most significant difference is the ability to generate 1024x1024 images instead of 512x512. To give you an idea, generating a 10-step image with HF's Diffusers takes 26 minutes on my 12-core PC with 32GB of RAM. The minimum recommended VRAM for SDXL is typically 12GB.

OnnxStream can run SDXL 1.0 in less than 300MB of RAM and is therefore able to run it comfortably on a RPI Zero 2, without adding more swap space and without writing anything to disk during inference. Generating a 10-step image takes about 11 hours on my RPI Zero 2.

The same set of optimizations for SD 1.5 has been used for SDXL 1.0, but with the following differences.

As for the UNET model, in order to make it run in less than 300MB of RAM on the RPI Zero 2, UINT8 dynamic quantization is used, but limited to a specific subset of large intermediate tensors.

The situation for the VAE decoder is more complex than for SD 1.5. SDXL 1.0's VAE decoder is 4x the size of SD 1.5's, and consumes 4.4GB of RAM when run with OnnxStream in FP32 precision.

In the case of SD 1.5, the VAE decoder is statically quantized (UINT8 precision) and this is enough to reduce RAM consumption to 260MB. By contrast, SDXL 1.0's VAE decoder overflows when run with FP16 arithmetic, and the numerical ranges of its activations are too large to get good quality images with UINT8 quantization.

So we are stuck with a model that consumes 4.4GB of RAM, which cannot be run in FP16 precision and which cannot be quantized in UINT8 precision. (NOTE: there is at least one solution to the FP16 problem, but I have not investigated further, since running the VAE decoder in FP16 precision would only halve the total memory consumed, so the model would ultimately consume 2.2GB instead of 4.4GB, which is still way too much for the RPI Zero 2.)

The inspiration for the solution came from the implementation of the VAE decoder in Hugging Face's Diffusers library, i.e. using tiled decoding. The final result is absolutely indistinguishable from an image decoded by the full decoder, and in this way it is possible to reduce RAM consumption from 4.4GB to 298MB!

The idea is simple. The result of the diffusion process is a tensor with shape (1,4,128,128). This tensor is split into 5x5 (therefore 25) overlapping tiles with shape (1,4,32,32), which are decoded separately. Each tile overlaps the tile to its left and the tile above it by 25%. Decoding a tile yields a tensor with shape (1,3,256,256), which is then appropriately blended into the final image.
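The tile arithmetic can be checked with a short Python sketch. The sizes (128, 32, 25% overlap, 256-pixel decoded tiles) come from the paragraph above; the function names and the linear cross-fade used for blending are assumptions for illustration, shown along one axis only, and are not taken from the OnnxStream source.

```python
def tile_origins(size=128, tile=32, overlap=0.25):
    """Start offsets of the tiles along one axis of the latent tensor."""
    stride = int(tile * (1 - overlap))              # 24 latent cells between tile starts
    return list(range(0, size - tile + 1, stride))


def blend_1d(tiles, starts, overlap_px, total):
    """Cross-fade decoded tiles along one axis (assumed linear ramp)."""
    out = [0.0] * total
    for index, (values, start) in enumerate(zip(tiles, starts)):
        for i, value in enumerate(values):
            pos = start + i
            if index > 0 and i < overlap_px:
                # Inside the overlap with the previous tile: fade from old to new.
                alpha = i / overlap_px
                out[pos] = out[pos] * (1 - alpha) + value * alpha
            else:
                out[pos] = value
    return out


origins = tile_origins()                            # latent-space offsets
pixel_starts = [o * 8 for o in origins]             # a 32-cell tile decodes to 256 pixels
tiles = [[float(t)] * 256 for t in range(len(origins))]   # dummy constant tiles
row = blend_1d(tiles, pixel_starts, overlap_px=64, total=1024)
```

With a stride of 24 the last tile starts at 96 and ends exactly at 128, which is why 5 tiles per axis are enough. In pixel space the same layout gives a stride of 192 and a 64-pixel overlap, and the cross-fade is what hides the seams between neighbouring tiles.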

For example, this is an image generated by the tiled decoder with blending manually turned off in the code.

You can clearly see the tiles in the image:

While this is the same image with blending turned on.

This is the final result:

This is another image generated by my RPI Zero 2 in about 11 hours: (10 steps, Euler Ancestral)

- Inference engine decoupled from the WeightsProvider

- WeightsProvider can be DiskNoCache, DiskPrefetch or custom

- Attention slicing

- Dynamic quantization (8 bit unsigned, asymmetric, percentile)

- Static quantization (W8A8 unsigned, asymmetric, percentile)

- Easy calibration of a quantized model

- FP16 support (with or without FP16 arithmetic)

- 25 ONNX operators implemented (the most common)

- Operations executed sequentially but all operators are multithreaded

- Single implementation file + header file

- XNNPACK calls wrapped in the XnnPack class (for future replacement)
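As a rough illustration of the "8 bit unsigned, asymmetric, percentile" wording in the list above, the following Python sketch quantizes a list of floats to UINT8. It is a generic textbook formulation under stated assumptions, not OnnxStream's implementation: the function names, the default percentile value and the choice to force zero into the range are all hypothetical. "Dynamic" here means the range is computed from the tensor at inference time; a static scheme would use ranges recorded during calibration instead.

```python
def quantize_uint8(values, percentile=0.999):
    """Asymmetric UINT8 quantization with a percentile-clipped range."""
    ordered = sorted(values)
    last = len(ordered) - 1
    # Clip the range at the chosen percentiles so outliers do not stretch it.
    low = min(ordered[int((1 - percentile) * last)], 0.0)
    high = max(ordered[int(percentile * last)], 0.0)
    scale = (high - low) / 255 or 1.0               # avoid a zero scale
    zero_point = max(0, min(255, round(-low / scale)))
    quantized = [max(0, min(255, round(v / scale) + zero_point)) for v in values]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Map UINT8 codes back to approximate float values."""
    return [(q - zero_point) * scale for q in quantized]


data = [i / 10 for i in range(-50, 151)]            # -5.0 .. 15.0
codes, scale, zero_point = quantize_uint8(data, percentile=1.0)
restored = dequantize(codes, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(data, restored))

# One large outlier barely changes the scale when the range is percentile-clipped.
codes_o, scale_o, _ = quantize_uint8(data + [1000.0], percentile=0.99)
```

The asymmetric zero point lets a range such as [-5, 15] use all 256 codes, and percentile clipping trades saturation of a few outliers for finer resolution everywhere else.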

OnnxStream depends on XNNPACK for some (accelerated) primitives: MatMul, Convolution, element-wise Add/Sub/Mul/Div, Sigmoid and Softmax.

Stable Diffusion consists of three models: a text encoder (672 operations and 123 million parameters), the UNET model (2050 operations and 854 million parameters) and the VAE decoder (276 operations and 49 million parameters). Assuming that the batch size is equal to 1, a full image generation with 10 steps, which yields good results (with the Euler Ancestral scheduler), requires 2 runs of the text encoder, 20 (i.e. 2*10) runs of the UNET model and 1 run of the VAE decoder.

This table shows the various inference times of the three models of Stable Diffusion, together with the memory consumption (i.e. the Peak Working Set Size in Windows or the Maximum Resident Set Size in Linux).

| Model / Library | 1st run | 2nd run | 3rd run |
|---|---|---|---|
| FP16 UNET / OnnxStream | 0.133 GB - 18.2 secs | 0.133 GB - 18.7 secs | 0.133 GB - 19.8 secs |
| FP16 UNET / OnnxRuntime | 5.085 GB - 12.8 secs | 7.353 GB - 7.28 secs | 7.353 GB - 7.96 secs |
| FP32 Text Enc / OnnxStream | 0.147 GB - 1.26 secs | 0.147 GB - 1.19 secs | 0.147 GB - 1.19 secs |
| FP32 Text Enc / OnnxRuntime | 0.641 GB - 1.02 secs | 0.641 GB - 0.06 secs | 0.641 GB - 0.07 secs |
| FP32 VAE Dec / OnnxStream | 1.004 GB - 20.9 secs | 1.004 GB - 20.6 secs | 1.004 GB - 21.2 secs |
| FP32 VAE Dec / OnnxRuntime | 1.330 GB - 11.2 secs | 2.026 GB - 10.1 secs | 2.026 GB - 11.1 secs |

In the case of the UNET model (when run in FP16 precision, with FP16 arithmetic enabled in OnnxStream), OnnxStream can consume even 55x less memory than OnnxRuntime while being 0.5-2x slower.
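The UNET figures quoted above can be recomputed from the table with a few lines of Python. The numbers are copied from the two FP16 UNET rows; the variable names are just for this sketch.

```python
# (memory in GB, time in seconds) for the three runs of the FP16 UNET
onnxstream = [(0.133, 18.2), (0.133, 18.7), (0.133, 19.8)]
onnxruntime = [(5.085, 12.8), (7.353, 7.28), (7.353, 7.96)]

# How many times more memory OnnxRuntime uses, per run
memory_ratios = [rt_mem / os_mem
                 for (os_mem, _), (rt_mem, _) in zip(onnxstream, onnxruntime)]

# How many times longer OnnxStream takes, per run
time_ratios = [os_time / rt_time
               for (_, os_time), (_, rt_time) in zip(onnxstream, onnxruntime)]

print([round(r, 1) for r in memory_ratios])
print([round(r, 2) for r in time_ratios])
```

The largest memory ratio comes from the second and third runs, where OnnxRuntime's working set has grown to 7.353 GB, and this is where the 55x figure comes from. The time ratios lie roughly between 1.4 and 2.6, so reading "0.5-2x slower" as the extra time relative to OnnxRuntime is an interpretation on my part rather than something the table states.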
