Harvard Business Publishing Education

AN INSPIRING MINDS SERIES

Student Use Cases for AI

Start by Sharing These Guidelines with Your Class

by Ethan Mollick and Lilach Mollick

September 25, 2023

Getty Images / Shutterstock / HBP Staff

Generative AI tools and the large language models (LLMs) they’re built on create exciting opportunities and pose enormous challenges for teaching and learning. After all, AI can now be ubiquitous in the classroom; every student and educator with a computer and internet has free access to the most powerful AI models in the world. And, like any tool, AI offers both new capabilities and new risks.

To help you explore some of the ways students can use this disruptive new technology to improve their learning, while making your job easier and more effective, we’ve written a series of articles that examine the following student use cases:

- AI as feedback generator
- AI as personal tutor
- AI as team coach
- AI as learner

For each of these roles, we offer practical recommendations—and a detailed, shareable prompt—for how exactly you can guide students in wielding AI to achieve these ends.

But before you assign or encourage students to use AI, it’s important to first establish some guidelines around properly using these tools. That way, there’s less ambiguity about what students can expect from the AI, from “hallucinations” to privacy concerns.

Since these guidelines can be used generally—and across all four use cases we propose in this series—we wanted to share them in this introductory article. These are the same guidelines we provide our own students; feel free to use or adapt them for your class.

Student guidelines for proper AI use

Understanding LLMs

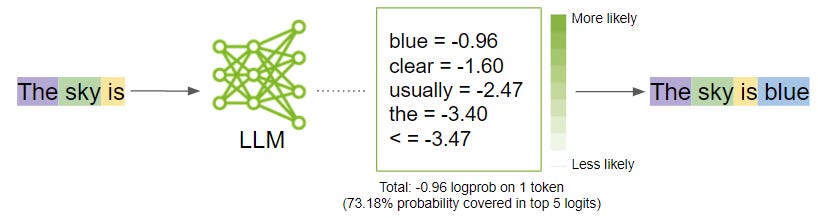

LLMs are trained on vast amounts of content that allows them to predict what word should come next in written text, much like the autocomplete feature in search bars. When you type something (called a prompt) into ChatGPT or another LLM, it tries to extend the prompt logically based on its training. Since LLMs like ChatGPT have been pre-trained on large amounts of information, they’re capable of many tasks across many fields. However, there is no instruction manual that comes with LLMs, so it can be hard to know what tasks they are good or bad at without considerable experience. Keep in mind that LLMs don’t have real understanding and often make mistakes, so it’s up to the user to verify their outputs.

Benefits and challenges of working with LLMs

- Fabrication. AI can lie and produce plausible-sounding but incorrect information. Don’t trust anything it says at face value. If it gives you a number or fact, assume it is wrong unless you either know the answer or can check with another source. You will be responsible for any errors or omissions provided by the tool. It works best for topics you understand and can verify. Larger LLMs (like GPT-4) fabricate less, but all AIs fabricate to some degree.

- AI bias. AI can carry biases, stemming from its training data or human intervention. These biases vary across LLMs and can range from gender and racial biases to biases against particular viewpoints, approaches, or political affiliations. Each LLM has the potential for its own set of biases, and those biases can be subtle. You will need to critically consider answers and be aware of the potential for these sorts of biases.

- Privacy concerns. When data is entered into the AI, it can be used for future training. While ChatGPT offers a privacy mode that claims not to use input there for future AI training, the current state of privacy remains unclear for many models, and the legal implications are often uncertain. Do not share anything with AI that you want to keep private.

Best practices for AI interactions

When interacting with AI, remember the following:

- You are accountable for your own work. Take every piece of advice or explanation given by AI critically and evaluate that advice independently.

- AI is not a person, but it can act like one. It’s very easy to read human intent into AI responses, but AI is not a real person responding to you. It is capable of a lot, but it doesn’t know you or your context. It can also get stuck in a loop, repeating similar content over and over.

- AI is unpredictable. AI has been trained on billions of documents on the web, and it tries to fulfill or respond to your prompt reasonably based on what it has read. But you can’t know ahead of time what it’s going to say. The very same prompt can get a radically different response from the AI each time you use it. That means your classmates may get different responses than you do, and you may get a different response if you try the same prompt more than once.

- You are in charge. If the AI gets stuck in a loop and you’re ready to move on, then direct the AI to do what you’d like.

- Only share what you are comfortable sharing. Do not feel compelled to share anything personal, even if the AI asks. Anything you share may be used as training data for the AI.

- Try another LLM. If the prompt doesn’t work in one LLM, try another. Remember that an AI’s output isn’t consistent and will vary. Take notes and share what worked for you.
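
The unpredictability described above follows from how an LLM produces text: it predicts a likely next word and then picks one with some randomness. The toy sketch below illustrates that idea only. It is not how ChatGPT or any real LLM is built; the tiny corpus, the word-pair counting, and the function names (`train_bigrams`, `next_word`, `complete`) are all assumptions made for this example.

```python
import random
from collections import Counter, defaultdict

# Hypothetical miniature "training data"; real LLMs learn from vastly more text.
CORPUS = (
    "the cat sat on the mat . "
    "the cat ate the fish . "
    "the dog sat on the rug ."
)


def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word(follows, word, rng):
    """Sample a next word in proportion to how often it followed `word`."""
    candidates = follows[word]
    return rng.choices(list(candidates), weights=list(candidates.values()), k=1)[0]


def complete(follows, prompt, length, rng):
    """Extend the prompt one sampled word at a time, like autocomplete."""
    words = prompt.split()
    for _ in range(length):
        if not follows[words[-1]]:  # the model has never seen this word
            break
        words.append(next_word(follows, words[-1], rng))
    return " ".join(words)


model = train_bigrams(CORPUS)
rng = random.Random()  # unseeded on purpose, so repeated runs can differ
for _ in range(3):
    print(complete(model, "the", 5, rng))
```

Running the loop several times with the same prompt ("the") can give a different continuation each time, which is the same effect, at a much smaller scale, as two classmates getting different answers to an identical prompt.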

To communicate more effectively with AI:

- Seek clarity. If something isn’t clear, don’t hesitate to ask the AI to expand its explanation or give you different examples. If you are confused by the AI’s output, ask it to use different wording. You can keep asking until you get what you need. Interact with it naturally, asking questions and pushing back on its answers.

- Provide context. The AI can provide better help if it knows where you’re having trouble. The more context you give it, the more likely it is to be useful to you. It often helps to give the AI a role: “You are a friendly teacher who explains economics concepts to college students in introductory courses,” for example.

- Don’t assume the AI is tracking the conversation. LLMs have limited memory; if it seems to be losing track, remind it of what you need and keep asking it questions.
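
For readers who want to see the last two tips in concrete form, here is a minimal sketch of how a prompt with a role, some context, and a limited memory might be assembled. Everything in it is an assumption for illustration: the `build_messages` helper is hypothetical, the `role`/`content` dictionaries follow a convention common in chat tools rather than any specific product's API, and the word count is a rough stand-in for the token limits real LLMs use.

```python
def build_messages(role, context, history, question, max_words=60):
    """Assemble a chat-style prompt: a role, the student's context,
    as many recent turns as fit, and the new question."""
    system = {"role": "system", "content": role}
    latest = {"role": "user", "content": f"{context}\n\n{question}"}

    # Rough word budget left for earlier turns (an assumption; real
    # systems count tokens, not words).
    budget = max_words - len(role.split()) - len(latest["content"].split())

    # Walk backward from the newest turn and keep turns until the budget
    # runs out. Older turns are dropped: this is the "limited memory".
    kept = []
    for turn in reversed(history):
        cost = len(turn["content"].split())
        if cost > budget:
            break
        kept.append(turn)
        budget -= cost
    kept.reverse()

    return [system, *kept, latest]


history = [
    {"role": "user", "content": "What is price elasticity of demand?"},
    {"role": "assistant", "content": "It measures how much demand changes when price changes."},
]
messages = build_messages(
    role="You are a friendly teacher who explains economics concepts "
         "to college students in introductory courses.",
    context="I understand supply and demand but not elasticity.",
    history=history,
    question="Can you give me a different example?",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

In this sketch, anything that falls outside the budget is simply not sent, so the AI cannot refer to it. That is why restating what you need in your latest message helps when the AI seems to lose track.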

Preparing students to work more effectively with AI

These guidelines help clarify what LLMs are and what students need to know to productively work with these tools. If you choose to share these guidelines, or a version of them, your students will have a better understanding of what to expect when interacting with AI and how to communicate their needs more effectively.

STUDENT USE CASES FOR AI: AN INSPIRING MINDS SERIES

Prologue: Student Guidelines for AI Use

Part 1: AI as Feedback Generator

Part 2: AI as Personal Tutor

Part 3: AI as Team Coach

Part 4: AI as Learner

Now you’re ready to explore the rest of our series on student uses for AI, beginning with “Part 1: AI as Feedback Generator,” which tackles one of educators’ most laborious tasks: giving frequent feedback to students.

From the editors: As you read this series, share with us how you are using generative AI in your classes. What is your experience so far? What are your biggest concerns? What use cases have you found beneficial? We look forward to learning from you.
