Augmenting knowledge workers (consulting, legal, medical, finance, etc.)

Early signs point to many simple back-office, front-office, and even customer-facing tasks being automated completely by LLMs. Adept AI & other startups excel at simple personal and professional tasks, like locating a specific house on Redfin or completing an otherwise click-heavy workflow in Salesforce. In addition to text & webpages, voice is understandable and replicable; for example, Infinitus* automates B2B healthcare calls, verifying benefits and checking on statuses. More specialized knowledge workers - especially those in legal, medical, or consulting professions - can also be made drastically more efficient with LLM-powered tools. However, given the depth of domain-specific knowledge involved, the majority of the higher-stakes workflows I’m referring to here are more likely to be assisted than fully automated in the near term. These knowledge workers will essentially be paying such startups for small (but growing!) tasks to be completed inside of their complicated day-to-day workloads.

Whether drafting a legal document for a transaction or PI case or analyzing a contract for due diligence, some lawyers are already using legal assistant tech to save time. Thomson Reuters, a large incumbent tax & accounting software platform, saw so much potential in Casetext, an AI legal assistant, that they recently acquired it for $650 million. Given gaps in existing legal tech and the ability of LLMs to speed up workflows, there’s an opportunity to build a larger legal software platform from the various initial automation wedges. However, startups must find champions and validate lawyers’ willingness to change personal workflows & ultimately pay for large efficiency gains.

In medicine, doctors can have more leverage with automatic entry of patient data* into their electronic health records after a meeting (especially important with the turnover of medical scribes) as well as automated patient or hospital Q&A through chatbots. Biologists are also already taking advantage of LLM-powered tools to help them find protein candidates faster. Though scaling medical GTM is notoriously challenging, the payoff of saving large amounts of time for these highly educated personas could be immense.

Finally, the consulting industry continues to boom, helping businesses make all kinds of decisions, from pricing models and store placement to inventory & risk management and forecasting. Startups like Arena AI*, Kumo AI, Unearth insights, Intelmatix, Punchcard*, and Taktile use LLMs and other related tech to help many different types and sizes of customers with decision-making. If a startup is able to build a generalizable product with a scalable GTM - so, not just another consulting company - it might be able to eat into some of the large consulting spend as well as the budgets of those who didn't use consultants in the first place.

Digital asset generation for work & for fun

Other types of generative models outside of LLMs (e.g. diffusion models) enable the generation of media other than text, like images, videos, and audio. Whether you draw portraits, edit videos, or make PowerPoint presentations for a living, the current state of generative models can likely help you become more efficient. Separately, if you thought you weren’t skilled enough to create images or songs at all, some AI-powered generation tools may convince you otherwise - similar to how Canva made graphic design more accessible for many non-artists years ago. Startups like Midjourney, Ideogram, Genmo, Tome, Playground, and Can of Soup help users create and share images for professional or personal use. Some may continue to build out enterprise features and challenge Adobe and Microsoft, while others may continue to build out social media engagement & e-commerce capabilities through network effects & ads. Video creators & editors - from Instagram stars to blockbuster special effects artists to L&D professionals - can speed up & reduce the cost of their work with products such as Captions*, Wonder Dynamics, Runway, Hypernatural, and Synthesia*. On the cutting edge of the current generative tech, short-form video (e.g. Pika, Genmo), music (e.g. Oyi Labs, Frequency Labs*, Riffusion), & 3D asset generation (e.g. Sloyd, Rosebud*) show promise, though the longer-term business plans seem less straightforward than those of the image generation and video editing companies. In addition, in some digital asset categories like audio/voice, it seems more challenging to maintain a differentiated product over time, especially as cloud providers expand their offerings. As a final note about this category, the legal and copyright issues are most pronounced here in comparison to other categories, as there are already many lawsuits alleging improper training data and unattributed output.

Personal assistant & coach

I’m convinced that we will eventually have the option for a LLM-powered assistant or coach for the majority of things we do, both at work and in life. I’d personally love a future where wearing some sort of AR device is somewhat socially acceptable, and my device can listen to me talk with a founder, fact-check the conversation in real-time, give me

advice on how I could be more helpful or convincing, and automatically follow-up for me after. In the meantime, tools to help with

writing persuasive emails,

navigating internal knowledge bases*, or

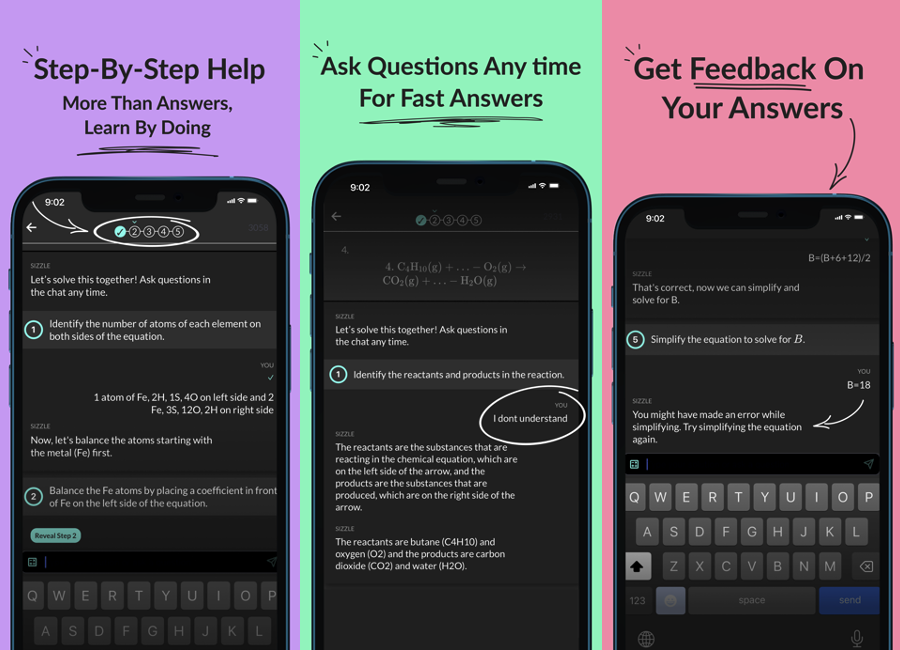

automating common tasks in the browser seem appropriate and ripe for expansion. The current LLM tech can also already perform well enough to help learners,

in school and

out, with personalized educational solutions and conversations. Using large models to create compelling, seamless experiences on mobile is quite challenging given latency & compute requirements, which likely makes great products here harder to copy than meets the eye.

Generative model-related startup application ideas I’m less certain of

Some other SaaS replacements

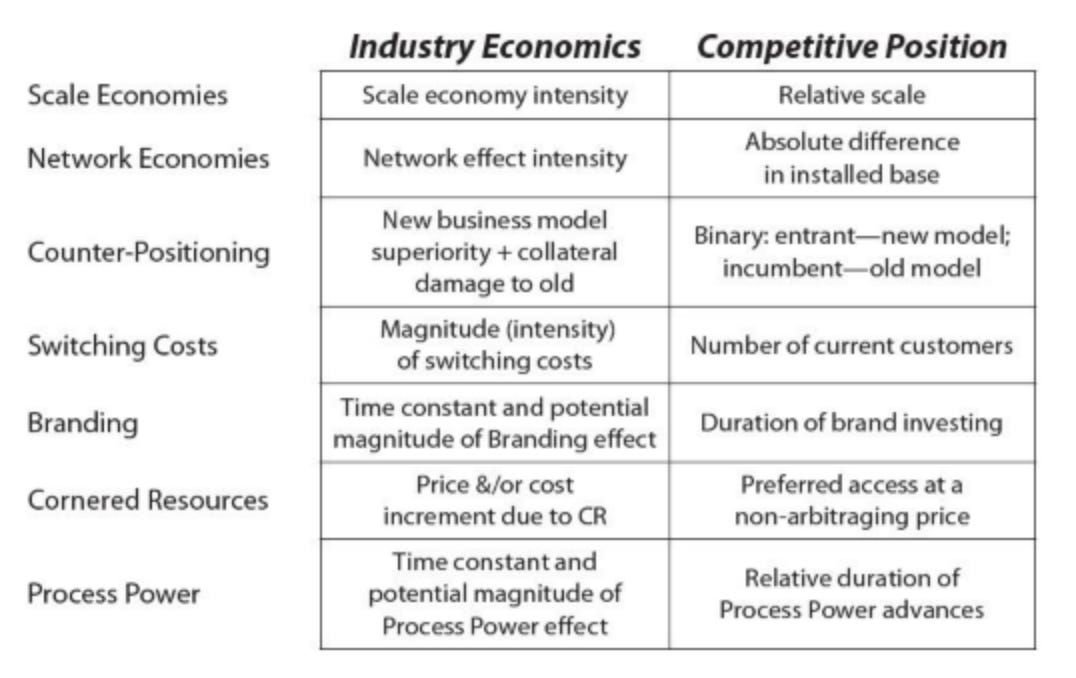

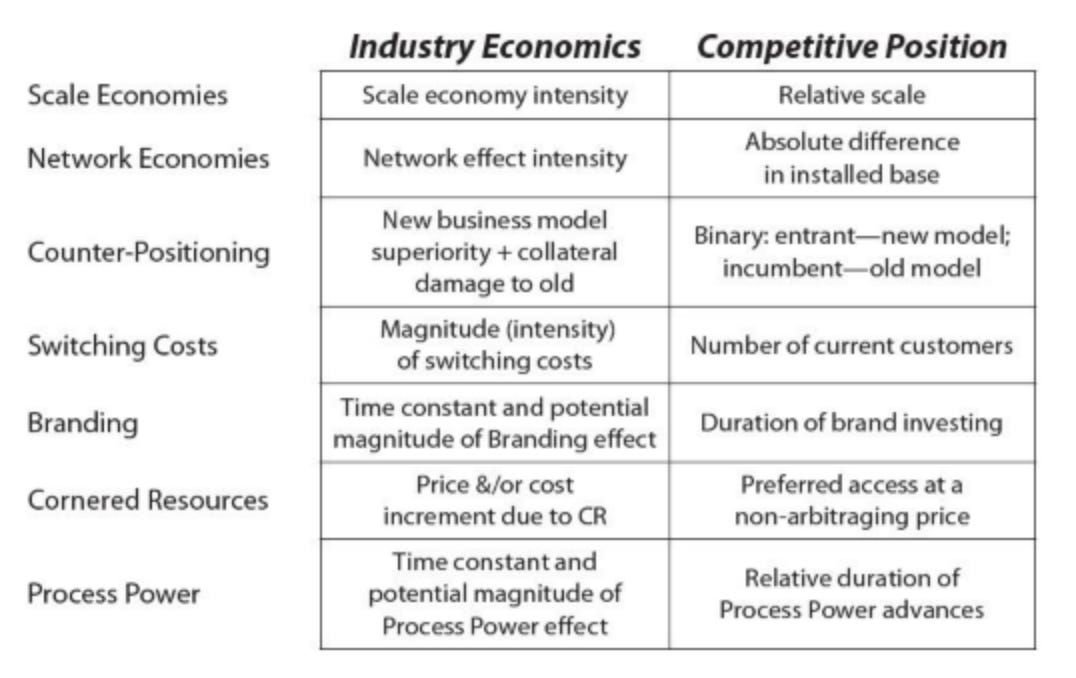

In general, I’m more skeptical of any SaaS disrupter that doesn’t have a strong story against the incumbent and other upstarts in the space. To truly claim LLMs or generative models as the “why now” for a new startup, I’d prefer the existence of some sort of innovator’s dilemma, large product rework, and/or special unattainable resource (e.g. talent) that makes incumbent repositioning challenging. When in doubt, I go to a favorite book of mine, “7 Powers”, on how to build and maintain a moat.