You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

The A.I Megathread (LLM , GPT , Development)

More options

Who Replied?As an AI language model, I do not have personal experience or habits. However, here are some ways in which you can use LLM (Large Language Models) in your daily routine:

1Writing and editing documents: LLM models can help you improve the quality of your writing by providing suggestions for grammar, punctuation, and phrasing. You can also use them to edit and proofread your documents more efficiently.

2Translation: If you work with people from different parts of the world, LLM models can help you translate your messages into different languages more accurately and quickly.

3Speech recognition: You can use LLM models to transcribe your speech and turn it into text. This can be useful when you need to take notes during a meeting or lecture.

4Research: LLM models can help you gather information on various topics by summarizing articles and providing insights. This can be useful if you need to research a topic for a presentation or report.

5Personal assistant: LLM models can act as your personal assistant by scheduling appointments, reminding you of important dates, and answering your questions.

Overall, LLM models can save you time and help you communicate more effectively in your daily routine

1Writing and editing documents: LLM models can help you improve the quality of your writing by providing suggestions for grammar, punctuation, and phrasing. You can also use them to edit and proofread your documents more efficiently.

2Translation: If you work with people from different parts of the world, LLM models can help you translate your messages into different languages more accurately and quickly.

3Speech recognition: You can use LLM models to transcribe your speech and turn it into text. This can be useful when you need to take notes during a meeting or lecture.

4Research: LLM models can help you gather information on various topics by summarizing articles and providing insights. This can be useful if you need to research a topic for a presentation or report.

5Personal assistant: LLM models can act as your personal assistant by scheduling appointments, reminding you of important dates, and answering your questions.

Overall, LLM models can save you time and help you communicate more effectively in your daily routine

Demo of reproduced VALL-E X

Original description from Microsoft

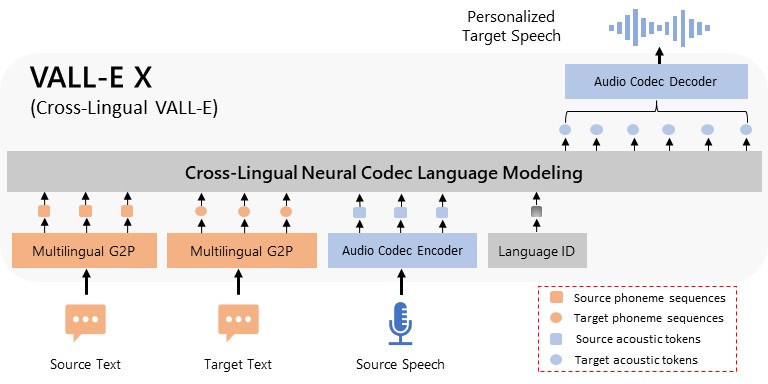

VALL-E X can synthesize personalized speech in another language for a monolingual speaker. Taking the phoneme sequences derived from the source and target text, and the source acoustic tokens derived from an audio codec model as prompts, VALL-E X is able to produce the acoustic tokens in the target language, which can be then decompressed to the target speech waveform. Thanks to its powerful in-context learning capabilities, VALL-E X does not require cross-lingual speech data of the same speakers for training and can perform various zero-shot cross-lingual speech generation tasks, such as cross-lingual text-to-speech synthesis and speech-to-speech translation.Additional description for reproduced model

| Data used for training | English | Chinese | Japanese |

|---|---|---|---|

| Microsoft's | LibriLight (70k+ hours) | Wenet Speech (10k+ hours) | - |

| Ours (reproduced) | LibriTTS + self-gathered (704 hours) | Aishell 1, 3, Aidatatang + self-gathered (598 hours) | JP commonvoice + self-gathered (437 hours) |

VALL-E paper: Neural Codec Language Models are Zero-Shot Text to Speech Synthesizers

VALL-E X paper: Speak Foreign Languages with Your Own Voice: Cross-Lingual Neural Codec Language Modeling

official demo page: VALL-E (X)

UI & API for usage: GitHub - Plachtaa/VALL-E-X: An open source implementation of Microsoft's VALL-E X zero-shot TTS model. Demo is available in https://plachtaa.github.io

Training code from: GitHub - lifeiteng/vall-e: PyTorch implementation of VALL-E(Zero-Shot Text-To-Speech), Reproduced Demo https://lifeiteng.github.io/valle/index.html

This page is for showing reproduced results only.

Model Overview

VALL-E X can synthesize personalized speech in another language for a monolingual speaker. Taking the phoneme sequences derived from the source and target text, and the source acoustic tokens derived from an audio codec model as prompts, VALL-E X is able to produce the acoustic tokens in the target language, which can be then decompressed to the target speech waveform. Thanks to its powerful in-context learning capabilities, VALL-E X does not require cross-lingual speech data of the same speakers for training and can perform various zero-shot cross-lingual speech generation tasks, such as cross-lingual text-to-speech synthesis and speech-to-speech translation.

This is a pair of gloves that could translate sign language into speech or text

by @gigadgets_