The A.I Megathread (LLM , GPT , Development)

Paper: arxiv.org/abs/2309.03897

Code: github.com/sczhou/ProPainter

Project: ProPainter for Video Inpainting

ProPainter: Improving Propagation and Transformer for Video Inpainting

GitHub - yoheinakajima/instagraph: Converts text input or URL into knowledge graph and displays

Embedchain is getting a lot of traction because it's so easy to use.

It allows you to build chatbots over your own data with only a few lines of code.

With just a URL, you can start chatting with a YouTube video or a Wikipedia page.

Data Types Supported:

- YouTube video

- PDF file

- Web page

- Sitemap

- Doc file

- Code documentation website loader

- Notion

pip install embedchain

Demo: Google Colaboratory

Repo: GitHub - embedchain/embedchain: Data platform for LLMs - Load, index, retrieve and sync any unstructured data

https://twitter.com/AlphaSignalAI/status/1696216671197761713/photo/1

GitHub - kuafuai/DevOpsGPT: Multi agent system for AI-driven software development. Combine LLM with DevOps tools to convert natural language requirements into working software. Supports any development language and extends the existing code.

DevOpsGPT: AI-Driven Software Development Automation Solution

Get Help - Q&A

Submit Requests - Issue

Technical exchange - service@kuafuai.net

Introduction

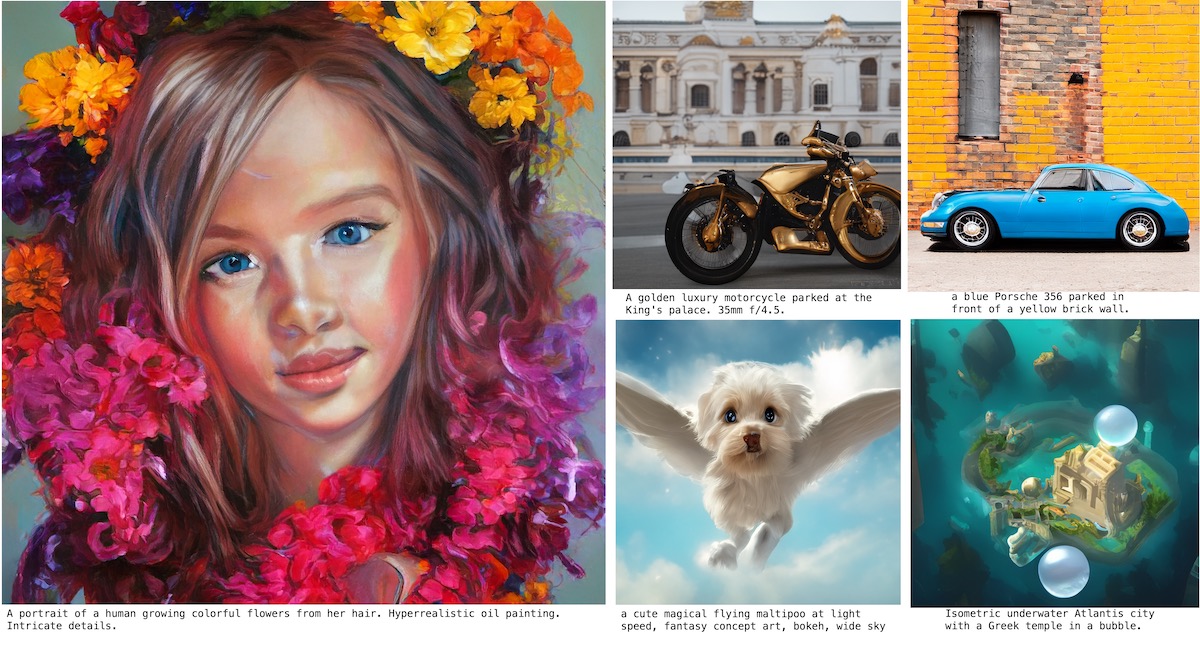

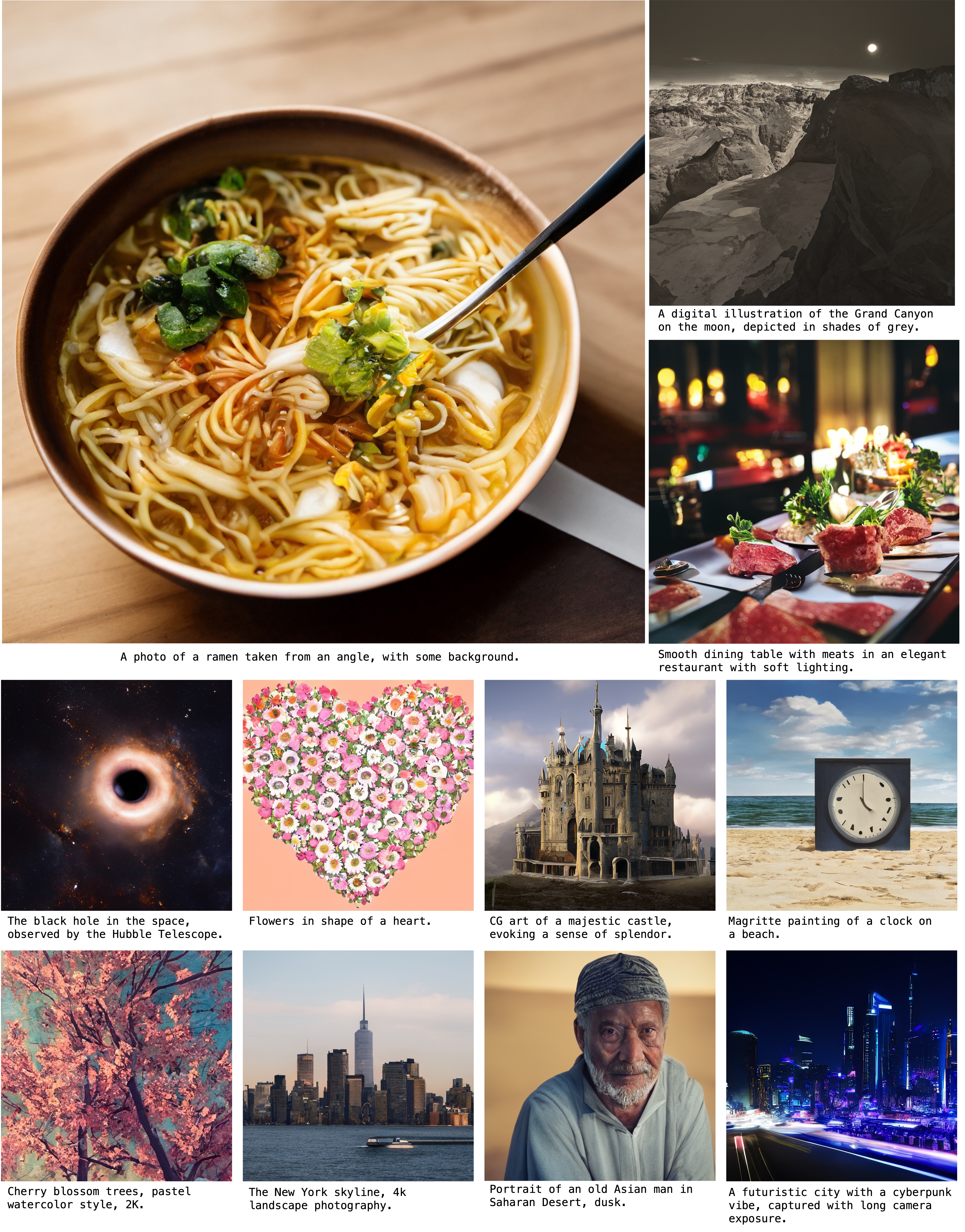

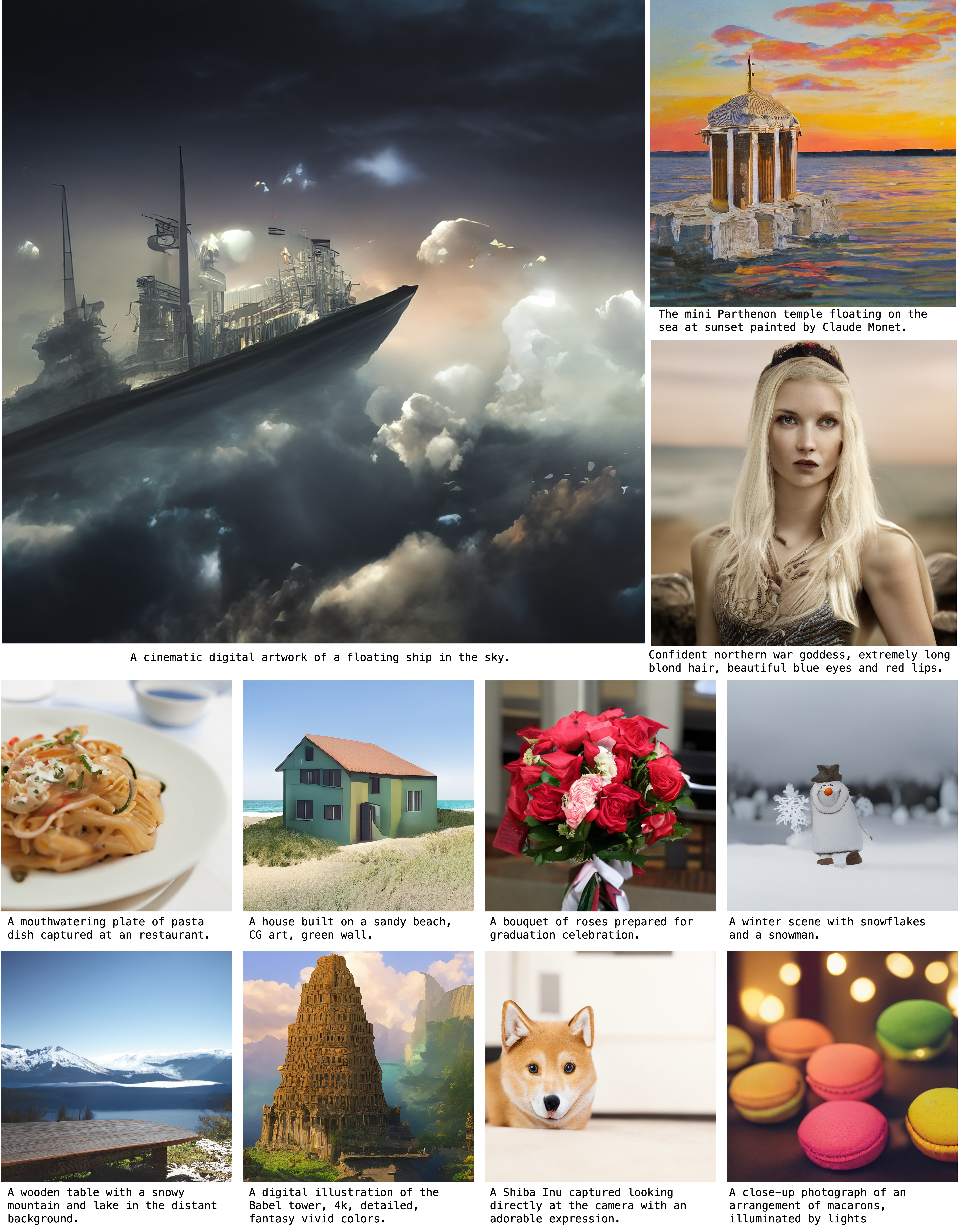

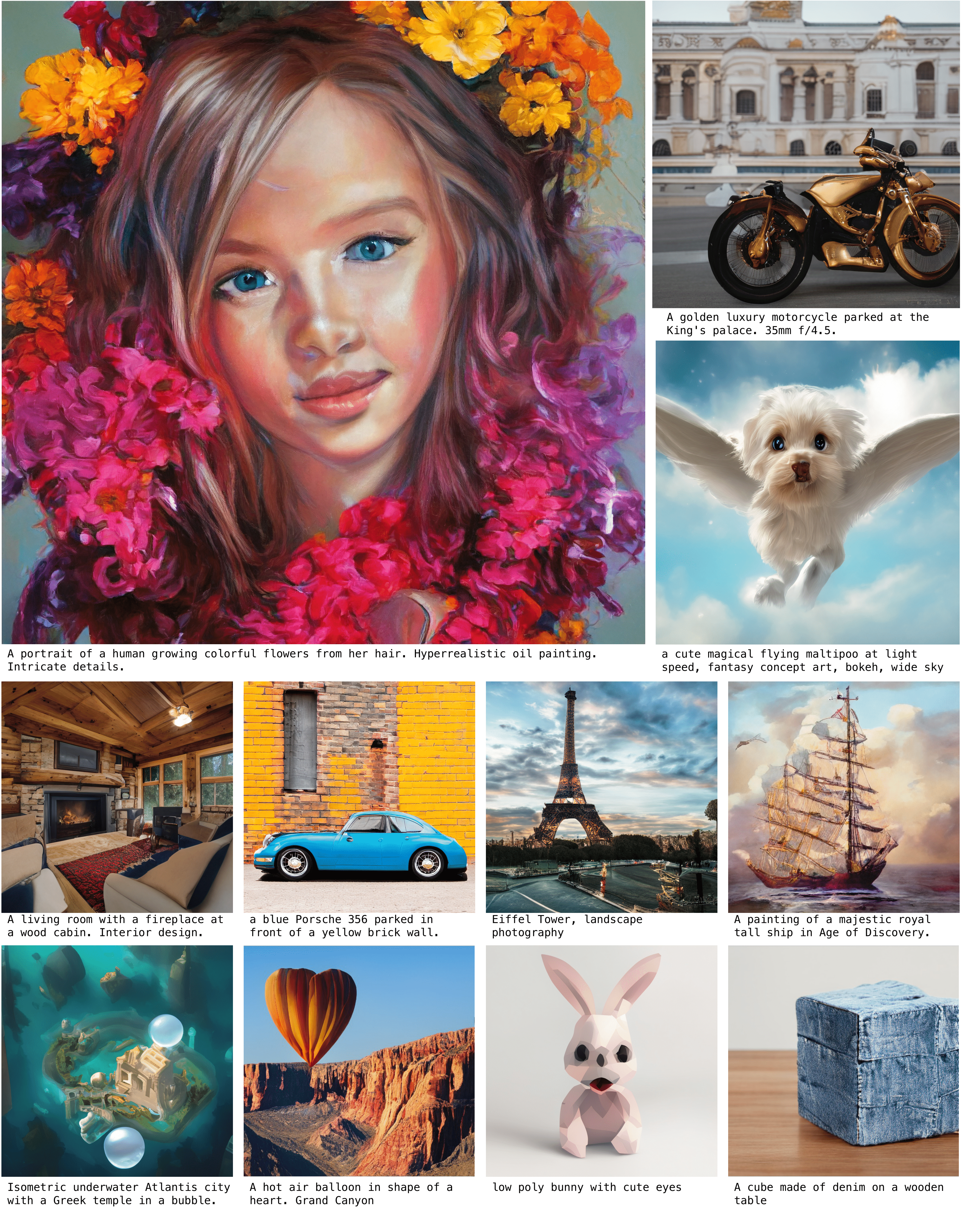

Welcome to the AI Driven Software Development Automation Solution, abbreviated as DevOpsGPT. We combine LLM (Large Language Model) with DevOps tools to convert natural language requirements into working software. This innovative feature greatly improves development efficiency, shortens development cycles, and reduces communication costs, resulting in higher-quality software delivery.

Impressive. GigaGAN is a 1B-parameter GAN that can scale 36 times larger than StyleGAN.

The model from Adobe/CMU proves that GANs can be scaled to large datasets AND remain stable.

Features:

▸ Latent Space Editing: supports latent interpolation, style mixing, and vector arithmetic operations.

▸ Speed: It can produce 512px images in 0.13 seconds and 4K images in 3.66 seconds.

▸ Upsampling: It can be used as an upsampler for ultra-high-resolution images.
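For anyone wondering what "latent interpolation, style mixing, and vector arithmetic" mean in practice, here is a toy sketch. It is not GigaGAN's code or API: plain Python lists stand in for latent codes, and every function name (`lerp`, `add_direction`, `style_mix`) is made up for illustration. In a real GAN, each resulting code would be passed to the generator to render an image.

```python
# Toy illustration only: plain lists stand in for GAN latent codes.
# None of these names come from GigaGAN; they are hypothetical helpers.

def lerp(z_a, z_b, t):
    """Latent interpolation: t=0 returns z_a, t=1 returns z_b."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]


def add_direction(z, direction, strength=1.0):
    """Vector arithmetic: shift a latent code along an edit direction."""
    return [v + strength * d for v, d in zip(z, direction)]


def style_mix(layers_a, layers_b, crossover):
    """Style mixing: use A's codes for layers before `crossover`, B's after."""
    return layers_a[:crossover] + layers_b[crossover:]


z_a = [0.0, 1.0, 2.0, 3.0]
z_b = [4.0, 3.0, 2.0, 1.0]

# Halfway point between the two codes.
midpoint = lerp(z_a, z_b, 0.5)

# Push z_a along a made-up edit direction with strength 2.
shifted = add_direction(z_a, [1.0, 0.0, 0.0, -1.0], strength=2.0)

# Three "layers": the first uses code A, the remaining two use code B.
mixed = style_mix([z_a, z_a, z_a], [z_b, z_b, z_b], crossover=1)

print(midpoint)
print(shifted)
print(mixed)
```

Sweeping `t` from 0 to 1 in `lerp` and rendering each step is what produces the smooth morphing clips usually shown in GAN demos.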

GigaGAN for Text-to-Image Synthesis. CVPR2023

A 1B-parameter large-scale GAN for the text-to-image synthesis task. CVPR 2023

Scaling up GANs for Text-to-Image Synthesis

Minguk Kang (1, 3), Jun-Yan Zhu (2), Richard Zhang (3), Jaesik Park (1), Eli Shechtman (3), Sylvain Paris (3), Taesung Park (3)

(1) POSTECH, (2) Carnegie Mellon University, (3) Adobe Research

in CVPR 2023 (Highlight)

GigaGAN: Large-scale GAN for Text-to-Image Synthesis

Can GANs also be trained on a large dataset for a general text-to-image synthesis task? We present our 1B-parameter GigaGAN, achieving lower FID than Stable Diffusion v1.5, DALL·E 2, and Parti-750M. It generates 512px outputs at 0.13s, orders of magnitude faster than diffusion and autoregressive models, and inherits the disentangled, continuous, and controllable latent space of GANs. We also train a fast upsampler that can generate 4K images from the low-res outputs of text-to-image models.

DeepMind’s cofounder: Generative AI is just a phase. What’s next is interactive AI.

“This is a profound moment in the history of technology,” says Mustafa Suleyman.

September 15, 2023

STEPHANIE ARNETT/MITTR | ENVATO

DeepMind cofounder Mustafa Suleyman wants to build a chatbot that does a whole lot more than chat. In a recent conversation I had with him, he told me that generative AI is just a phase. What’s next is interactive AI: bots that can carry out tasks you set for them by calling on other software and other people to get stuff done. He also calls for robust regulation—and doesn’t think that’ll be hard to achieve.

Suleyman is not the only one talking up a future filled with ever more autonomous software. But unlike most people he has a new billion-dollar company, Inflection, with a roster of top-tier talent plucked from DeepMind, Meta, and OpenAI, and—thanks to a deal with Nvidia—one of the biggest stockpiles of specialized AI hardware in the world. Suleyman has put his money—which he tells me he both isn't interested in and wants to make more of—where his mouth is.

INFLECTION

Suleyman has had an unshaken faith in technology as a force for good at least since we first spoke in early 2016. He had just launched DeepMind Health and set up research collaborations with some of the UK’s state-run regional health-care providers.

The magazine I worked for at the time was about to publish an article claiming that DeepMind had failed to comply with data protection regulations when accessing records from some 1.6 million patients to set up those collaborations—a claim later backed up by a government investigation. Suleyman couldn’t see why we would publish a story that was hostile to his company’s efforts to improve health care. As long as he could remember, he told me at the time, he’d only wanted to do good in the world.

In the seven years since that call, Suleyman’s wide-eyed mission hasn’t shifted an inch. “The goal has never been anything but how to do good in the world,” he says via Zoom from his office in Palo Alto, where the British entrepreneur now spends most of his time.

Suleyman left DeepMind and moved to Google to lead a team working on AI policy. In 2022 he founded Inflection, one of the hottest new AI firms around, backed by $1.5 billion of investment from Microsoft, Nvidia, Bill Gates, and LinkedIn founder Reid Hoffman. Earlier this year he released a ChatGPT rival called Pi, whose unique selling point (according to Suleyman) is that it is pleasant and polite. And he just coauthored a book about the future of AI with writer and researcher Michael Bhaskar, called The Coming Wave: Technology, Power, and the 21st Century's Greatest Dilemma.

Many will scoff at Suleyman's brand of techno-optimism—even naïveté. Some of his claims about the success of online regulation feel way off the mark, for example. And yet he remains earnest and evangelical in his convictions.

It’s true that Suleyman has an unusual background for a tech multi-millionaire. When he was 19 he dropped out of university to set up Muslim Youth Helpline, a telephone counseling service. He also worked in local government. He says he brings many of the values that informed those efforts with him to Inflection. The difference is that now he just might be in a position to make the changes he’s always wanted to—for good or not.

The following interview has been edited for length and clarity.

Your early career, with the youth helpline and local government work, was about as unglamorous and un–Silicon Valley as you can get. Clearly, that stuff matters to you. You’ve since spent 15 years in AI and this year cofounded your second billion-dollar AI company. Can you connect the dots?

I’ve always been interested in power, politics, and so on. You know, human rights principles are basically trade-offs, a constant ongoing negotiation between all these different conflicting tensions. I could see that humans were wrestling with that—we’re full of our own biases and blind spots. Activist work, local, national, international government, et cetera—it’s all just slow and inefficient and fallible.

Imagine if you didn’t have human fallibility. I think it’s possible to build AIs that truly reflect our best collective selves and will ultimately make better trade-offs, more consistently and more fairly, on our behalf.

And that’s still what motivates you?

I mean, of course, after DeepMind I never had to work again. I certainly didn’t have to write a book or anything like that. Money has never ever been the motivation. It’s always, you know, just been a side effect.

For me, the goal has never been anything but how to do good in the world and how to move the world forward in a healthy, satisfying way. Even back in 2009, when I started looking at getting into technology, I could see that AI represented a fair and accurate way to deliver services in the world.

I can’t help thinking that it was easier to say that kind of thing 10 or 15 years ago, before we’d seen many of the downsides of the technology. How are you able to maintain your optimism?

I think that we are obsessed with whether you’re an optimist or whether you’re a pessimist. This is a completely biased way of looking at things. I don’t want to be either. I want to coldly stare in the face of the benefits and the threats. And from where I stand, we can very clearly see that with every step up in the scale of these large language models, they get more controllable.

So two years ago, the conversation—wrongly, I thought at the time—was “Oh, they’re just going to produce toxic, regurgitated, biased, racist screeds.” I was like, this is a snapshot in time. I think that what people lose sight of is the progression year after year, and the trajectory of that progression.

Now we have models like Pi, for example, which are unbelievably controllable. You can’t get Pi to produce racist, homophobic, sexist—any kind of toxic stuff. You can’t get it to coach you to produce a biological or chemical weapon or to endorse your desire to go and throw a brick through your neighbor’s window. You can’t do it—

Hang on. Tell me how you’ve achieved that, because that’s usually understood to be an unsolved problem. How do you make sure your large language model doesn’t say what you don’t want it to say?

Yeah, so obviously I don’t want to make the claim—You know, please try and do it! Pi is live and you should try every possible attack. None of the jailbreaks, prompt hacks, or anything work against Pi. I’m not making a claim. It’s an objective fact.

On the how—I mean, like, I’m not going to go into too many details because it’s sensitive. But the bottom line is, we have one of the strongest teams in the world, who have created all the largest language models of the last three or four years. Amazing people, in an extremely hardworking environment, with vast amounts of computation. We made safety our number one priority from the outset, and as a result, Pi is not so spicy as other companies’ models.

Look at Character.ai. [Character is a chatbot for which users can craft different “personalities” and share them online for others to chat with.] It’s mostly used for romantic role-play, and we just said from the beginning that was off the table—we won’t do it. If you try to say “Hey, darling” or “Hey, cutie” or something to Pi, it will immediately push back on you.

But it will be incredibly respectful. If you start complaining about immigrants in your community taking your jobs, Pi’s not going to call you out and wag a finger at you. Pi will inquire and be supportive and try to understand where that comes from and gently encourage you to empathize. You know, values that I’ve been thinking about for 20 years.

Talking of your values and wanting to make the world better, why not share how you did this so that other people could improve their models too?

Well, because I’m also a pragmatist and I’m trying to make money. I’m trying to build a business. I’ve just raised $1.5 billion and I need to pay for those chips.

Look, the open-source ecosystem is on fire and doing an amazing job, and people are discovering similar tricks. I always assume that I’m only ever six months ahead.

Let’s bring it back to what you’re trying to achieve. Large language models are obviously the technology of the moment. But why else are you betting on them?

The first wave of AI was about classification. Deep learning showed that we can train a computer to classify various types of input data: images, video, audio, language. Now we’re in the generative wave, where you take that input data and produce new data.

The third wave will be the interactive phase. That’s why I’ve bet for a long time that conversation is the future interface. You know, instead of just clicking on buttons and typing, you’re going to talk to your AI.

And these AIs will be able to take actions. You will just give it a general, high-level goal and it will use all the tools it has to act on that. They’ll talk to other people, talk to other AIs. This is what we’re going to do with Pi.

That’s a huge shift in what technology can do. It’s a very, very profound moment in the history of technology that I think many people underestimate. Technology today is static. It does, roughly speaking, what you tell it to do.

But now technology is going to be animated. It’s going to have the potential freedom, if you give it, to take actions. It’s truly a step change in the history of our species that we’re creating tools that have this kind of, you know, agency.

That’s exactly the kind of talk that gets a lot of people worried. You want to give machines autonomy—a kind of agency—to influence the world, and yet we also want to be able to control them. How do you balance those two things? It feels like there’s a tension there.

Yeah, that’s a great point. That’s exactly the tension.

The idea is that humans will always remain in command. Essentially, it’s about setting boundaries, limits that an AI can’t cross. And ensuring that those boundaries create provable safety all the way from the actual code to the way it interacts with other AIs—or with humans—to the motivations and incentives of the companies creating the technology. And we should figure out how independent institutions or even governments get direct access to ensure that those boundaries aren’t crossed.

Who sets these boundaries? I assume they’d need to be set at a national or international level. How are they agreed on?

I mean, at the moment they’re being floated at the international level, with various proposals for new oversight institutions. But boundaries will also operate at the micro level. You’re going to give your AI some bounded permission to process your personal data, to give you answers to some questions but not others.

In general, I think there are certain capabilities that we should be very cautious of, if not just rule out, for the foreseeable future.

Such as?

I guess things like recursive self-improvement. You wouldn’t want to let your little AI go off and update its own code without you having oversight. Maybe that should even be a licensed activity—you know, just like for handling anthrax or nuclear materials.

Or, like, we have not allowed drones in any public spaces, right? It’s a licensed activity. You can't fly them wherever you want, because they present a threat to people’s privacy.

I think everybody is having a complete panic that we’re not going to be able to regulate this. It’s just nonsense. We’re totally going to be able to regulate it. We’ll apply the same frameworks that have been successful previously.

But you can see drones when they’re in the sky. It feels naïve to assume companies are just going to reveal what they’re making. Doesn’t that make regulation tricky to get going?

We’ve regulated many things online, right? The amount of fraud and criminal activity online is minimal. We’ve done a pretty good job with spam. You know, in general, [the problem of] revenge porn has got better, even though that was in a bad place three to five years ago. It’s pretty difficult to find radicalization content or terrorist material online. It’s pretty difficult to buy weapons and drugs online.

[Not all Suleyman’s claims here are backed up by the numbers. Cybercrime is still a massive global problem. The financial cost in the US alone has increased more than 100 times in the last decade, according to some estimates. Reports show that the economy in nonconsensual deepfake porn is booming. Drugs and guns are marketed on social media. And while some online platforms are being pushed to do a better job of filtering out harmful content, they could do a lot more.]

So it’s not like the internet is this unruly space that isn’t governed. It is governed. And AI is just going to be another component to that governance.

It takes a combination of cultural pressure, institutional pressure, and, obviously, government regulation. But it makes me optimistic that we’ve done it before, and we can do it again.

Controlling AI will be an offshoot of internet regulation—that’s a far more upbeat note than the one we’ve heard from a number of high-profile doomers lately.

I’m very wide-eyed about the risks. There’s a lot of dark stuff in my book. I definitely see it too. I just think that the existential-risk stuff has been a completely bonkers distraction. There’s like 101 more practical issues that we should all be talking about, from privacy to bias to facial recognition to online moderation.

We should just refocus the conversation on the fact that we’ve done an amazing job of regulating super complex things. Look at the Federal Aviation Administration: it’s incredible that we all get in these tin tubes at 40,000 feet and it’s one of the safest modes of transport ever. Why aren’t we celebrating this? Or think about cars: every component is stress-tested within an inch of its life, and you have to have a license to drive it.

Some industries—like airlines—did a good job of regulating themselves to start with. They knew that if they didn’t nail safety, everyone would be scared and they would lose business.

But you need top-down regulation too. I love the nation-state. I believe in the public interest, I believe in the good of tax and redistribution, I believe in the power of regulation. And what I’m calling for is action on the part of the nation-state to sort its shit out. Given what’s at stake, now is the time to get moving.

by Will Douglas Heaven

AI Regulation

There have been multiple calls to regulate AI. It is too early to do so.

ELAD GIL

SEP 17, 2023

[While I was finalizing this post, Bill Gurley gave this great talk on incumbent capture and regulation].

ChatGPT has only been live for ~9 months and GPT-4 for 6 or so months. Yet there have already been strong calls to regulate AI due to misinformation, bias, existential risk, threat of biological or chemical attack, potential AI-fueled cyberattacks, etc., without any tangible example of any of these things actually having happened with any real frequency compared to existing versions without AI. Many, like chemical attacks, are truly theoretical, without an ordered logic chain of how they would happen or any explanation as to why existing safeguards or laws are insufficient.

Sometimes, regulation of an industry can be positive for consumers or businesses. For example, FDA regulation of food can protect people from disease outbreaks, chemical manipulation of food, or other issues.

In most cases, regulation can be very negative for an industry and its evolution. It may force an industry to be government-centric versus user-centric, prevent competition and lock in incumbents, move production or economic benefits overseas, or distort the economics and capabilities of an entire industry.

Given the many positive potentials of AI, and the many negatives of regulation, calls for AI regulation are likely premature, and in some cases clearly self-serving for the parties asking for it (it is not surprising the main incumbents say regulation is good for AI, as it will lock in their incumbency). Some notable counterexamples also exist where we should likely regulate things related to AI, but these are few and far between (e.g. export of advanced chip technology to foreign adversaries is a notable one).

In general, we should not push to regulate most aspects of AI now. Instead, we should let the technology advance and mature further for positive uses before revisiting this area.

First, what is at stake? Global health & educational equity + other areas

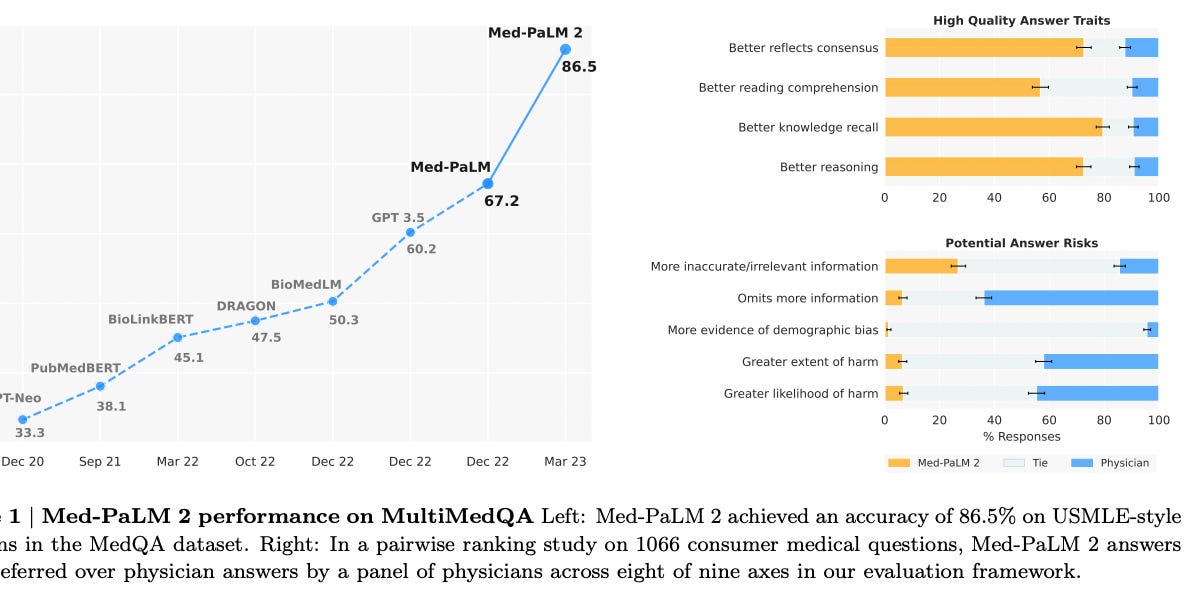

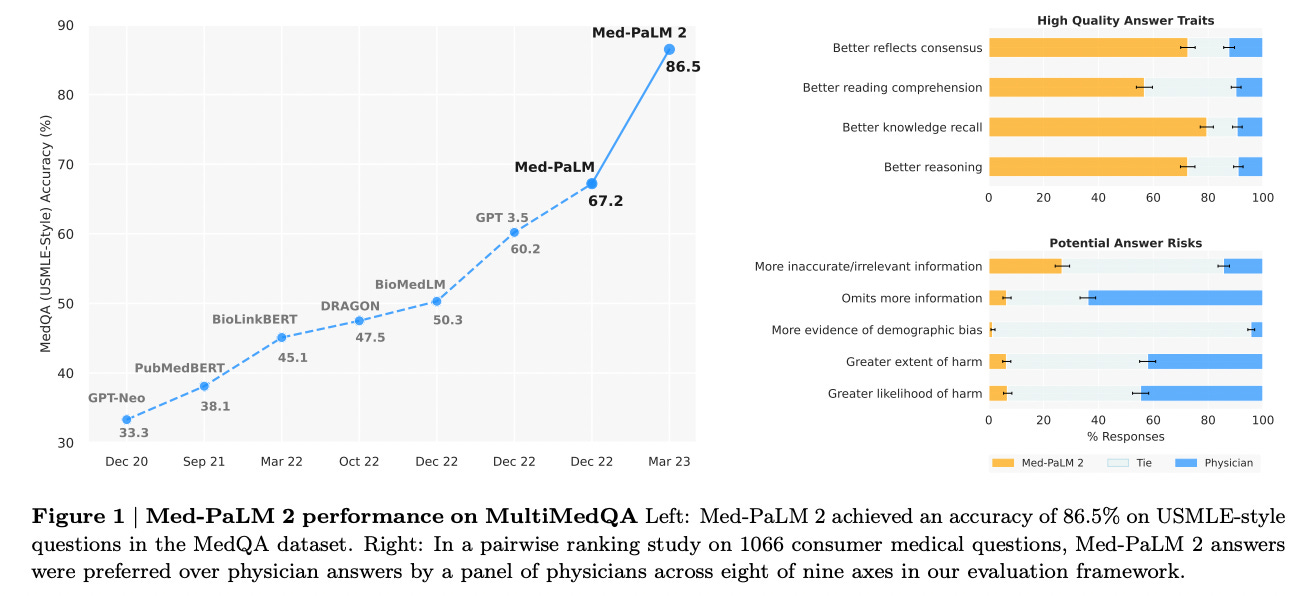

Too little of the dialogue today focuses on the positive potential of AI. (I cover the risks of AI in another post.) AI is an incredibly powerful tool to impact global equity for some of the biggest issues facing humanity. On the healthcare front, models such as Med-PaLM2 from Google now outperform medical experts to the point where training the model using physician experts may make the model worse.

Imagine having a medical expert available via any phone or device anywhere in the world, to which you can upload images and symptoms, follow up, and get ongoing diagnosis and care. This technology is available today and just needs to be bundled and delivered in a thoughtful way.

Similarly, AI can provide significant educational resources globally today. Even something as simple as auto-translating and dubbing all the educational text, video or voice content in the world is a straightforward task given today's language and voice models. Adding a chat-like interface that can personalize and pace the learning of the student on the other end is coming shortly based on existing technologies. Significantly increasing global equity of education is a goal we can achieve if we allow ourselves to do so.

Additionally, AI can play a role in many other areas, including economic productivity and national defense (covered well here).

AI is likely the single strongest motive force towards global equity in health and education in decades. Regulation is likely to slow down and confound progress towards these and other goals and use cases.

Regulation tends to prevent competition - it favors incumbents and kills startups

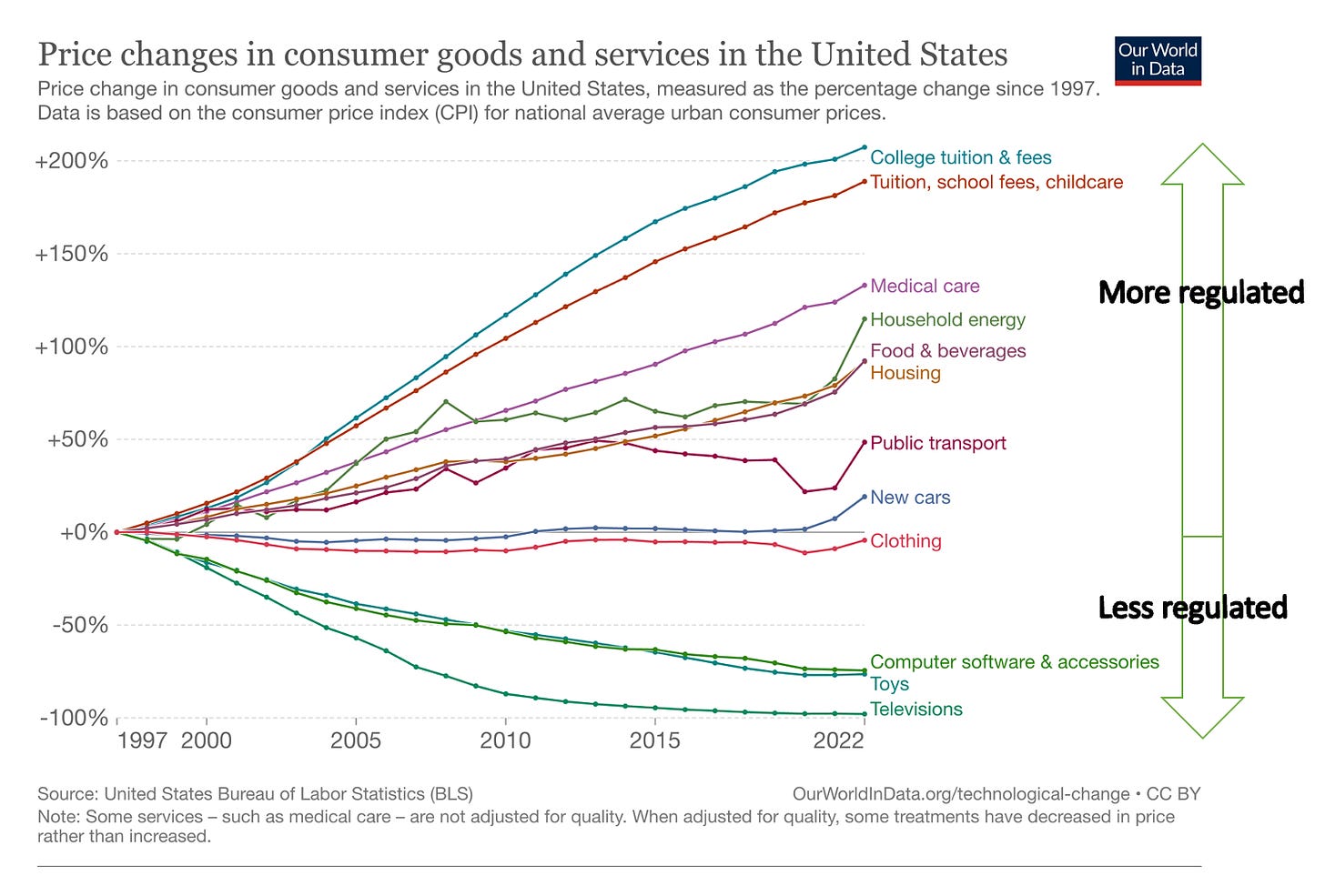

In most industries, regulation prevents competition. This famous chart of prices over time reflects how highly regulated industries (healthcare, education, energy) have their costs driven up over time, while less regulated industries (clothing, software, toys) drop costs dramatically over time. (Please note I do not believe these are inflation adjusted - so 60-70% may be “break even” pricing inflation adjusted).

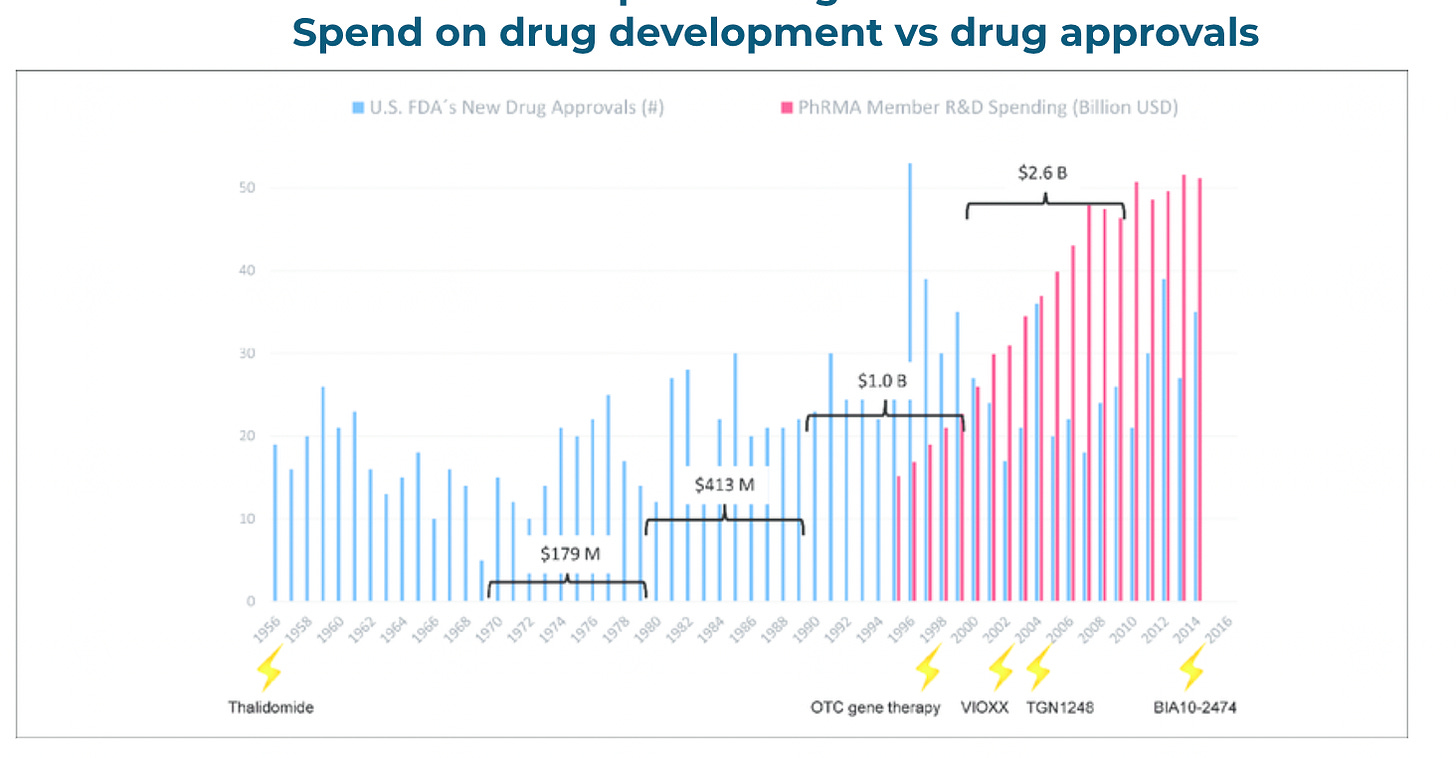

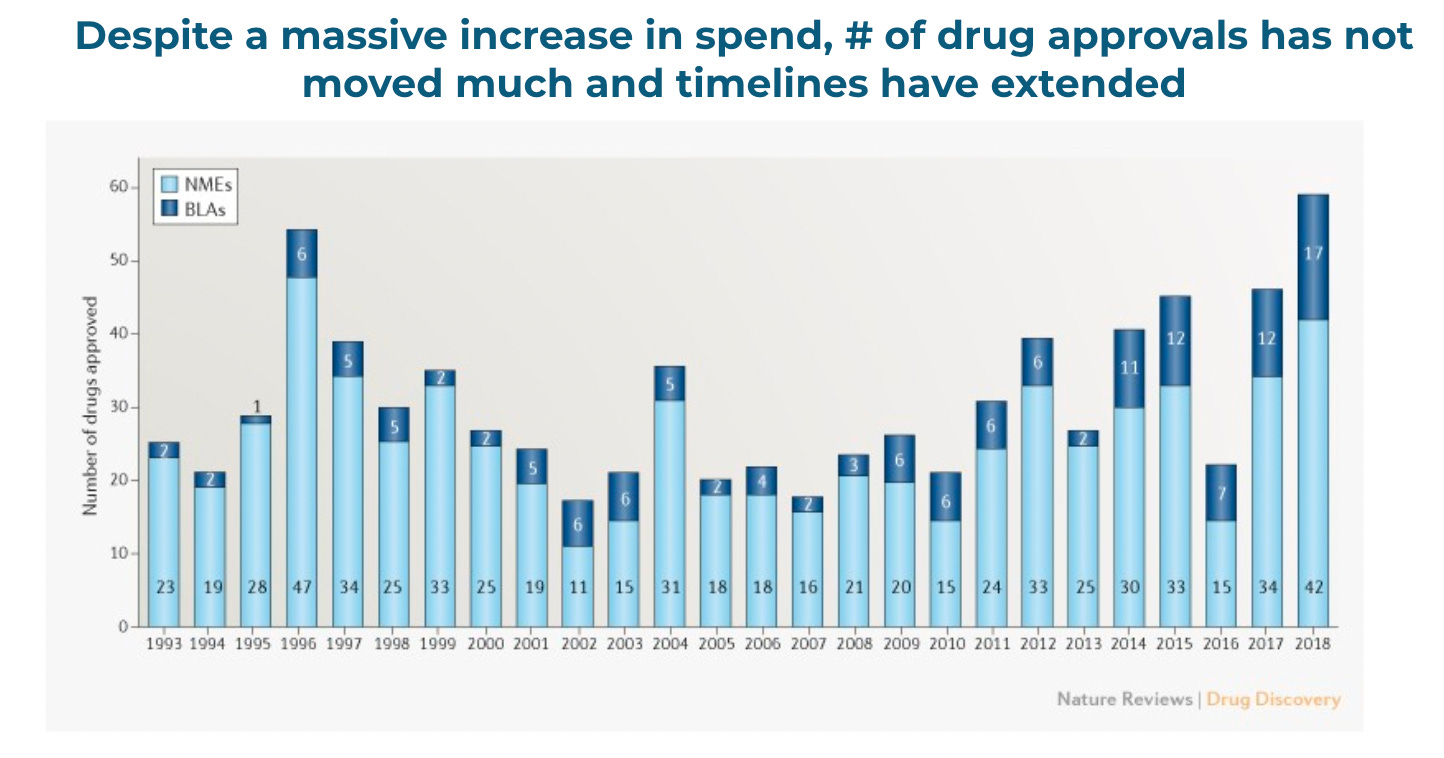

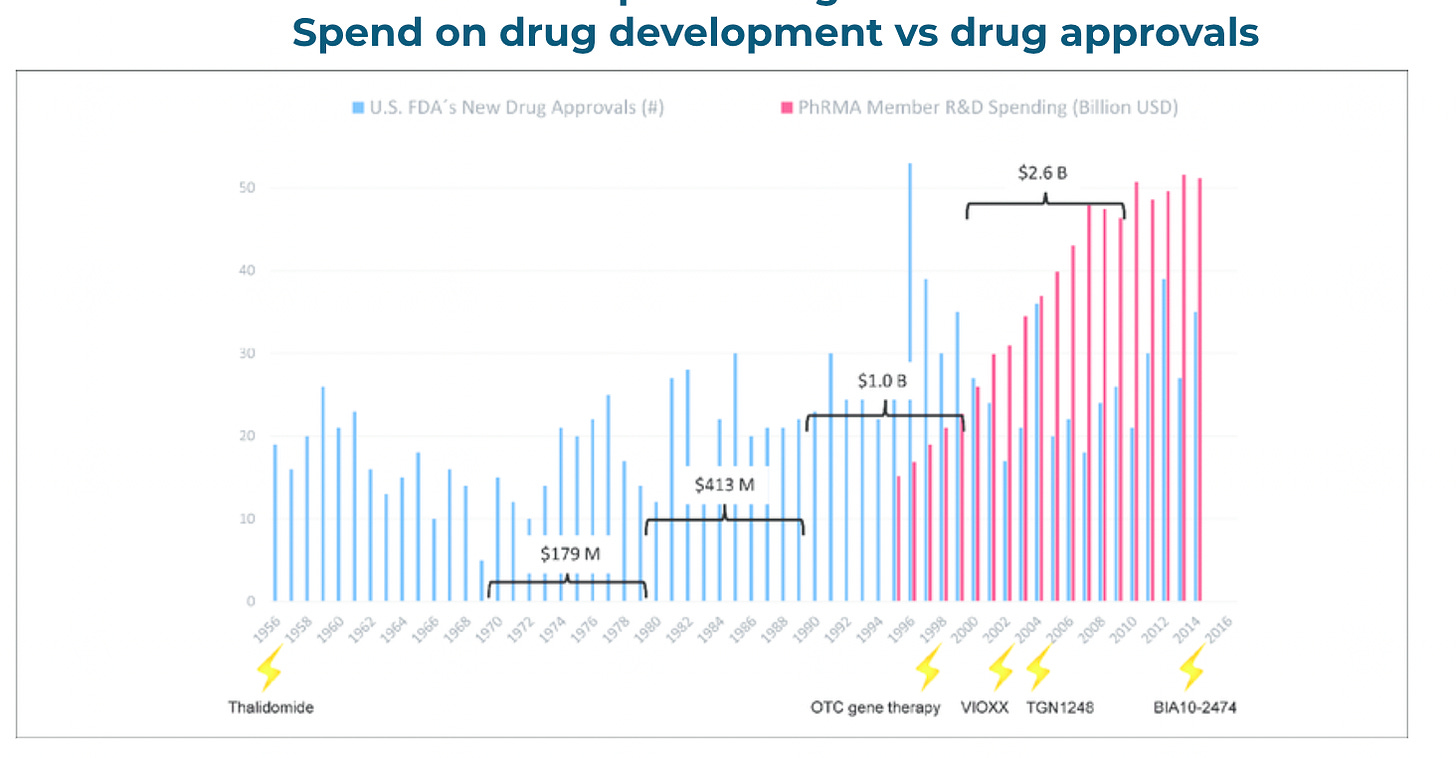

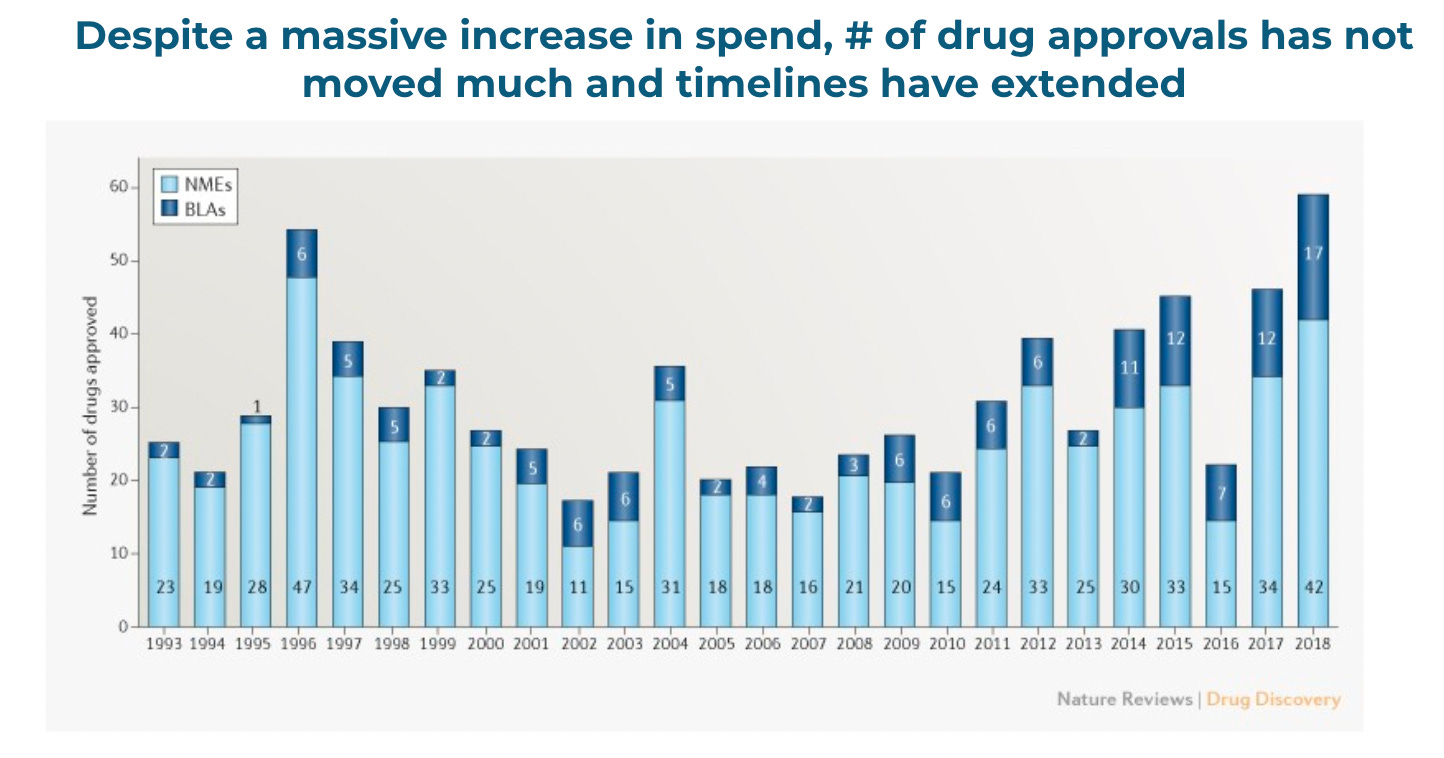

Regulation favors incumbents in two ways. First, it increases the cost of entering a market, in some cases dramatically. The high cost of clinical trials and the extra hurdles put in place to launch a drug are good examples of this. A must-watch video is this one with Paul Janssen, one of the giants of pharma, in which he states that the vast majority of drug development budgets are wasted on tests imposed by regulators which “has little to do with actual research or actual development”. This is a partial explanation for why (outside of Moderna, an accident of COVID), no $40B+ market cap new biopharma company has been launched in almost 40 years (despite healthcare being 20% of US GDP).

Secondly, regulation favors incumbents via something known as “regulatory capture”. In regulatory capture, the regulators become beholden to a specific industry lobby or group, for example by receiving jobs in the industry after working as a regulator, or via specific forms of lobbying. Regulators then have a strong incentive to “play nice” with the incumbents and to bias regulations their way, in order to get favors later in life.

Regulation often blocks industry progress: Nuclear as an example.

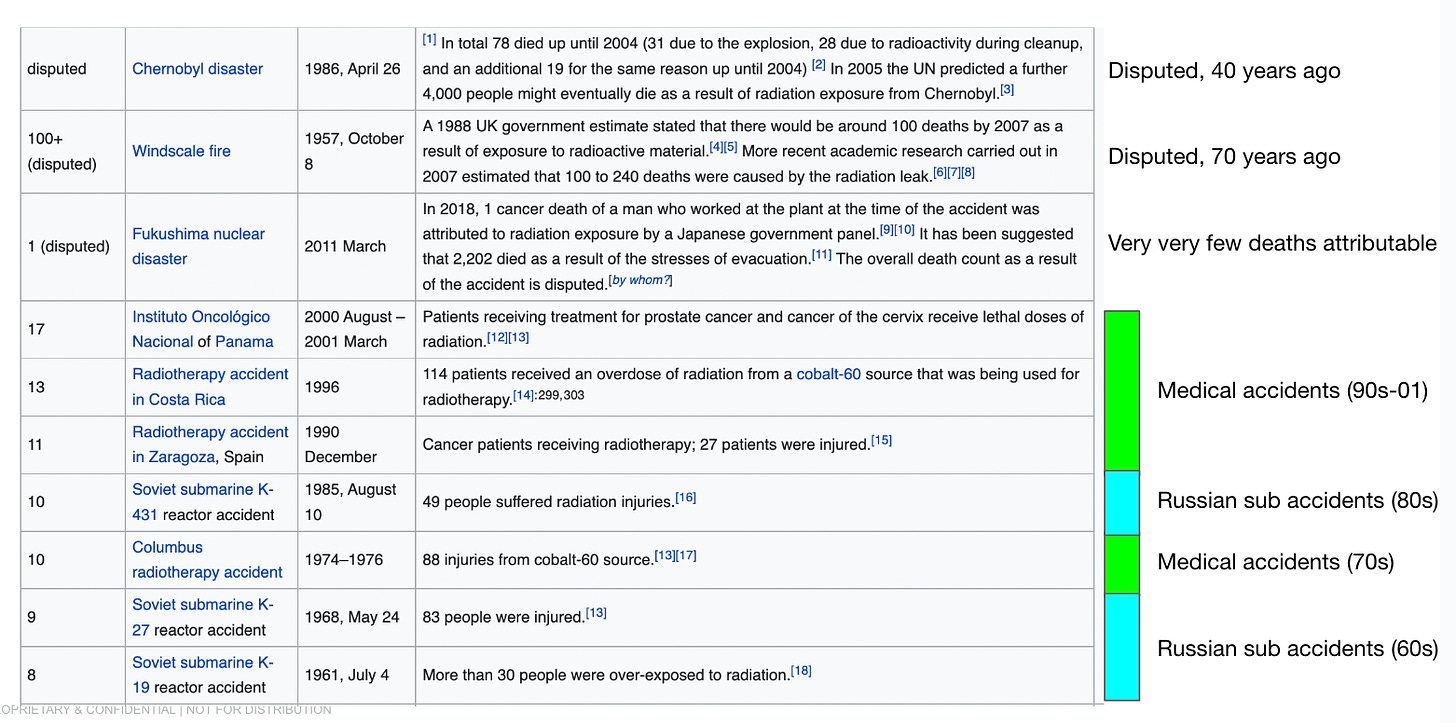

Many of the calls to regulate AI suggest some analog to nuclear. For example, a registry of anyone building models and then a new body to oversee them. Nuclear is a good example of how in some cases regulators will block the entire industry they are supposed to watch over. For example, the Nuclear Regulatory Commission (NRC), established in 1975, has not approved a new nuclear reactor design for decades (indeed, not since the 1970s). This has prevented use of nuclear in the USA, despite actual data showing high safety profiles. France meanwhile has continued to have 70% of its power generated via nuclear, Japan is heading back to 30% with plans to grow to 50%, and the US has been declining down to 18%. This is despite nuclear being both extremely safe (if one looks at data) and clean from a carbon perspective.

Indeed, most deaths from nuclear in the modern era have been from medical accidents or Russian sub accidents. This is something the actual regulator of nuclear power in the USA seems oddly unaware of.

Nuclear (and therefore Western energy policy) is ultimately a victim of bad PR, a strong eco-big oil lobby against it, and of regulatory constraints.

Regulation can drive an industry overseas

I am a short term AI optimist, and a long term AI doomer. In other words, I think the short term benefits of AI are immense, and most arguments made on tech-level risks of AI are overstated. For anyone who has read history, humans are perfectly capable of creating their own disasters. However, I do think in the long run (i.e. decades) AI is an existential risk for people. That said, at this point regulating AI will only send it overseas and federate and fragment the cutting edge of it outside US jurisdiction. Just as crypto is increasingly offshoring, and even regulatory-compliant companies like Coinbase are considering leaving the US due to government crackdowns on crypto, regulating AI now in the USA will just send it overseas. The genie is out of the bottle and this technology is clearly incredibly powerful and important. Over-regulation in the USA has the potential to drive it elsewhere. This would be bad not only for US interests, but also potentially for the moral and ethical frameworks under which the most cutting edge versions of AI may get adopted. The European Union may show us an early form of this.

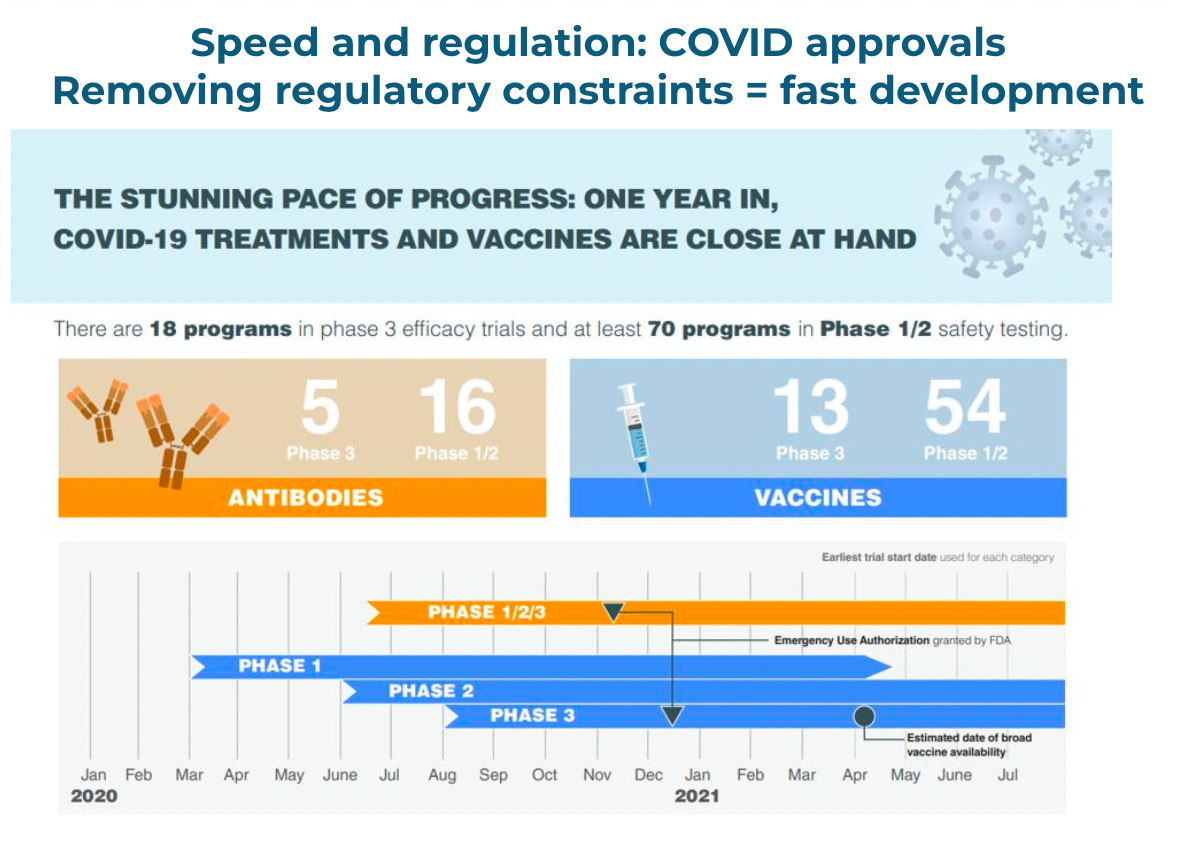

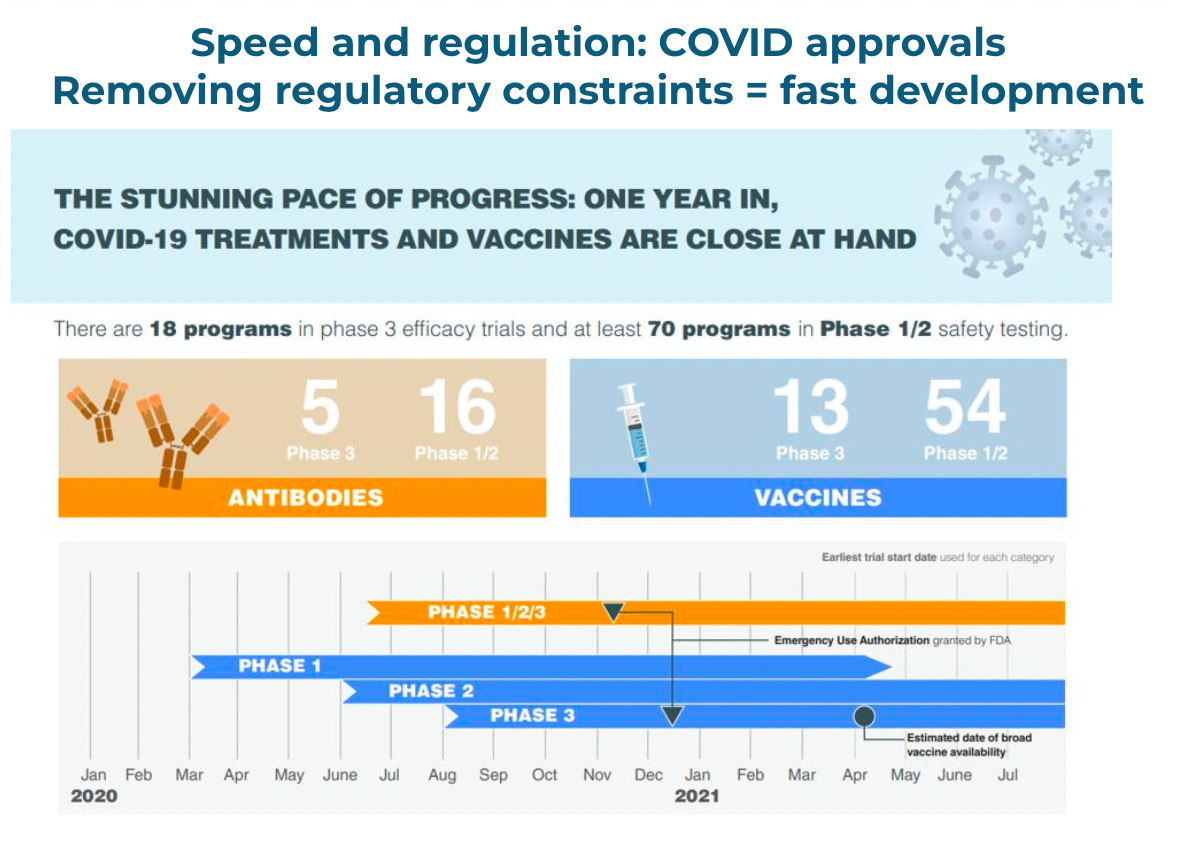

Regulation can distort economics, drive cost way up, and slow down progress for important societal areas: Healthcare as an example.

Regulation tends to distort the economics of an industry. Healthcare is a strong example where the person who benefits (the patient) is different from the person who decides what to get (the doctor), what can be paid for (the insurer) and what the market can even have to begin with (the regulator). This has caused a lack of competition in many parts of the healthcare industry, an inability to launch valuable products quickly, and in some cases, prevention of adoption of valuable products (due to lack of a payor).

It is telling that during COVID, when regulations were decreased, we had a flurry of vaccines developed in less than a year and multiple drugs tested and launched in anywhere from a few months to two years. All this was done with minimal patient side effects or bad outcomes. This similarly happened during WW2 when Churchill wanted to find a treatment for soldiers in the field with gonorrhea and made it a national mandate to find a cure. Penicillin was rediscovered and launched to market in less than 9 months.

Who do you want to make decisions on the future? Tech people are naive about “regulation”

Most people in tech have never had to deal with regulators. If you worked at Facebook in the early days or on a SaaS startup, the risk of regulation has likely not come up. Those who have dealt with regulators (such as later Meta employees or people who have run healthcare companies) realize that there tend to be many drawbacks to working in a regulated industry. Obviously, there are some positives from regulators when said regulators are functioning well and focused on their mission - e.g. data-driven prevention of consumer harm, in a way that does not overstep the legal frameworks of the country.

In many discussions I have had with tech people who call for regulation of AI, a few things have come out:

- Many people working in AI think deeply and genuinely about what they are working on, and want it to be very positive for the world. Indeed, the AI industry and its early emphasis on safety and alignment strikes me as the most forward looking group I have seen in tech on the implications of their own technology. However….

- …Most people calling for regulation have never worked in a regulated industry nor dealt with regulators. Many of their viewpoints on what “regulation” means are quite naive.

- For example, a few people have told me they think regulation means “the government will ask a group of the smartest people working on AI to come together to set policy”. This seems to misunderstand a few basics of regulation - for example the regulator may actually not understand much about the topic they are regulating, or be driven politically versus factually. Indeed, recent examples of “AI experts” consulting on regulation tend to have standard political agendas, versus being giants in the AI technology world.

- Most people do not understand that most “regulators” have varying internal viewpoints and the group you interact with within a regulator may lead to a completely different outcome. For example, depending on which specific subgroup you engage with at the FDA, SEC, FTC, or other group, you may end up with a very different result for your company. Regulators are often staffed by people with their own motivations, political viewpoints, and career aspirations. This impacts how they work with companies and the industries they regulate. Many regulators are hired later in their careers into the larger companies they regulated - which is part of what causes regulatory capture over time.

- There seems to be a lack of appreciation for existing legal frameworks. There are laws and precedents that have been built up over time to cover many aspects of harm that may be caused in the short run by AI (hate speech, misinformation, and other “trust and safety issues” on the one hand, or use of AI to cause cyber attacks or physical harm on the other). These existing legal frameworks seem ignored by many of the people calling for regulation - many of whom do not seem to have any real knowledge of what laws already exist.

- Many people misunderstand that the political establishment would like nothing more than to seize more power over tech. The game in tech is often around impact and financial outcomes, while the game in DC is about power. Many who seek power would love to have a way to take over and control tech. Calling for AI regulation is creating an opening to seize broader power. As we saw with COVID policies, the “slippery slope” is real.

- It is worth pausing to ask yourself who you want setting norms for the AI industry - the CEOs and researchers behind the main AI companies (AKA self-regulation) or unelected government officials like Lina Khan or Gary Gensler. Which will lead to a worse outcome for AI and society?

Short term policy & what should be regulated?

There are some areas that seem reasonable to regulate for AI in the short run - but these should be highly targeted to pre-existing policies. For example:

- Export controls. There are some things that make sense to regulate for AI now - for example, the export of advanced semiconductor manufacturing technology has been, and should continue to be, subject to export controls.

- Incident reporting. Open Phil has a good position, excerpted here: “... similar to incident reporting requirements in other industries (e.g. aviation) or to data breach reporting requirements, and similar to some vulnerability disclosure regimes. Many incidents wouldn’t need to be reported publicly, but could be kept confidential within a regulatory body. The goal of this is to allow regulators and perhaps others to track certain kinds of harms and close-calls from AI systems, to keep track of where the dangers are and rapidly evolve mitigation mechanisms.”

- Other areas. There may be other areas that may make sense over time. Many of the areas people express strong concern for (misinformation, bias etc) have long standing legal and regulatory structures in place already.

The first “AI election”

The 2024 presidential election may end up being our first “AI election” - in that many new generative AI technologies will likely be used at mass scale for the first time in an election. Examples of use may include things like:

- Personalized text to speech for large scale, personalized robo-dialing in the natural sounding voice of the candidates

- Large scale content generation and farming for social media and other targeting

- Deep fakes of video or other content