I learned to make a lip-syncing deepfake in just a few hours (and you can, too)

A fun way to waste a few hours

Zero coding experience required

By James Vincent, a senior reporter who has covered AI, robotics, and more for eight years at The Verge. Sep 9, 2020, 10:38 AM EDT

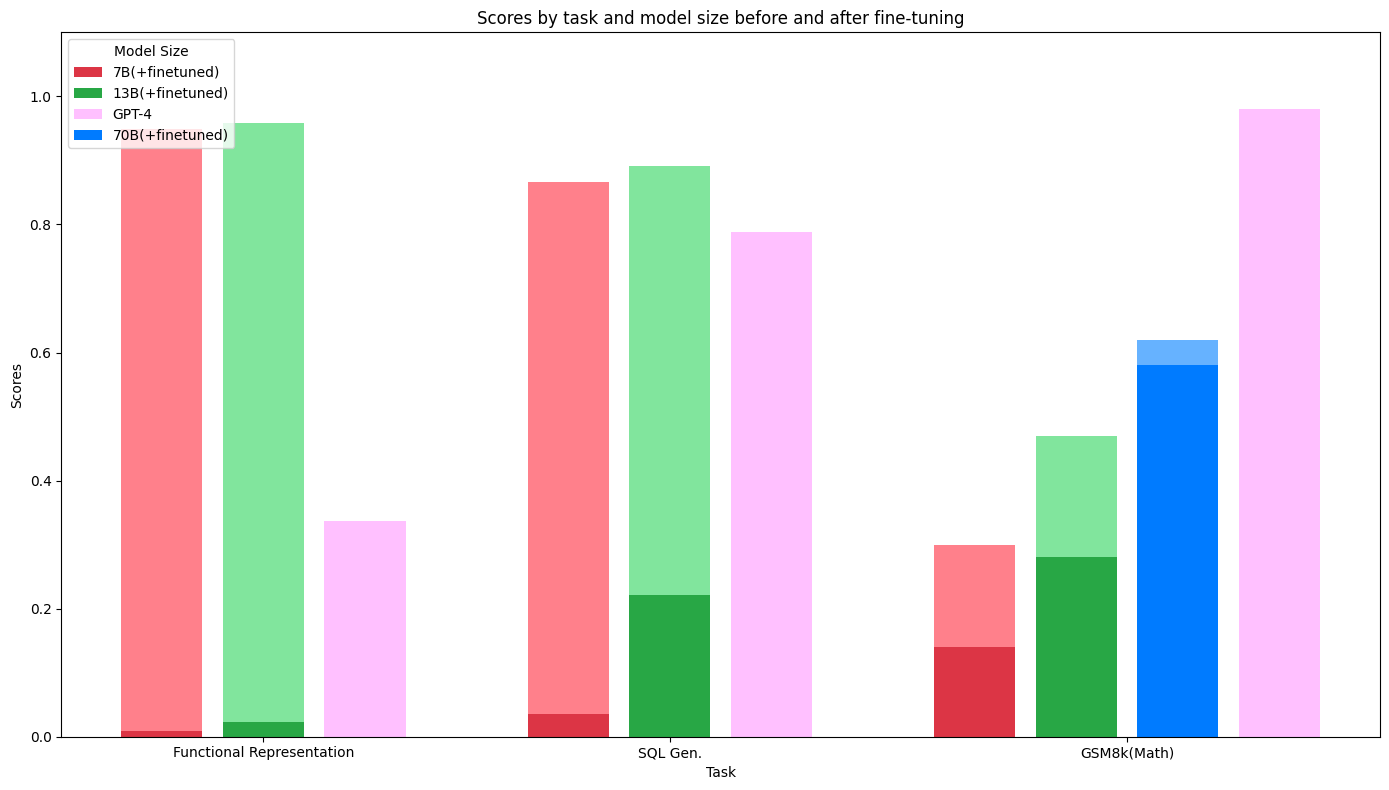

Artist: William Joel

How easy is it to make a deepfake, really? Over the past few years, there’s been a steady stream of new methods and algorithms that deliver more and more convincing AI-generated fakes. You can even now do basic face-swaps in a handful of apps. But what does it take to turn random code you found online into a genuine deepfake? I can now say from personal experience, you really need just two things: time and patience.

Despite writing about deepfakes for years, I’ve only ever made them using prepackaged apps that did the work for me. But when I saw an apparently straightforward method for creating quick lip-sync deepfakes in no time at all, I knew I had to try it for myself.

The basic mechanism is tantalizingly simple. All you need is a video of your subject and an audio clip you want them to follow. Mash those two things together using code and, hey presto, you have a deepfake. (You can tell I don’t have much of a technical background, right?) The end result is videos like this one of the queen singing Queen:

Or of a bunch of movie characters singing that international hymn, Smash Mouth’s “All Star”:

Or of Trump miming along with this Irish classic:

Finding the algorithms

Now, these videos aren’t nefarious deepfakes designed to undermine democracy and bring about the infopocalypse. (Who needs deepfakes for that when normal editing does the job just as well?) They’re not even that convincing, at least not without some extra time and effort. What they are is dumb and fun: two qualities I value highly when committing to

“We definitely acknowledge the concern of people being able to use these tools freely, and thus, we strongly suggest the users of the code and website to clearly present the videos as synthetic,” said Prajwal. He and his fellow researchers note that the program can be used for many beneficial purposes, too, like animation and dubbing video into new languages. Prajwal adds that they hope that making the code available will “encourage fruitful research on systems that can effectively combat misuse.”

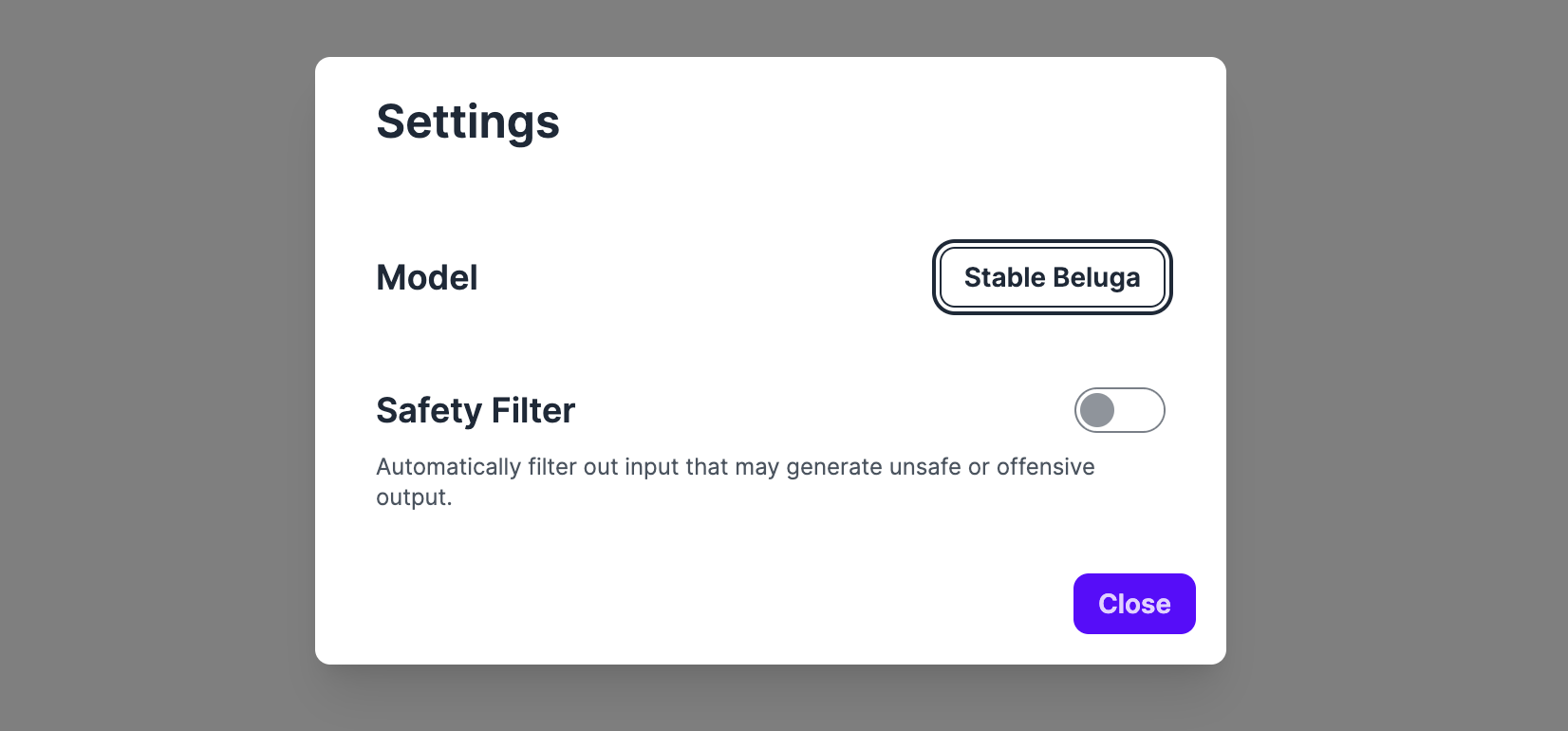

Trying (and failing) with the online demo

I originally tried using this online demo to make a deepfake. I found a video of my target (Apple CEO Tim Cook) and some audio for him to mime to (I chose Jim Carrey for some reason). I downloaded the video footage using QuickTime’s screen record function and the audio using a handy app called Piezo. Then I got both files and plugged them into the site and waited. And waited. And eventually, nothing happened.

For some reason, the demo didn’t like my clips. I tried making new ones and reducing their resolution, but it didn’t make a difference. This, it turns out, would be a motif in my deepfaking experience: random roadblocks would pop up that I just didn’t have the technical expertise to analyze. Eventually, I gave up and pinged Kelleher for help. He suggested I rename my files to remove any spaces. I did so and for some reason this worked. I now had a clip of Tim Cook miming along to Jim Carrey’s screen tests for Lemony Snicket’s A Series of Unfortunate Events. It was terrible (really just incredibly shoddy in terms of both verisimilitude and humor) but a personal achievement all the same.
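For anyone hitting the same wall, the rename fix is easy to script. What follows is a minimal Python sketch, not anything taken from the Wav2Lip project: the function name `strip_spaces` and the choice of underscores as the replacement character are assumptions made for illustration.

```python
from pathlib import Path


def strip_spaces(path: Path) -> Path:
    """Rename a file so its name contains no spaces and return the new path.

    Hypothetical helper for illustration; it is not part of Wav2Lip or its demo.
    """
    cleaned = path.with_name(path.name.replace(" ", "_"))
    if cleaned == path:
        return path  # nothing to change
    if cleaned.exists():
        # Refuse to overwrite another file that already has the cleaned name.
        raise FileExistsError(f"{cleaned} already exists")
    path.rename(cleaned)
    return cleaned
```

Calling it on a file named `tim cook clip.mp4` would leave a file named `tim_cook_clip.mp4` in the same folder.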

Moving to Colab

To try to improve on these results, I wanted to run the algorithms more directly. For this I turned to the authors’ GitHub, where they’d uploaded the underlying code. I would be using Google Colab to run it: the coding equivalent of Google Docs, which allows you to execute machine learning projects in the cloud. Again, it was the original authors who had done all the work by laying out the code in easy steps, but that didn’t stop me from walking into setback after setback like Sideshow Bob tackling a parking lot full of rakes.

Why couldn’t I authorize Colab to access my Google Drive? (Because I was logged into two different Google accounts.) Why couldn’t the Colab project find the weights for the neural network in my Drive folder? (Because I’d downloaded the Wav2Lip model rather than the Wav2Lip + GAN version.) Why wasn’t the audio file I uploaded being identified by the program? (Because I’d misspelled “aduoi” in the file name.) And so on and so forth.

Happily, many of my problems were solved by this YouTube tutorial, which alerted me to some of the subtler mistakes I’d made. These included creating two separate folders for the inputs and the model, labeled Wav2Lip and Wav2lip respectively. (Note the different capitalization on “lip” — that’s what tripped me up.) After watching the video a few times and spending hours troubleshooting things, I finally had a working model. Honestly, I could have wept, in part at my own apparent incompetence.
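Most of these mistakes come down to a name that doesn't exactly match what the code expects. A small check run before the main notebook cells could catch them early. The sketch below is an assumption-laden illustration in plain Python: `near_miss` is a hypothetical helper, not part of Wav2Lip or Colab, and any paths passed to it would need to match your own Drive layout.

```python
from pathlib import Path
from typing import Optional


def near_miss(expected: Path) -> Optional[str]:
    """Return None if `expected` exists exactly as spelled, otherwise a hint.

    Hypothetical helper for illustration. It compares names from a directory
    listing so that a capitalization mismatch is reported even on file
    systems that ignore case.
    """
    parent = expected.parent
    if not parent.is_dir():
        return f"folder not found: {parent}"
    names = [p.name for p in parent.iterdir()]
    if expected.name in names:
        return None
    for name in names:
        if name.lower() == expected.name.lower():
            return (f"found '{name}' but expected '{expected.name}' "
                    "(capitalization differs)")
    return f"nothing named '{expected.name}' in {parent}"
```

Running it against each folder and file the notebook needs, and printing any hint that isn't `None`, would flag a `Wav2lip` folder where `Wav2Lip` was expected. A misspelled file name would show up as "nothing named ..." instead.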

The final results

A few experiments later, I’d learned some of the quirks of the program (like its difficulty dealing with faces that aren’t straight on) and decided to create my deepfake pièce de résistance: Elon Musk lip-syncing to Tim Curry’s “space” speech from Command & Conquer: Red Alert 3. You can see the results for yourself below. And sure, it’s only a small contribution to the ongoing erasure of the boundaries between reality and fiction, but at least it’s mine:

okay this one worked out a little better - Elon Musk doing Tim Curry's space speech from command & conquer pic.twitter.com/vscq9wAKRU

— James Vincent (@jjvincent) September 9, 2020

What did I learn from this experience? Well, that making deepfakes is genuinely accessible, but it’s not necessarily easy. Although these algorithms have been around for years and can be used by anyone willing to put in a few hours’ work, it’s still true that simply editing video clips using traditional methods is faster and produces more convincing results, if your aim is to spread misinformation at least.

On the other hand, what impressed me was how quickly this technology spreads. This particular lip-syncing algorithm, Wav2Lip, was created by an international team of researchers affiliated with universities in India and the UK. They shared their work online at the end of August, and it was then picked up by Twitter and AI newsletters (I saw it in a well-known one called Import AI). The researchers made the code accessible and even created a public demo, and in a matter of weeks, people around the world had started experimenting with it, creating their own deepfakes for fun and, in my case, content. Search YouTube for “Wav2Lip” and you’ll find tutorials, demos, and plenty more example fakes.
