
www.nature.com

‘Remarkable’ AI tool designs mRNA vaccines that are more potent and stable

Software from Baidu Research yields jabs for COVID that have greater shelf stability and that trigger a larger antibody response in mice than conventionally designed shots.

Elie Dolgin

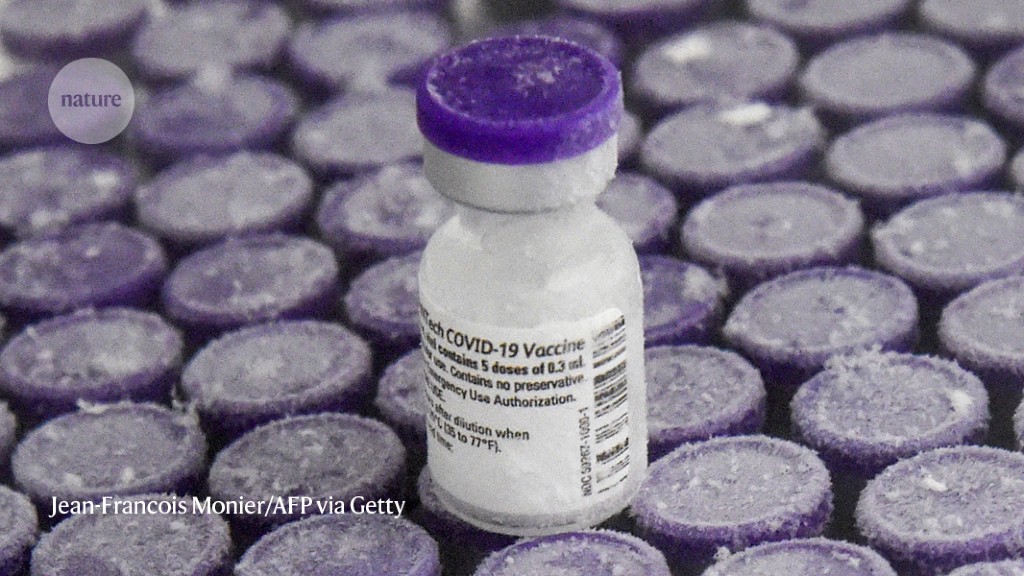

During the COVID-19 pandemic, mRNA vaccines against the coronavirus SARS-CoV-2 had to be kept at temperatures below –15 °C to maintain their stability. A new AI tool could improve that characteristic. Credit: Jean-Francois Monier/AFP via Getty

An artificial intelligence (AI) tool that optimizes the gene sequences found in mRNA vaccines could help to create jabs with greater potency and stability that could be deployed across the globe.

Developed by scientists at the California division of Baidu Research, an AI company based in Beijing, the software borrows techniques from computational linguistics to design mRNA sequences with shapes and structures more intricate than those used in current vaccines. This enables the genetic material to persist for longer than usual. The more stable the mRNA that’s delivered to a person’s cells, the more antigens are produced by the protein-making machinery in that person’s body. This, in turn, leads to a rise in protective antibodies, theoretically leaving immunized individuals better equipped to fend off infectious diseases.
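The "computational linguistics" angle is easier to see once design is framed as a search problem. Most amino acids can be spelled by several synonymous codons, so a single protein corresponds to many possible mRNAs, a little like one sentence being readable through many paths in a word lattice. The sketch below only counts those options. It assumes a hypothetical, partial codon table (`SYNONYMOUS_CODONS`, six amino acids from the standard genetic code) and a hypothetical helper `count_candidate_mrnas`; neither comes from the Baidu software.

```python
# Hypothetical, partial codon table (RNA alphabet, standard genetic code).
# Only the amino acids used in the examples below are included.
SYNONYMOUS_CODONS = {
    "M": ["AUG"],
    "F": ["UUU", "UUC"],
    "K": ["AAA", "AAG"],
    "G": ["GGU", "GGC", "GGA", "GGG"],
    "L": ["UUA", "UUG", "CUU", "CUC", "CUA", "CUG"],
    "S": ["UCU", "UCC", "UCA", "UCG", "AGU", "AGC"],
}


def count_candidate_mrnas(protein: str) -> int:
    """Number of distinct coding sequences for `protein`.

    Picture a lattice with one column per residue, each column holding that
    residue's synonymous codons; every left-to-right path is one candidate
    mRNA, so the path count is the product of the column sizes.
    """
    total = 1
    for aa in protein:
        total *= len(SYNONYMOUS_CODONS[aa])
    return total


print(count_candidate_mrnas("MFKG"))    # 1 * 2 * 2 * 4 = 16
print(count_candidate_mrnas("MLSLSG"))  # 1 * 6 * 6 * 6 * 6 * 4 = 5184
```

Every extra residue multiplies the count, so for a vaccine antigen hundreds of amino acids long, checking each candidate one by one is out of the question; an efficient search over the lattice is what makes the design problem tractable.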

What’s more, the enhanced structural complexity of the mRNA offers improved protection against vaccine degradation. During the COVID-19 pandemic, mRNA-based shots against the coronavirus SARS-CoV-2 famously had to be transported and kept at temperatures below –15 °C to maintain their stability. This limited their distribution in resource-poor regions of the world that lack access to ultracold storage facilities. A more resilient product, optimized by AI, could eliminate the need for cold-chain equipment to handle such jabs.

The new methodology is “remarkable”, says Dave Mauger, a computational RNA biologist who previously worked at Moderna in Cambridge, Massachusetts, a maker of mRNA vaccines. “The computational efficiency is really impressive and more sophisticated than anything that has come before.”

Linear thinking

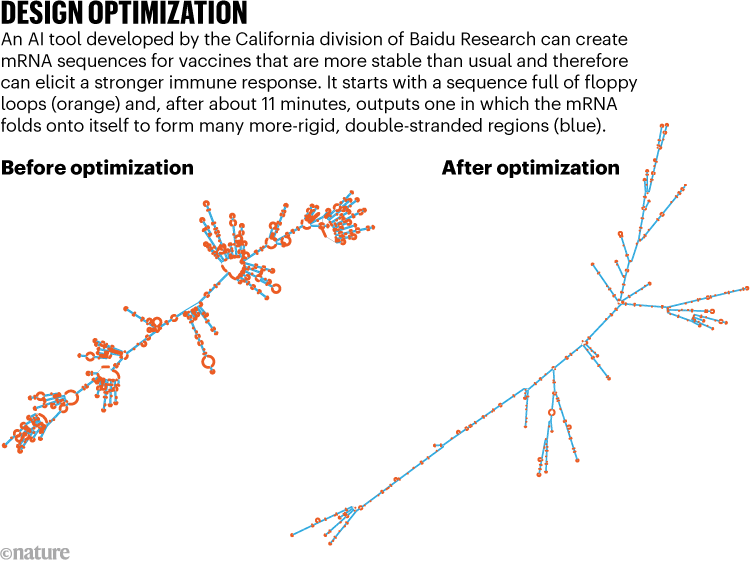

Vaccine developers already commonly adjust mRNA sequences to align with cells’ preferences for certain genetic instructions over others. This process, known as codon optimization, leads to more-efficient protein production. The Baidu tool takes this a step further, ensuring that the mRNA — usually a single-stranded molecule — loops back on itself to create double-stranded segments that are more rigid (see ‘Design optimization’).

Source: Adapted from Ref. 1
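Conventional codon optimization, as described above, can be sketched in a few lines. This is an illustration under stated assumptions, not any vaccine maker's pipeline. The codon frequencies in the hypothetical `CODON_USAGE` table are invented for the example (real tools use measured usage tables for the host organism), and the score used is the codon adaptation index (CAI), a standard measure defined as the geometric mean of each codon's frequency relative to its most frequent synonym.

```python
import math

# Hypothetical codon frequencies, invented for illustration (NOT real
# human codon-usage data). Frequencies for each amino acid sum to 1.
CODON_USAGE = {
    "M": {"AUG": 1.00},
    "F": {"UUU": 0.45, "UUC": 0.55},
    "K": {"AAA": 0.40, "AAG": 0.60},
    "G": {"GGU": 0.15, "GGC": 0.35, "GGA": 0.25, "GGG": 0.25},
}


def relative_adaptiveness(aa: str, codon: str) -> float:
    """w(codon) = frequency of codon / frequency of its most common synonym."""
    freqs = CODON_USAGE[aa]
    return freqs[codon] / max(freqs.values())


def codon_optimize(protein: str) -> list[str]:
    """Naive codon optimization: choose the most frequent codon for each residue."""
    return [max(CODON_USAGE[aa], key=CODON_USAGE[aa].get) for aa in protein]


def cai(protein: str, codons: list[str]) -> float:
    """Codon adaptation index: geometric mean of w over every codon."""
    logs = [math.log(relative_adaptiveness(aa, c)) for aa, c in zip(protein, codons)]
    return math.exp(sum(logs) / len(logs))


protein = "MFKG"
optimized = codon_optimize(protein)
print(optimized)                                              # ['AUG', 'UUC', 'AAG', 'GGC']
print(cai(protein, optimized))                                # 1.0
print(round(cai(protein, ["AUG", "UUU", "AAA", "GGU"]), 3))   # 0.695
```

Picking the top codon everywhere gives a CAI of 1.0 by construction, but this scoring says nothing about how the resulting strand folds. That blind spot is what a structure-aware design tool is meant to address.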

Known as LinearDesign, the tool takes just minutes to run on a desktop computer. In validation tests, it has yielded vaccines that, when evaluated in mice, triggered antibody responses up to 128 times greater than those mounted after immunization with more conventional, codon-optimized vaccines. The algorithm also helped to extend the shelf stability of vaccine designs up to sixfold in standard test-tube assays performed at body temperature.
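To make the idea of trading structure against codon choice concrete, here is a toy joint designer. It is explicitly not LinearDesign. It replaces the thermodynamic free-energy models used by real RNA-folding tools with Nussinov base-pair maximization, replaces efficient search with brute-force enumeration, and relies on an invented codon table and an arbitrary weight. All names (`CODON_USAGE`, `LAMBDA`, `max_base_pairs`, `log_cai`, `design`) are hypothetical. The sketch only shows the principle of rewarding sequences that fold back on themselves while penalizing rare codons.

```python
import itertools
import math

# Hypothetical codon frequencies (illustrative only, not real usage data).
CODON_USAGE = {
    "M": {"AUG": 1.00},
    "F": {"UUU": 0.45, "UUC": 0.55},
    "K": {"AAA": 0.40, "AAG": 0.60},
    "G": {"GGU": 0.15, "GGC": 0.35, "GGA": 0.25, "GGG": 0.25},
}
# Watson-Crick pairs plus G-U wobble pairs.
PAIRS = {("A", "U"), ("U", "A"), ("G", "C"), ("C", "G"), ("G", "U"), ("U", "G")}
MIN_LOOP = 3   # a hairpin must enclose at least 3 unpaired bases
LAMBDA = 1.0   # hypothetical weight of codon usage relative to pairing


def max_base_pairs(seq: str) -> int:
    """Nussinov dynamic programming: maximum number of nested base pairs.

    A crude stand-in for the free-energy models that real folding tools use.
    """
    n = len(seq)
    dp = [[0] * n for _ in range(n)]
    for span in range(MIN_LOOP + 1, n):
        for i in range(n - span):
            j = i + span
            best = dp[i][j - 1]                    # case 1: base j is unpaired
            for k in range(i, j - MIN_LOOP):       # case 2: base j pairs with k
                if (seq[k], seq[j]) in PAIRS:
                    left = dp[i][k - 1] if k > i else 0
                    best = max(best, left + 1 + dp[k + 1][j - 1])
            dp[i][j] = best
    return dp[0][n - 1] if n else 0


def log_cai(protein: str, codons) -> float:
    """Log of the codon adaptation index (mean log relative adaptiveness)."""
    total = 0.0
    for aa, c in zip(protein, codons):
        freqs = CODON_USAGE[aa]
        total += math.log(freqs[c] / max(freqs.values()))
    return total / len(codons)


def design(protein: str) -> tuple[str, float]:
    """Enumerate every synonymous mRNA and keep the best joint score.

    Exponential in protein length: workable for a toy, hopeless for a real antigen.
    """
    best_seq, best_score = "", -math.inf
    for codons in itertools.product(*(CODON_USAGE[aa] for aa in protein)):
        seq = "".join(codons)
        score = max_base_pairs(seq) + LAMBDA * log_cai(protein, codons)
        if score > best_score:
            best_seq, best_score = seq, score
    return best_seq, best_score


seq, score = design("MFKG")
print(seq, max_base_pairs(seq), round(score, 3))
```

Raising `LAMBDA` pushes the result back toward plain codon optimization; lowering it favours more base pairing. Because the loop over `itertools.product` grows exponentially, this approach quickly becomes infeasible as proteins get longer, so a tool that handles a full antigen in minutes must search the space far more cleverly than this.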

“It’s a tremendous improvement,” says Yujian Zhang, former head of mRNA technology at StemiRNA Therapeutics in Shanghai, China, who led the experimental-validation studies.

So far, Zhang and his colleagues have tested LinearDesign-enhanced vaccines against only COVID-19 and shingles in mice. But the technique should prove useful when designing mRNA vaccines against any disease, says Liang Huang, a former Baidu scientist who spearheaded the tool’s creation. It should also help in mRNA-based therapeutics, says Huang, who is now a computational biologist at Oregon State University in Corvallis.

The researchers reported their findings on 2 May in Nature¹.

Optimal solutions

Already, the tool has been used to optimize at least one authorized vaccine: a COVID-19 shot from StemiRNA, called SW-BIC-213, that won approval for emergency use in Laos late last year. Under a licensing agreement established in 2021, the French pharma giant Sanofi has been using LinearDesign in its own experimental mRNA products, too.


Executives at both companies stress that many design features factor into the performance of their vaccine candidates. But LinearDesign is “certainly one type of algorithm that can help with this”, says Sanofi’s Frank DeRosa, head of research and biomarkers at the company’s mRNA Center of Excellence.

Another approach was reported last year. A team led by Rhiju Das, a computational biologist at Stanford School of Medicine in California, demonstrated that even greater protein expression can be eked out of mRNA, in cultured human cells at least, if certain loop patterns are taken out of their strands, even when such changes loosen the overall rigidity of the molecule².

That suggests that alternative algorithms might be preferable, says theoretical chemist Hannah Wayment-Steele, a former member of Das’s team who is now at Brandeis University in Waltham, Massachusetts. Or, it suggests that manual fine-tuning of LinearDesign-optimized mRNA could lead to even better vaccine sequences.

But according to David Mathews, a computational RNA biologist at the University of Rochester Medical Center in New York, LinearDesign can do the bulk of the heavy lifting. “It gets people in the right ballpark to start doing any optimization,” he says. Mathews helped develop the algorithm and is a co-founder, along with Huang, of Coderna.ai, a start-up based in Sunnyvale, California, that is developing the software further. Their first task has been updating the platform to account for the types of chemical modification found in most approved and experimental mRNA vaccines; LinearDesign, in its current form, is based on an unmodified mRNA platform that has fallen out of favour among most vaccine developers.

A structured approach

But mouse studies and cell experiments are one thing. Human trials are another. Given that the immune system has evolved to recognize certain RNA structures as foreign — especially the twisted ladder shapes within many viruses that encode their genomes as double-stranded RNA — some researchers worry that an optimization algorithm such as LinearDesign could end up creating vaccine sequences that spur harmful immune reactions in people.

“That’s kind of a liability,” says Anna Blakney, an RNA bioengineer at the University of British Columbia in Vancouver, Canada, who was not involved in the study.

Early results from human clinical trials involving StemiRNA’s SW-BIC-213 suggest the extra structure is not a problem, however. In small booster trials reported so far, the shot’s side effects have proved no worse than those reported with other mRNA-based COVID-19 vaccines³. But as Blakney points out: “We’ll learn more about that in the coming years.”
