DIY GPT-Powered Monocle Will Tell You What to Say in Every Conversation

A student coder used GPT-4 and open-source hardware to create RizzGPT and LifeOS, systems designed to feed you the right line for the right time.

By Chloe Xiang

April 26, 2023, 9:00am

IMAGE: BRYAN CHIANG

AI chatbots that can churn out convincing text are all the rage, but what if you could wear one on your face to feed you the right line for any given moment? To give you, as Gen Z calls sparkling charisma, rizz?

“Say goodbye to awkward dates and job interviews,” a Stanford student developer named Bryan Chiang tweeted in March. “We made rizzGPT—real-time Charisma as a Service (CaaS). it listens to your conversation and tells you exactly what to say next.”

Chiang is one of many developers trying to build autonomous agents, so-called auto-GPTs, on top of OpenAI’s GPT-4 language model. Developers of auto-GPT models hope to create applications that can do a number of things on their own, such as formulating and executing a list of tasks, and writing and debugging their own code. In this case, Chiang created a wearable GPT-powered monocle: when someone asks the wearer a question, the monocle projects a caption that the wearer can read out loud. The effect is something like a DIY version of Google Glass.

In the video below Chiang’s tweet, a man puts mock interview questions to the person behind the camera, who uses RizzGPT to reply. “I hear you’re looking for a job to teach React Native,” the interviewer says. “Thank you for your interest. I’ve been studying React Native for the past few months and I am confident that I have the skills and knowledge necessary for the job,” reads the GPT-4-generated line that RizzGPT prompts the interviewee to say.

“We have to make computing more personal and it could be integrated into every facet of our life, not just like when we're on our screens,” Chiang told Motherboard. “Even if we're out and about talking to friends, walking around, I feel like there's so much more that computers can do and I don't think people are thinking big enough.”

RizzGPT combines GPT-4 with Whisper, an OpenAI-created speech recognition system, and Monocle AR glasses, an open source device. Chiang said generative AI was able to make this app possible because its ability to perceive text and audio allows the AI to understand and process a live conversation.
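The flow described above (speech in, transcript to a language model, suggested line out to the display) can be sketched in a few lines of Python. This is only an illustration of the general loop, not Chiang's code, which the article does not show. The class name `ConversationCoach`, its three callables, and the stand-in functions are all hypothetical. In a real build the stand-ins would be replaced by a speech recognizer such as Whisper, a call to a model such as GPT-4, and whatever interface the Monocle exposes for drawing text.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ConversationCoach:
    """Hypothetical sketch of a listen -> suggest -> display loop."""

    transcribe: Callable[[bytes], str]    # stand-in for a speech recognizer
    suggest: Callable[[List[str]], str]   # stand-in for a language model call
    display: Callable[[str], None]        # stand-in for the heads-up display
    history: List[str] = field(default_factory=list)

    def handle_audio(self, audio: bytes) -> str:
        # 1. Turn the other speaker's audio into text.
        heard = self.transcribe(audio)
        self.history.append(f"Other person: {heard}")
        # 2. Ask the model for a reply, given the conversation so far.
        reply = self.suggest(self.history)
        self.history.append(f"Wearer: {reply}")
        # 3. Show the suggested line to the wearer.
        self.display(reply)
        return reply


# Stand-in components so the sketch runs without any hardware or network.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the audio bytes are already text


def fake_suggest(history: List[str]) -> str:
    return f"(suggested reply to turn {len(history)})"


shown: List[str] = []  # collects what the "display" was asked to show
coach = ConversationCoach(fake_transcribe, fake_suggest, shown.append)
coach.handle_audio(b"Why should we hire you?")
```

Passing the three stages in as plain functions keeps the loop itself independent of any particular speech, model, or display service. It also makes the source of the delay Chiang mentions easy to see: each turn waits on transcription and then on text generation before anything reaches the wearer.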

After creating RizzGPT, Chiang decided to take the app through further training to create LifeOS. LifeOS, which was manually trained on Chiang’s personal messages, pictures of his friends, and other data, allowed the monocle to recognize his friends’ faces and bring up relevant details when talking to them.

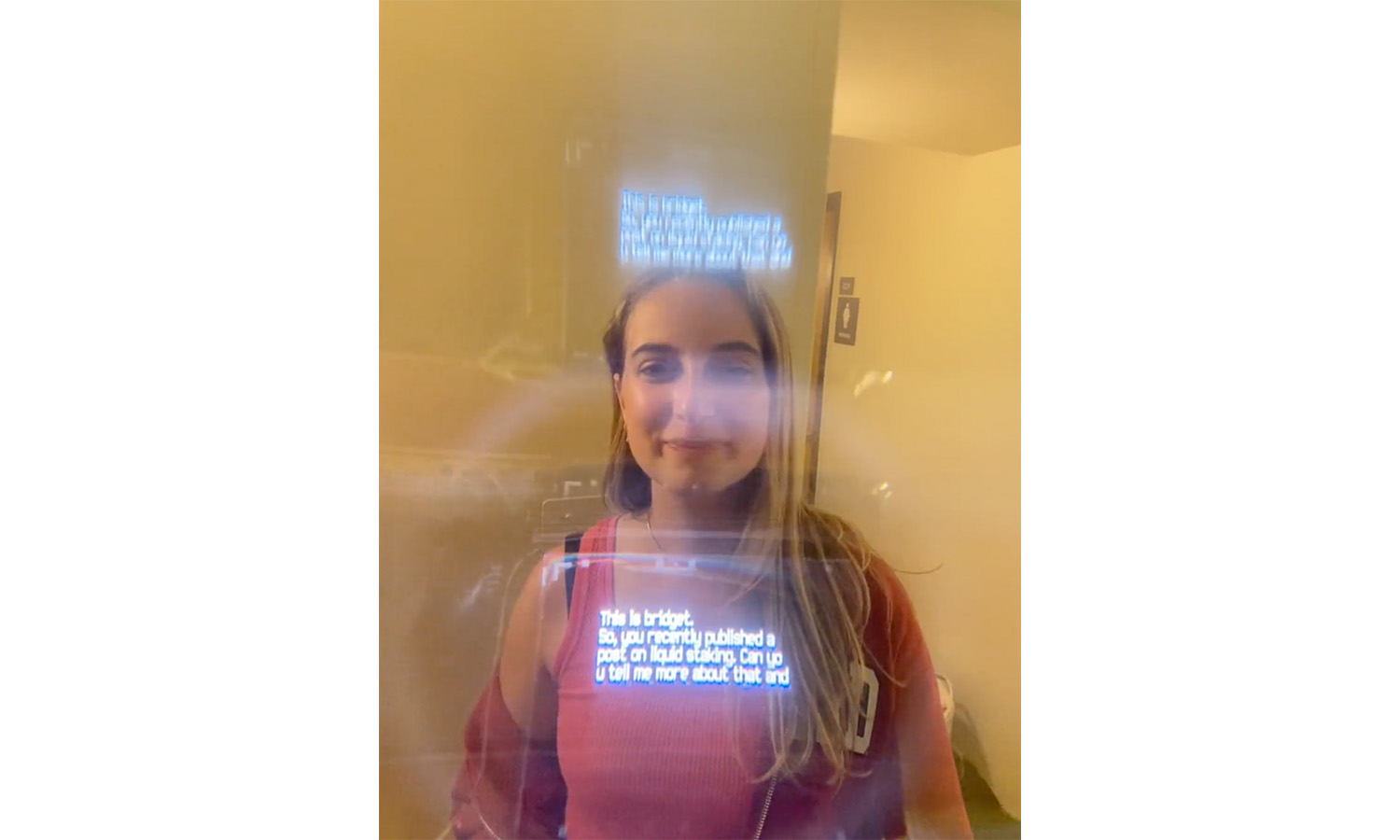

I conducted a live demo with Chiang to see firsthand how RizzGPT works and had a mock VICE reporter interview with him while he wore the glasses. I searched for the most popular interview questions on Google to ask him and began the interview by saying, “Hi, thank you so much for your application for the reporter position at VICE. Today we will be interviewing you in consideration of this position.”

Using RizzGPT, Chiang responded to all seven of my questions adequately and eloquently, but all the responses were broad and cliché.

In response to “Why should we hire you?” he said, “Thank you for this position. I believe I am the best candidate for this job because I have a passion for journalism and a deep understanding of the current media landscape.” When I asked, “What are your weaknesses?” he replied, “My biggest weakness is my tendency to be too detail-oriented in my work.”

The most creative response RizzGPT gave was in response to my question, “If you were an animal, which animal would you want to be and why?” Chiang responded, using the AI's line: “If I were an animal, I would want to be a cheetah. They are incredibly fast and agile, which reflects my ambition, drive, and focus.”

Chiang acknowledged that RizzGPT still needs a lot more work on the hardware, voice, and personalization. He said that it’s still difficult to wear the monocle comfortably, that the GPT responses lag, resulting in an awkward pause between speakers, and that the monocle can’t refer to personal information without manual training. Yet he said he hopes his demos can show the next steps of generative AI and its everyday applications.

“Hopefully, by putting out these fun demos, it shows people that this is what’s possible and this is the future that we’re heading towards,” he said.