1/11

@astonzhangAZ

Our Llama 4’s industry-leading 10M+ multimodal context length (20+ hours of video) has been a wild ride. The iRoPE architecture I’d been working on helped a bit with the long-term infinite context goal toward AGI. Huge thanks to my incredible teammates!

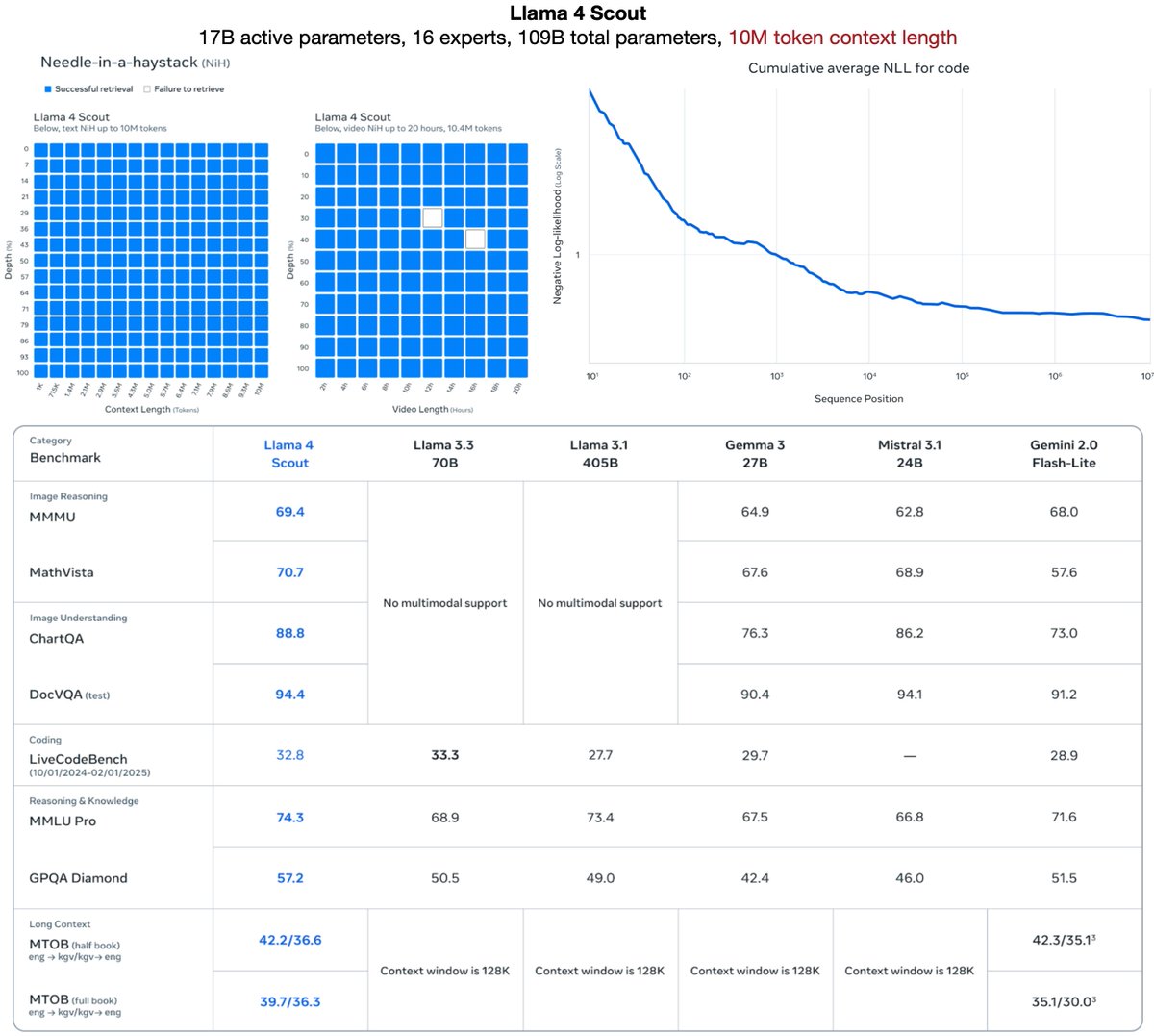

Llama 4 Scout

17B active params · 16 experts · 109B total params

Fits on a single H100 GPU with Int4

Industry-leading 10M+ multimodal context length enables personalization, reasoning over massive codebases, and even remembering your day in video
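
The "fits on a single H100 GPU with Int4" claim for Scout can be sanity-checked with a back-of-envelope calculation. This is only a rough sketch: it counts weight storage alone (no KV cache, activations, or quantization scale overhead), and the 80 GB H100 capacity is an assumption that does not come from the thread.

```python
# Back-of-envelope weight memory for Llama 4 Scout (parameter count from the post).
TOTAL_PARAMS = 109e9     # 109B total params; all experts must be resident in memory
H100_MEMORY_GB = 80      # assumption: the 80 GB H100 variant


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


int4_gb = weight_memory_gb(TOTAL_PARAMS, 4)
bf16_gb = weight_memory_gb(TOTAL_PARAMS, 16)

print(f"Int4: {int4_gb:.1f} GB, BF16: {bf16_gb:.1f} GB")
# prints "Int4: 54.5 GB, BF16: 218.0 GB"
print("Int4 weights fit on one H100:", int4_gb < H100_MEMORY_GB)
```

Under these assumptions the Int4 weights leave roughly 25 GB of headroom for everything else, while 16-bit weights would not fit on a single card.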

Llama 4 Maverick

17B active params · 128 experts · 400B total params · 1M+ context length

Experimental chat version scores an Elo of 1417 (Rank #2) on LMArena

Llama 4 Behemoth (in training)

288B active params · 16 experts · 2T total params

Pretraining (FP8) with 30T multimodal tokens across 32K GPUs

Serves as the teacher model for Maverick codistillation

All models use early fusion to seamlessly integrate text, image, and video tokens into a unified model backbone.

Our post-training pipeline: lightweight SFT → online RL → lightweight DPO. Overuse of SFT/DPO can over-constrain the model and limit exploration during online RL—keep it light.

Solving long context by aiming for infinite context helps guide better architectures.

We can't train on infinite-length sequences—so framing it as an infinite context problem narrows the solution space, especially via length extrapolation: train on short, generalize to much longer.

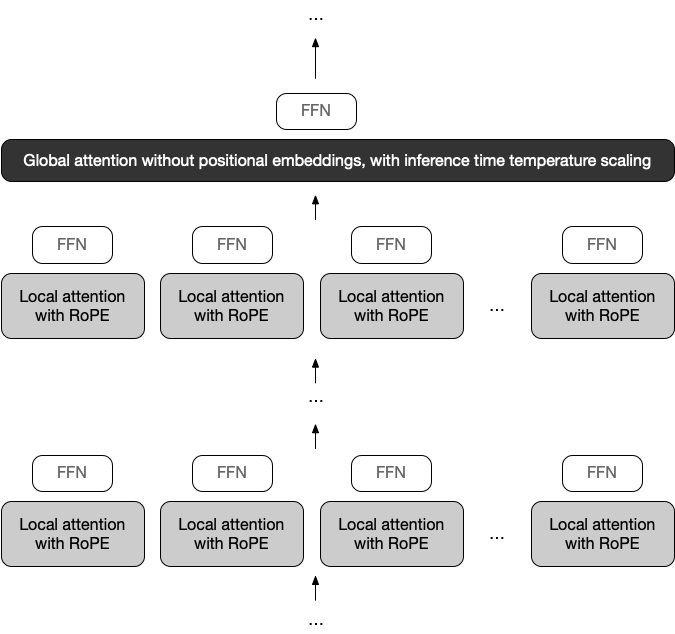

Enter the iRoPE architecture (“i” = interleaved layers, infinite):

Local parallelizable chunked attention with RoPE models short contexts only (e.g., 8K)

Only global attention layers model long context (e.g., >8K) without position embeddings—improving extrapolation. Our max training length: 256K.
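
The two layer types described above can be sketched as attention masks. This is a toy illustration, not the actual Llama 4 implementation: the function names are hypothetical, "chunked" is read here as block-diagonal causal attention, and the interleaving ratio (`global_every=4`) is an assumed value that the thread does not state.

```python
from typing import List


def layer_kinds(num_layers: int, global_every: int = 4) -> List[str]:
    """Interleave layer types: every `global_every`-th layer is a global
    layer without position embeddings, the rest are local RoPE layers.
    The ratio is a hypothetical choice for illustration."""
    return [
        "global_nope" if (i + 1) % global_every == 0 else "local_rope"
        for i in range(num_layers)
    ]


def chunked_causal_mask(seq_len: int, chunk_size: int) -> List[List[bool]]:
    """mask[q][k] is True when query q may attend to key k.
    Local layers: causal AND both positions lie in the same chunk."""
    return [
        [k <= q and k // chunk_size == q // chunk_size for k in range(seq_len)]
        for q in range(seq_len)
    ]


def global_causal_mask(seq_len: int) -> List[List[bool]]:
    """Global layers: plain causal attention over the whole sequence."""
    return [[k <= q for k in range(seq_len)] for q in range(seq_len)]


# Tiny example: 6 tokens, chunks of 3 (the post uses chunks around 8K).
for row in chunked_causal_mask(6, 3):
    print("".join("x" if allowed else "." for allowed in row))
# x.....
# xx....
# xxx...
# ...x..
# ...xx.
# ...xxx
print(layer_kinds(8))
```

Because each chunk is independent in the local layers, chunks can be processed in parallel, and only the global layers ever see token pairs that are further apart than one chunk.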

As context increases, attention weights flatten—making inference harder. To compensate, we apply inference-time temperature scaling at global layers to enhance long-range reasoning while preserving short-context (e.g., α=8K) performance:

xq *= 1 + log(floor(i / α) + 1) * β # i = position index
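
To get a feel for the formula above, here is a minimal sketch that evaluates the scaling factor at a few positions. The function name is hypothetical, and β = 0.1 is an illustrative value chosen for this example; the thread gives α = 8K but does not state β.

```python
import math


def query_scale(position: int, alpha: int = 8192, beta: float = 0.1) -> float:
    """Scaling factor applied to the query at `position` in global layers:
    1 + log(floor(position / alpha) + 1) * beta.
    alpha=8192 follows the post's 8K example; beta=0.1 is an assumption."""
    return 1.0 + math.log(math.floor(position / alpha) + 1) * beta


# Positions below alpha give floor(...) = 0 and log(1) = 0, so the factor
# is exactly 1.0 and short-context behaviour is left unchanged.
for pos in (0, 8_191, 8_192, 262_144, 10_000_000):
    print(f"position {pos:>10,}: scale = {query_scale(pos):.4f}")
```

The factor grows only logarithmically and in steps of α tokens, so queries deep into a long context are sharpened gently while everything inside the first α positions is left untouched.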

We believe in open research. We'll share more technical details very soon—via podcasts. Stay tuned!

2/11

@XiongWenhan

Cool work!

3/11

@astonzhangAZ

Thanks bro!

4/11

@magpie_rayhou

Congrats!

5/11

@astonzhangAZ

Thanks bro!

6/11

@starbuxman

Hi - congrats! I’d love to learn more. Would you be interested in an interview on Coffee + Software? I contribute to the Spring AI project, too.

7/11

@aranimontes

With 20h, you could basically record your whole day and ask it to summarise what happened and share it by email?

Does the 10M mean it doesn't start hallucinating after some time? Or just that it can analyse that much, with no guarantee on the "quality"?

8/11

@yilin_sung

Congrats! Look forward to more tech details

9/11

@HotAisle

We've got @AMD MI300x compute to run this model available as low as $1.50/gpu/hr.

10/11

@MaximeRivest

How modular are the experts? Could we load only some, for very specific domain inference, with very short generation?

11/11

@eliebakouch

Also, did you evaluate on other benchmarks such as RULER or HELMET?

