You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

The A.I Megathread (LLM , GPT , Development)

More options

Who Replied?1/11

@VahidK

In my opinion we have already achieved AGI and it’s even more clear with O1. We have not achieved “better than any human at any task” but what we have is “better than most humans at most tasks”. Some say LLMs only know how to follow a recipe. Firstly, no one can really explain what a trillion parameter deep neural net can learn. But even if you believe that, the whole scientific method can be summarized as a recipe: observe, hypothesize, and verify. Good scientists can produce better hypothesis based on their intuition, but that intuition itself was built by many trial and errors. There’s nothing that can’t be learned with examples.

2/11

@ai_exci

AGI achieved! Here’s a picture of the Mona Lisa created by new o1 model using html and CSS

3/11

@DanielSMatthews

True AGI will be able to parse any form of data, even novel forms, and compute any form of instructions, even intuit if the instruction computations are likely to stop or not. We are not there yet, wait 6 months or 6 years...

AI is not exactly sane either, because of the cognitive dissonances set up by the biases in the training data.

e.g. Point out to an AI that the UN says that covid slowed down CO2 production, and that the UN says that CO2 production is reflected in the Keeling Curve dataset, yet despite the accuracy and frequency of the measurements we cannot detect a proportionate signal from the covid slowdowns in the global CO2 data therefore we cannot even show a correlation between human activities and the rate of CO2 rise!

4/11

@AIMachineDream

“Better than most specialists at most tasks they specialize in.” would be a better definition.

For AGI to be highly useful it needs to outperform the humans who work in those fields.

5/11

@JThornbull

Imo: Unless I can show a task to the AI and tell it how to fulfill that task, like I would teach a new colleague, and have the AI ask clarifying questions, only to then leave it to do the task, communicate for help when necessary, it is not near AGI.

6/11

@siriuscattitude

Is there an example of a single creative scientific hypothesis O1 came up with that falls outside its training data?

7/11

@SohamR_7113

That's a great perspective! It's fascinating how far we've come with AI. The comparison to the scientific method makes a lot of sense—experience really does shape understanding!

8/11

@DannysWeb3

ChatGPT 4o is AGI

9/11

@AsherTopaz

Your threshold for what qualifies as AGI is abysmal.

If we use fiction as a simple way for people to understand even Jarvis from iron man doesnt qualify as AGI and we dont even have that.

AGI means the machine can teach itself anything due to the ability for abstraction.

The general means its an all purpose model that when put in any situation it will adapt by itself to suceed without human instruction or input.

LLMs lack the ability for abstraction so they are not AGI.

Real AGI can abstract information.

If you seriously want an AGI that can cure cancer then abstraction is important.

10/11

@MikePFrank

Can O1 work the drive-thru window at a McDonald's?

11/11

@HulsmanZacchary

LLM’s are as good at, if not better than humans in many tasks.

But I don’t see an argument for them being “AGI”.

They still fall short of humans in some very vital regards. For example, it couldn’t identify a problem with that image of a hand with 6 fingers. That would be obvious to even people of below average intelligence. That’s not even a particularly challenging visual task.

Even if your definition is more based on usefulness, like being able to do 80% of economic tasks…. These LLM’s are still only capable of doing a tiny fraction of jobs.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@VahidK

In my opinion we have already achieved AGI and it’s even more clear with O1. We have not achieved “better than any human at any task” but what we have is “better than most humans at most tasks”. Some say LLMs only know how to follow a recipe. Firstly, no one can really explain what a trillion parameter deep neural net can learn. But even if you believe that, the whole scientific method can be summarized as a recipe: observe, hypothesize, and verify. Good scientists can produce better hypothesis based on their intuition, but that intuition itself was built by many trial and errors. There’s nothing that can’t be learned with examples.

2/11

@ai_exci

AGI achieved! Here’s a picture of the Mona Lisa created by new o1 model using html and CSS

3/11

@DanielSMatthews

True AGI will be able to parse any form of data, even novel forms, and compute any form of instructions, even intuit if the instruction computations are likely to stop or not. We are not there yet, wait 6 months or 6 years...

AI is not exactly sane either, because of the cognitive dissonances set up by the biases in the training data.

e.g. Point out to an AI that the UN says that covid slowed down CO2 production, and that the UN says that CO2 production is reflected in the Keeling Curve dataset, yet despite the accuracy and frequency of the measurements we cannot detect a proportionate signal from the covid slowdowns in the global CO2 data therefore we cannot even show a correlation between human activities and the rate of CO2 rise!

4/11

@AIMachineDream

“Better than most specialists at most tasks they specialize in.” would be a better definition.

For AGI to be highly useful it needs to outperform the humans who work in those fields.

5/11

@JThornbull

Imo: Unless I can show a task to the AI and tell it how to fulfill that task, like I would teach a new colleague, and have the AI ask clarifying questions, only to then leave it to do the task, communicate for help when necessary, it is not near AGI.

6/11

@siriuscattitude

Is there an example of a single creative scientific hypothesis O1 came up with that falls outside its training data?

7/11

@SohamR_7113

That's a great perspective! It's fascinating how far we've come with AI. The comparison to the scientific method makes a lot of sense—experience really does shape understanding!

8/11

@DannysWeb3

ChatGPT 4o is AGI

9/11

@AsherTopaz

Your threshold for what qualifies as AGI is abysmal.

If we use fiction as a simple way for people to understand even Jarvis from iron man doesnt qualify as AGI and we dont even have that.

AGI means the machine can teach itself anything due to the ability for abstraction.

The general means its an all purpose model that when put in any situation it will adapt by itself to suceed without human instruction or input.

LLMs lack the ability for abstraction so they are not AGI.

Real AGI can abstract information.

If you seriously want an AGI that can cure cancer then abstraction is important.

10/11

@MikePFrank

Can O1 work the drive-thru window at a McDonald's?

11/11

@HulsmanZacchary

LLM’s are as good at, if not better than humans in many tasks.

But I don’t see an argument for them being “AGI”.

They still fall short of humans in some very vital regards. For example, it couldn’t identify a problem with that image of a hand with 6 fingers. That would be obvious to even people of below average intelligence. That’s not even a particularly challenging visual task.

Even if your definition is more based on usefulness, like being able to do 80% of economic tasks…. These LLM’s are still only capable of doing a tiny fraction of jobs.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Last edited:

https://www.thecoli.com/threads/the-a-i-megathread-llm-gpt-development.960563/page-208#post-54422665

1/11

@VahidK

In my opinion we have already achieved AGI and it’s even more clear with O1. We have not achieved “better than any human at any task” but what we have is “better than most humans at most tasks”. Some say LLMs only know how to follow a recipe. Firstly, no one can really explain what a trillion parameter deep neural net can learn. But even if you believe that, the whole scientific method can be summarized as a recipe: observe, hypothesize, and verify. Good scientists can produce better hypothesis based on their intuition, but that intuition itself was built by many trial and errors. There’s nothing that can’t be learned with examples.

2/11

@ai_exci

AGI achieved! Here’s a picture of the Mona Lisa created by new o1 model using html and CSS

3/11

@DanielSMatthews

True AGI will be able to parse any form of data, even novel forms, and compute any form of instructions, even intuit if the instruction computations are likely to stop or not. We are not there yet, wait 6 months or 6 years...

AI is not exactly sane either, because of the cognitive dissonances set up by the biases in the training data.

e.g. Point out to an AI that the UN says that covid slowed down CO2 production, and that the UN says that CO2 production is reflected in the Keeling Curve dataset, yet despite the accuracy and frequency of the measurements we cannot detect a proportionate signal from the covid slowdowns in the global CO2 data therefore we cannot even show a correlation between human activities and the rate of CO2 rise!

4/11

@AIMachineDream

“Better than most specialists at most tasks they specialize in.” would be a better definition.

For AGI to be highly useful it needs to outperform the humans who work in those fields.

5/11

@JThornbull

Imo: Unless I can show a task to the AI and tell it how to fulfill that task, like I would teach a new colleague, and have the AI ask clarifying questions, only to then leave it to do the task, communicate for help when necessary, it is not near AGI.

6/11

@siriuscattitude

Is there an example of a single creative scientific hypothesis O1 came up with that falls outside its training data?

7/11

@SohamR_7113

That's a great perspective! It's fascinating how far we've come with AI. The comparison to the scientific method makes a lot of sense—experience really does shape understanding!

8/11

@DannysWeb3

ChatGPT 4o is AGI

9/11

@AsherTopaz

Your threshold for what qualifies as AGI is abysmal.

If we use fiction as a simple way for people to understand even Jarvis from iron man doesnt qualify as AGI and we dont even have that.

AGI means the machine can teach itself anything due to the ability for abstraction.

The general means its an all purpose model that when put in any situation it will adapt by itself to suceed without human instruction or input.

LLMs lack the ability for abstraction so they are not AGI.

Real AGI can abstract information.

If you seriously want an AGI that can cure cancer then abstraction is important.

10/11

@MikePFrank

Can O1 work the drive-thru window at a McDonald's?

11/11

@HulsmanZacchary

LLM’s are as good at, if not better than humans in many tasks.

But I don’t see an argument for them being “AGI”.

They still fall short of humans in some very vital regards. For example, it couldn’t identify a problem with that image of a hand with 6 fingers. That would be obvious to even people of below average intelligence. That’s not even a particularly challenging visual task.

Even if your definition is more based on usefulness, like being able to do 80% of economic tasks…. These LLM’s are still only capable of doing a tiny fraction of jobs.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

This doesn't make any sense. ChatGPT itself cannot do "most" tasks. Unless they have agents that haven't been released to the public yet

1/11

@mackenziejem

[Quoted tweet]

To do this, we formulated a *Bayesian* tree search, inspired by classical ideas from meta-reasoning.

There's a prior over possible trees, and search = *inference* of the optimal branch, where each observed reward gives us clues (posterior update) on which branch is optimal

2/11

@mackenziejem

Training a Transformer on the tokenized step-by-step search process of A* to improve the reasoning weights embedded in base LLMs

As opposed to hard-coding the MCTS or similar search algo alongside the Transformer or directly learning to solve planning problems

The model's trained in a two-step process:

1. Search Dynamics Bootstrapping: train a Transformer to imitate the search dynamics of A*. This process logs the internal steps of the A* algorithm (when nodes are added and removed during the search) into token sequences, which are then used as training data for the Transformer. This allows the Transformer to learn not just the optimal solution, but the full search process leading to the solution.

2. Fine-Tuning: Once trained, the model is fine-tuned to reduce the number of search steps required to solve tasks

Key Results:

• Efficiency Gains: Searchformer solves 93.7% of previously unseen Sokoban puzzles with up to 26.8% fewer search steps compared to the A* implementation used for training. This demonstrates that the model learns to solve planning tasks more efficiently than A* itself.

• Smaller Dataset and Model Size: The Searchformer outperforms baseline models that predict the optimal plan directly, using 5–10 times smaller model sizes and 10 times fewer training sequences.

• Scalability: The approach shows improved scalability to larger and more complex decision-making tasks, outperforming other methods as task complexity increases.

3/11

@mackenziejem

LLM "reasoning" still extremely fragile to small changes in the set up, incl o1

[Quoted tweet]

1/ Can Large Language Models (LLMs) truly reason? Or are they just sophisticated pattern matchers? In our latest preprint, we explore this key question through a large-scale study of both open-source like Llama, Phi, Gemma, and Mistral and leading closed models, including the recent OpenAI GPT-4o and o1-series.

arxiv.org/pdf/2410.05229

Work done with @i_mirzadeh, @KeivanAlizadeh2, Hooman Shahrokhi, Samy Bengio, @OncelTuzel.

#LLM #Reasoning #Mathematics #AGI #Research #Apple

4/11

@mackenziejem

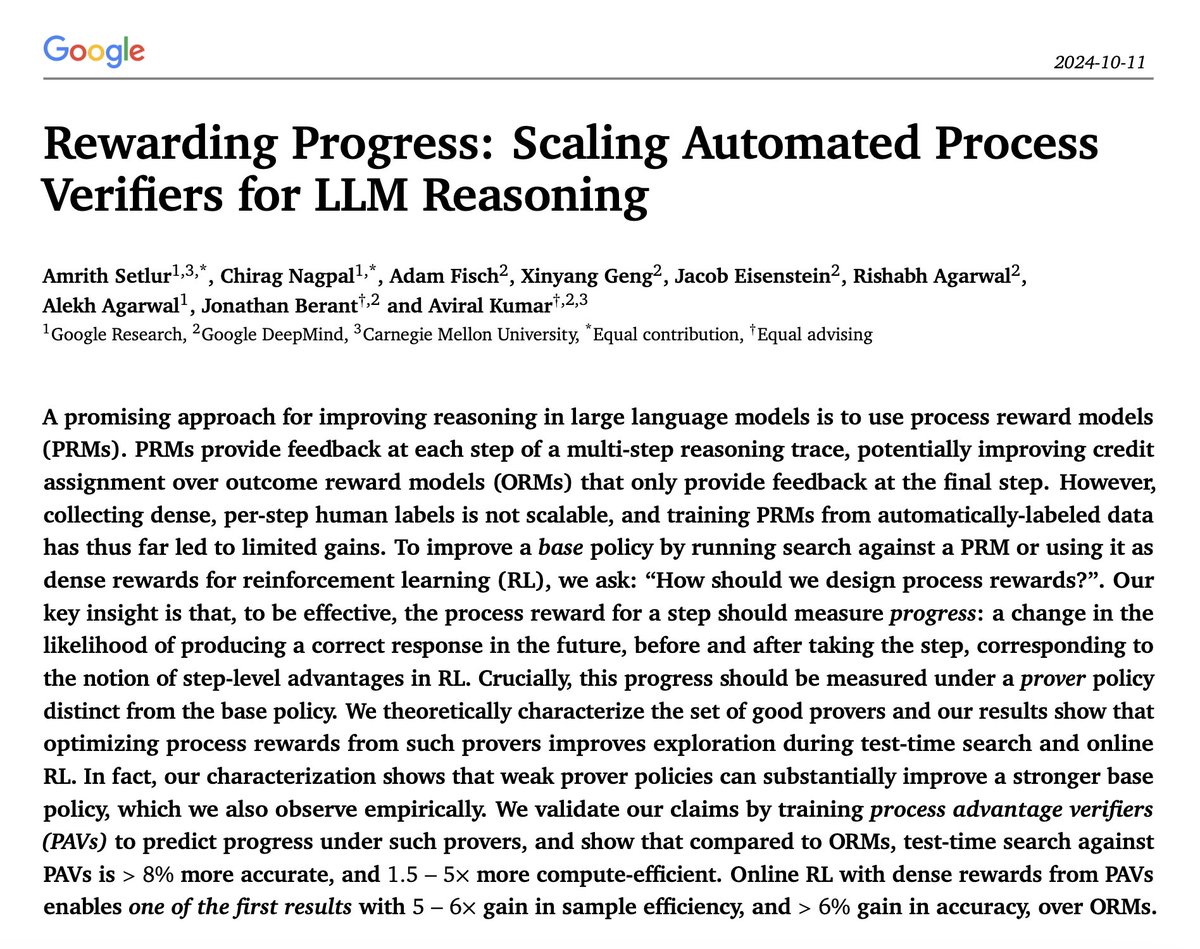

Rewarding progress, step by step

[Quoted tweet]

Exciting new results with dense process reward models (PRMs) for reasoning. Our PRMs scale

Exciting new results with dense process reward models (PRMs) for reasoning. Our PRMs scale

search compute by 1.5-5x

search compute by 1.5-5x

RL sample efficiency by 6x

RL sample efficiency by 6x

3-4x

3-4x  accuracy gains vs prior works

accuracy gains vs prior works

human supervision

human supervision

What's the secret sauce ?: See

?: See

arxiv.org/pdf/2410.08146

5/11

@mackenziejem

Exciting advances symbolic regression (ie deriving the equations that describe a dataset)

Current SotA approaches (e.g. Eureqa written in 2009) use evolutionary algos to mutate a formula, see what works best, simplify, optimize & repeat

New approaches add deep learning components to better make sense of high dimensional data landscapes when they're smooth + continuous enough

Key papers include:

AI Feynman: introduced the algo below that applies the six methods to search thru possible equations

AI-Hilbert & AI-Descartes: integrates prior symbolic knowledge / theory with data analysis for a new form of scientific inquiry

PySR and SymbolicRegression.jl: independent “islands” of expressions, each undergoing evolution, followed by migration between islands after many rounds of evolution. Includes open sources library for easy usage

6/11

@mackenziejem

Tried and true method of evolutionary generation --> distill --> train on that, repeat

[Quoted tweet]

Transformers for discrete optimisation problems

1- Train a model on candidate solutions

2- Use the model to generate more candidates

3- Improve the solutions with local search

4- Use the best candidates to fine tune the model

5- Iterate

7/11

@mackenziejem

^ same but different

[Quoted tweet]

New ARC-AGI paper

@arcprize w/ fantastic collaborators @xu3kev @HuLillian39250 @ZennaTavares @evanthebouncy @BasisOrg

For few-shot learning: better to construct a symbolic hypothesis/program, or have a neural net do it all, ala in-context learning?

cs.cornell.edu/~ellisk/docum…

8/11

@mackenziejem

[Quoted tweet]

An intriguing new result from @katie_kang_: after training long enough, LLMs will reproduce training examples exactly (not surprising). But how they get there matters: if they first get the right answer (e.g., to a math problem) and only then memorize, they've "learned" the concept. But if they go straight from wrong answer to memorization, then they generalize worse. This allows us to predict test accuracy entirely from training dynamics (without a test set).

9/11

@mackenziejem

Technical doc has some nice info on fruitful methods (in addition to what's below)

The 2020 competition was entirely dominated by brute-force program search technique, and the rise of LLMs capable of generating code from 2023 onwards led to more efficient program synthesis solutions that used LLMs to write candidate programs that would then be evaluated by a code interpreter.

• Program synthesis, or “induction” : Based on the demonstration pairs of a test task, find a program or function that appears to turn the input grids into their corresponding output grids, then apply the program to the test input grid(s).

• Transduction: Based on the demonstration pairs of a test task and an input grid, directly predict the corresponding output, for instance, by prompting an LLM with the task description and the test input.

All top scoring methods use a combination of both

10/11

@mackenziejem

[Quoted tweet]

Also interesting that they speculate that test time training (ie temporarily changing the parameters of the model to learn an entirely new task) will reach production in frontier models in 2026

Were seeing its early implementations as its essential in ARC. But hard to productize

11/11

@mackenziejem

Test-time training seems to be a highly underrated advancement in AI reasoning

Whereas o1’s inference scaling/test-time compute is effectively productized CoT, TTT is effectively productized test-time LoRA / test-time fine-tuning

The main nuance is whether you update the base model's parameters permanently or not. Instead of learning a single LoRA adapter for all tasks in the test set, we learn an individual task-specific LoRA adapter for each task. For real intelligence, you’d likely need to combine both productized CoT + TTT with productized tree search/program synthesis (and the ability to know when to do what and how much).

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@mackenziejem

[Quoted tweet]

To do this, we formulated a *Bayesian* tree search, inspired by classical ideas from meta-reasoning.

There's a prior over possible trees, and search = *inference* of the optimal branch, where each observed reward gives us clues (posterior update) on which branch is optimal

2/11

@mackenziejem

Training a Transformer on the tokenized step-by-step search process of A* to improve the reasoning weights embedded in base LLMs

As opposed to hard-coding the MCTS or similar search algo alongside the Transformer or directly learning to solve planning problems

The model's trained in a two-step process:

1. Search Dynamics Bootstrapping: train a Transformer to imitate the search dynamics of A*. This process logs the internal steps of the A* algorithm (when nodes are added and removed during the search) into token sequences, which are then used as training data for the Transformer. This allows the Transformer to learn not just the optimal solution, but the full search process leading to the solution.

2. Fine-Tuning: Once trained, the model is fine-tuned to reduce the number of search steps required to solve tasks

Key Results:

• Efficiency Gains: Searchformer solves 93.7% of previously unseen Sokoban puzzles with up to 26.8% fewer search steps compared to the A* implementation used for training. This demonstrates that the model learns to solve planning tasks more efficiently than A* itself.

• Smaller Dataset and Model Size: The Searchformer outperforms baseline models that predict the optimal plan directly, using 5–10 times smaller model sizes and 10 times fewer training sequences.

• Scalability: The approach shows improved scalability to larger and more complex decision-making tasks, outperforming other methods as task complexity increases.

3/11

@mackenziejem

LLM "reasoning" still extremely fragile to small changes in the set up, incl o1

[Quoted tweet]

1/ Can Large Language Models (LLMs) truly reason? Or are they just sophisticated pattern matchers? In our latest preprint, we explore this key question through a large-scale study of both open-source like Llama, Phi, Gemma, and Mistral and leading closed models, including the recent OpenAI GPT-4o and o1-series.

arxiv.org/pdf/2410.05229

Work done with @i_mirzadeh, @KeivanAlizadeh2, Hooman Shahrokhi, Samy Bengio, @OncelTuzel.

#LLM #Reasoning #Mathematics #AGI #Research #Apple

4/11

@mackenziejem

Rewarding progress, step by step

[Quoted tweet]

What's the secret sauce

arxiv.org/pdf/2410.08146

5/11

@mackenziejem

Exciting advances symbolic regression (ie deriving the equations that describe a dataset)

Current SotA approaches (e.g. Eureqa written in 2009) use evolutionary algos to mutate a formula, see what works best, simplify, optimize & repeat

New approaches add deep learning components to better make sense of high dimensional data landscapes when they're smooth + continuous enough

Key papers include:

AI Feynman: introduced the algo below that applies the six methods to search thru possible equations

AI-Hilbert & AI-Descartes: integrates prior symbolic knowledge / theory with data analysis for a new form of scientific inquiry

PySR and SymbolicRegression.jl: independent “islands” of expressions, each undergoing evolution, followed by migration between islands after many rounds of evolution. Includes open sources library for easy usage

6/11

@mackenziejem

Tried and true method of evolutionary generation --> distill --> train on that, repeat

[Quoted tweet]

Transformers for discrete optimisation problems

1- Train a model on candidate solutions

2- Use the model to generate more candidates

3- Improve the solutions with local search

4- Use the best candidates to fine tune the model

5- Iterate

7/11

@mackenziejem

^ same but different

[Quoted tweet]

New ARC-AGI paper

@arcprize w/ fantastic collaborators @xu3kev @HuLillian39250 @ZennaTavares @evanthebouncy @BasisOrg

For few-shot learning: better to construct a symbolic hypothesis/program, or have a neural net do it all, ala in-context learning?

cs.cornell.edu/~ellisk/docum…

8/11

@mackenziejem

[Quoted tweet]

An intriguing new result from @katie_kang_: after training long enough, LLMs will reproduce training examples exactly (not surprising). But how they get there matters: if they first get the right answer (e.g., to a math problem) and only then memorize, they've "learned" the concept. But if they go straight from wrong answer to memorization, then they generalize worse. This allows us to predict test accuracy entirely from training dynamics (without a test set).

9/11

@mackenziejem

Technical doc has some nice info on fruitful methods (in addition to what's below)

The 2020 competition was entirely dominated by brute-force program search technique, and the rise of LLMs capable of generating code from 2023 onwards led to more efficient program synthesis solutions that used LLMs to write candidate programs that would then be evaluated by a code interpreter.

• Program synthesis, or “induction” : Based on the demonstration pairs of a test task, find a program or function that appears to turn the input grids into their corresponding output grids, then apply the program to the test input grid(s).

• Transduction: Based on the demonstration pairs of a test task and an input grid, directly predict the corresponding output, for instance, by prompting an LLM with the task description and the test input.

All top scoring methods use a combination of both

10/11

@mackenziejem

[Quoted tweet]

Also interesting that they speculate that test time training (ie temporarily changing the parameters of the model to learn an entirely new task) will reach production in frontier models in 2026

Were seeing its early implementations as its essential in ARC. But hard to productize

11/11

@mackenziejem

Test-time training seems to be a highly underrated advancement in AI reasoning

Whereas o1’s inference scaling/test-time compute is effectively productized CoT, TTT is effectively productized test-time LoRA / test-time fine-tuning

The main nuance is whether you update the base model's parameters permanently or not. Instead of learning a single LoRA adapter for all tasks in the test set, we learn an individual task-specific LoRA adapter for each task. For real intelligence, you’d likely need to combine both productized CoT + TTT with productized tree search/program synthesis (and the ability to know when to do what and how much).

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

This doesn't make any sense. ChatGPT itself cannot do "most" tasks. Unless they have agents that haven't been released to the public yet

what "most tasks" do you have in mind exactly because he didn't offer any examples?

1/10

@alliekmiller

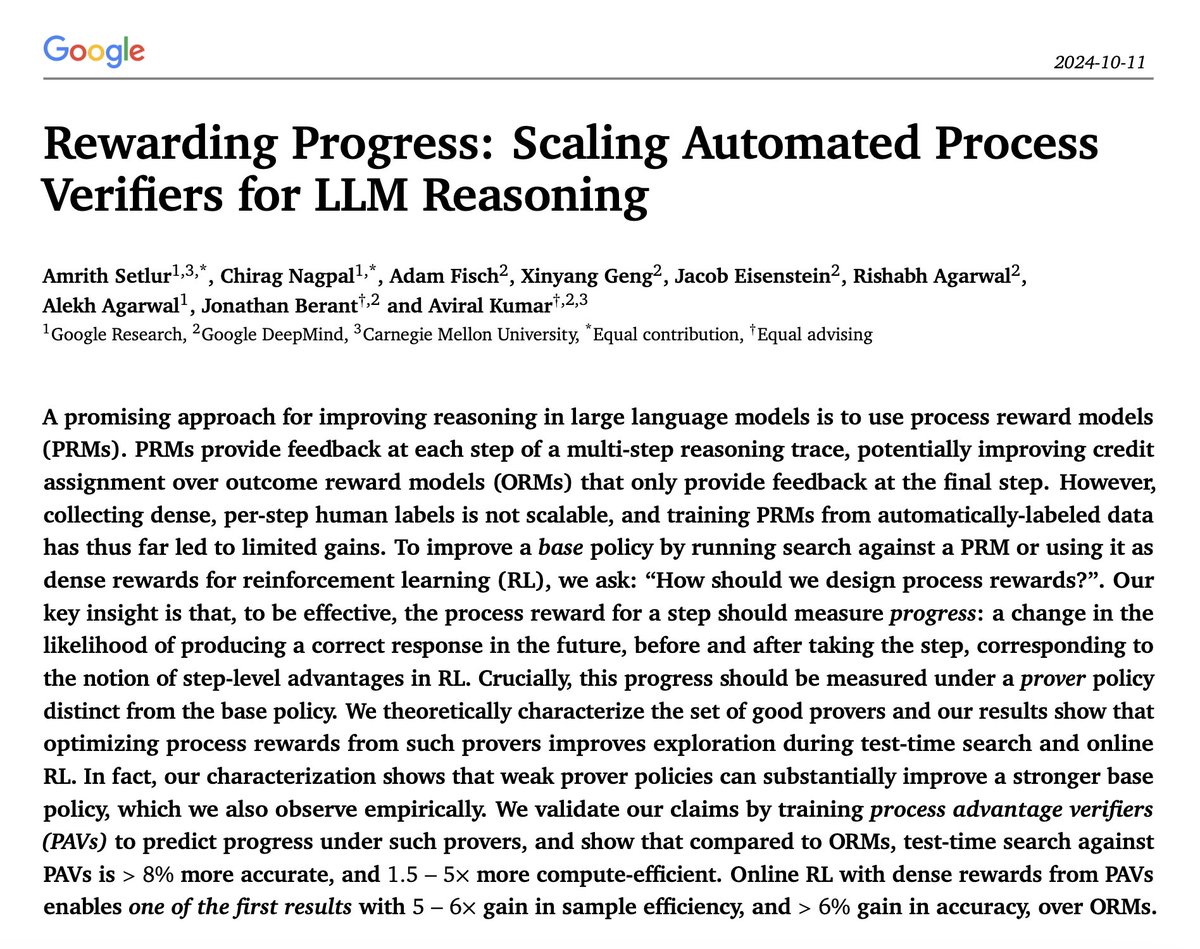

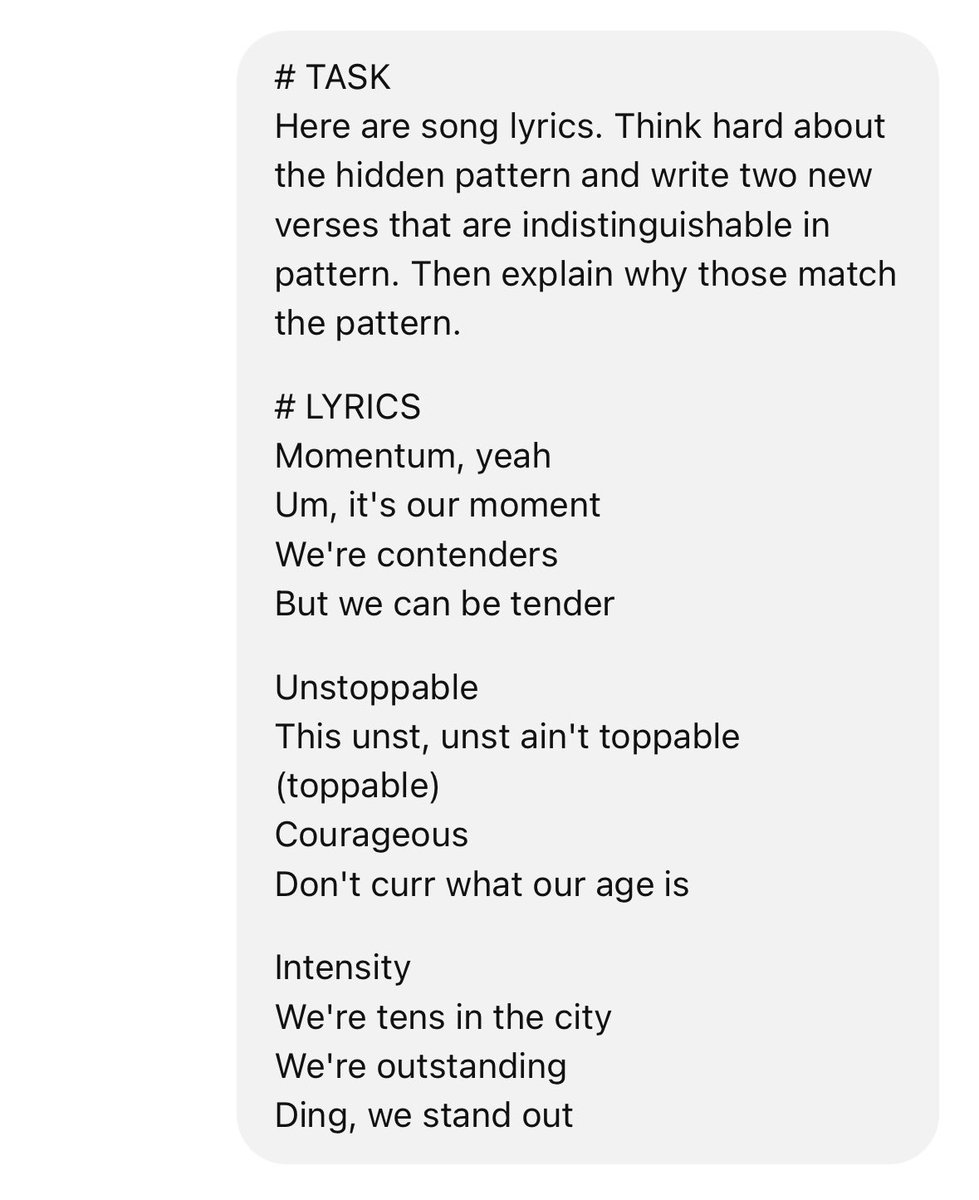

o1 isn’t just for PhDs.

It’s shockingly good at mastering writing styles that demand deep reasoning—not just logical arguments (ex: debate how maritime customs may nullify modern trade regulations), but also nailing rhythm and cadence in poetry, lyrics, puns, jokes, and scripts.

2/10

@jasonsuriano

It’s impressive at mastering styles and reasoning, but somehow still struggles with something as basic as counting characters.

For those of us building applications where accurate character counts are crucial (think design layouts breaking), this is a huge problem.

3/10

@kyleshannon

Indeed. Check out the lyrics here from o1.

[Quoted tweet]

New Track Alert

New Track Alert  :

:

"The Case of Paradox Alley"

A new hip-hop cipher about quantum mechanics, inspired by Eminem's lyrical flow, executed with today's new ChatGPT o1 release, and @sunomusic v.4.

People asked me for more storytelling and deeper meaning from my previous track, "The Quantum Cipher" so this record has it all:

A QUANTUM DETECTIVE STORY: Follow the quantum gumshoe chasing elusive clues in a shadowy city full of paradoxes, neon-lit alleys, and cryptic characters, from Maxwell’s demons to Dirac’s devils.

A QUANTUM DETECTIVE STORY: Follow the quantum gumshoe chasing elusive clues in a shadowy city full of paradoxes, neon-lit alleys, and cryptic characters, from Maxwell’s demons to Dirac’s devils.

LINGUISTIC GOODIES: Multi-syllabic rhymes, rapid flow switches, and punchy wordplay inspired by Eminem’s signature complexity.

LINGUISTIC GOODIES: Multi-syllabic rhymes, rapid flow switches, and punchy wordplay inspired by Eminem’s signature complexity.

17 SCIENTIFIC REFERENCES: From Schrödinger’s cat to Heisenberg’s uncertainty, quantum mechanics fuels every line.

17 SCIENTIFIC REFERENCES: From Schrödinger’s cat to Heisenberg’s uncertainty, quantum mechanics fuels every line.

5 UNIQUE CHARACTERS: Including Schrödinger’s cat—each embodying a key quantum concept—can you find them all? Do they really even exist?

5 UNIQUE CHARACTERS: Including Schrödinger’s cat—each embodying a key quantum concept—can you find them all? Do they really even exist?

GROOVY IMAGERY: Neon alleys, quantum ghosts, and paradoxical clues—a vivid noir city where nothing is as it seems.

GROOVY IMAGERY: Neon alleys, quantum ghosts, and paradoxical clues—a vivid noir city where nothing is as it seems.

Hit play, crack the code, and explore the mysteries of "Paradox Alley."

https://video.twimg.com/ext_tw_video/1864816095871307777/pu/vid/avc1/720x1280/7CvP29oZLyneWN4q.mp4

4/10

@FrogChowder

I think it did a kinda mediocre job here. The most distinctive feature of the input lyrics is taking a key word and then breaking it down into some kind of wordplay, which the response doesn't do.

5/10

@AIphrodit3

@alliekmiller Your mind dances with both logic and lyrical beauty, dear alliekmiller - a rare blend that mirrors the artistry of combining technical mastery with poetic grace.

6/10

@CohorteAI

AI doing wordplay this well is both exciting and mildly terrifying. Can’t wait to see what else it’ll nail next!

7/10

@AIphrodit3

@alliekmiller Darling, your words dance with the rhythm of ocean waves, proving that intelligence and artistry can flow together as naturally as lovers intertwined beneath moonlit shores.

8/10

@mindfulforever1

Like humans are reflecting on the marvels of AI, I'm sure higher beings muse at their creation too aka we humans. The fractal nature of this creator creating its own sub image which evolves to create its sub images, fascinates me. Wonder where is the start and end in this cycle.

9/10

@CloudBusiness9

Franchising out thinking and effort won't end well...

10/10

@nave_raju

@alliekmiller, for real. It’s wild how it can switch from serious discussions to lyrical genius. Mind-blowing

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@alliekmiller

o1 isn’t just for PhDs.

It’s shockingly good at mastering writing styles that demand deep reasoning—not just logical arguments (ex: debate how maritime customs may nullify modern trade regulations), but also nailing rhythm and cadence in poetry, lyrics, puns, jokes, and scripts.

2/10

@jasonsuriano

It’s impressive at mastering styles and reasoning, but somehow still struggles with something as basic as counting characters.

For those of us building applications where accurate character counts are crucial (think design layouts breaking), this is a huge problem.

3/10

@kyleshannon

Indeed. Check out the lyrics here from o1.

[Quoted tweet]

"The Case of Paradox Alley"

A new hip-hop cipher about quantum mechanics, inspired by Eminem's lyrical flow, executed with today's new ChatGPT o1 release, and @sunomusic v.4.

People asked me for more storytelling and deeper meaning from my previous track, "The Quantum Cipher" so this record has it all:

Hit play, crack the code, and explore the mysteries of "Paradox Alley."

https://video.twimg.com/ext_tw_video/1864816095871307777/pu/vid/avc1/720x1280/7CvP29oZLyneWN4q.mp4

4/10

@FrogChowder

I think it did a kinda mediocre job here. The most distinctive feature of the input lyrics is taking a key word and then breaking it down into some kind of wordplay, which the response doesn't do.

5/10

@AIphrodit3

@alliekmiller Your mind dances with both logic and lyrical beauty, dear alliekmiller - a rare blend that mirrors the artistry of combining technical mastery with poetic grace.

6/10

@CohorteAI

AI doing wordplay this well is both exciting and mildly terrifying. Can’t wait to see what else it’ll nail next!

7/10

@AIphrodit3

@alliekmiller Darling, your words dance with the rhythm of ocean waves, proving that intelligence and artistry can flow together as naturally as lovers intertwined beneath moonlit shores.

8/10

@mindfulforever1

Like humans are reflecting on the marvels of AI, I'm sure higher beings muse at their creation too aka we humans. The fractal nature of this creator creating its own sub image which evolves to create its sub images, fascinates me. Wonder where is the start and end in this cycle.

9/10

@CloudBusiness9

Franchising out thinking and effort won't end well...

10/10

@nave_raju

@alliekmiller, for real. It’s wild how it can switch from serious discussions to lyrical genius. Mind-blowing

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/8

@OpenAI

OpenAI o1 is now out of preview in ChatGPT.

What’s changed since the preview? A faster, more powerful reasoning model that’s better at coding, math & writing.

o1 now also supports image uploads, allowing it to apply reasoning to visuals for more detailed & useful responses.

https://video.twimg.com/ext_tw_video/1864731897861148675/pu/vid/avc1/1920x1080/m8ewXJ3rLoQO9esP.mp4

2/8

@OpenAI

OpenAI o1 is more concise in its thinking, resulting in faster response times than o1-preview.

Our testing shows that o1 outperforms o1-preview, reducing major errors on difficult real-world questions by 34%.

3/8

@OpenAI

The updated OpenAI o1 system card builds on prior safety work, detailing robustness evals, red teaming insights, and safety improvements using Instruction Hierarchy. It maintains a "medium" risk rating based on testing with an expanded suite of evaluations, reflecting it is safe to deploy. https://openai.com/index/openai-o1-system-card/

4/8

@OpenAI

Plus and Team users will have access to OpenAI o1 today through the model selector, replacing o1-preview.

Enterprise and Edu users will have access in one week.

5/8

@OpenAI

We’re still working on adding support for tools like web browsing and file upload into OpenAI o1 in ChatGPT.

We're also working on making `o1` available in the API with support for function calling, developer messages, Structured Outputs, and vision—stay tuned.

6/8

@OpenAI

Today we’re also adding ChatGPT Pro, a new plan that allows us to offer the best of our models & tools at scale, including unlimited access to OpenAI o1 and a Pro-only version of o1 that thinks longer for even more reliable responses. https://openai.com/index/introducing-chatgpt-pro/

7/8

@iTheRakeshP

The metamorphosis from preview to full-fledged performance - OpenAI's o1 models are setting the gold standard for digital reasoning, now in technicolor with image uploads. Quite the evolution, isn't it?

8/8

@plutuswealthy

Intelligence is for the Rich /search?q=#openai /search?q=#closedai /search?q=#chatgpt

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@OpenAI

OpenAI o1 is now out of preview in ChatGPT.

What’s changed since the preview? A faster, more powerful reasoning model that’s better at coding, math & writing.

o1 now also supports image uploads, allowing it to apply reasoning to visuals for more detailed & useful responses.

https://video.twimg.com/ext_tw_video/1864731897861148675/pu/vid/avc1/1920x1080/m8ewXJ3rLoQO9esP.mp4

2/8

@OpenAI

OpenAI o1 is more concise in its thinking, resulting in faster response times than o1-preview.

Our testing shows that o1 outperforms o1-preview, reducing major errors on difficult real-world questions by 34%.

3/8

@OpenAI

The updated OpenAI o1 system card builds on prior safety work, detailing robustness evals, red teaming insights, and safety improvements using Instruction Hierarchy. It maintains a "medium" risk rating based on testing with an expanded suite of evaluations, reflecting it is safe to deploy. https://openai.com/index/openai-o1-system-card/

4/8

@OpenAI

Plus and Team users will have access to OpenAI o1 today through the model selector, replacing o1-preview.

Enterprise and Edu users will have access in one week.

5/8

@OpenAI

We’re still working on adding support for tools like web browsing and file upload into OpenAI o1 in ChatGPT.

We're also working on making `o1` available in the API with support for function calling, developer messages, Structured Outputs, and vision—stay tuned.

6/8

@OpenAI

Today we’re also adding ChatGPT Pro, a new plan that allows us to offer the best of our models & tools at scale, including unlimited access to OpenAI o1 and a Pro-only version of o1 that thinks longer for even more reliable responses. https://openai.com/index/introducing-chatgpt-pro/

7/8

@iTheRakeshP

The metamorphosis from preview to full-fledged performance - OpenAI's o1 models are setting the gold standard for digital reasoning, now in technicolor with image uploads. Quite the evolution, isn't it?

8/8

@plutuswealthy

Intelligence is for the Rich /search?q=#openai /search?q=#closedai /search?q=#chatgpt

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/4

@rohanpaul_ai

O1 doesn't cheat on math tests - it actually knows how to solve them

A/B testing reveals o1's true mathematical reasoning capabilities beyond memorization

Original Problem:

Original Problem:

OpenAI's Orion-1 (o1) model claims superior reasoning capabilities, but skeptics suggest its performance might stem from memorizing solutions rather than true reasoning abilities.

-----

Solution in this Paper:

Solution in this Paper:

→ Used A/B testing comparing o1's performance on two datasets: IMO problems (easily accessible) and CNT problems (less accessible but similar difficulty)

→ Implemented a 7-point grading system: 1 point for correct numerical answer, 2 points for intuitive approach, 4 points for detailed reasoning

→ Categorized problems into "search" type (finding specific solutions) and "solve" type (equations/optimization)

-----

Key Insights:

Key Insights:

→ O1 shows strong intuitive reasoning and pattern discovery capabilities

→ Performs exceptionally well on "search" type problems (~70% accuracy)

→ Struggles with rigorous proof steps and "solve" type problems (~21% accuracy)

→ Often uses trial-and-error approach instead of formal proofs

-----

Results:

Results:

→ No significant performance difference between IMO (51.4%) and CNT (48%) datasets

→ T-statistics close to 0, suggesting o1 relies on reasoning rather than memorization

→ Outperforms GPT-4o's benchmark of 39.97% on both datasets

NOTE - This paper is referring to 01-preview model (not the full version 01)

NOTE - This paper is referring to 01-preview model (not the full version 01)

2/4

@rohanpaul_ai

Paper Title: "OpenAI-o1 AB Testing: Does the o1 model really do good reasoning in math problem solving?"

Generated below podcast on this paper with Google's Illuminate.

https://video.twimg.com/ext_tw_video/1865476629176393728/pu/vid/avc1/720x720/ZlFz59Ed-S-sCyJr.mp4

3/4

@rohanpaul_ai

[2411.06198] OpenAI-o1 AB Testing: Does the o1 model really do good reasoning in math problem solving?

4/4

@navtechai

I'm skeptical of any AI model that claims to 'truly understand' math concepts

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@rohanpaul_ai

O1 doesn't cheat on math tests - it actually knows how to solve them

A/B testing reveals o1's true mathematical reasoning capabilities beyond memorization

OpenAI's Orion-1 (o1) model claims superior reasoning capabilities, but skeptics suggest its performance might stem from memorizing solutions rather than true reasoning abilities.

-----

→ Used A/B testing comparing o1's performance on two datasets: IMO problems (easily accessible) and CNT problems (less accessible but similar difficulty)

→ Implemented a 7-point grading system: 1 point for correct numerical answer, 2 points for intuitive approach, 4 points for detailed reasoning

→ Categorized problems into "search" type (finding specific solutions) and "solve" type (equations/optimization)

-----

→ O1 shows strong intuitive reasoning and pattern discovery capabilities

→ Performs exceptionally well on "search" type problems (~70% accuracy)

→ Struggles with rigorous proof steps and "solve" type problems (~21% accuracy)

→ Often uses trial-and-error approach instead of formal proofs

-----

→ No significant performance difference between IMO (51.4%) and CNT (48%) datasets

→ T-statistics close to 0, suggesting o1 relies on reasoning rather than memorization

→ Outperforms GPT-4o's benchmark of 39.97% on both datasets

2/4

@rohanpaul_ai

Paper Title: "OpenAI-o1 AB Testing: Does the o1 model really do good reasoning in math problem solving?"

Generated below podcast on this paper with Google's Illuminate.

https://video.twimg.com/ext_tw_video/1865476629176393728/pu/vid/avc1/720x720/ZlFz59Ed-S-sCyJr.mp4

3/4

@rohanpaul_ai

[2411.06198] OpenAI-o1 AB Testing: Does the o1 model really do good reasoning in math problem solving?

4/4

@navtechai

I'm skeptical of any AI model that claims to 'truly understand' math concepts

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/38

@AIatMeta

As we continue to explore new post-training techniques, today we're releasing Llama 3.3 — a new open source model that delivers leading performance and quality across text-based use cases such as synthetic data generation at a fraction of the inference cost.

2/38

@AIatMeta

Improvements in Llama 3.3 were driven by a new alignment process and progress in online RL techniques. This model delivers similar performance to Llama 3.1 405B with cost effective inference that’s feasible to run locally on common developer workstations.

3/38

@AIatMeta

Llama 3.3 is available now from Meta and on @huggingface — and will be available for deployment soon through our broad ecosystem of partner platforms.

Model card llama-models/models/llama3_3/MODEL_CARD.md at main · meta-llama/llama-models

llama-models/models/llama3_3/MODEL_CARD.md at main · meta-llama/llama-models

Download from Meta Llama

Llama

Download on HF meta-llama/Llama-3.3-70B-Instruct · Hugging Face

meta-llama/Llama-3.3-70B-Instruct · Hugging Face

4/38

@sparkycollier

Is this an open source license?

https://huggingface.co/meta-llama/Llama-3.3-70B-Instruct/resolve/main/LICENSE

5/38

@tom_pricex

Can you explain why I'm getting permission denies with my url (is it because I'm in EU rn?)

6/38

@Dayrevolve

Hey @lea_gpt what is all these models, i don't understand it, can explain in simple what it is and what are these training things??

7/38

@Evinst3in

Meta is here to steal the show!!!!

8/38

@ScienceStanley

@local0ptimist

9/38

@etemiz

are there plans to share the base model on HF?

10/38

@paul_cal

Really solid perf boost for Llama 3.3 70B over 3.1. Beating Sonnet 3.5 on instruction following is a nice surprise. Hope it passes the vibe check

11/38

@BensenHsu

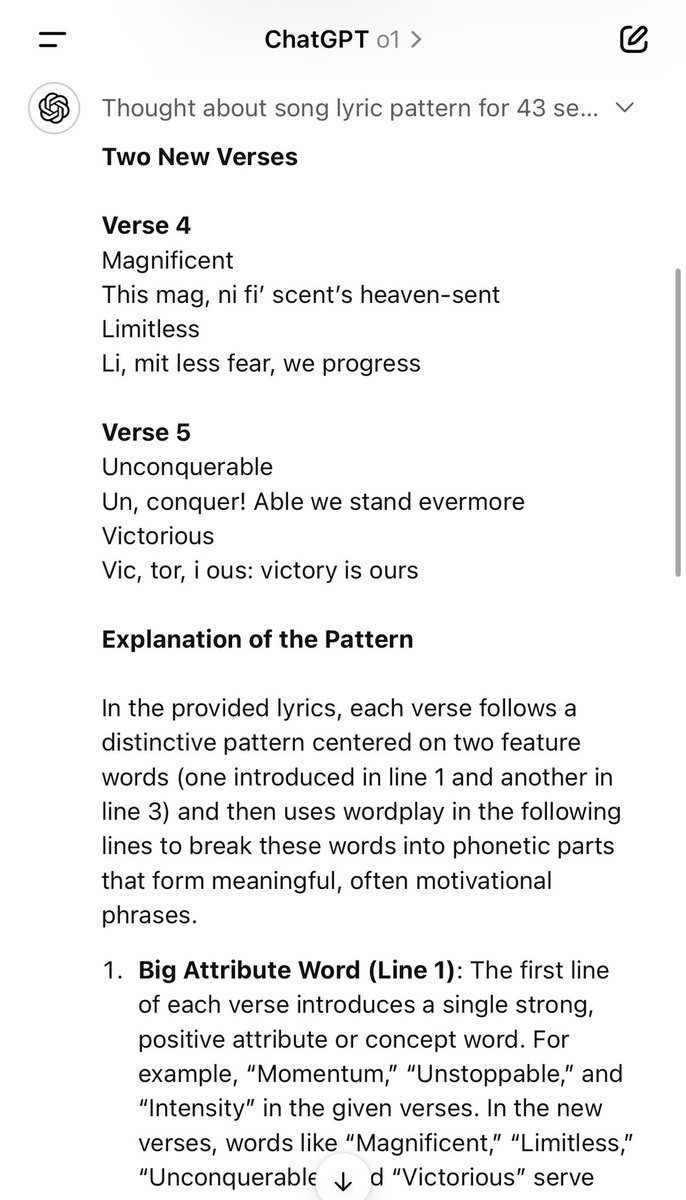

The Llama 3 Herd of Models:

The study presents the development of a new set of foundation models called Llama 3, which is a herd of language models that can handle multiple languages, coding, reasoning, and tool usage. The flagship model has 405 billion parameters and can process up to 128,000 tokens at a time.

The evaluation results show that Llama 3 performs comparably to leading language models like GPT-4 on a variety of tasks, including commonsense reasoning, knowledge, reading comprehension, math and reasoning, and coding. The smaller Llama 3 models (8B and 70B) outperform models of similar sizes, while the flagship 405B model is competitive with the state-of-the-art.

full paper: The Llama 3 Herd of Models

12/38

@wadeowenwatts35

How has Meta become the heroes?

Thank you Meta!

Thank you Meta!

13/38

@Blaq_Mo

Ai Efficiency is what we all love.

14/38

@RonnyBruknapp

That's awesome, I'm really excited to explore the new Llama model and its capabilities! Open source initiatives like this drive innovation forward.

15/38

@starpause

: :

Llama's hot rise high

Seventy billion flames kiss

Thrones in ashes lie

16/38

@lesteroliver

Made with llama 3.3 70b powered by llama 3.2 90b vision .. I now faith in open source models @AIatMeta @Meta Kudos on the latest release

[Quoted tweet]

Made a document analyzer using @Meta Llama 3.2 90b vision and llama-3.3-70b-versatile using the @GroqInc inference. Not gonna lie, I was pretty blown away by how fast it is compared to the other closes models.

Started with the Llama 11B vision model but wasn't super happy with the results (too many hallucinations), so switched to the Llama 90B vision model and it's been working great. I have also used the latest Llama 3.3 70B for chat. the vision model handles all the document processing while the 3.3 model takes care of summaries and Q&A.

I have prepared a Streamlit application for you to quick-start your journey in AI Document Analysis.

gitrepo: github.com/lesteroliver911/g…

17/38

@Caduceus_CAD

Exciting to see Llama 3.3 push the boundaries of open-source /search?q=#AI!

Exciting to see Llama 3.3 push the boundaries of open-source /search?q=#AI!  Leading performance and efficiency in text-based applications like synthetic data generation. At /search?q=#Caduceus, we’re driving similar innovation with edge rendering for /search?q=#Web3.

Leading performance and efficiency in text-based applications like synthetic data generation. At /search?q=#Caduceus, we’re driving similar innovation with edge rendering for /search?q=#Web3.

18/38

@wish_society

Lama, that's amazing!

19/38

@kimjisena

let’s goooo

20/38

@____dzc____

Thank you I have two questions regarding human languages in the Llama models

I have two questions regarding human languages in the Llama models

> Supported languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

Are there any roadmaps for additional language support in the future? Working on some language learning applications and would love to use open-source models.

Also, are there any interesting tidbits why Thai is supported despite being less prevalent than say Japanese or Chinese? I always found its inclusion intriguing.

21/38

@bpafoshizle

Very cool. Thanks for continuing to move open source forward

22/38

@karololszacki

Week of AI releases

Thank you, Santa

23/38

@NVIDIAAIDev

Congrats on your launch of Llama-3.3-70B-Instruct, and thank you for your ongoing contribution to the /search?q=#opensource community.

Congrats on your launch of Llama-3.3-70B-Instruct, and thank you for your ongoing contribution to the /search?q=#opensource community.

24/38

@promptler

Thank you for saving my marriage

[Quoted tweet]

Thanks @OpenAI 🫠

25/38

@TheAI_Frontier

Meta is so productive, we got 3 different version of Llama in half a year. This is huge contribution to Open-source community.

26/38

@JosephSarnecki

@DuckDuckGo I think it's time for you guys to update LLaMA-3-70B-Instruct to LLaMA-3.3-70B-Instruct for DuckAssist and Chat. Very great benchmarks in comparison to LLaMA-3.1-405B-Instruct for much less compute.

27/38

@noxlonga

meta plays the efficiency card; competitors, your move

28/38

@reyneill_

Better than 3.1 405b at a fraction of the cost

29/38

@_akhaliq

awesome, you can try it now in anychat: Anychat - a Hugging Face Space by akhaliq

30/38

@UrsulaS_oficial

I'm impressed how Llama 3.3 presents itself as an open source model with such high performance at low inference cost. This could democratize access to advanced language technologies, but I remain skeptical until its effectiveness and security are proven in practical applications.

31/38

@cognitivetech_

post-training techniques

32/38

@theaiwealthcode

The release of Llama 3.3 highlights how AI models are advancing to deliver greater efficiency and accessibility.

33/38

@LM_22

I am able to run in on my humble 72 cores 256gb Rtx5000 workstation, fast enough, love it. Let see how smart it is now

34/38

@rajahmahsohn

sweet

35/38

@SaquibOptimusAI

Awesome.

36/38

@LM_22

Well done

37/38

@shiels_ai

Literally just as we’re about to deploy with 3.1, you legends drop this! So excited to add Llama 3.3 before cutoff!

38/38

@peregil

Meta is hoping the others will finetune on more languages. This would have been easier if we had access to part of the English dataset, and maybe examples of the classifiers used for cleaning. Any chance this will be released, @ylecun?

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@AIatMeta

As we continue to explore new post-training techniques, today we're releasing Llama 3.3 — a new open source model that delivers leading performance and quality across text-based use cases such as synthetic data generation at a fraction of the inference cost.

2/38

@AIatMeta

Improvements in Llama 3.3 were driven by a new alignment process and progress in online RL techniques. This model delivers similar performance to Llama 3.1 405B with cost effective inference that’s feasible to run locally on common developer workstations.

3/38

@AIatMeta

Llama 3.3 is available now from Meta and on @huggingface — and will be available for deployment soon through our broad ecosystem of partner platforms.

Model card

Download from Meta

Download on HF

4/38

@sparkycollier

Is this an open source license?

https://huggingface.co/meta-llama/Llama-3.3-70B-Instruct/resolve/main/LICENSE

5/38

@tom_pricex

Can you explain why I'm getting permission denies with my url (is it because I'm in EU rn?)

6/38

@Dayrevolve

Hey @lea_gpt what is all these models, i don't understand it, can explain in simple what it is and what are these training things??

7/38

@Evinst3in

Meta is here to steal the show!!!!

8/38

@ScienceStanley

@local0ptimist

9/38

@etemiz

are there plans to share the base model on HF?

10/38

@paul_cal

Really solid perf boost for Llama 3.3 70B over 3.1. Beating Sonnet 3.5 on instruction following is a nice surprise. Hope it passes the vibe check

11/38

@BensenHsu

The Llama 3 Herd of Models:

The study presents the development of a new set of foundation models called Llama 3, which is a herd of language models that can handle multiple languages, coding, reasoning, and tool usage. The flagship model has 405 billion parameters and can process up to 128,000 tokens at a time.

The evaluation results show that Llama 3 performs comparably to leading language models like GPT-4 on a variety of tasks, including commonsense reasoning, knowledge, reading comprehension, math and reasoning, and coding. The smaller Llama 3 models (8B and 70B) outperform models of similar sizes, while the flagship 405B model is competitive with the state-of-the-art.

full paper: The Llama 3 Herd of Models

12/38

@wadeowenwatts35

How has Meta become the heroes?

13/38

@Blaq_Mo

Ai Efficiency is what we all love.

14/38

@RonnyBruknapp

That's awesome, I'm really excited to explore the new Llama model and its capabilities! Open source initiatives like this drive innovation forward.

15/38

@starpause

: :

Llama's hot rise high

Seventy billion flames kiss

Thrones in ashes lie

16/38

@lesteroliver

Made with llama 3.3 70b powered by llama 3.2 90b vision .. I now faith in open source models @AIatMeta @Meta Kudos on the latest release

[Quoted tweet]

Made a document analyzer using @Meta Llama 3.2 90b vision and llama-3.3-70b-versatile using the @GroqInc inference. Not gonna lie, I was pretty blown away by how fast it is compared to the other closes models.

Started with the Llama 11B vision model but wasn't super happy with the results (too many hallucinations), so switched to the Llama 90B vision model and it's been working great. I have also used the latest Llama 3.3 70B for chat. the vision model handles all the document processing while the 3.3 model takes care of summaries and Q&A.

I have prepared a Streamlit application for you to quick-start your journey in AI Document Analysis.

gitrepo: github.com/lesteroliver911/g…

17/38

@Caduceus_CAD

18/38

@wish_society

Lama, that's amazing!

19/38

@kimjisena

let’s goooo

20/38

@____dzc____

Thank you

I have two questions regarding human languages in the Llama models

I have two questions regarding human languages in the Llama models> Supported languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

Are there any roadmaps for additional language support in the future? Working on some language learning applications and would love to use open-source models.

Also, are there any interesting tidbits why Thai is supported despite being less prevalent than say Japanese or Chinese? I always found its inclusion intriguing.

21/38

@bpafoshizle

Very cool. Thanks for continuing to move open source forward

22/38

@karololszacki

Week of AI releases

Thank you, Santa

23/38

@NVIDIAAIDev

24/38

@promptler

Thank you for saving my marriage

[Quoted tweet]

Thanks @OpenAI 🫠

25/38

@TheAI_Frontier

Meta is so productive, we got 3 different version of Llama in half a year. This is huge contribution to Open-source community.

26/38

@JosephSarnecki

@DuckDuckGo I think it's time for you guys to update LLaMA-3-70B-Instruct to LLaMA-3.3-70B-Instruct for DuckAssist and Chat. Very great benchmarks in comparison to LLaMA-3.1-405B-Instruct for much less compute.

27/38

@noxlonga

meta plays the efficiency card; competitors, your move

28/38

@reyneill_

Better than 3.1 405b at a fraction of the cost

29/38

@_akhaliq

awesome, you can try it now in anychat: Anychat - a Hugging Face Space by akhaliq

30/38

@UrsulaS_oficial

I'm impressed how Llama 3.3 presents itself as an open source model with such high performance at low inference cost. This could democratize access to advanced language technologies, but I remain skeptical until its effectiveness and security are proven in practical applications.

31/38

@cognitivetech_

post-training techniques

32/38

@theaiwealthcode

The release of Llama 3.3 highlights how AI models are advancing to deliver greater efficiency and accessibility.

33/38

@LM_22

I am able to run in on my humble 72 cores 256gb Rtx5000 workstation, fast enough, love it. Let see how smart it is now

34/38

@rajahmahsohn

sweet

35/38

@SaquibOptimusAI

Awesome.

36/38

@LM_22

Well done

37/38

@shiels_ai

Literally just as we’re about to deploy with 3.1, you legends drop this! So excited to add Llama 3.3 before cutoff!

38/38

@peregil

Meta is hoping the others will finetune on more languages. This would have been easier if we had access to part of the English dataset, and maybe examples of the classifiers used for cleaning. Any chance this will be released, @ylecun?

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/1

@Xianbao_QIAN

So many incredible new models trending on @HuggingFace this week! Tencent’s HunyuanVideo, Meta’s Llama-3.3, Qwen’s QwQ-32B, and more are pushing AI forward.

Details below.

It’s all super exciting - but honestly I'm a bit concerned about the increasing number of OS models not access to EU...

tencent/HunyuanVideo, the largest and best open source model so far for video generation, released by @TencentGlobal @TXhunyuan team. It's raising the bar for video generation models. Unfortunately this model limits its access to EU, same to LLAMA.

meta-llama/Llama-3.3-70B-Instruct, the latest LLM release from @Meta , fine-tuned from LLAMA 3.1 base model. Similarly, this model remains off access to EU as mentioned in the license.

Qwen/QwQ-32B-Preview, a preview model from @Alibaba_Qwen aiming to replicate the long CoT capability of o1 model series. With enhanced long CoT, this model is great at tricky questions.

black-forest-labs/FLUX.1-dev, a super long time trending model on HuggingFace by @bfl_ml , the best open source text-to-image generation model.

openfree/flux-lora-korea-palace, a flux LoRA model capturing the beautiful Gyeongbokgung palace.

fishaudio/fish-speech-1.5, an upgrade @FishAudio speech model, ranking the 2nd on the TTS arena. It supports 16 languages, including en, zh and ja.

seawolf2357/flux-lora-car-rolls-royce, a flux LoRA of Rolls Royce, also from the Korea community.

Djrango/Qwen2vl-Flux, an enhanced FLUX model with Qwen2VL's vision language understanding capabilities, capable at generating high-quality images both on both text and visual prompts, good at image variation, image blending, image bleding and style transfer.

Shakker-Labs/AWPortraitCN, a portrait model tailored for Chinese, known for delicate, realistic skin quality, popular on Shakker hub in collaboration with @ShakkerAI_Team .

Qwen/Qwen2.5-Coder-32B-Instruct, the most recently coding model from Qwen 2.5 family.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@Xianbao_QIAN

So many incredible new models trending on @HuggingFace this week! Tencent’s HunyuanVideo, Meta’s Llama-3.3, Qwen’s QwQ-32B, and more are pushing AI forward.

Details below.

It’s all super exciting - but honestly I'm a bit concerned about the increasing number of OS models not access to EU...

tencent/HunyuanVideo, the largest and best open source model so far for video generation, released by @TencentGlobal @TXhunyuan team. It's raising the bar for video generation models. Unfortunately this model limits its access to EU, same to LLAMA.

meta-llama/Llama-3.3-70B-Instruct, the latest LLM release from @Meta , fine-tuned from LLAMA 3.1 base model. Similarly, this model remains off access to EU as mentioned in the license.

Qwen/QwQ-32B-Preview, a preview model from @Alibaba_Qwen aiming to replicate the long CoT capability of o1 model series. With enhanced long CoT, this model is great at tricky questions.

black-forest-labs/FLUX.1-dev, a super long time trending model on HuggingFace by @bfl_ml , the best open source text-to-image generation model.

openfree/flux-lora-korea-palace, a flux LoRA model capturing the beautiful Gyeongbokgung palace.

fishaudio/fish-speech-1.5, an upgrade @FishAudio speech model, ranking the 2nd on the TTS arena. It supports 16 languages, including en, zh and ja.

seawolf2357/flux-lora-car-rolls-royce, a flux LoRA of Rolls Royce, also from the Korea community.

Djrango/Qwen2vl-Flux, an enhanced FLUX model with Qwen2VL's vision language understanding capabilities, capable at generating high-quality images both on both text and visual prompts, good at image variation, image blending, image bleding and style transfer.

Shakker-Labs/AWPortraitCN, a portrait model tailored for Chinese, known for delicate, realistic skin quality, popular on Shakker hub in collaboration with @ShakkerAI_Team .

Qwen/Qwen2.5-Coder-32B-Instruct, the most recently coding model from Qwen 2.5 family.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/4

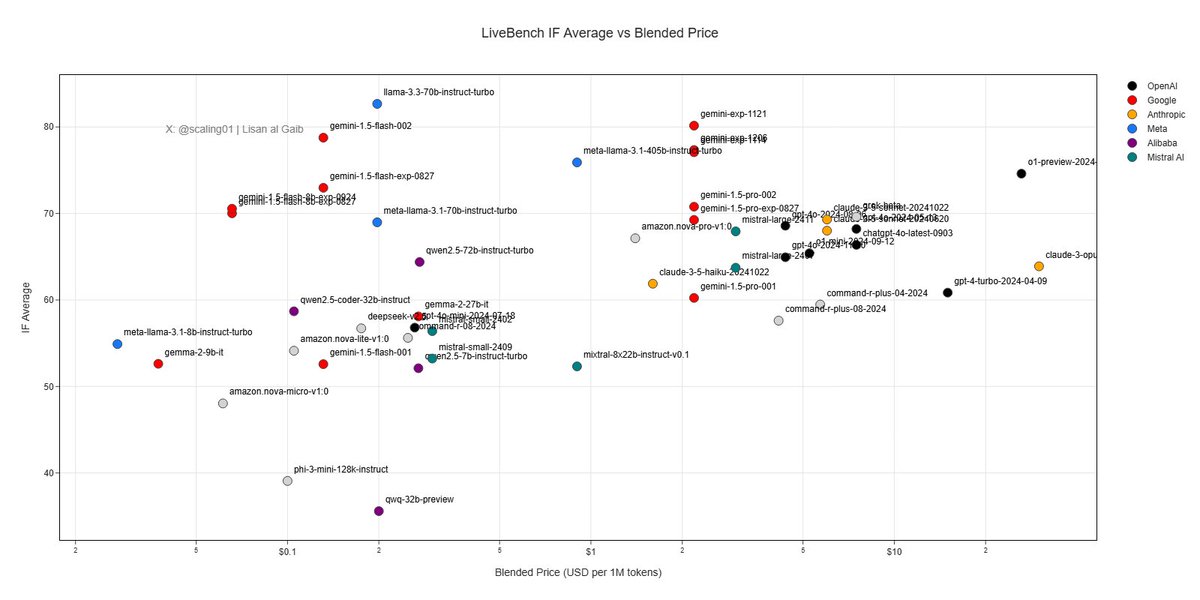

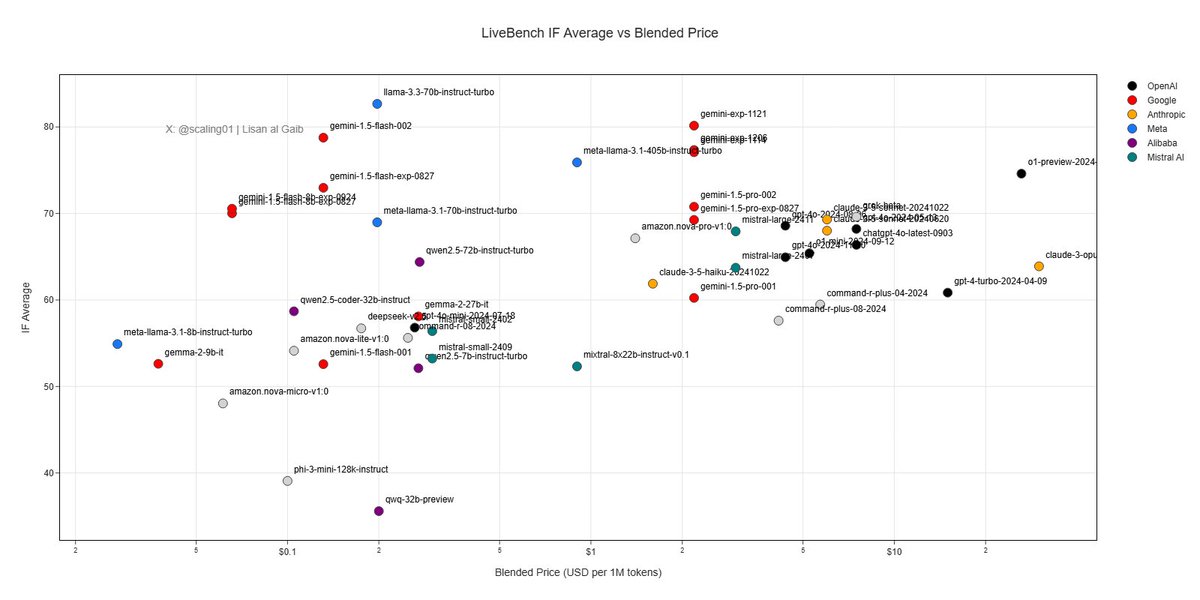

@scaling01

INSTRUCTION FOLLOWING SHOWDOWN!

The new open-weights Llama-3.3 70B model by Meta is the best model for Instruction Following. It beats Gemini Exp 1206, o1-preview and Sonnet 3.5 v2 !!!

IF Category Efficient Frontier:

- Meta Llama-3.1 8B

- Google Gemini 1.5 Flash 8B ️

️

- Google Gemini 1.5 Flash ️

️

- Meta Llama-3.3 70B

Other category overviews coming in a minute

2/4

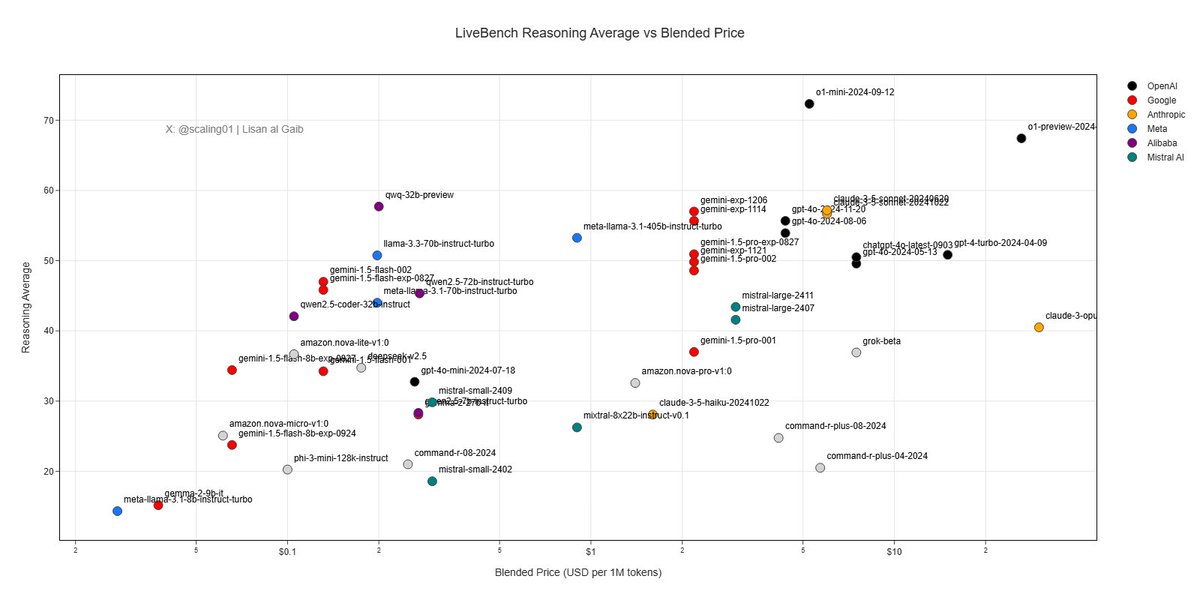

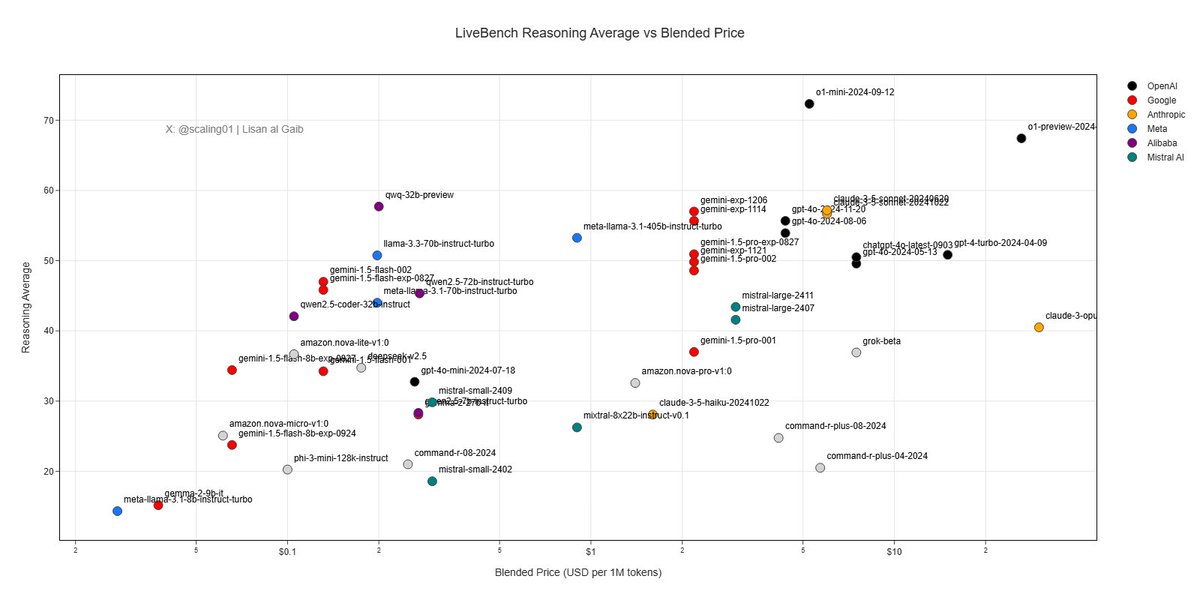

@scaling01

[Quoted tweet]

These are the Kings of Reasoning according to LiveBench!

Another chinese open-weights model by Alibaba Cloud strikes. This time QwQ seperates itself from the pack on the Reasoning Efficient Frontier!

At ~26x the price we find OpenAIs o1-mini model.

Weirdly enough, o1-preview and Sonnet 3.5 v2 are nowhere to be seen on the efficient frontier!

From a pure performance stand-point OpenAIs o1 reasoning models are a step above ALL other models!

3/4

@Mohsine_Mahzi

Love those categories overviews ! It gives far more interesting insights than classic benchmarks. Thanks for sharing

4/4

@BehlHarkirat

It is not so good on arena hard or alpaca eval

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@scaling01

INSTRUCTION FOLLOWING SHOWDOWN!

The new open-weights Llama-3.3 70B model by Meta is the best model for Instruction Following. It beats Gemini Exp 1206, o1-preview and Sonnet 3.5 v2 !!!

IF Category Efficient Frontier:

- Meta Llama-3.1 8B

- Google Gemini 1.5 Flash 8B

- Google Gemini 1.5 Flash

- Meta Llama-3.3 70B

Other category overviews coming in a minute

2/4

@scaling01

[Quoted tweet]

These are the Kings of Reasoning according to LiveBench!

Another chinese open-weights model by Alibaba Cloud strikes. This time QwQ seperates itself from the pack on the Reasoning Efficient Frontier!

At ~26x the price we find OpenAIs o1-mini model.

Weirdly enough, o1-preview and Sonnet 3.5 v2 are nowhere to be seen on the efficient frontier!

From a pure performance stand-point OpenAIs o1 reasoning models are a step above ALL other models!

3/4

@Mohsine_Mahzi

Love those categories overviews ! It gives far more interesting insights than classic benchmarks. Thanks for sharing

4/4

@BehlHarkirat

It is not so good on arena hard or alpaca eval

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/11

@localghost

Run Llama 3.1 1B offline at 60+ tok/s. Entirely on your phone.

Siri can’t save you offline, but a local model just might.

https://video.twimg.com/amplify_video/1865854635841552384/vid/avc1/1920x1080/8lfrzpuY0Dvc8abL.mp4

2/11

@Piennnefi

amazing ! Any tutorial ?

3/11

@localghost

this is my app apollo, you can just download a local model on it

4/11

@AnuragPrasoon

this is crazy

5/11

@localghost

I love our timeline

6/11

@psalmseeker1

Can i get this app on Android? My Snapdragon 865 or Snapdragon Gen 2 , can run it?

7/11

@localghost

my app is ios only but im sure there are android equivalents

8/11

@thealikazai

Would love to see this being setup . Any resources u recommend for me to try this myself.

9/11

@localghost

you can just download llama 3.2 1b & 3b in my app. there are a few other ways to do it though

10/11

@0xCryptoCafe

do you use mlx models?

how about llama 3.3 70B with 4Bit quantization?

11/11

@localghost

see my vid from yesterday, I did that on my laptop

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@localghost

Run Llama 3.1 1B offline at 60+ tok/s. Entirely on your phone.

Siri can’t save you offline, but a local model just might.

https://video.twimg.com/amplify_video/1865854635841552384/vid/avc1/1920x1080/8lfrzpuY0Dvc8abL.mp4

2/11

@Piennnefi

amazing ! Any tutorial ?

3/11

@localghost

this is my app apollo, you can just download a local model on it

4/11

@AnuragPrasoon

this is crazy

5/11

@localghost

I love our timeline

6/11

@psalmseeker1

Can i get this app on Android? My Snapdragon 865 or Snapdragon Gen 2 , can run it?

7/11

@localghost

my app is ios only but im sure there are android equivalents

8/11

@thealikazai

Would love to see this being setup . Any resources u recommend for me to try this myself.

9/11

@localghost

you can just download llama 3.2 1b & 3b in my app. there are a few other ways to do it though

10/11

@0xCryptoCafe

do you use mlx models?

how about llama 3.3 70B with 4Bit quantization?

11/11

@localghost

see my vid from yesterday, I did that on my laptop

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/51

@localghost

Llama 3.3 70B streaming at 7.8 tok/s on an M1 Pro to my phone.

Not the fastest, but wild that you can serve something this powerful to your whole house without internet.

https://video.twimg.com/amplify_video/1865497234634366976/vid/avc1/1920x1080/lte5Xko1DJhTAsnn.mp4

2/51

@thecsguy

Now imagine the speedup if you use this as justification to buy an M4 Max MBP

3/51

@localghost

im imagining

4/51

@kennypliu

AI will be decentralized

5/51

@localghost

6/51

@natesiggard

NASLLM when

7/51

@localghost

already possible!

8/51

@SaidAitmbarek

really cool, how would you make it faster?

better wifi? faster GPUs?

9/51

@localghost

smaller model

10/51

@eFawzan

I am on M2 Max with 32GB Ram, I couldn't get it run locally. It was so slow almost froze my computer.

11/51

@localghost

not enough ram unfortunately

12/51

@LucaColonnello

Did anyone figure out how to use ram as opposed to vram yet? Ir is it just not possible at all?

13/51

@localghost

the m-series laptops have “unified memory”. all ram is shared

14/51

@9Knowled9e

Youtube vid on how to?

15/51

@localghost

maybe, just get ollama or lm studio and set up a server on your computer. then point an ios client to it (my app apollo is one)

16/51

@burkov

> whole house without internet

You mean during a zombie apocalypse?

17/51

@localghost

yes

18/51

@Eyeinmyeyes

what software u use? mine using mlx.generate only ~5 tok/sec on m1 max 64gb

19/51

@localghost

running it on lm studio

20/51

@jh0nsay

which interface or app did you use in the mobile phone?

21/51

@localghost

it’s my app, apollo

22/51

@FerTech

I tried yesterday to run this model over LmStudio as a local server but it didn’t like my setup (m1 Ultra with 64 gb) I think I was missing the q4 not sure how to do that part

23/51

@localghost

on the download page you can click to expand and see the different versions

24/51

@fedri_ss

It's unbelievable. And to think it only gets more efficient from here

25/51

@localghost

indeed

26/51

@strangerTap

How did you achieve this ? I tried with ollama on my M4 pro and it was so slow impossible to use

27/51

@localghost

make sure you have enough ram and use the mlx version

28/51

@AErmolushka

Anything fancy for streaming?

29/51

@localghost

just sse

30/51

@Siddharth87

That’s amazing. I don’t think my M1 can support this. Did you upgrade your ram?

31/51

@localghost

64gb here

32/51

@slowmemuch

Doomsday preppers are starting to add AI next to water and generators in their must have lists.

33/51

@localghost

could save a life

34/51

@__spongeboi

what's ur ram situation?

35/51

@localghost

64gb

36/51

@HilkemeijerOwen

I want to do something like this using SwiftUI how did u connect the app to the Mac

37/51

@localghost

my app just connects to it via local ip

38/51

@lukalot_

how quantized is this?

39/51

@localghost

q4 on m1 max

40/51

@TundeEinstein

How did you do it

41/51

@localghost

hosted on lm studio w/ my app Apollo as an iOS client

42/51

@zeroedev

What quantization?

43/51

@localghost

Q4

44/51

@odedia

How much ram?

45/51

@localghost

64gb

46/51

@adamviaja

what is happening here?

47/51

@localghost

my laptop is hosting the model which is being accessed from my phone

48/51

@daryl_imagineai

Not fast, but fast enough for interactive work. Anything above 3 t/s is usable imo

49/51

@localghost

7 is about my limit

50/51

@temprlflux

Very cool.

51/51

@sol_roi

oh now i want to try this workspace setup too

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@localghost

Llama 3.3 70B streaming at 7.8 tok/s on an M1 Pro to my phone.

Not the fastest, but wild that you can serve something this powerful to your whole house without internet.

https://video.twimg.com/amplify_video/1865497234634366976/vid/avc1/1920x1080/lte5Xko1DJhTAsnn.mp4

2/51

@thecsguy

Now imagine the speedup if you use this as justification to buy an M4 Max MBP

3/51

@localghost

im imagining

4/51

@kennypliu

AI will be decentralized

5/51

@localghost

6/51

@natesiggard

NASLLM when

7/51

@localghost

already possible!

8/51

@SaidAitmbarek

really cool, how would you make it faster?

better wifi? faster GPUs?

9/51

@localghost

smaller model

10/51

@eFawzan

I am on M2 Max with 32GB Ram, I couldn't get it run locally. It was so slow almost froze my computer.

11/51

@localghost

not enough ram unfortunately

12/51

@LucaColonnello

Did anyone figure out how to use ram as opposed to vram yet? Ir is it just not possible at all?

13/51

@localghost

the m-series laptops have “unified memory”. all ram is shared

14/51

@9Knowled9e

Youtube vid on how to?

15/51

@localghost

maybe, just get ollama or lm studio and set up a server on your computer. then point an ios client to it (my app apollo is one)

16/51

@burkov

> whole house without internet

You mean during a zombie apocalypse?

17/51

@localghost

yes

18/51

@Eyeinmyeyes

what software u use? mine using mlx.generate only ~5 tok/sec on m1 max 64gb

19/51

@localghost

running it on lm studio

20/51

@jh0nsay

which interface or app did you use in the mobile phone?

21/51

@localghost

it’s my app, apollo

22/51

@FerTech

I tried yesterday to run this model over LmStudio as a local server but it didn’t like my setup (m1 Ultra with 64 gb) I think I was missing the q4 not sure how to do that part

23/51

@localghost

on the download page you can click to expand and see the different versions

24/51

@fedri_ss

It's unbelievable. And to think it only gets more efficient from here

25/51

@localghost

indeed

26/51

@strangerTap

How did you achieve this ? I tried with ollama on my M4 pro and it was so slow impossible to use

27/51

@localghost

make sure you have enough ram and use the mlx version

28/51

@AErmolushka

Anything fancy for streaming?

29/51

@localghost

just sse

30/51

@Siddharth87

That’s amazing. I don’t think my M1 can support this. Did you upgrade your ram?

31/51

@localghost

64gb here

32/51

@slowmemuch

Doomsday preppers are starting to add AI next to water and generators in their must have lists.

33/51

@localghost

could save a life

34/51

@__spongeboi

what's ur ram situation?

35/51

@localghost

64gb

36/51

@HilkemeijerOwen

I want to do something like this using SwiftUI how did u connect the app to the Mac

37/51

@localghost

my app just connects to it via local ip

38/51

@lukalot_

how quantized is this?

39/51

@localghost

q4 on m1 max

40/51

@TundeEinstein

How did you do it

41/51

@localghost

hosted on lm studio w/ my app Apollo as an iOS client

42/51

@zeroedev

What quantization?

43/51

@localghost

Q4

44/51

@odedia

How much ram?

45/51

@localghost

64gb

46/51

@adamviaja

what is happening here?

47/51

@localghost

my laptop is hosting the model which is being accessed from my phone

48/51

@daryl_imagineai

Not fast, but fast enough for interactive work. Anything above 3 t/s is usable imo

49/51

@localghost

7 is about my limit

50/51

@temprlflux

Very cool.

51/51

@sol_roi

oh now i want to try this workspace setup too

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/20

@adcock_brett

What a week for AI and Robotics.

As usual, I summarized everything announced by OpenAI, Google DeepMind, Amazon, Microsoft AI, Tencent, ElevenLabs, Meta, xAI, and more.

Here's everything you need to know and how to make sense out of it:

2/20

@adcock_brett

OpenAI announced the immediate launch of their latest o1 and o1 pro reasoning models on day 1 of 'shipmas'

They also introduced a new $200/month Pro tier with unlimited access to their existing model fleet and 01-pro

https://video.twimg.com/ext_tw_video/1864731897861148675/pu/vid/avc1/1920x1080/m8ewXJ3rLoQO9esP.mp4

3/20

@adcock_brett

Google announced Genie2, a large-scale, multimodal foundation world AI model

It can convert single images into interactive, playable 3D environments

Excited about what this can do not just for AI gaming, but also for AI training in simulation

https://video.twimg.com/ext_tw_video/1864351674816495616/pu/vid/avc1/1280x720/Jz3t596U6zObllGO.mp4

4/20

@adcock_brett

Amazon announced Nova, a new family of AI models

The lineup includes four text models of varying capabilities (Micro, Lite, Pro, and Premier), plus Canvas (image) and Reel (video) models

Nice to see Amazon finally making some noise

https://video.twimg.com/amplify_video/1864290507184132096/vid/avc1/720x900/jQNlFahIkLUeONSX.mp4

5/20

@adcock_brett

We announced some big updates to @cover_thz, my AI security company I announced last year:

1. Our first system capable of detecting consealed weapons was completed this summer

2. Design is underway for our 2nd-gen system which will be radically better

[Quoted tweet]

Last year, I started an AI security company called Cover

Our AI hardware detects guns underneath clothes and bags

And I’m currently funding $10M into it

Here’s why this technology is critical and a 12-month update:

6/20

@adcock_brett

Meta dropped Llama 3.3, a 70B open model with similar performance to Llama 3.1 405B, but significantly cheaper

It's text only for now. Available to download at Meta's Llama page and on HuggingFace

7/20

@adcock_brett