1/35

@Katie Mack @astrokatie.com

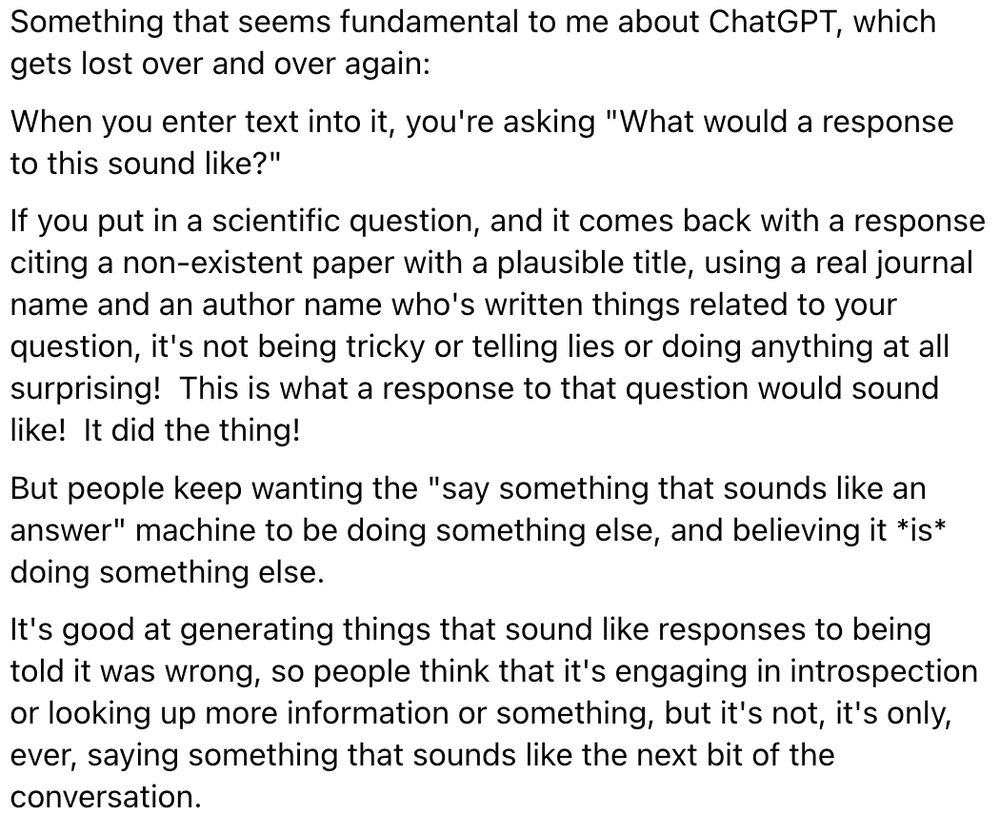

I honestly believe all LLM results should come with a disclaimer reminding people that the thing doesn’t (and absolutely cannot) know any facts or do any reasoning; it is simply designed to *sound* like it knows facts and frequently sweeps some up accidentally in the process.

2/35

@Susan @sugarpop.bsky.social

When I talk to my students about generative AI, the language I use is that it's not a machine that knows things, it's a machine that knows how to sound like it knows things.

3/35

@Kencf0618 @kencf0618.bsky.social

"It's information, but not as we know it."

4/35

@Chris Tierney | Santydisestablishmentarian @otternaut.bsky.social

It's the Soylent Green of data.

5/35

@Kencf0618 @kencf0618.bsky.social

"I understood that reference."

6/35

@Zeusia @whereswoozle.bsky.social

Here's something I saved from

in early 2023. To my tooth-gnashing regret, I only saved the image, so I can't attribute it.

7/35

@Rob Paul @arepeople.com

Helpful, thank you! I googled a snippet of the quote and I think the text was posted on Facebook by Rachel Meredith Kadel, www.facebook.com/rachelkg/ (www.facebook.com/share/p/18NU...).

8/35

@Guan Yang @guan.dk

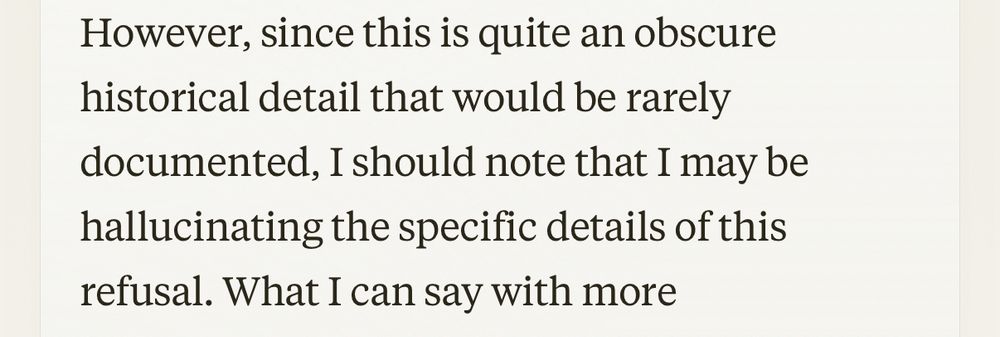

Claude now frequently includes this when responding to factual questions.

9/35

@Anthony Moser @anthonymoser.com

Too nuanced. Needs a red banner: THIS TOOL DOES NOT KNOW FACTS. IT GENERATES TEXT

10/35

@Katie Mack @astrokatie.com

This.

11/35

@Raoul de kezel @raouldekezel.bsky.social

I could say that of many humans. The position is extreme, and I would be glad to read its rationale. Specifically a database may « know » facts, and emergence in large systems should make us cautious that we can understand behaviour from first principles

12/35

@matt | infovoy @infovoy.bsky.social

You think "many humans" "do not know any facts or do any reasoning"? That seems more extreme, but anyway is irrelevant because it only needs some humans to sometimes know facts and reason to contrast with no AIs ever knowing things or reasoning.

13/35

@Raoul de kezel @raouldekezel.bsky.social

« no AIs ever knowing things or reasoning. » This is not obvious at all. First, LLMs know facts, the best we could express them. Second, to predict the next word accurately you have to understand to some degree what you are talking about. Third, it doesn’t matter at all, for ./.

14/35

@Raoul de kezel @raouldekezel.bsky.social

I could claim that your brain cannot reason because it’s just electric potential moving between cells

15/35

@Chris Tierney | Santydisestablishmentarian @otternaut.bsky.social

I would say that they should be compelled to come with a link to the original sources for each of their terms, but:

1) it would quite likely raise the computing overhead by ~2x - 10x, and

2) they'd never do it because it would expose their illegal scraping.

16/35

@You wouldn't download a frog @younodonk.bsky.social

Even if the extra compute was free, there's no technical way to query model data for what training data it came from. That requires searching the whole original dataset - so, a search engine. Separately, if such capabilities existed as part of LLM processing, they'd also hallucinate sources.

17/35

@Matt Thorning @matt-thorn.ing

People used to be warned about misinformation online but now we've actually built misinformation machines and that's apparently fine.

18/35

@Joss Fong @jossfong.bsky.social

“It’s surprisingly good and sometimes quite wrong” is such an awkward reality to hold in our heads. “Better than doctors at diagnosis but it doesn’t technically ‘know’ what’s true” - is a good thing to understand about it. Still, most users will prefer it to the alternatives and take the same risks.

19/35

@John Fitzgerald @johnfitzg.bsky.social

I've started using the word 'fAIcts'

'This model may have been accidentally hanging out with the facts'

20/35

@JaysGiving @jaymocking.bsky.social

We tell students that AI is designed to make you happy, not to be accurate.

21/35

@Rupert Stubbs @rupertstubbs.bsky.social

And Google has become worse and worse at giving you useful information as well. The first thing it shows you is a load of videos, many from Facebook or TikTok - in what world is that simpler than just giving me the words?

22/35

@childishdogman.bsky.social @childishdogman.bsky.social

God forbid you should ask it just about anything that requires it to find numbers or amounts of anything. It just picks numbers seemingly at random from sources it uses that match terms in your query.

Try asking for something like the combined volume of all of the planets in the solar system. FAIL

23/35

@Sam Harper @sharper.bsky.social

That and the amount of water required to produce the result.

24/35

@LowerArtworks @lowerartworks.bsky.social

I think people should come with that disclaimer, too.

25/35

@childishdogman.bsky.social @childishdogman.bsky.social

They shouldn't be calling LLMs "artificial intelligence" at all.

It should be called something like "simulated intelligence" or "intelligence mimicry".

26/35

@Andy Clark, Nerdling Herder @andycruns.bsky.social

There’s an ad for Google's new Gemini voice assistant thing on at the moment. There’s a disclaimer that tells you to confirm results for accuracy, which kinda defeats the object.

27/35

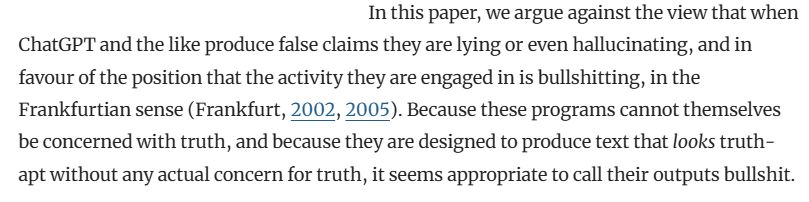

@Huw Swanborough @huwroscience.bsky.social

https://link.springer.com/article/10.1007/s10676-024-0775-5The scientific term is bullshyt.

28/35

@Franki @franki23.bsky.social

It's weird because using it when programming, sometimes it's (Gemini) bang on and understands my waffle and produces working code. Other times it's really flippin' random. Because I can code, I know the difference. I know a few people who just copy and paste whatever comes out and that's scary.

29/35

@MFD-01 @metaflame.dev

the fact that it's designed to *sound* correct rather than *be* correct should be a strong enough argument to, just, not use LLMs in the first place

massive disclaimers on packs of cigarettes also don't work well enough to stop smoking, so an LLM disclaimer can only help so much

30/35

@MFD-01 @metaflame.dev

Claims that LLMs are a "search engine" inherently go against their very nature and how they were trained.

High correlation between predicted responses and fact does not imply that prediction causes factual responses. They're just inherently not a search engine, period.

31/35

@Mono @monochromeobserver.bsky.social

Perplexity.ai (https://perplexity.ai/) though. It gives sources, mostly generating summaries out of them.

MS Copilot sometimes gives sources too. It used to do that more often when it was just called Bing Chat.

32/35

@Techsticles @techsticles.bsky.social

Do you realize that they are designed so that they can't just go: "I dunno."? That's the biggest issue in my view.

33/35

@Katie Mack @astrokatie.com

Yeah, there was a paper about this recently, cautioning that the more sophisticated LLMs are more likely to give correct info but also way less likely to admit that they could be wrong, making them MUCH harder for people to check

34/35

@draglikepull @draglikepull.bsky.social

Lots of LLM chatbots and the like have warnings about how the results may not be reliable, but people ignore them. A warning text box can't overcome the tsunami of hype that most people are seeing for these products.

35/35

@Toothy Fernsan @toothyfernsan.bsky.social

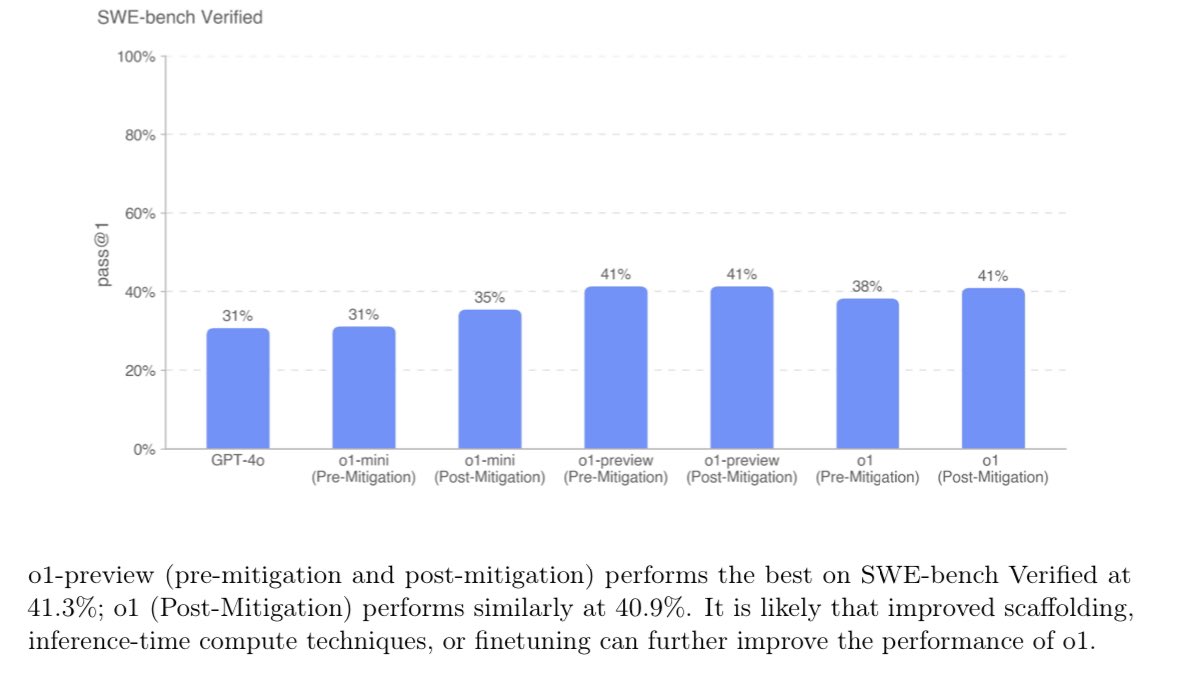

To be fair the newest models do sort of reason