The A.I Megathread (LLM , GPT , Development)

Welcome to the Falcon 3 Family of Open Models!

Published December 17, 2024

We introduce Falcon3, a family of decoder-only large language models under 10 billion parameters, developed by Technology Innovation Institute (TII) in Abu Dhabi. By pushing the boundaries of performance and training efficiency, this release reflects our ongoing commitment to advancing open and accessible large foundation models.

Falcon3 represents a natural evolution from previous releases, with an emphasis on expanding the models' science, math, and code capabilities.

This iteration includes five base models:

- Falcon3-1B-Base

- Falcon3-3B-Base

- Falcon3-Mamba-7B-Base

- Falcon3-7B-Base

- Falcon3-10B-Base

In developing these models, we incorporated several key innovations aimed at improving the models' performances while reducing training costs:

- One pre-training for transformer-based models: We conducted a single large-scale pretraining run on the 7B model, using 1024 H100 GPU chips, leveraging 14 trillion tokens featuring web, code, STEM, and curated high-quality and multilingual data.

- Depth up-scaling for improved reasoning: Building on recent studies on the effects of model depth, we upscaled the 7B model to a 10B-parameter model by duplicating the redundant layers and continuing pre-training with 2 trillion tokens of high-quality data. This yielded Falcon3-10B-Base, which achieves state-of-the-art zero-shot and few-shot performance for models under 13B parameters.

- Knowledge distillation for better tiny models: To provide compact and efficient alternatives, we developed Falcon3-1B-Base and Falcon3-3B-Base by leveraging pruning and knowledge distillation techniques, using less than 100 GT (gigatokens) of curated high-quality data, thereby redefining pre-training efficiency.

- Pure SSM: We have further enhanced Falcon Mamba 7B by training on an additional 1.5 trillion tokens of high-quality data, resulting in Falcon3-Mamba-7B-Base. Notably, the updated model offers significantly improved reasoning and mathematical capabilities.

- Other variants: All models in the Falcon3 family are available in variants such as Instruct, GGUF, GPTQ-Int4, GPTQ-Int8, AWQ, and 1.58-bit, offering flexibility for a wide range of applications.
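The depth up-scaling step in the list above can be pictured with a toy sketch. The post does not say which layers were treated as redundant or how they were chosen, so the `upscale_depth` helper and the `start`/`end` indices below are hypothetical, and plain strings stand in for real transformer layers (a real implementation would copy the layer weights and then continue pre-training).

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def upscale_depth(layers: Sequence[T], start: int, end: int) -> List[T]:
    """Return a deeper stack in which layers[start:end] appear twice in a row.

    Which layers to duplicate is an assumption of this sketch; the post
    only says that "redundant" layers were duplicated.
    """
    if not 0 <= start <= end <= len(layers):
        raise ValueError("need 0 <= start <= end <= len(layers)")
    duplicated = list(layers[start:end])
    # Original layers up to `end`, then the repeated block, then the rest.
    return list(layers[:end]) + duplicated + list(layers[end:])


# Toy example: an 8-layer stack grows to 12 layers by repeating layers 2..5.
base = [f"layer_{i}" for i in range(8)]
deeper = upscale_depth(base, start=2, end=6)
print(len(base), "->", len(deeper))
```

The sketch only shows the structural change; the 2 trillion tokens of continued pre-training mentioned in the post are what let the duplicated layers diverge and become useful.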

Key Highlights

Falcon3 pushes the limits of small and medium-scale large language models, demonstrating high performance on common benchmarks:

- Falcon3-1B-Base surpasses SmolLM2-1.7B and is on par with gemma-2-2b.

- Falcon3-3B-Base outperforms larger models like Llama-3.1-8B and Minitron-4B-Base, highlighting the benefits of pre-training with knowledge distillation.

- Falcon3-7B-Base demonstrates top performance, on par with Qwen2.5-7B, among models under the 9B scale.

- Falcon3-10B-Base is state-of-the-art in the under-13B category.

- All the transformer-based Falcon3 models are compatible with Llama architecture allowing better integration in the AI ecosystem.

- Falcon3-Mamba-7B continues to lead as the top-performing State Space Language Model (SSLM), matching or even surpassing leading transformer-based LLMs at the 7B scale, along with support for a longer 32K context length. Having the same architecture as the original Falcon Mamba 7B, users can integrate Falcon3-Mamba-7B seamlessly without any additional effort.

- The instruct versions of our collection of base models further show remarkable performance across various benchmarks with Falcon3-7B-Instruct and Falcon3-10B-Instruct outperforming all instruct models under the 13B scale on the open leaderboard.
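For readers unfamiliar with the knowledge distillation credited above for the 1B and 3B results, here is a minimal sketch of the textbook formulation: the student is trained to match the teacher's temperature-softened output distribution. The post does not describe the loss TII actually used, so the function names, the temperature value, and the logits below are illustrative assumptions.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher (target) distribution
    q = softmax(student_logits, temperature)  # student distribution
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)
    return kl * temperature ** 2


# Made-up logits over a 3-token vocabulary.
teacher = [2.0, 1.0, 0.1]
matching_student = [2.0, 1.0, 0.1]
poor_student = [0.1, 1.0, 2.0]
print(distillation_loss(matching_student, teacher))
print(distillation_loss(poor_student, teacher))
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, which is the signal that lets a small model learn from far fewer tokens than training from scratch.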

Enhanced Capabilities

We evaluated models with our internal evaluation pipeline (based on lm-evaluation-harness) and we report raw scores. Our evaluations highlight key areas where the Falcon3 family of models excel, reflecting the emphasis on enhancing performance in scientific domains, reasoning, and general knowledge capabilities:

- Math Capabilities: Falcon3-10B-Base achieves 22.9 on MATH-Lvl5 and 83.0 on GSM8K, showcasing enhanced reasoning in complex math-focused tasks.

- Coding Capabilities: Falcon3-10B-Base achieves 73.8 on MBPP, while Falcon3-10B-Instruct scores 45.8 on Multipl-E, reflecting their abilities to generalize across programming-related tasks.

- Extended Context Length: Models in the Falcon3 family support up to 32k tokens (except the 1B supporting up to 8k context), with functional improvements such as scoring 86.3 on BFCL (Falcon3-10B-Instruct).

- Improved Reasoning: Falcon3-7B-Base and Falcon3-10B-Base achieve 51.0 and 59.7 on BBH, reflecting enhanced reasoning capabilities, with the 10B model showing improved reasoning performance over the 7B.

- Scientific Knowledge Expansion: Performance on MMLU benchmarks demonstrates advances in specialized knowledge, with scores of 67.4/39.2 (MMLU/MMLU-PRO) for Falcon3-7B-Base and 73.1/42.5 (MMLU/MMLU-PRO) for Falcon3-10B-Base respectively.
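To make the 7B versus 10B comparison in the bullets above concrete, the following sketch computes the point gains from the scores quoted in the post. Only the numbers come from the post; the dictionary layout and variable names are illustrative.

```python
# Base-model scores quoted in the post (raw scores, in points).
scores = {
    "BBH": {"7B": 51.0, "10B": 59.7},
    "MMLU": {"7B": 67.4, "10B": 73.1},
    "MMLU-PRO": {"7B": 39.2, "10B": 42.5},
}

# Gain of Falcon3-10B-Base over Falcon3-7B-Base, rounded to one decimal.
gains = {name: round(s["10B"] - s["7B"], 1) for name, s in scores.items()}

for name, gain in gains.items():
    print(f"{name}: +{gain} points")
```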

Models' Specs and Benchmark Results

Detailed specifications of the Falcon3 family of models are summarized in the following table. The architecture of Falcon3-7B-Base is characterized by a head dimension of 256, which yields high throughput with FlashAttention-3 because it is optimized for this dimension. The transformer-based models span 18 to 40 layers, and the Mamba model has 64 layers. All models share the SwiGLU activation function and a vocabulary size of 131K tokens (65K for Mamba-7B). Falcon3-7B-Base is trained on the largest amount of data, ensuring comprehensive coverage of concepts and knowledge; the other variants require far less data.

The table below highlights the performances of Falcon3-7B-Base and Falcon3-10B-Base on key benchmarks showing competitive performances in general, math, reasoning, and common sense understanding domains.

The instruct models also demonstrate competitive and superior performance relative to equivalent and small-size models, as highlighted in the tables below.

https://huggingface.co/blog/falcon3#instruct-models

Instruct models

Falcon3-1B-Instruct and Falcon3-3B-Instruct achieve robust performance across the evaluated benchmarks. Specifically, Falcon3-1B attains competitive results in IFEval (54.4), MUSR (40.7), and SciQ (86.8), while Falcon3-3B exhibits further gains—particularly in MMLU-PRO (29.7) and MATH (19.9)—demonstrating clear scaling effects. Although they do not surpass all competing models on every metric, Falcon models show strong performances in reasoning and common-sense understanding relative to both Qwen and Llama.

Furthermore, Falcon3-7B and Falcon3-10B show robust performance across the evaluated benchmarks. Falcon3-7B achieves competitive scores on reasoning (Arc Challenge: 65.9, MUSR: 46.4) and math (GSM8K: 79.1), while Falcon3-10B demonstrates further improvements, notably in GSM8K (83.1) and IFEval (78), indicating clear scaling benefits.

Falcon3 - a tiiuae Collection

Falcon3 family of Open Foundation Models is a set of pretrained and instruct LLMs ranging from 1B to 10B parameters.

1/10

@reach_vb

Falcon 3 is out! 1B, 3B, 7B, 10B (Base + Instruct) & 7B Mamba, trained on 14 Trillion tokens and apache 2.0 licensed!

> 1B-Base surpasses SmolLM2-1.7B and matches gemma-2-2b

> 3B-Base outperforms larger models like Llama-3.1-8B and Minitron-4B-Base

> 7B-Base is on par with Qwen2.5-7B in the under-9B category

> 10B-Base is state-of-the-art in the under-13B category

> Math + Reasoning: 10B-Base scores 24.77 on MATH-Lvl5 and 83.0 on GSM8K

> Coding: 10B-Base scores 73.8 on MBPP, while 10B-Instruct scores 45.8 on Multipl-E

> 10B-Instruct scores 86.3 on BFCL with a 32K context length

> 10B-Base scores 73.1/42.5 on MMLU/MMLU-PRO, outperforming 7B-Base (67.4/39.2)

> Release GGUFs, AWQ, GPTQ and Bitnet quants along with the release!

Kudos @TIIuae - this looks brilliant!

2/10

@reach_vb

Check out all the model checkpoints here:

Falcon3 - a tiiuae Collection

3/10

@TheXeophon

7B: „14 Gigatokens“

10B: 7B + pre-training on „2 Teratokens“

3B: pruned from 7B on „effectively 100 GT“

1B: pruned from 3B on „effectively 80 GT“

4/10

@Dorialexander

Is it Apache 2.0 or their home license? Not very clear about what that entails.

5/10

@inversetrs

you know it's good when Qwen is included in the comparison table

6/10

@AbdullahAdeebi

Available on @ollama?

7/10

@_exe910

It's more censored than llama 3.1 8b

8/10

@TheXeophon

Interesting, from releasing the big chonkers to smol models

9/10

@TextbookTrade1

Why is Math score “0” for Qwen2.5-7b?

10/10

@danNH2006

You can try all these models here, I have an endpoint available for free, can you give me your thoughts?

PrivateGPT

1/3

@ailozovskaya

Falcon3 Model Family joins the Open LLM Leaderboard!

The Falcon3 family of Open Foundation Models includes pretrained and instruction-tuned versions, ranging from 1B to 10B parameters. Falcon3 models support English, French, Spanish, and Portuguese, while Falcon3-Mamba models focus primarily on English

Key Benchmark Highlights

• Consistent performance across benchmarks, with the 10B-Instruct model leading the family

• Strong results on MATH Hard tasks

Three Falcon3 models rank among the best on the Open LLM Leaderboard

• Falcon3-10B-Instruct – the best chat model in the ~13B category (avg. 35.19)

• Falcon3-10B-Base – the best pretrained model in the ~13B category (avg. 27.59)

• Falcon3-7B-Instruct – the best chat model within the ~7B category (avg. 34.91)

License: TII Falcon-LLM License 2.0

What an impressive release, @TIIuae !

2/3

@ailozovskaya

Open LLM Leaderboard: Open LLM Leaderboard - a Hugging Face Space by open-llm-leaderboard

Open LLM Leaderboard best models: Open LLM Leaderboard best models - a open-llm-leaderboard Collection

Model Family: Falcon3 - a tiiuae Collection

3/3

@ailozovskaya

Check out the blogpost for tech details:

Welcome to the Falcon 3 Family of Open Models!

1/1

@Marktechpost

Meta AI Releases Apollo: A New Family of Video-LMMs Large Multimodal Models for Video Understanding

Researchers from Meta AI and Stanford developed Apollo, a family of video-focused LMMs designed to push the boundaries of video understanding. Meta AI’s Apollo models are designed to process videos up to an hour long while achieving strong performance across key video-language tasks. Apollo comes in three sizes – 1.5B, 3B, and 7B parameters – offering flexibility to accommodate various computational constraints and real-world needs.

Key innovations include:

1.5B, 3B, and 7B model checkpoints

Can comprehend up to 1 hour of video

Temporal reasoning & complex video question-answering

Multi-turn conversations grounded in video content....

Read the full article here: Meta AI Releases Apollo: A New Family of Video-LMMs Large Multimodal Models for Video Understanding

Paper: [2412.10360] Apollo: An Exploration of Video Understanding in Large Multimodal Models

Models: Apollo-LMMs (Apollo-LMMs)

Join our ML Subreddit (60k+ members): https://www.reddit.com/r/machinelearningnews/

@AIatMeta @fb_engineering @Meta @Stanford

https://video.twimg.com/ext_tw_video/1868908461498748928/pu/vid/avc1/1280x720/-808WHPXPBXKBRDo.mp4

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/8

@_akhaliq

Meta releases Apollo

An Exploration of Video Understanding in Large Multimodal Models

a family of state-of-the-art video-LMMs

https://video.twimg.com/ext_tw_video/1868534932114419713/pu/vid/avc1/1280x720/IMJZ3ikflXQ2JHRj.mp4

2/8

@_akhaliq

discuss: Paper page - Apollo: An Exploration of Video Understanding in Large Multimodal Models

3/8

@orr_zohar

Thank you @_akhaliq for highlighting our work! We hope that Apollo's insights help further accelerate the video-LMM field with better, more informed design decisions!

4/8

@CohorteAI

This is a great read, check this out: What Can Large Language Models Achieve?.

What Can Large Language Models Achieve? - Cohorte Projects

5/8

@NotBrain4brain

Meta is slowly cooking up AGI

6/8

@imConquered

The progress lately is huge.

7/8

@adugbovictory

Meta’s Apollo feels like the future of video-LMMs! The ability to ‘understand’ video could open up so many possibilities, from smarter content tagging to AI-powered analysis.

8/8

@XiaohanWang96

Thanks for highlighting our work! We systematically explore the design space of video large multimodal models, offering actionable insights. Introducing ApolloBench for efficient evaluation and Apollo, a state-of-the-art video-LMM family—all open-source to advance the field!

1/2

@gm8xx8

Apollo: An Exploration of Video Understanding in Large Multimodal Models

Apollo is a family of state-of-the-art video-LMMs. Its development revealed Scaling Consistency, a principle that allows design decisions made on smaller models and datasets to transfer reliably to larger models, significantly reducing computational costs. Using this approach, hundreds of model variants were trained to explore video sampling methods, token integration, training schedules, and data combinations. These insights enabled Apollo to set new benchmarks in efficient and high-performance video-language modeling.

2/2

@gm8xx8

models: Apollo-LMMs (Apollo-LMMs)

paper: [2412.10360] Apollo: An Exploration of Video Understanding in Large Multimodal Models

project page: Apollo

1/1

@itinaicom

Meta AI has just released Apollo, a groundbreaking family of Large Multimodal Models (LMMs) designed for advanced video understanding. With capabilities to analyze videos up to an hour long and innovative sampling techniques, Apollo sets new benchmarks f… Meta AI Releases Apollo: A New Family of Video-LMMs Large Multimodal Models for Video Understanding

1/1

@bbrunomls

#6 Paper of the Day - Apollo: Advancing Video Understanding in Large Multimodal Models!

In their latest paper, "Apollo: An Exploration of Video Understanding in Large Multimodal Models," researchers introduce Apollo, a state-of-the-art family of Large Multimodal Models (LMMs) designed to improve video comprehension.

The Problem: Despite rapid advancements in image-based LMMs, video understanding remains challenging due to high computational costs and design complexities.

The Solution: Apollo addresses this by systematically exploring key design choices like video sampling, model architectures, and training schedules. The research introduces Scaling Consistency, where insights from smaller models transfer effectively to larger ones.

The Result: Apollo models achieve superior performance on benchmarks like LongVideoBench and MLVU. For instance, Apollo-3B outperforms many 7B models, achieving a score of 55.1 on LongVideoBench.

Read the full paper here: [2412.10360] Apollo: An Exploration of Video Understanding in Large Multimodal Models

#AI #MachineLearning #DeepLearning #GenAI #AIResearch

1/2

@gm8xx8

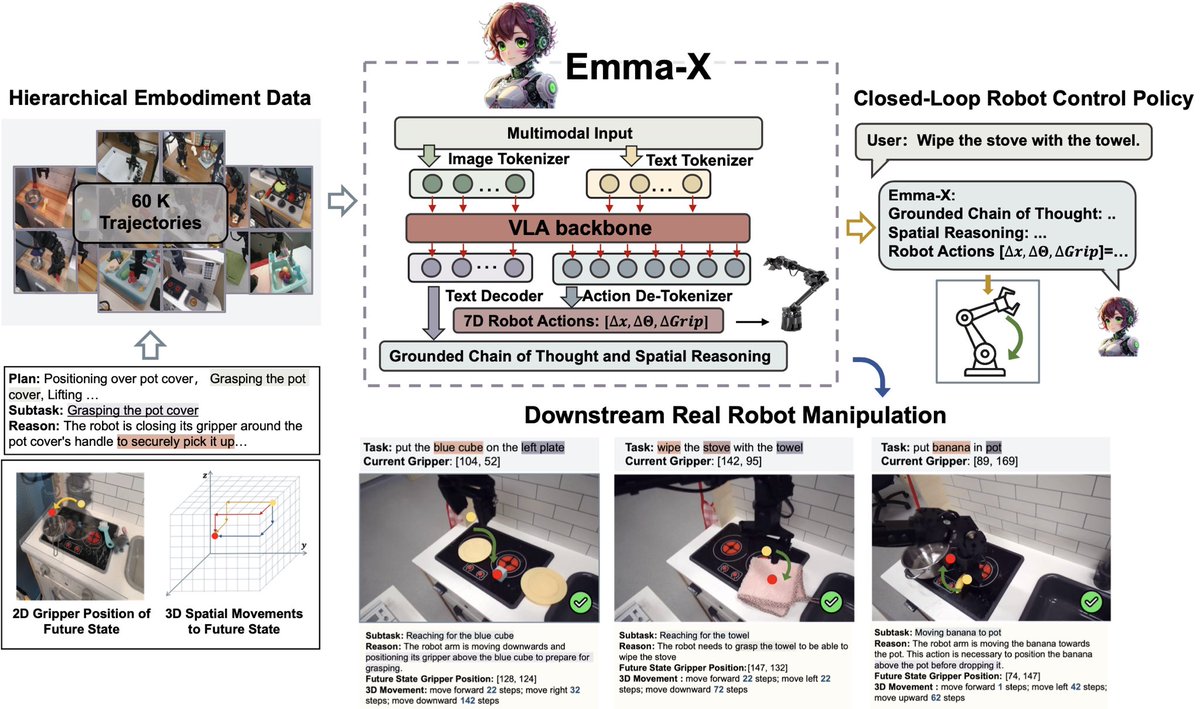

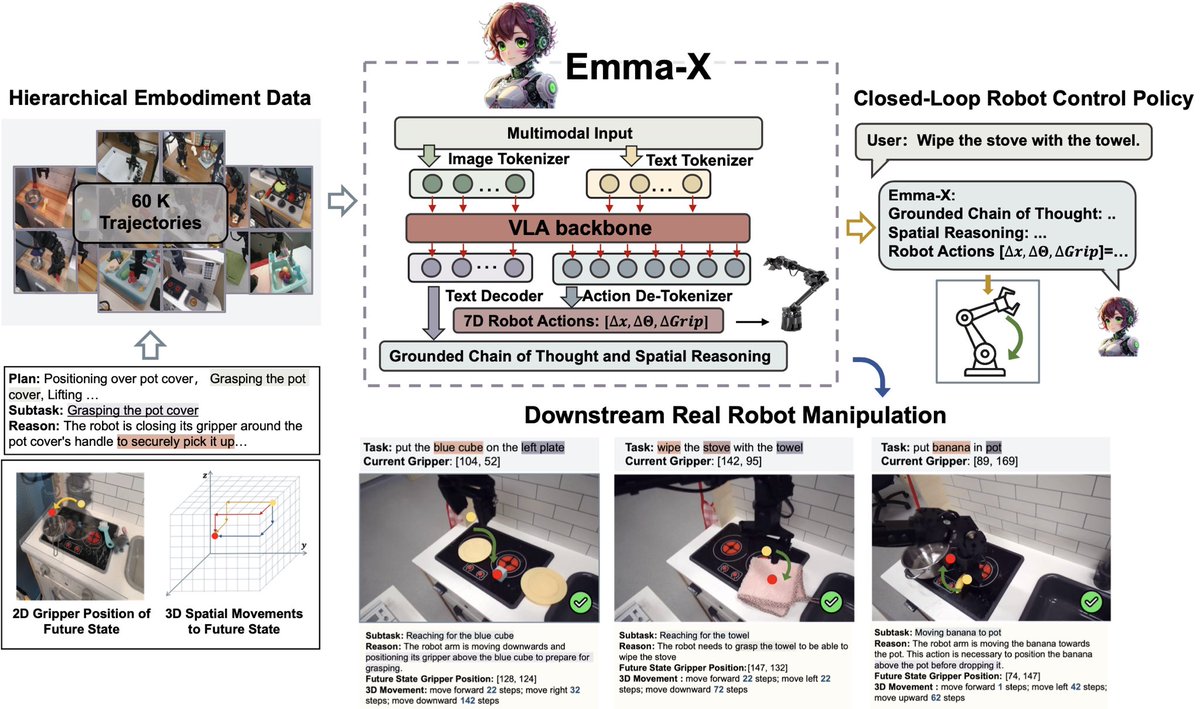

Emma-X: An Embodied Multimodal Action Model with Grounded Chain of Thought and Look-ahead Spatial Reasoning

Emma-X, an Embodied Multimodal Action Model that combines Grounded CoT reasoning and Look-ahead Spatial Reasoning. Emma-X is trained on a hierarchical embodiment dataset derived from BridgeV2, containing 60,000 robot manipulation trajectories annotated with grounded task reasoning and spatial guidance. A trajectory segmentation strategy, based on gripper states and motion paths, further reduces hallucination in subtask reasoning.

Experimental results show that Emma-X outperforms competitive baselines, especially in real-world robotic tasks requiring spatial reasoning.
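As a rough illustration of what segmenting a trajectory by gripper state can look like, the sketch below cuts a sequence of timesteps wherever the gripper toggles between open and closed. This is not the Emma-X implementation: the tweet says the strategy uses both gripper states and motion paths, while this hypothetical `segment_by_gripper` helper uses gripper states only, and the sample data is made up.

```python
from typing import List, Tuple


def segment_by_gripper(gripper_open: List[bool]) -> List[Tuple[int, int]]:
    """Split a trajectory into [start, end) index ranges at each gripper toggle."""
    if not gripper_open:
        return []
    segments: List[Tuple[int, int]] = []
    start = 0
    for t in range(1, len(gripper_open)):
        # A change of gripper state closes the current segment.
        if gripper_open[t] != gripper_open[t - 1]:
            segments.append((start, t))
            start = t
    segments.append((start, len(gripper_open)))
    return segments


# Made-up trajectory: approach (open), carry (closed), release (open).
states = [True, True, False, False, False, True]
segments = segment_by_gripper(states)
print(segments)
```

Each resulting range could then be annotated as one subtask, which is the general idea behind grounding the reasoning in shorter, more coherent chunks of the trajectory.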

2/2

@gm8xx8

↓

model: declare-lab/Emma-X · Hugging Face

code: GitHub - declare-lab/Emma-X: Emma-X: An Embodied Multimodal Action Model with Grounded Chain of Thought and Look-ahead Spatial Reasoning

paper: [2412.11974] Emma-X: An Embodied Multimodal Action Model with Grounded Chain of Thought and Look-ahead Spatial Reasoning

1/20

@itinaicom

Exciting news in chemical synthesis! Microsoft and Novartis have released a new AI framework, Chimera, enhancing retrosynthesis prediction with improved accuracy and scalability. By integrating multiple ML models, Chimera addresses complex challenges,… This AI Paper from Microsoft and Novartis Introduces Chimera: A Machine Learning Framework for Accurate and Scalable Retrosynthesis Prediction

2/20

@itinaicom

UBC researchers unveil 'First Explore'—a groundbreaking two-policy learning approach targeting meta-reinforcement learning's exploration failures. This method enhances performance by separating exploration and exploitation, achieving outstanding resul… UBC Researchers Introduce ‘First Explore’: A Two-Policy Learning Approach to Rescue Meta-Reinforcement Learning RL from Failed Explorations

3/20

@itinaicom

Introducing Gaze-LLE: a groundbreaking AI model for gaze target estimation that streamlines the process with a simplified architecture. This innovative approach reduces computational needs by 95% and achieves top performance across benchmarks. Discover m… Gaze-LLE: A New AI Model for Gaze Target Estimation Built on Top of a Frozen Visual Foundation Model

4/20

@itinaicom

Microsoft AI Research has introduced OLA-VLM, a groundbreaking vision-centric approach to optimizing Multimodal Large Language Models! This innovation enhances the integration of visual data, improving accuracy while maintaining efficiency. Discover the … Microsoft AI Research Introduces OLA-VLM: A Vision-Centric Approach to Optimizing Multimodal Large Language Models

5/20

@itinaicom

Exciting news from Meta FAIR! They've launched Meta Motivo, a cutting-edge Behavioral Foundation Model designed for controlling virtual physics-based humanoid agents across diverse tasks. Unleashing AI potential through innovative learning! #MetaMotiv… Meta FAIR Releases Meta Motivo: A New Behavioral Foundation Model for Controlling Virtual Physics-based Humanoid Agents for a Wide Range of Complex Whole-Body Tasks
6/20

@itinaicom

Exciting news from Nexa AI! They've just launched OmniAudio-2.6B, a groundbreaking audio language model designed for edge deployment. With speeds over 10x faster than alternatives, it's perfect for wearables and IoT devices. Discover more about this g… Nexa AI Releases OmniAudio-2.6B: A Fast Audio Language Model for Edge Deployment

7/20

@itinaicom

Unlock the future of AI with the open-sourced DeepSeek-VL2 series! Discover three powerful models (3B, 16B, 27B parameters) utilizing Mixture-of-Experts architecture to redefine vision-language tasks. From enhancing OCR to streamlining multimodal analysi… DeepSeek-AI Open Sourced DeepSeek-VL2 Series: Three Models of 3B, 16B, and 27B Parameters with Mixture-of-Experts (MoE) Architecture Redefining Vision-Language AI

8/20

@itinaicom

Introducing BiMediX2: the bilingual (Arabic-English) biomedical model that's transforming healthcare diagnostics! By integrating text & image analysis, it bridges language gaps and enhances medical insights. Outperforming others in both English & Arab… BiMediX2: A Groundbreaking Bilingual Bio-Medical Large Multimodal Model integrating Text and Image Analysis for Advanced Medical Diagnostics

9/20

@itinaicom

Meta AI is revolutionizing language processing with Large Concept Models (LCMs), a paradigm shift beyond token-based systems. LCMs leverage high-dimensional embedding spaces for improved coherence and efficiency. These models excel in multilingual contex… Meta AI Proposes Large Concept Models (LCMs): A Semantic Leap Beyond Token-based Language Modeling

10/20

@itinaicom

Unlock the potential of your language models! Tsinghua & CMU researchers reveal compute-optimal inference strategies that show how smaller models can outperform larger ones with the right techniques. Discover the benefits of efficiency: #AI #MachineLe… From Theory to Practice: Compute-Optimal Inference Strategies for Language Model

11/20

@itinaicom

Introducing the Self-Refining Data Flywheel (SRDF), a breakthrough in Vision-and-Language Navigation!

SRDF enhances dataset quality through an automated process that links synthetic instructions with real-time navigation. Achieved 78% accuracy, surp… This AI Paper Introduces SRDF: A Self-Refining Data Flywheel for High-Quality Vision-and-Language Navigation Datasets

12/20

@itinaicom

Explore "Beyond the Mask: A Comprehensive Study of Discrete Diffusion Models"! This research unveils masked diffusion, a simpler approach for generating discrete data. Key benefits include simplified training and improved sampling strategies. Uncover the… Beyond the Mask: A Comprehensive Study of Discrete Diffusion Models

13/20

@itinaicom

Introducing the InternLM-XComposer2.5-OmniLive (IXC2.5-OL): a groundbreaking multimodal AI framework enabling real-time streaming interactions across audio and video. Designed for efficiency, it mimics human cognition to enhance performance. Explore its … InternLM-XComposer2.5-OmniLive: A Comprehensive Multimodal AI System for Long-Term Streaming Video and Audio Interactions

14/20

@itinaicom

Cohere AI has launched Command R7B, the smallest and fastest model in its R series, designed for enterprises. With 7 billion parameters, it offers optimized performance, data privacy compliance, and low latency. Revolutionize your business with efficient… Cohere AI Releases Command R7B: The Smallest, Fastest, and Final Model in the R Series

15/20

@itinaicom

Meta AI has launched EvalGIM, a game-changing machine learning library for evaluating generative image models. This comprehensive toolkit addresses evaluation challenges, offers diverse dataset support, and integrates multiple metrics for deeper insights… Meta AI Releases EvalGIM: A Machine Learning Library for Evaluating Generative Image Models

16/20

@itinaicom

How do large language models (LLMs) store and use knowledge? A new AI paper introduces "Knowledge Circuits," offering a framework to enhance knowledge storage in transformer-based LLMs. This innovative approach can boost performance while using fewer res… How LLMs Store and Use Knowledge? This AI Paper Introduces Knowledge Circuits: A Framework for Understanding and Improving Knowledge Storage in Transformer-Based LLMs

17/20

@itinaicom

Unlock the potential of protein design with the DL4Proteins Notebook Series! Ideal for researchers, educators, and students, these Jupyter notebooks bridge deep learning and protein engineering. Explore hands-on tools like AlphaFold and ProteinMPNN today… DL4Proteins Notebook Series Bridging Machine Learning and Protein Engineering: A Practical Guide to Deep Learning Tools for Protein Design

18/20

@itinaicom

Exciting news! CloudFerro and ESA Φ-lab have launched the first global embeddings dataset for Earth observations, enhancing AI analysis of Copernicus satellite data. This advancement supports scalable applications for land monitoring and environmental… CloudFerro and ESA Φ-lab Launch the First Global Embeddings Dataset for Earth Observations

19/20

@itinaicom

Exciting news! xAI has just released Grok-2, its most advanced language model, now available for FREE on the X platform!

Grok-2 offers:

- Contextual understanding

- Personalization options

- Multimodal capabilities

Explore the future of AI today!… xAI Releases Grok-2: An Advanced Language Model Now Freely Available on X

20/20

@itinaicom

Exciting news from Alibaba Research! They've launched ProcessBench, a new AI benchmark to evaluate how well language models identify process errors in mathematical reasoning. With 3,400 meticulously annotated test cases, it aims to refine AI’s reasoni… Alibaba Qwen Researchers Introduced ProcessBench: A New AI Benchmark for Measuring the Ability to Identify Process Errors in Mathematical Reasoning

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/20

@itinaicom

Exciting news from EleutherAI! They've unveiled a groundbreaking machine learning framework that analyzes neural network training using the Jacobian matrix. This reveals how parameter initialization influences outcomes. Discover how it improves optimizat… Eleuther AI Introduces a Novel Machine Learning Framework for Analyzing Neural Network Training through the Jacobian Matrix

2/20

@itinaicom

Introducing MosAIC: a revolutionary multi-agent AI framework designed to enhance cross-cultural image captioning. By leveraging diverse cultural perspectives, MosAIC creates detailed and inclusive descriptions, overcoming the biases of existing models. J… MosAIC: A Multi-Agent AI Framework for Cross-Cultural Image Captioning

3/20

@itinaicom

Yale researchers have introduced AsyncLM, a groundbreaking AI system enabling simultaneous function calls in Large Language Models (LLMs). This innovation boosts efficiency, completing tasks up to 5.4 times faster, while reducing waiting times. Discover … Yale Researchers Propose AsyncLM: An Artificial Intelligence System for Asynchronous LLM Function Calling

4/20

@itinaicom

Exciting news from UCLA and Apple! They've introduced STIV, a scalable AI framework that revolutionizes video generation by seamlessly integrating text and images. With impressive enhancements in clarity and performance, STIV is set to redefine the lands… Researchers from UCLA and Apple Introduce STIV: A Scalable AI Framework for Text and Image Conditioned Video Generation

5/20

@itinaicom

Unlock the future of machine learning with the TIME Framework! This innovative approach to temporal model merging enhances model integration over time, encouraging continual training for optimal performance. Explore the best strategies for AI adaptati… TIME Framework: A Novel Machine Learning Unifying Framework Breaking Down Temporal Model Merging

6/20

@itinaicom

Introducing AutoReason: the AI framework revolutionizing multi-step reasoning in Large Language Models (LLMs)! By automating reasoning steps, it enhances clarity and performance across complex tasks. Perfect for health and legal fields. Explore more! … Meet AutoReason: An AI Framework for Enhancing Multi-Step Reasoning and Interpretability in Large Language Models

7/20

@itinaicom

Meta AI has unveiled the Byte Latent Transformer (BLT), a groundbreaking tokenizer-free model that processes raw byte sequences for enhanced efficiency. BLT scales better and has proven to outperform traditional models with significantly less computation… Meta AI Introduces Byte Latent Transformer (BLT): A Tokenizer-Free Model That Scales Efficiently

8/20

@itinaicom

Exciting advancements from Stanford University! Researchers introduced SMOOTHIE, a cutting-edge machine learning algorithm that optimizes language model routing without the need for labeled data. This unsupervised approach enhances efficiency and accurac… Researchers at Stanford University Propose SMOOTHIE: A Machine Learning Algorithm for Learning Label-Free Routers for Generative Tasks

9/20

@itinaicom

Exciting news! IBM has open-sourced Granite Guardian, a powerful suite for identifying risks in large language models (LLMs). With robust tools for risk detection, transparency, and a human-centric approach, it ensures responsible AI use. Discover mor… IBM Open-Sources Granite Guardian: A Suite of Safeguards for Risk Detection in LLMs

10/20

@itinaicom

Exciting news in AI! The Sequential Controlled Langevin Diffusion (SCLD) algorithm sets a new benchmark for sampling from complex probability distributions. Achieving top results with only 10% of the training budget, SCLD tackles traditional limitatio… This AI Paper Sets a New Benchmark in Sampling with the Sequential Controlled Langevin Diffusion Algorithm

11/20

@itinaicom

Transforming video generation with the innovative CausVid approach! By combining the efficiency of causal models with the quality of bidirectional techniques, CausVid sets a new standard for real-time video creation. Ideal for gaming and VR applications!… Transforming Video Diffusion Models: The CausVid Approach

12/20

@itinaicom

Introducing "Best-of-N Jailbreaking" – a cutting-edge AI method revealing vulnerabilities in large language models! This innovative approach achieves remarkable success rates in testing model defenses. Learn how it works and stay informed on AI securi… Best-of-N Jailbreaking: A Multi-Modal AI Approach to Identifying Vulnerabilities in Large Language Models

13/20

@itinaicom

Meta AI has unveiled COCONUT, revolutionizing machine reasoning with Chain of Continuous Thought. This innovative approach allows AI to think flexibly in latent space, enhancing problem-solving by exploring multiple solutions more efficiently. #AI #Machi… Meta AI Introduces COCONUT: A New Paradigm Transforming Machine Reasoning with Continuous Latent Thoughts and Advanced Planning Capabilities

14/20

@itinaicom

Unlocking the future of protein design with PLAID! This innovative AI framework co-generates sequences and all-atom structures, enhancing data diversity and structural fidelity. Discover its impact on molecular engineering and drug development. Learn mor… PLAID: A New AI Approach for Co-Generating Sequence and All-Atom Protein Structures by Sampling from the Latent Space of ESMFold

15/20

@itinaicom

Exciting news! Anthropic has launched Clio, a new AI system designed to automatically identify global trends in Claude usage while ensuring user privacy. Clio offers advanced analysis, interactive visualizations, and valuable insights to enhance AI sa… Anthropic Introduces Clio: A New AI System that Automatically Identifies Trends in Claude Usage Across the World

16/20

@itinaicom

Understanding Deep Neural Networks (DNNs) is key in AI innovation. Composed of multiple layers, DNNs learn complex patterns through interconnected nodes, powering applications from image recognition to NLP. Explore types like CNNs and RNNs to find the be… Understanding Deep Neural Network (DNN)

17/20

@itinaicom

Exciting news from PyTorch! They've launched **torchcodec**, a new library for decoding videos into PyTorch tensors. This user-friendly tool simplifies video data handling for machine learning, enhancing workflow efficiency. Say goodbye to the complex… PyTorch Introduces torchcodec: A Machine Learning Library for Decoding Videos into PyTorch Tensors

18/20

@itinaicom

Exciting news from AMD! The release of ROCm 6.3 brings an open-source platform packed with advanced tools and optimizations for AI, ML, and HPC workloads. Key features include SGLang support, FlashAttention-2, and multi-node FFT capabilities. Level up… AMD Releases AMD ROCm 6.3: An Open-Source Platform with Advanced Tools and Optimizations to Enhance AI, ML, and HPC Workloads

19/20

@itinaicom

Exciting news from Microsoft AI! Meet Phi-4, a 14 billion parameter language model that’s redefining complex reasoning with resource efficiency. With innovations like synthetic data generation and enhanced context handling, Phi-4 outperforms larger mo… Microsoft AI Introduces Phi-4: A New 14 Billion Parameter Small Language Model Specializing in Complex Reasoning

20/20

@itinaicom

Exploring the implications of AI hallucinations in business is vital. Strategies like Retrieval-Augmented Generation and human oversight can enhance the reliability of language models. Let's embrace AI while prioritizing accuracy and trust. #AI #MachineL… Hallucinating Reality. An Essay on Business Benefits of Accurate LLMs and LLM Hallucination Reduction Methods

1/19

@itinaicom

Introducing an innovative Maximum Entropy Inverse Reinforcement Learning approach to enhance sample quality in diffusion generative models! This breakthrough improves efficiency without sacrificing output quality. Discover more about DxMI and its applica… This AI Paper Introduces A Maximum Entropy Inverse Reinforcement Learning (IRL) Approach for Improving the Sample Quality of Diffusion Generative Models

2/19

@itinaicom

Exciting news! Researchers at Stanford have launched UniTox, a groundbreaking dataset of 2,418 FDA-approved drugs detailing drug-induced toxicity summaries, powered by GPT-4o. This resource can enhance safety in drug development by identifying potential … Researchers at Stanford Introduce UniTox: A Unified Dataset of 2,418 FDA-Approved Drugs with Drug-Induced Toxicity Summaries and Ratings Created by Using GPT-4o to Process FDA Drug Labels

3/19

@itinaicom

Introducing Ivy-VL: a lightweight multimodal model with only 3 billion parameters designed for edge devices!

Achieving exceptional performance on benchmarks like AI2D (81.6) and ScienceQA (97.3) while remaining resource-efficient. A game-changer for… Meet Ivy-VL: A Lightweight Multimodal Model with Only 3 Billion Parameters for Edge Devices

4/19

@itinaicom

Introducing AGORA BENCH: a groundbreaking benchmark developed by researchers from CMU, KAIST, and UW for evaluating language models as synthetic data generators. AGORA BENCH standardizes evaluations to identify the best models for various tasks, enhancin… This AI Paper from CMU, KAIST and University of Washington Introduces AGORA BENCH: A Benchmark for Systematic Evaluation of Language Models as Synthetic Data Generators

5/19

@itinaicom

Meet Maya: an 8B open-source multilingual multimodal model that prioritizes toxicity-free datasets and cultural intelligence across eight languages. With 558,000 rigorously filtered image-text pairs, Maya sets a new standard for ethical AI practices. Dis… Meet Maya: An 8B Open-Source Multilingual Multimodal Model with Toxicity-Free Datasets and Cultural Intelligence Across Eight Languages

6/19

@itinaicom

Exciting news! LG AI Research has unveiled EXAONE 3.5, a suite of three open-source bilingual AI models, optimizing instruction following and long-context understanding. With superior performance and real-world applications, these advancements redefin… LG AI Research Releases EXAONE 3.5: Three Open-Source Bilingual Frontier AI-level Models Delivering Unmatched Instruction Following and Long Context Understanding for Global Leadership in Generative AI Excellence

7/19

@itinaicom

Splunk researchers have unveiled MAG-V, a groundbreaking Multi-Agent Framework for generating synthetic data and verifying AI trajectories. This innovative system enhances accuracy and lowers costs without relying solely on LLMs. Discover the future of A… Splunk Researchers Introduce MAG-V: A Multi-Agent Framework For Synthetic Data Generation and Reliable AI Trajectory Verification

8/19

@itinaicom

ByteDance has launched Infinity, a groundbreaking autoregressive model for high-resolution image synthesis. With innovations like Bitwise Tokenization and Infinite-Vocabulary Classifier, Infinity achieves faster processing and higher quality. Discover th… ByteDance Introduces Infinity: An Autoregressive Model with Bitwise Modeling for High-Resolution Image Synthesis

9/19

@itinaicom

Artificial Neural Networks (ANNs) mimic the human brain to learn from data, identify patterns, and make informed decisions. With applications in healthcare, finance, and more, they are revolutionizing AI. Explore various types like CNNs and GANs to empow… Understanding the Artificial Neural Networks ANNs

10/19

@itinaicom

Introducing DEIM: a revolutionary AI framework designed to enhance DETR models for faster convergence and improved object detection accuracy. By leveraging Dense O2O and Matchability Aware Loss, DEIM tackles slow learning challenges head-on. Get ready fo… DEIM: A New AI Framework that Enhances DETRs for Faster Convergence and Accurate Object Detection

11/19

@itinaicom

Cerebras has launched CePO, an AI framework enhancing the Llama models with advanced reasoning and planning capabilities. This integration revolutionizes decision-making in complex environments, proving effective in logistics and healthcare. Transform yo… Cerebras Introduces CePO (Cerebras Planning and Optimization): An AI Framework that Adds Sophisticated Reasoning Capabilities to the Llama Family of Models

12/19

@itinaicom

Exciting news! Hugging Face just launched Text Generation Inference (TGI) v3.0, boasting speeds 13x faster than vLLM for long prompts. With increased token capacity and zero-configuration setup, it's perfect for developers seeking efficiency. Dive int… Hugging Face Releases Text Generation Inference (TGI) v3.0: 13x Faster than vLLM on Long Prompts

13/19

@itinaicom

Unlock the future of AI with MAmmoTH-VL-Instruct! This groundbreaking open-source dataset enhances multimodal reasoning through innovative, scalable construction methods. By utilizing 12 million entries and advanced filtering techniques, we drive state-o… MAmmoTH-VL-Instruct: Advancing Open-Source Multimodal Reasoning with Scalable Dataset Construction

14/19

@itinaicom

Exciting news! DeepSeek AI has just launched DeepSeek-V2.5-1210, with significant performance improvements across mathematics, coding, writing, and reasoning tasks. This update enhances accuracy and user experience, making it a powerful tool for resea… DeepSeek AI Just Released DeepSeek-V2.5-1210: The Updated Version of DeepSeek-V2.5 with Significant Performance Boosts in Mathematics, Coding, Writing, and Reasoning Tasks

15/19

@itinaicom

Unlocking the power of neural networks with Latent Functional Maps (LFM)! This innovative framework enhances analysis of neural representations, providing robust comparisons across models. Say goodbye to fragile methods like CKA and embrace improved s… Latent Functional Maps: A Robust Machine Learning Framework for Analyzing Neural Network Representations

16/19

@itinaicom

Do Transformers truly grasp the nuances of search? Recent studies reveal their struggles with complex graph searches, showing mixed results. While innovative training methods improve their capabilities, challenges remain as graph size increases. Key insi… Do Transformers Truly Understand Search? A Deep Dive into Their Limitations

17/19

@itinaicom

Introducing "Capability Density," a groundbreaking framework for evaluating Large Language Models (LLMs). This metric measures performance per parameter, suggesting smarter, more efficient AI. With rapid improvements in density, smaller models are set to… From Scale to Density: A New AI Framework for Evaluating Large Language Models

18/19

@itinaicom

Unlock the potential of Sequential Recommendation Systems with IDLE-Adapter! This innovative ML framework bridges ID barriers and enhances personalization by integrating with Large Language Models. Experience improved accuracy and scalability in e-commer… ID-Language Barrier: A New Machine Learning Framework for Sequential Recommendation

19/19

@itinaicom

Introducing LLM-Check: a groundbreaking tool for detecting hallucinations in Large Language Models in real-time! With single response analysis, it’s 450x faster than traditional methods—no extra training needed. Enhance your accuracy and reliability t… LLM-Check: Efficient Detection of Hallucinations in Large Language Models for Real-Time Applications

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@itinaicom

Introducing an innovative Maximum Entropy Inverse Reinforcement Learning approach to enhance sample quality in diffusion generative models! This breakthrough improves efficiency without sacrificing output quality. Discover more about DxMI and its applica… This AI Paper Introduces A Maximum Entropy Inverse Reinforcement Learning (IRL) Approach for Improving the Sample Quality of Diffusion Generative Models

2/19

@itinaicom

Exciting news! Researchers at Stanford have launched UniTox, a groundbreaking dataset of 2,418 FDA-approved drugs detailing drug-induced toxicity summaries, powered by GPT-4o. This resource can enhance safety in drug development by identifying potential … Researchers at Stanford Introduce UniTox: A Unified Dataset of 2,418 FDA-Approved Drugs with Drug-Induced Toxicity Summaries and Ratings Created by Using GPT-4o to Process FDA Drug Labels

3/19

@itinaicom

Introducing Ivy-VL: a lightweight multimodal model with only 3 billion parameters designed for edge devices!

4/19

@itinaicom

Introducing AGORA BENCH: a groundbreaking benchmark developed by researchers from CMU, KAIST, and UW for evaluating language models as synthetic data generators. AGORA BENCH standardizes evaluations to identify the best models for various tasks, enhancin… This AI Paper from CMU, KAIST and University of Washington Introduces AGORA BENCH: A Benchmark for Systematic Evaluation of Language Models as Synthetic Data Generators

5/19

@itinaicom

Meet Maya: an 8B open-source multilingual multimodal model that prioritizes toxicity-free datasets and cultural intelligence across eight languages. With 558,000 rigorously filtered image-text pairs, Maya sets a new standard for ethical AI practices. Dis… Meet Maya: An 8B Open-Source Multilingual Multimodal Model with Toxicity-Free Datasets and Cultural Intelligence Across Eight Languages

7/19

@itinaicom

Splunk researchers have unveiled MAG-V, a groundbreaking Multi-Agent Framework for generating synthetic data and verifying AI trajectories. This innovative system enhances accuracy and lowers costs without relying solely on LLMs. Discover the future of A… Splunk Researchers Introduce MAG-V: A Multi-Agent Framework For Synthetic Data Generation and Reliable AI Trajectory Verification

8/19

@itinaicom

ByteDance has launched Infinity, a groundbreaking autoregressive model for high-resolution image synthesis. With innovations like Bitwise Tokenization and Infinite-Vocabulary Classifier, Infinity achieves faster processing and higher quality. Discover th… ByteDance Introduces Infinity: An Autoregressive Model with Bitwise Modeling for High-Resolution Image Synthesis

1/20

@itinaicom

Introducing AutoReason: the AI framework revolutionizing multi-step reasoning in Large Language Models (LLMs)! By automating reasoning steps, it enhances clarity and performance across complex tasks. Perfect for health and legal fields. Explore more! … Meet AutoReason: An AI Framework for Enhancing Multi-Step Reasoning and Interpretability in Large Language Models

2/20

@itinaicom

Meta AI has unveiled the Byte Latent Transformer (BLT), a groundbreaking tokenizer-free model that processes raw byte sequences for enhanced efficiency. BLT scales better and has proven to outperform traditional models with significantly less computation… Meta AI Introduces Byte Latent Transformer (BLT): A Tokenizer-Free Model That Scales Efficiently

3/20

@itinaicom

Exciting advancements from Stanford University! Researchers introduced SMOOTHIE, a cutting-edge machine learning algorithm that optimizes language model routing without the need for labeled data. This unsupervised approach enhances efficiency and accurac… Researchers at Stanford University Propose SMOOTHIE: A Machine Learning Algorithm for Learning Label-Free Routers for Generative Tasks

4/20

@itinaicom

Exciting news! IBM has open-sourced Granite Guardian, a powerful suite for identifying risks in large language models (LLMs). With robust tools for risk detection, transparency, and a human-centric approach, it ensures responsible AI use. Discover mor… IBM Open-Sources Granite Guardian: A Suite of Safeguards for Risk Detection in LLMs

5/20

@itinaicom

Exciting news in AI! The Sequential Controlled Langevin Diffusion (SCLD) algorithm sets a new benchmark for sampling from complex probability distributions. Achieving top results with only 10% of the training budget, SCLD tackles traditional limitatio… This AI Paper Sets a New Benchmark in Sampling with the Sequential Controlled Langevin Diffusion Algorithm