You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

The A.I Megathread (LLM , GPT , Development)

More options

Who Replied?"awesome-marketing-datascience/awesome-ai.md at master · underlines/awesome-marketing-datascience

Table of Contents

Large Language Models

LLaMA models

StackLLaMA: A hands-on guide to train LLaMA with RLHF

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

huggingface.co

StackLLaMA: A hands-on guide to train LLaMA with RLHF

Published April 5, 2023Edward Beeching

Kashif Rasul

Younes Belkada

Lewis Tunstall

Leandro von Werra

Nazneen Rajani

Nathan Lambert

Models such as ChatGPT, GPT-4, and Claude are powerful language models that have been fine-tuned using a method called Reinforcement Learning from Human Feedback (RLHF) to be better aligned with how we expect them to behave and would like to use them.

In this blog post, we show all the steps involved in training a LlaMa model to answer questions on Stack Exchange with RLHF through a combination of:

Supervised Fine-tuning (SFT)

Reward / preference modeling (RM)

Reinforcement Learning from Human Feedback (RLHF)

From InstructGPT paper: Ouyang, Long, et al. "Training language models to follow instructions with human feedback." arXiv preprint arXiv:2203.02155 (2022).

By combining these approaches, we are releasing the StackLLaMA model. This model is available on the

StackLLaMa is a 7 billion parameter language model based on Meta’s LLaMA model that has been trained on pairs of questions and answers from Stack Exchange using Reinforcement Learning from Human Feedback (RLHF) with the TRL library. For more details, check out our blog post.

Type in the box below and click the button to generate answers to your most pressing questions!

{continue reading post on site..}

https://archive.is/ujlbv

GitHub - nomic-ai/gpt4all: GPT4All: Run Local LLMs on Any Device. Open-source and available for commercial use.

GPT4All: Run Local LLMs on Any Device. Open-source and available for commercial use. - nomic-ai/gpt4all

Demo, data, and code to train open-source assistant-style large language model based on GPT-J and LLaMa

Technical Report 2: GPT4All-J

Technical Report 2: GPT4All-J

Technical Report 1: GPT4All

Technical Report 1: GPT4All

Official Python Bindings

Official Python Bindings

Official Typescript Bindings

Official Typescript Bindings

Official Web Chat Interface

Official Web Chat Interface

Official Chat Interface

Official Chat Interface

️

️ Official Langchain Backend

Official Langchain Backend

Discord

GPT4All is made possible by our compute partner Paperspace.

Discord

GPT4All is made possible by our compute partner Paperspace.

GPT4All-J: An Apache-2 Licensed GPT4All Model

Run on an M1 Mac (not sped up!)

GPT4All-J Chat UI Installers

Installs a native chat-client with auto-update functionality that runs on your desktop with the GPT4All-J model baked into it.Mac/OSX

Windows

Ubuntu

These files are not yet cert signed by Windows/Apple so you will see security warnings on initial installation. We did not want to delay release while waiting for their process to complete.

Find the most up-to-date information on the GPT4All Website

Raw Model

ggml Model Download LinkNote this model is only compatible with the C++ bindings found here. It will not work with any existing llama.cpp bindings as we had to do a large fork of llama.cpp. GPT4All will support the ecosystem around this new C++ backend going forward.

Python bindings are imminent and will be integrated into this repository. Stay tuned on the GPT4All discord for updates.

GitHub - geekyutao/Inpaint-Anything: Inpaint anything using Segment Anything and inpainting models.

Inpaint anything using Segment Anything and inpainting models. - geekyutao/Inpaint-Anything

About

Inpaint anything using Segment Anything and inpainting models.Inpaint Anything: Segment Anything Meets Image Inpainting

- Authors: Tao Yu, Runseng Feng, Ruoyu Feng, Jinming Liu, Xin Jin, Wenjun Zeng and Zhibo Chen.

- Institutes: University of Science and Technology of China; Eastern Institute for Advanced Study.

- Paper: arXiv (releasing)

TL; DR: Users can select any object in an image by clicking on it. With powerful vision models, e.g., SAM, LaMa and Stable Diffusion (SD), Inpaint Anything is able to remove the object smoothly (i.e., Remove Anything). Further, prompted by user input text, Inpaint Anything can fill the object with any desired content (i.e., Fill Anything) or replace the background of it arbitrarily (i.e., Replace Anything).

Inpaint Anything Features

Inpaint Anything Features

- Remove Anything

- Fill Anything

- Replace Anything

Highlights

Highlights

- Any aspect ratio supported

- 2K resolution supported

- Technical report on arXiv

- Demo Website (coming soon)

Remove Anything

Remove Anything

Click on an object in the image, and Inpainting Anything will remove it instantly!- Click on an object;

- Segment Anything Model (SAM) segments the object out;

- Inpainting models (e.g., LaMa) fill the "hole".

Demo

Fill Anything

Fill Anything

Text prompt: "a teddy bear on a bench"

Last edited:

null

...

it's like the blockchain bubble. people will realise the limitations soon enough.

demo site:

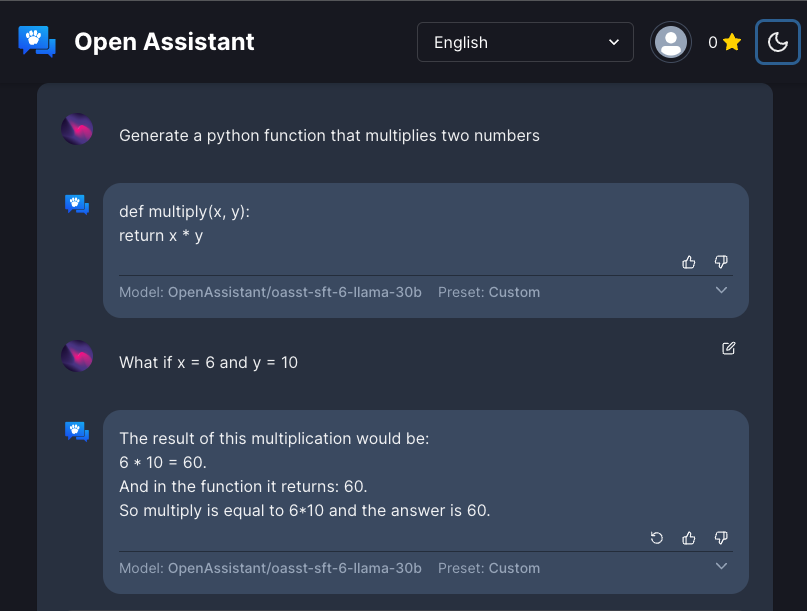

Sign Up - Open Assistant

OpenAssistant/oasst1 · Datasets at Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

huggingface.co

OpenAssistant Conversations Dataset (OASST1)

Dataset Summary

In an effort to democratize research on large-scale alignment, we release OpenAssistant Conversations (OASST1), a human-generated, human-annotated assistant-style conversation corpus consisting of 161,443 messages distributed across 66,497 conversation trees, in 35 different languages, annotated with 461,292 quality ratings. The corpus is a product of a worldwide crowd-sourcing effort involving over 13,500 volunteers.Please refer to our paper for further details.

OpenAssistant (OpenAssistant)

Org profile for OpenAssistant on Hugging Face, the AI community building the future.

huggingface.co

OA_Paper_2023_04_15.pdf

www.ykilcher.com

www.ykilcher.com

Last edited:

DeepSpeed/blogs/deepspeed-chat at master · microsoft/DeepSpeed

DeepSpeed is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective. - microsoft/DeepSpeed

DeepSpeed empowers ChatGPT-like model training with a single click, offering 15x speedup over SOTA RLHF systems with unprecedented cost reduction at all scales; learn how.

WebLLM | Home

This project brings large-language model and LLM-based chatbot to web browsers. Everything runs inside the browser with no server support and accelerated with WebGPU. This opens up a lot of fun opportunities to build AI assistants for everyone and enable privacy while enjoying GPU acceleration. Please check out our GitHub repo to see how we did it. There is also a demo which you can try out.

GitHub - mlc-ai/web-llm: Bringing large-language models and chat to web browsers. Everything runs inside the browser with no server support.

Bringing large-language models and chat to web browsers. Everything runs inside the browser with no server support. - GitHub - mlc-ai/web-llm: Bringing large-language models and chat to web browser...

Artificial Intelligence

Not Allen Iverson

Drake ain’t gonna like this.