The AI unicorn is making damn sure that most leading U.S. magazine and text-based news publishers are signed to content licensing agreements with it.

venturebeat.com

OpenAI will bring Cosmopolitan publisher Hearst’s content to ChatGPT

Carl Franzen @carlfranzen

October 8, 2024 12:31 PM

Credit: VentureBeat, made with Midjourney


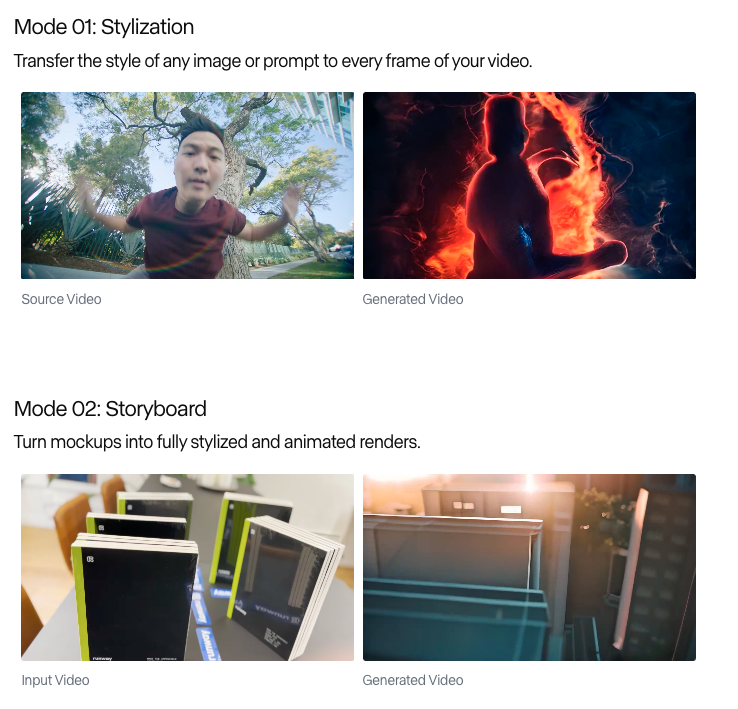

Is the future of written media — and potentially imagery and videos, too — going to be primarily surfaced to us through ChatGPT?

It’s not out of the question at the rate OpenAI is going. At the very least, the AI unicorn, valued at $157 billion and fresh off the launch of its new Canvas feature for ChatGPT and a record-setting $6.6 billion fundraising round, is making damn well sure that most of the leading U.S. magazine and text-based news publishers have entered into content licensing agreements with it. These agreements enable OpenAI to train on, or at least serve up, vast archives of previously published articles, photos, videos and other journalistic and editorial material through ChatGPT, SearchGPT and other AI products, potentially as truncated summaries.

The latest major American media firm to join with OpenAI is Hearst, the company named for its founder, the “yellow journalism” pioneer William Randolph Hearst, who helped beat the drum for U.S. entry into the Spanish-American War, demonized marijuana and was memorably fictionalized as Citizen Kane’s Charles Foster Kane. Today the company is perhaps best known as the publisher of Cosmopolitan, the sex and lifestyle magazine aimed at young women, as well as Esquire, Elle, Car and Driver, Country Living, Good Housekeeping, Popular Mechanics and many more.

In total, Hearst operates 25 brands in the U.S., 175 websites and more than 200 magazine editions worldwide, according to its media page. However, OpenAI will specifically surface “curated content” from more than 20 magazine brands and over 40 newspapers, including well-known titles such as Cosmopolitan, Esquire, the Houston Chronicle, the San Francisco Chronicle, Elle and Women’s Health. According to the companies, the content will be clearly attributed, with citations and direct links to Hearst’s original sources to ensure transparency.

“Hearst’s other businesses outside of magazines and newspapers are not included in this partnership,” reads a release jointly published on Hearst’s and OpenAI’s websites.

It’s unclear whether OpenAI will train its models specifically on Hearst content or merely pipe that content through to end users of ChatGPT and other products. I’ve reached out to an OpenAI spokesperson for clarity and will update when I hear back.

Hearst now joins the long and growing list of media publishers that have struck content licensing deals with OpenAI.

These partnerships represent OpenAI’s broader ambition to collaborate with established media brands and elevate the quality of content provided through its AI systems.

With Hearst on board, OpenAI continues to expand its network of trusted content providers, aiming to ensure that users of AI products such as ChatGPT have access to reliable information across a wide range of topics.

What the executives are saying it means

Jeff Johnson, President of Hearst Newspapers, emphasized the critical role that professional journalism plays in the evolution of AI. “As generative AI matures, it’s critical that journalism created by professional journalists be at the heart of all AI products,” he said, underscoring the importance of integrating trustworthy, curated content into these platforms.

Debi Chirichella, President of Hearst Magazines, echoed this sentiment, noting that the partnership allows Hearst to help shape the future of magazine content while preserving the credibility and high standards of the company’s journalism.

These deals signal a growing trend of cooperation between tech companies and traditional publishers as both industries adapt to the changes brought about by advances in AI.

While OpenAI’s partnerships offer media companies access to cutting-edge technology and the opportunity to reach larger audiences, they also raise questions about the long-term impact on the future of publishing.

Fears of OpenAI swallowing U.S. journalism and editorial print media whole?

Some critics argue that licensing content to AI platforms could ultimately create competition for publishers, as AI systems improve and become more capable of generating content that rivals traditional journalism.

As a journalist whose work was undoubtedly scraped and used to train many AI models (and used for plenty of other purposes over which I had no control or say), I have voiced my own hesitation about media publishers moving so quickly to ink deals with OpenAI.

These concerns have been amplified by recent legal actions, such as the lawsuit The New York Times filed against OpenAI and Microsoft alleging copyright infringement in the development of AI models. The case is still in court, and the NYT is one of a dwindling number of holdouts that have yet to settle with OpenAI or strike a deal to license its content.

Despite these concerns, publishers like Hearst, Condé Nast, and Vox Media are actively embracing AI as a means of staying competitive in an increasingly digital landscape.

As Chirichella pointed out, Hearst’s partnership with OpenAI is not only about delivering its high-quality content to a new audience but also about preserving the cultural and historical context that defines its publications. This collaboration, she said, “ensures that our high-quality writing and expertise, cultural and historical context and attribution and credibility are promoted as OpenAI’s products evolve.”

For OpenAI, these partnerships with major media brands enhance its ability to deliver reliable, engaging content to its users, aligning with the company’s stated goal of building AI products that provide trustworthy and relevant information.

As Brad Lightcap, COO of OpenAI, explained, bringing Hearst’s content into ChatGPT elevates the platform’s value to users, particularly as AI becomes an increasingly common tool for consuming and interacting with news and information.