You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

The A.I Megathread (LLM , GPT , Development)

More options

Who Replied?GitHub - diegovelilla/AutoREADME: AutoREADME is an AI-powered tool that genereates a README file for any given input repository.

AutoREADME is an AI-powered tool that genereates a README file for any given input repository. - diegovelilla/AutoREADME

About

AutoREADME is an AI-powered tool that genereates a README file for any given input repository.

1/2

Today we’re premiering Meta Movie Gen: the most advanced media foundation models to-date.

Today we’re premiering Meta Movie Gen: the most advanced media foundation models to-date.

Developed by AI research teams at Meta, Movie Gen delivers state-of-the-art results across a range of capabilities. We’re excited for the potential of this line of research to usher in entirely new possibilities for casual creators and creative professionals alike.

More details and examples of what Movie Gen can do Meta Movie Gen - the most advanced media foundation AI models

Meta Movie Gen - the most advanced media foundation AI models

Movie Gen models and capabilities

Movie Gen models and capabilities

Movie Gen Video: 30B parameter transformer model that can generate high-quality and high-definition images and videos from a single text prompt.

Movie Gen Audio: A 13B parameter transformer model that can take a video input along with optional text prompts for controllability to generate high-fidelity audio synced to the video. It can generate ambient sound, instrumental background music and foley sound — delivering state-of-the-art results in audio quality, video-to-audio alignment and text-to-audio alignment.

Precise video editing: Using a generated or existing video and accompanying text instructions as an input it can perform localized edits such as adding, removing or replacing elements — or global changes like background or style changes.

Personalized videos: Using an image of a person and a text prompt, the model can generate a video with state-of-the-art results on character preservation and natural movement in video.

We’re continuing to work closely with creative professionals from across the field to integrate their feedback as we work towards a potential release. We look forward to sharing more on this work and the creative possibilities it will enable in the future.

2/2

As part of our continued belief in open science and progressing the state-of-the-art in media generation, we’ve published more details on Movie Gen in a new research paper for the academic community https://go.fb.me/toz71j

https://go.fb.me/toz71j

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Developed by AI research teams at Meta, Movie Gen delivers state-of-the-art results across a range of capabilities. We’re excited for the potential of this line of research to usher in entirely new possibilities for casual creators and creative professionals alike.

More details and examples of what Movie Gen can do

Movie Gen Video: 30B parameter transformer model that can generate high-quality and high-definition images and videos from a single text prompt.

Movie Gen Audio: A 13B parameter transformer model that can take a video input along with optional text prompts for controllability to generate high-fidelity audio synced to the video. It can generate ambient sound, instrumental background music and foley sound — delivering state-of-the-art results in audio quality, video-to-audio alignment and text-to-audio alignment.

Precise video editing: Using a generated or existing video and accompanying text instructions as an input it can perform localized edits such as adding, removing or replacing elements — or global changes like background or style changes.

Personalized videos: Using an image of a person and a text prompt, the model can generate a video with state-of-the-art results on character preservation and natural movement in video.

We’re continuing to work closely with creative professionals from across the field to integrate their feedback as we work towards a potential release. We look forward to sharing more on this work and the creative possibilities it will enable in the future.

2/2

As part of our continued belief in open science and progressing the state-of-the-art in media generation, we’ve published more details on Movie Gen in a new research paper for the academic community

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/2

More examples of what Meta Movie Gen can do across video generation, precise video editing, personalized video generation and audio generation.

2/2

We’ve shared more details on the models and Movie Gen capabilities in a new blog post How Meta Movie Gen could usher in a new AI-enabled era for content creators

How Meta Movie Gen could usher in a new AI-enabled era for content creators

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

More examples of what Meta Movie Gen can do across video generation, precise video editing, personalized video generation and audio generation.

2/2

We’ve shared more details on the models and Movie Gen capabilities in a new blog post

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

https://reddit.com/link/1fvyvr9/video/v6ozewbtoqsd1/player

Generate videos from text Edit video with text

Produce personalized videos

Create sound effects and soundtracks

Paper: MovieGen: A Cast of Media Foundation Models

https://ai.meta.com/static-resource/movie-gen-research-paper

Source: AI at Meta on X:

1/40

@aisolopreneur

Yesterday, a Chinese company released an alarmingly advanced text-to-video AI:

Minimax.

It's like a Hollywood studio in your pocket.

10 mind-bending examples (plus how to access it for free):

2/40

@aisolopreneur

2.

[Quoted tweet]

Minimax is a new Chinese text-to-video model. It is available to try hailuoai.com/video

I've been experimenting with a couple of descriptive prompts showing human motion with AI and so far I'm impressed. It isn't perfect but the hand movement is the most natural I've seen.

3/40

@aisolopreneur

3.

4/40

@aisolopreneur

4.

[Quoted tweet]

Another Chinese 'Sora': A new AI video tool launched today by Minimax, backed by major investors Alibaba Group and Tencent.

Check out their official AI film Magic Coin , created entirely with text-to-video .

, created entirely with text-to-video .

Try it for free now: hailuoai.com/video

Try it for free now: hailuoai.com/video

5/40

@aisolopreneur

5.

[Quoted tweet]

MiniMAX New Chinese Free AI Generator - WOW

Free to use for now and only text to video! (Most phone number accepted)

Music: @udiomusic

6/40

@aisolopreneur

6.

[Quoted tweet]

6. Video in pixel style

7/40

@aisolopreneur

7.

[Quoted tweet]

4.

two giant mech robots fighting in the middle of the ocean

8/40

@aisolopreneur

8.

[Quoted tweet]

I got to try the new Chinese text to image model by MiniMax. It generates 5 second videos in HD, and the fidelity looks really good. I included the link below if you want to try. It's free, for now!

Thanks for sharing @JunieLauX !

9/40

@aisolopreneur

9.

[Quoted tweet]

前几天 Minimax 发布了他们的视频模型 abab-video-1,我还做了个小测试。

今天补了一下针对性的详细测试,发现确实非常牛批,模型综合实力是全世界最好的之一。

先说结论:

- 画质表现是现在所有视频模型最强的;

- 人物面部细节和情绪的表现是现在所有模型最好的;

- 提示词理解、运动幅度、稳定性都在第一档;

- 美学表现略差于 Luma,好于 Ruwnay;

- 物理正确性跟 Runway 差不多,好于 Luma;

真的,你们可以看一下最后一段测试视频,太牛了。

原来的提示词让一个女性的表情从喜悦到恐惧在到无奈,Minimax 完美实现了提示词描述的内容。Luma 人物直接崩了,Runway调教过于生硬开始硬切画面。

画质分部分他的视频清晰度和纹理都非常清晰和稳定,没有出现常见的模糊和网格问题。

提示词理解也很强,比如豹子武僧的部分,Luma 画出来了但是画面完全不动,runway 直接画了个人出来,只有 minimax 完全理解了提示词并且运动幅度正常。

所有的提示词都是 AI 生成,没有使用常见视频模型的演示提示词。所有测试都只跑一次。

10/40

@aisolopreneur

10.

11/40

@aisolopreneur

Repost if you found this thread valuable.

Follow me @aisolopreneur for more on how to leverage & profit from AI.

[Quoted tweet]

Yesterday, a Chinese company released an alarmingly advanced text-to-video AI:

Minimax.

It's like a Hollywood studio in your pocket.

10 mind-bending examples (plus how to access it for free):

12/40

@SocDoneLeft

this lightsaber battle is incredibly gay

please make more Frot Wars content, thank you

13/40

@MaxRovensky

Nearly every visual effects artist working today started their career because they wanted to do a lightsaber effect

All of them will tell you in detail how bad these are

14/40

@blackdolphin0

@TweetHelperBot download this

15/40

@Yaz2022742

@TweetHelperBot download this

16/40

@Anni_malik33

This is next-level! Can't believe we're already at text-to-video AI. Excited to see what creative possibilities this unlocks!

"

"

17/40

@imsandraroses

I use those things in my bed but for other things

18/40

@sara69baddie

The Light Sabers need some more work

19/40

@haribhajnmeena

What is this

20/40

@MethadosA

YOU WANNA SWORD FIGHT MAN!?

YOUWANNASWORDFIGHTMANG!?!

21/40

@haribhajnmeena

That's too bad what's in his hands it looks like he's fighting with a sword of light

22/40

@Apikameena

Is this docking

23/40

@saoulidisg

Is this docking?

24/40

@BenjaminDEKR

Uhhhh i'm sorry but this isn't good.

25/40

@shawnchauhan1

Thanks for the recommendation

26/40

@BlakeC

Schwartz

27/40

@iamtexture

They’re “sword fighting”

28/40

@DannysWeb3

Looks like they’re trying to tickle each other w their light sabers

29/40

@CMRidinDerby

Sword fight

30/40

@PDXFato

Not better than Runway

31/40

@AndImOkayWithIt

Mini max lol

@realmadmaxx

32/40

@EisenhartJoshua

I think if any american company used the data this chinese company used to train these, they would be sued within a week, if not the same day.

33/40

@jakebrowne95

Interesting, going to have to try it out.

Are there any other chinese text-to-video models you recommend?

34/40

@shiridesu

are they dikkfighting?

35/40

@paliaskepsi

Better than the last couple Disney efforts.

36/40

@Brongis9163

What’s those sword made of?

laser or gum??

laser or gum??  LOOOOL

LOOOOL

37/40

@michael_kove

Are they fighting or crossing their "swords" in a weird fetish fantasy a niche audience of nerds might have?

38/40

@kakigaijin

if you play with it too long...

39/40

@bitsinabyte

40/40

@IanFenwickTX

Bruh what they doin’ with their lightsabers???

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@aisolopreneur

Yesterday, a Chinese company released an alarmingly advanced text-to-video AI:

Minimax.

It's like a Hollywood studio in your pocket.

10 mind-bending examples (plus how to access it for free):

2/40

@aisolopreneur

2.

[Quoted tweet]

Minimax is a new Chinese text-to-video model. It is available to try hailuoai.com/video

I've been experimenting with a couple of descriptive prompts showing human motion with AI and so far I'm impressed. It isn't perfect but the hand movement is the most natural I've seen.

3/40

@aisolopreneur

3.

4/40

@aisolopreneur

4.

[Quoted tweet]

Another Chinese 'Sora': A new AI video tool launched today by Minimax, backed by major investors Alibaba Group and Tencent.

Check out their official AI film Magic Coin

5/40

@aisolopreneur

5.

[Quoted tweet]

MiniMAX New Chinese Free AI Generator - WOW

Free to use for now and only text to video! (Most phone number accepted)

Music: @udiomusic

6/40

@aisolopreneur

6.

[Quoted tweet]

6. Video in pixel style

7/40

@aisolopreneur

7.

[Quoted tweet]

4.

two giant mech robots fighting in the middle of the ocean

8/40

@aisolopreneur

8.

[Quoted tweet]

I got to try the new Chinese text to image model by MiniMax. It generates 5 second videos in HD, and the fidelity looks really good. I included the link below if you want to try. It's free, for now!

Thanks for sharing @JunieLauX !

9/40

@aisolopreneur

9.

[Quoted tweet]

前几天 Minimax 发布了他们的视频模型 abab-video-1,我还做了个小测试。

今天补了一下针对性的详细测试,发现确实非常牛批,模型综合实力是全世界最好的之一。

先说结论:

- 画质表现是现在所有视频模型最强的;

- 人物面部细节和情绪的表现是现在所有模型最好的;

- 提示词理解、运动幅度、稳定性都在第一档;

- 美学表现略差于 Luma,好于 Ruwnay;

- 物理正确性跟 Runway 差不多,好于 Luma;

真的,你们可以看一下最后一段测试视频,太牛了。

原来的提示词让一个女性的表情从喜悦到恐惧在到无奈,Minimax 完美实现了提示词描述的内容。Luma 人物直接崩了,Runway调教过于生硬开始硬切画面。

画质分部分他的视频清晰度和纹理都非常清晰和稳定,没有出现常见的模糊和网格问题。

提示词理解也很强,比如豹子武僧的部分,Luma 画出来了但是画面完全不动,runway 直接画了个人出来,只有 minimax 完全理解了提示词并且运动幅度正常。

所有的提示词都是 AI 生成,没有使用常见视频模型的演示提示词。所有测试都只跑一次。

10/40

@aisolopreneur

10.

11/40

@aisolopreneur

Repost if you found this thread valuable.

Follow me @aisolopreneur for more on how to leverage & profit from AI.

[Quoted tweet]

Yesterday, a Chinese company released an alarmingly advanced text-to-video AI:

Minimax.

It's like a Hollywood studio in your pocket.

10 mind-bending examples (plus how to access it for free):

12/40

@SocDoneLeft

this lightsaber battle is incredibly gay

please make more Frot Wars content, thank you

13/40

@MaxRovensky

Nearly every visual effects artist working today started their career because they wanted to do a lightsaber effect

All of them will tell you in detail how bad these are

14/40

@blackdolphin0

@TweetHelperBot download this

15/40

@Yaz2022742

@TweetHelperBot download this

16/40

@Anni_malik33

This is next-level! Can't believe we're already at text-to-video AI. Excited to see what creative possibilities this unlocks!

17/40

@imsandraroses

I use those things in my bed but for other things

18/40

@sara69baddie

The Light Sabers need some more work

19/40

@haribhajnmeena

What is this

20/40

@MethadosA

YOU WANNA SWORD FIGHT MAN!?

YOUWANNASWORDFIGHTMANG!?!

21/40

@haribhajnmeena

That's too bad what's in his hands it looks like he's fighting with a sword of light

22/40

@Apikameena

Is this docking

23/40

@saoulidisg

Is this docking?

24/40

@BenjaminDEKR

Uhhhh i'm sorry but this isn't good.

25/40

@shawnchauhan1

Thanks for the recommendation

26/40

@BlakeC

Schwartz

27/40

@iamtexture

They’re “sword fighting”

28/40

@DannysWeb3

Looks like they’re trying to tickle each other w their light sabers

29/40

@CMRidinDerby

Sword fight

30/40

@PDXFato

Not better than Runway

31/40

@AndImOkayWithIt

Mini max lol

@realmadmaxx

32/40

@EisenhartJoshua

I think if any american company used the data this chinese company used to train these, they would be sued within a week, if not the same day.

33/40

@jakebrowne95

Interesting, going to have to try it out.

Are there any other chinese text-to-video models you recommend?

34/40

@shiridesu

are they dikkfighting?

35/40

@paliaskepsi

Better than the last couple Disney efforts.

36/40

@Brongis9163

What’s those sword made of?

37/40

@michael_kove

Are they fighting or crossing their "swords" in a weird fetish fantasy a niche audience of nerds might have?

38/40

@kakigaijin

if you play with it too long...

39/40

@bitsinabyte

40/40

@IanFenwickTX

Bruh what they doin’ with their lightsabers???

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Apple releases Depth Pro, an AI model that rewrites the rules of 3D vision

Apple's Depth Pro AI model sets a new standard in 3D depth estimation, offering high-resolution, real-time depth mapping from a single image without camera metadata—transforming industries like AR, autonomous vehicles, and more.

Apple releases Depth Pro, an AI model that rewrites the rules of 3D vision

Michael Nuñez@MichaelFNunez

October 4, 2024 11:52 AM

Credit: VentureBeat made with Midjourney

Apple’s AI research team has developed a new model that could significantly advance how machines perceive depth, potentially transforming industries ranging from augmented reality to autonomous vehicles.

The system, called Depth Pro, is able to generate detailed 3D depth maps from single 2D images in a fraction of a second—without relying on the camera data traditionally needed to make such predictions.

The technology, detailed in a research paper titled “Depth Pro: Sharp Monocular Metric Depth in Less Than a Second,” is a major leap forward in the field of monocular depth estimation, a process that uses just one image to infer depth.

This could have far-reaching applications across sectors where real-time spatial awareness is key. The model’s creators, led by Aleksei Bochkovskii and Vladlen Koltun, describe Depth Pro as one of the fastest and most accurate systems of its kind.

A comparison of depth maps from Apple’s Depth Pro, Marigold, Depth Anything v2, and Metric3D v2. Depth Pro excels in capturing fine details like fur and birdcage wires, producing sharp, high-resolution depth maps in just 0.3 seconds, outperforming other models in accuracy and detail. (credit: arxiv.org)

Speed and precision, without the metadata

Monocular depth estimation has long been a challenging task, requiring either multiple images or metadata like focal lengths to accurately gauge depth.

But Depth Pro bypasses these requirements, producing high-resolution depth maps in just 0.3 seconds on a standard GPU. The model can create 2.25-megapixel maps with exceptional sharpness, capturing even minute details like hair and vegetation that are often overlooked by other methods.

“These characteristics are enabled by a number of technical contributions, including an efficient multi-scale vision transformer for dense prediction,” the researchers explain in their paper. This architecture allows the model to process both the overall context of an image and its finer details simultaneously—an enormous leap from slower, less precise models that came before it.

A comparison of depth maps from Apple’s Depth Pro, Depth Anything v2, Marigold, and Metric3D v2. Depth Pro excels in capturing fine details like the deer’s fur, windmill blades, and zebra’s stripes, delivering sharp, high-resolution depth maps in 0.3 seconds. (credit: arxiv.org)

Metric depth, zero-shot learning

What truly sets Depth Pro apart is its ability to estimate both relative and absolute depth, a capability called “metric depth.”

This means that the model can provide real-world measurements, which is essential for applications like augmented reality (AR), where virtual objects need to be placed in precise locations within physical spaces.

And Depth Pro doesn’t require extensive training on domain-specific datasets to make accurate predictions—a feature known as “zero-shot learning.” This makes the model highly versatile. It can be applied to a wide range of images, without the need for the camera-specific data usually required in depth estimation models.

“Depth Pro produces metric depth maps with absolute scale on arbitrary images ‘in the wild’ without requiring metadata such as camera intrinsics,” the authors explain. This flexibility opens up a world of possibilities, from enhancing AR experiences to improving autonomous vehicles’ ability to detect and navigate obstacles.

For those curious to experience Depth Pro firsthand, a live demo is available on the Hugging Face platform.

A comparison of depth estimation models across multiple datasets. Apple’s Depth Pro ranks highest overall with an average rank of 2.5, outperforming models like Depth Anything v2 and Metric3D in accuracy across diverse scenarios. (credit: arxiv.org)

Real-world applications: From e-commerce to autonomous vehicles

This versatility has significant implications for various industries. In e-commerce, for example, Depth Pro could allow consumers to see how furniture fits in their home by simply pointing their phone’s camera at the room. In the automotive industry, the ability to generate real-time, high-resolution depth maps from a single camera could improve how self-driving cars perceive their environment, boosting navigation and safety.

“The method should ideally produce metric depth maps in this zero-shot regime to accurately reproduce object shapes, scene layouts, and absolute scales,” the researchers write, emphasizing the model’s potential to reduce the time and cost associated with training more conventional AI models.

Tackling the challenges of depth estimation

One of the toughest challenges in depth estimation is handling what are known as “flying pixels”—pixels that appear to float in mid-air due to errors in depth mapping. Depth Pro tackles this issue head-on, making it particularly effective for applications like 3D reconstruction and virtual environments, where accuracy is paramount.

Additionally, Depth Pro excels in boundary tracing, outperforming previous models in sharply delineating objects and their edges. The researchers claim it surpasses other systems “by a multiplicative factor in boundary accuracy,” which is key for applications that require precise object segmentation, such as image matting and medical imaging.

Open-source and ready to scale

In a move that could accelerate its adoption, Apple has made Depth Pro open-source. The code, along with pre-trained model weights, is available on GitHub, allowing developers and researchers to experiment with and further refine the technology. The repository includes everything from the model’s architecture to pretrained checkpoints, making it easy for others to build on Apple’s work.

The research team is also encouraging further exploration of Depth Pro’s potential in fields like robotics, manufacturing, and healthcare. “We release code and weights at GitHub - apple/ml-depth-pro: Depth Pro: Sharp Monocular Metric Depth in Less Than a Second.,” the authors write, signaling this as just the beginning for the model.

What’s next for AI depth perception

As artificial intelligence continues to push the boundaries of what’s possible, Depth Pro sets a new standard in speed and accuracy for monocular depth estimation. Its ability to generate high-quality, real-time depth maps from a single image could have wide-ranging effects across industries that rely on spatial awareness.

In a world where AI is increasingly central to decision-making and product development, Depth Pro exemplifies how cutting-edge research can translate into practical, real-world solutions. Whether it’s improving how machines perceive their surroundings or enhancing consumer experiences, the potential uses for Depth Pro are broad and varied.

As the researchers conclude, “Depth Pro dramatically outperforms all prior work in sharp delineation of object boundaries, including fine structures such as hair, fur, and vegetation.” With its open-source release, Depth Pro could soon become integral to industries ranging from autonomous driving to augmented reality—transforming how machines and people interact with 3D environments.

1/11

@tost_ai

Depth Pro with Depth Flow now on @tost_ai, @runpod_io and @ComfyUI

Depth Pro with Depth Flow now on @tost_ai, @runpod_io and @ComfyUI

Thanks to Depth Pro Team ❤ and Depth Flow Team ❤

🗺comfyui: depth-flow-tost/depth_flow_tost.json at main · camenduru/depth-flow-tost

runpod: GitHub - camenduru/depth-flow-tost

runpod: GitHub - camenduru/depth-flow-tost

tost: please try it

tost: please try it  Tost AI

Tost AI

2/11

@ProTipsKe

/search?q=#Apple Releases Depth Pro /search?q=#AI

"...Depth Pro, is able to generate detailed 3D depth maps from single 2D images in a fraction of a second—without relying on the camera data traditionally needed to make such predictions..."

Apple releases Depth Pro, an AI model that rewrites the rules of 3D vision

3/11

@_akhaliq

Web UI for Apple Depth-Pro

Metric depth estimation determines real-world distances to objects in a scene from images. This repo provides a web UI that allows users to estimate metric depth and visualize depth maps by easily uploading images using the Depth Pro model through a simple Gradio UI interface.

4/11

@bycloudai

whatttt

there are like 5 research papers from Apple this week

LLMs Know More Than They Show [2410.02707] LLMs Know More Than They Show: On the Intrinsic Representation of LLM Hallucinations (affiliated only?)

Depth Pro [2410.02073] Depth Pro: Sharp Monocular Metric Depth in Less Than a Second

MM1.5 [2409.20566] MM1.5: Methods, Analysis & Insights from Multimodal LLM Fine-tuning

Contrastive Localized Language-Image Pre-Training [2410.02746] Contrastive Localized Language-Image Pre-Training

Revisit Large-Scale Image-Caption Data in Pre-training Multimodal Foundation Models [2410.02740] Revisit Large-Scale Image-Caption Data in Pre-training Multimodal Foundation Models

[Quoted tweet]

This week’s top AI/ML research papers:

This week’s top AI/ML research papers:

- MovieGen

- Were RNNs All We Needed?

- Contextual Document Embeddings

- RLEF

- ENTP

- VinePPO

- When a language model is optimized for reasoning, does it still show embers of autoregression? An analysis of OpenAI o1

- LLMs Know More Than They Show

- Video Instruction Tuning With Synthetic Data

- PHI-S

- Thermodynamic Bayesian Inference

- Emu3: Next-Token Prediction is All You Need

- Lattice-Valued Bottleneck Duality

- Loong

- Archon

- Direct Judgement Preference Optimization

- Depth Pro

- MIO: A Foundation Model on Multimodal Tokens

- MM1.5

- PhysGen

- Cottention

- UniAff

- Hyper-Connections

- Image Copy Detection for Diffusion Models

- RATIONALYST

- From Code to Correctness

- Not All LLM Reasoners Are Created Equal

- VPTQ: Extreme Low-bit Vector Post-Training Quantization for LLMs

- Leopard: A VLM For Text-Rich Multi-Image Tasks

- Selective Aggregation for LoRA in Federated Learning

- Quantifying Generalization Complexity for Large Language Models

- FactAlign: Long-form Factuality Alignment of LLMs

- Is Preference Alignment Always the Best Option to Enhance LLM-Based Translation?

- Law of the Weakest Link: Cross Capabilities of Large Language Models

- TPI-LLM: Serving 70B-scale LLMs Efficiently on Low-resource Edge Devices

- One Token to Seg Them All: Language Instructed Reasoning Segmentation in Videos

- Looped Transformers for Length Generalization

- Illustrious

- LLaVA-Critic

- Contrastive Localized Language-Image Pre-Training

- Large Language Models as Markov Chains

- CLIP-MoE

- SageAttention

- Training Language Models on Synthetic Edit Sequences Improves Code Synthesis

- Revisit Large-Scale Image-Caption Data in Pre-training Multimodal Foundation Models

- EVER

- The bunkbed conjecture is false

overview for each + authors' explanations

read this in thread mode for the best experience

5/11

@jonstephens85

I am very impressed with Apple's new code release of Depth Pro: Sharp Monocular Metric Depth in Less Than a Second. It's FAST! And it picked up on some incredible depth detail on my super fluffy cat. I show the whole process end to end.

6/11

@aisearchio

Apple has released Depth Pro, an open-source AI model that creates detailed 3D depth maps from single images.

Depth Pro - a Hugging Face Space by akhaliq

7/11

@chrisoffner3d

Testing temporal coherence of the new monocular depth estimator Depth Pro: Sharp Monocular Metric Depth in Less Than a Second with my own videos. The admittedly challenging and low-detail background seems quite unstable.

8/11

@aigclink

苹果刚刚开源了一个深度学习项目:Depth Pro,不到一秒即可生成非常清晰和详细的深度图

可用于各种需要理解图像深度的应用,利好自动驾驶汽车、虚拟现实、3D建模等场景

特点:

1、快速生成:可在0.3秒内生成一张225万像素的深度图

2、高清晰度:高分辨率、高锐度、高频细节,可以捕捉到很多细节

3、无需额外信息:无需相机的特定设置信息,比如焦距设置,就能生成准确的深度图

github:GitHub - apple/ml-depth-pro: Depth Pro: Sharp Monocular Metric Depth in Less Than a Second.

/search?q=#DepthPro

9/11

@TheAITimeline

Depth Pro: Sharp Monocular Metric Depth in Less Than a Second

Overview:

Depth Pro introduces a foundation model for zero-shot metric monocular depth estimation, delivering high-resolution depth maps with outstanding sharpness and detail.

It operates efficiently, producing a 2.25-megapixel depth map in just 0.3 seconds without the need for camera metadata.

Key innovations include a multi-scale vision transformer for dense prediction and a combined real-synthetic dataset training protocol for accuracy.

Extensive experiments show that Depth Pro surpasses previous models in several performance metrics, including boundary accuracy and focal length estimation from a single image.

Paper:

[2410.02073] Depth Pro: Sharp Monocular Metric Depth in Less Than a Second

10/11

@toyxyz3

Depth pro test /search?q=#stablediffusion /search?q=#AIイラスト /search?q=#AI /search?q=#ComfyUI

11/11

@jaynz_way

**Summary**:

Apple's Depth Pro AI model transforms 2D images into 3D depth maps in under a second, without extra hardware. It's fast, precise, and useful for AR, robotics, and autonomous systems. The technology uses advanced vision transformers for accuracy. Developers can now explore new 3D applications freely, as Apple has opened up this tech. It's like giving AI the ability to see in 3D instantly, with Apple showing off by riding this tech like a bicycle - no hands!

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@tost_ai

Thanks to Depth Pro Team ❤ and Depth Flow Team ❤

🗺comfyui: depth-flow-tost/depth_flow_tost.json at main · camenduru/depth-flow-tost

2/11

@ProTipsKe

/search?q=#Apple Releases Depth Pro /search?q=#AI

"...Depth Pro, is able to generate detailed 3D depth maps from single 2D images in a fraction of a second—without relying on the camera data traditionally needed to make such predictions..."

Apple releases Depth Pro, an AI model that rewrites the rules of 3D vision

3/11

@_akhaliq

Web UI for Apple Depth-Pro

Metric depth estimation determines real-world distances to objects in a scene from images. This repo provides a web UI that allows users to estimate metric depth and visualize depth maps by easily uploading images using the Depth Pro model through a simple Gradio UI interface.

4/11

@bycloudai

whatttt

there are like 5 research papers from Apple this week

LLMs Know More Than They Show [2410.02707] LLMs Know More Than They Show: On the Intrinsic Representation of LLM Hallucinations (affiliated only?)

Depth Pro [2410.02073] Depth Pro: Sharp Monocular Metric Depth in Less Than a Second

MM1.5 [2409.20566] MM1.5: Methods, Analysis & Insights from Multimodal LLM Fine-tuning

Contrastive Localized Language-Image Pre-Training [2410.02746] Contrastive Localized Language-Image Pre-Training

Revisit Large-Scale Image-Caption Data in Pre-training Multimodal Foundation Models [2410.02740] Revisit Large-Scale Image-Caption Data in Pre-training Multimodal Foundation Models

[Quoted tweet]

- MovieGen

- Were RNNs All We Needed?

- Contextual Document Embeddings

- RLEF

- ENTP

- VinePPO

- When a language model is optimized for reasoning, does it still show embers of autoregression? An analysis of OpenAI o1

- LLMs Know More Than They Show

- Video Instruction Tuning With Synthetic Data

- PHI-S

- Thermodynamic Bayesian Inference

- Emu3: Next-Token Prediction is All You Need

- Lattice-Valued Bottleneck Duality

- Loong

- Archon

- Direct Judgement Preference Optimization

- Depth Pro

- MIO: A Foundation Model on Multimodal Tokens

- MM1.5

- PhysGen

- Cottention

- UniAff

- Hyper-Connections

- Image Copy Detection for Diffusion Models

- RATIONALYST

- From Code to Correctness

- Not All LLM Reasoners Are Created Equal

- VPTQ: Extreme Low-bit Vector Post-Training Quantization for LLMs

- Leopard: A VLM For Text-Rich Multi-Image Tasks

- Selective Aggregation for LoRA in Federated Learning

- Quantifying Generalization Complexity for Large Language Models

- FactAlign: Long-form Factuality Alignment of LLMs

- Is Preference Alignment Always the Best Option to Enhance LLM-Based Translation?

- Law of the Weakest Link: Cross Capabilities of Large Language Models

- TPI-LLM: Serving 70B-scale LLMs Efficiently on Low-resource Edge Devices

- One Token to Seg Them All: Language Instructed Reasoning Segmentation in Videos

- Looped Transformers for Length Generalization

- Illustrious

- LLaVA-Critic

- Contrastive Localized Language-Image Pre-Training

- Large Language Models as Markov Chains

- CLIP-MoE

- SageAttention

- Training Language Models on Synthetic Edit Sequences Improves Code Synthesis

- Revisit Large-Scale Image-Caption Data in Pre-training Multimodal Foundation Models

- EVER

- The bunkbed conjecture is false

overview for each + authors' explanations

read this in thread mode for the best experience

5/11

@jonstephens85

I am very impressed with Apple's new code release of Depth Pro: Sharp Monocular Metric Depth in Less Than a Second. It's FAST! And it picked up on some incredible depth detail on my super fluffy cat. I show the whole process end to end.

6/11

@aisearchio

Apple has released Depth Pro, an open-source AI model that creates detailed 3D depth maps from single images.

Depth Pro - a Hugging Face Space by akhaliq

7/11

@chrisoffner3d

Testing temporal coherence of the new monocular depth estimator Depth Pro: Sharp Monocular Metric Depth in Less Than a Second with my own videos. The admittedly challenging and low-detail background seems quite unstable.

8/11

@aigclink

苹果刚刚开源了一个深度学习项目:Depth Pro,不到一秒即可生成非常清晰和详细的深度图

可用于各种需要理解图像深度的应用,利好自动驾驶汽车、虚拟现实、3D建模等场景

特点:

1、快速生成:可在0.3秒内生成一张225万像素的深度图

2、高清晰度:高分辨率、高锐度、高频细节,可以捕捉到很多细节

3、无需额外信息:无需相机的特定设置信息,比如焦距设置,就能生成准确的深度图

github:GitHub - apple/ml-depth-pro: Depth Pro: Sharp Monocular Metric Depth in Less Than a Second.

/search?q=#DepthPro

9/11

@TheAITimeline

Depth Pro: Sharp Monocular Metric Depth in Less Than a Second

Overview:

Depth Pro introduces a foundation model for zero-shot metric monocular depth estimation, delivering high-resolution depth maps with outstanding sharpness and detail.

It operates efficiently, producing a 2.25-megapixel depth map in just 0.3 seconds without the need for camera metadata.

Key innovations include a multi-scale vision transformer for dense prediction and a combined real-synthetic dataset training protocol for accuracy.

Extensive experiments show that Depth Pro surpasses previous models in several performance metrics, including boundary accuracy and focal length estimation from a single image.

Paper:

[2410.02073] Depth Pro: Sharp Monocular Metric Depth in Less Than a Second

10/11

@toyxyz3

Depth pro test /search?q=#stablediffusion /search?q=#AIイラスト /search?q=#AI /search?q=#ComfyUI

11/11

@jaynz_way

**Summary**:

Apple's Depth Pro AI model transforms 2D images into 3D depth maps in under a second, without extra hardware. It's fast, precise, and useful for AR, robotics, and autonomous systems. The technology uses advanced vision transformers for accuracy. Developers can now explore new 3D applications freely, as Apple has opened up this tech. It's like giving AI the ability to see in 3D instantly, with Apple showing off by riding this tech like a bicycle - no hands!

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Last edited:

1/15

@chrisoffner3d

Testing temporal coherence of the new monocular depth estimator Depth Pro: Sharp Monocular Metric Depth in Less Than a Second with my own videos. The admittedly challenging and low-detail background seems quite unstable.

https://video.twimg.com/ext_tw_video/1842268171002064896/pu/vid/avc1/1080x1214/ArL-6XCEO1Gr0pVT.mp4

2/15

@chrisoffner3d

In this sequence it just seems to fail altogether.

https://video.twimg.com/ext_tw_video/1842269882080989184/pu/vid/avc1/1080x1214/edSr-s2BSGuUmKRZ.mp4

3/15

@chrisoffner3d

Here's a more busy scene with lots of depth variation.

https://video.twimg.com/ext_tw_video/1842278718569435136/pu/vid/avc1/1080x1214/wLCW5vNRA9O4Ttkb.mp4

4/15

@chrisoffner3d

Here I clamp the upper bound of the color map to 800 (metres) but for most frames the max predicted depth value is 10,000 (~infinity).

The DJI Osmo Pocket 3 camera does pretty heavy image processing. Maybe this post-processing is just too different from the training data?

https://video.twimg.com/ext_tw_video/1842287018081964032/pu/vid/avc1/1080x1214/F6R-D9SkpzOBCxeV.mp4

5/15

@chrisoffner3d

Hmm... somehow I think those mountains are more than 7.58 metres away in the left image. And the right one just went .

.

6/15

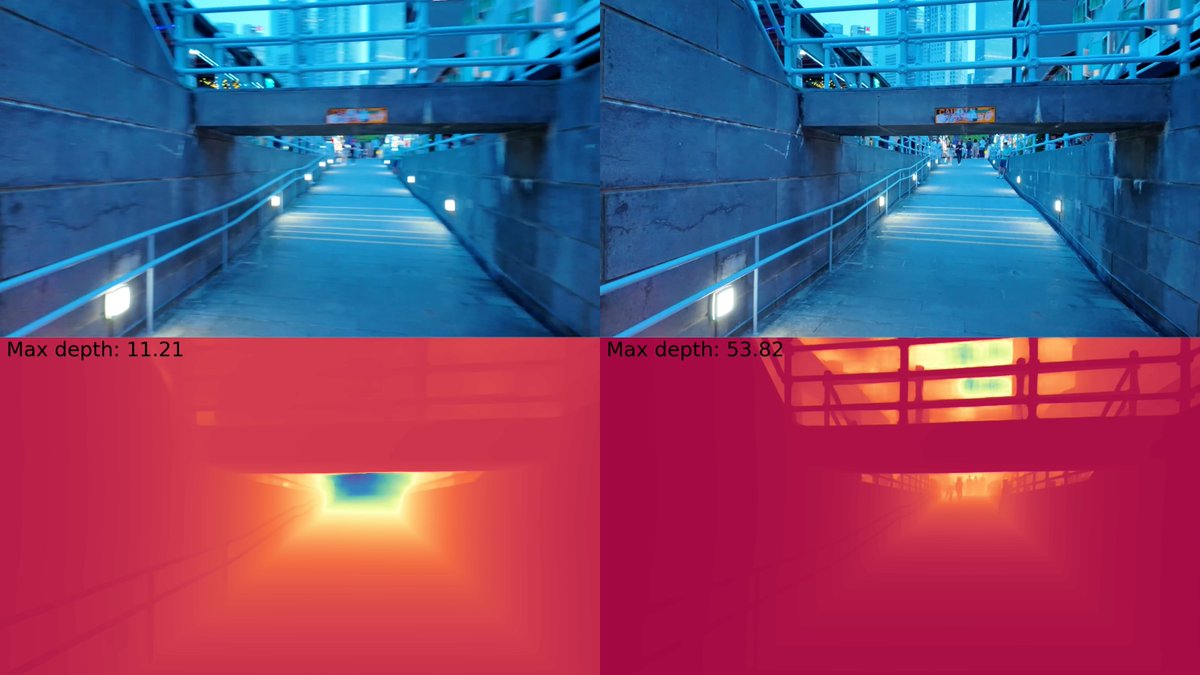

@chrisoffner3d

Motion blur seems to make a huge difference. These are successive frames from a video.

The left one has substantial motion blur and "Depth Pro" produces a very mushy depth map.

The right one is sharper, and the depth map looks more reasonable.

7/15

@chrisoffner3d

Two other successive frames from the same video, this time without noticeable visual differences. Nonetheless, the maximum depth varies by a factor of almost four.

8/15

@chrisoffner3d

From second 10 or so, the models assigns depth 10,000 (infinity) to some pixels it considers to be sky, which is why the depth map turns all red – because all those nearby finite depth values become negligible compared to the maximum value.

https://video.twimg.com/ext_tw_video/1842333210228695040/pu/vid/avc1/1080x1214/B8Q4nKAOYuKr6agy.mp4

9/15

@chrisoffner3d

Looks like primarily areas with large or infinite ground truth depth (e.g. the sky) have very high variance in the maximum depth. I should try some scenes with bounded maximum depth.

https://video.twimg.com/ext_tw_video/1842434205617168385/pu/vid/avc1/1080x1214/TIFyR-8ripcPzEnW.mp4

10/15

@chrisoffner3d

Here I show the log depth to reduce the impact on the maximum depth pixel on the rest of the color map. The shown "Max depth" label is still the raw metric depth value. Odd how the max depth drops/switches from 10,000 to values as low as ~20 in some frames.

https://video.twimg.com/ext_tw_video/1842454596368621568/pu/vid/avc1/1080x1214/zX4cJNPFW5XgCrst.mp4

11/15

@chrisoffner3d

Here's a scene with (mostly) bounded depth, but the sky peeks through the foliage and causes some strong fluctuations in the max depth estimate. Still, overall it looks more stable than the scenes with lots of sky.

https://video.twimg.com/ext_tw_video/1842473286552141824/pu/vid/avc1/1080x1214/Z8a5IicqkEdgiSL1.mp4

12/15

@chrisoffner3d

Here's the (log) depth of an indoor scene with fully bounded depth. The top-down frames in the first half of the video still have high depth variance. The later frames taken at more conventional angles are pleasantly stable.

https://video.twimg.com/ext_tw_video/1842489701111922688/pu/vid/avc1/1080x1214/dRWU_evea_0J5UTO.mp4

13/15

@chrisoffner3d

Again, when there's a lot of sky in the frame, all bets are off. The max depth oscillates between 10,000 (infinity) and values down to <200 in successive frames.

https://video.twimg.com/ext_tw_video/1842516079739834370/pu/vid/avc1/1080x1214/rsIgsOW-FbWs252h.mp4

14/15

@chrisoffner3d

Finally, here's a particularly challenging low-light video of where I met a cute and curious cow while wandering across a tiny island in Indonesia last month. Given the poor lighting and strong noise, I find Depth Pro's performance quite impressive tbh.

https://video.twimg.com/ext_tw_video/1842569306871123968/pu/vid/avc1/1080x1214/9uT8klzhH-4PPHWb.mp4

15/15

@chrisoffner3d

However, looking at the "swishy" artefacts in the depth maps of the cow video, I'm wondering whether the cow is secretly wearing the One Ring and is about to be captured by Sauron's ringwraiths.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@chrisoffner3d

Testing temporal coherence of the new monocular depth estimator Depth Pro: Sharp Monocular Metric Depth in Less Than a Second with my own videos. The admittedly challenging and low-detail background seems quite unstable.

https://video.twimg.com/ext_tw_video/1842268171002064896/pu/vid/avc1/1080x1214/ArL-6XCEO1Gr0pVT.mp4

2/15

@chrisoffner3d

In this sequence it just seems to fail altogether.

https://video.twimg.com/ext_tw_video/1842269882080989184/pu/vid/avc1/1080x1214/edSr-s2BSGuUmKRZ.mp4

3/15

@chrisoffner3d

Here's a more busy scene with lots of depth variation.

https://video.twimg.com/ext_tw_video/1842278718569435136/pu/vid/avc1/1080x1214/wLCW5vNRA9O4Ttkb.mp4

4/15

@chrisoffner3d

Here I clamp the upper bound of the color map to 800 (metres) but for most frames the max predicted depth value is 10,000 (~infinity).

The DJI Osmo Pocket 3 camera does pretty heavy image processing. Maybe this post-processing is just too different from the training data?

https://video.twimg.com/ext_tw_video/1842287018081964032/pu/vid/avc1/1080x1214/F6R-D9SkpzOBCxeV.mp4

5/15

@chrisoffner3d

Hmm... somehow I think those mountains are more than 7.58 metres away in the left image. And the right one just went

6/15

@chrisoffner3d

Motion blur seems to make a huge difference. These are successive frames from a video.

The left one has substantial motion blur and "Depth Pro" produces a very mushy depth map.

The right one is sharper, and the depth map looks more reasonable.

7/15

@chrisoffner3d

Two other successive frames from the same video, this time without noticeable visual differences. Nonetheless, the maximum depth varies by a factor of almost four.

8/15

@chrisoffner3d

From second 10 or so, the models assigns depth 10,000 (infinity) to some pixels it considers to be sky, which is why the depth map turns all red – because all those nearby finite depth values become negligible compared to the maximum value.

https://video.twimg.com/ext_tw_video/1842333210228695040/pu/vid/avc1/1080x1214/B8Q4nKAOYuKr6agy.mp4

9/15

@chrisoffner3d

Looks like primarily areas with large or infinite ground truth depth (e.g. the sky) have very high variance in the maximum depth. I should try some scenes with bounded maximum depth.

https://video.twimg.com/ext_tw_video/1842434205617168385/pu/vid/avc1/1080x1214/TIFyR-8ripcPzEnW.mp4

10/15

@chrisoffner3d

Here I show the log depth to reduce the impact on the maximum depth pixel on the rest of the color map. The shown "Max depth" label is still the raw metric depth value. Odd how the max depth drops/switches from 10,000 to values as low as ~20 in some frames.

https://video.twimg.com/ext_tw_video/1842454596368621568/pu/vid/avc1/1080x1214/zX4cJNPFW5XgCrst.mp4

11/15

@chrisoffner3d

Here's a scene with (mostly) bounded depth, but the sky peeks through the foliage and causes some strong fluctuations in the max depth estimate. Still, overall it looks more stable than the scenes with lots of sky.

https://video.twimg.com/ext_tw_video/1842473286552141824/pu/vid/avc1/1080x1214/Z8a5IicqkEdgiSL1.mp4

12/15

@chrisoffner3d

Here's the (log) depth of an indoor scene with fully bounded depth. The top-down frames in the first half of the video still have high depth variance. The later frames taken at more conventional angles are pleasantly stable.

https://video.twimg.com/ext_tw_video/1842489701111922688/pu/vid/avc1/1080x1214/dRWU_evea_0J5UTO.mp4

13/15

@chrisoffner3d

Again, when there's a lot of sky in the frame, all bets are off. The max depth oscillates between 10,000 (infinity) and values down to <200 in successive frames.

https://video.twimg.com/ext_tw_video/1842516079739834370/pu/vid/avc1/1080x1214/rsIgsOW-FbWs252h.mp4

14/15

@chrisoffner3d

Finally, here's a particularly challenging low-light video of where I met a cute and curious cow while wandering across a tiny island in Indonesia last month. Given the poor lighting and strong noise, I find Depth Pro's performance quite impressive tbh.

https://video.twimg.com/ext_tw_video/1842569306871123968/pu/vid/avc1/1080x1214/9uT8klzhH-4PPHWb.mp4

15/15

@chrisoffner3d

However, looking at the "swishy" artefacts in the depth maps of the cow video, I'm wondering whether the cow is secretly wearing the One Ring and is about to be captured by Sauron's ringwraiths.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

AI experts ready 'Humanity's Last Exam' to stump powerful tech

By Jeffrey Dastin and Katie Paul

September 16, 20244:42 PM EDTUpdated 20 days ago

Figurines with computers and smartphones are seen in front of the words "Artificial Intelligence AI" in this illustration taken, February 19, 2024. REUTERS/Dado Ruvic/Illustration/File Photo Purchase Licensing Rights

, opens new tab

Sept 16 (Reuters) - A team of technology experts issued a global call on Monday seeking the toughest questions to pose to artificial intelligence systems, which increasingly have handled popular benchmark tests like child's play.

Dubbed "Humanity's Last Exam

, opens new tab," the project seeks to determine when expert-level AI has arrived. It aims to stay relevant even as capabilities advance in future years, according to the organizers, a non-profit called the Center for AI Safety (CAIS) and the startup Scale AI.

The call comes days after the maker of ChatGPT previewed a new model, known as OpenAI o1, which "destroyed the most popular reasoning benchmarks," said Dan Hendrycks, executive director of CAIS and an advisor to Elon Musk's xAI startup.

Hendrycks co-authored two 2021 papers that proposed tests of AI systems that are now widely used, one quizzing them on undergraduate-level knowledge of topics like U.S. history, the other probing models' ability to reason through competition-level math. The undergraduate-style test has more downloads from the online AI hub Hugging Face than any such dataset

, opens new tab.

At the time of those papers, AI was giving almost random answers to questions on the exams. "They're now crushed," Hendrycks told Reuters.

As one example, the Claude models from the AI lab Anthropic have gone from scoring about 77% on the undergraduate-level test in 2023, to nearly 89% a year later, according to a prominent capabilities leaderboard

, opens new tab.

These common benchmarks have less meaning as a result.

AI has appeared to score poorly on lesser-used tests involving plan formulation and visual pattern-recognition puzzles, according to Stanford University’s AI Index Report from April. OpenAI o1 scored around 21% on one version of the pattern-recognition ARC-AGI test, for instance, the ARC organizers said on Friday.

Some AI researchers argue that results like this show planning and abstract reasoning to be better measures of intelligence, though Hendrycks said the visual aspect of ARC makes it less suited to assessing language models. “Humanity’s Last Exam” will require abstract reasoning, he said.

Answers from common benchmarks may also have ended up in data used to train AI systems, industry observers have said. Hendrycks said some questions on "Humanity's Last Exam" will remain private to make sure AI systems' answers are not from memorization.

The exam will include at least 1,000 crowd-sourced questions due November 1 that are hard for non-experts to answer. These will undergo peer review, with winning submissions offered co-authorship and up to $5,000 prizes sponsored by Scale AI.

“We desperately need harder tests for expert-level models to measure the rapid progress of AI," said Alexandr Wang, Scale's CEO.

One restriction: the organizers want no questions about weapons, which some say would be too dangerous for AI to study.

AI Research

Why It May Never Be Possible to Reach the Goal of AGI or Human Intelligence?

sanjeevverma September 20, 2024Explore the challenges and arguments against the feasibility of achieving Artificial General Intelligence (AGI). This research paper examines why AGI may never surpass or replicate the complexity of human intelligence, covering topics such as the multi-dimensional nature of intelligence, the limitations of AI in problem-solving, and the difficulties of modeling the human brain.

Artificial General Intelligence (AGI) represents an ambitious goal in the field of artificial intelligence, referring to a machine’s ability to perform any intellectual task a human can. AGI would not only replicate human intelligence but would surpass it in adaptability, understanding, and versatility. Despite this aspirational aim, numerous researchers, philosophers, and scientists argue that the achievement of AGI may remain forever elusive. This paper examines three major arguments against the feasibility of achieving AGI, exploring both the claims made by critics and the common rebuttals, with a focus on understanding why the complexity of human intelligence may render AGI unattainable.

Intelligence is Multi-Dimensional

One of the primary arguments against the possibility of AGI is the assertion that intelligence is inherently multi-dimensional. Human intelligence is not a single, unified trait but a complex interplay of cognitive abilities and specialized functions. Intelligence in humans involves the ability to learn, adapt, reason, and solve problems, yet these abilities are deeply influenced by emotion, intuition, and contextual understanding. Machines, by contrast, tend to excel at narrow, specialized tasks rather than general intelligence.

The Complexity of Human Intelligence

Yann LeCun, a pioneer in the field of deep learning, has argued that the term “AGI” should be retired in favor of the pursuit of “human-level AI”. He points out that intelligence is not a singular, monolithic capability, but a collection of specialized skills. Each individual, even within the human species, exhibits a unique set of intellectual abilities and limitations. For example, some people may excel in mathematical reasoning, while others are proficient in linguistic creativity. Human intelligence is both diverse and fragmented, and as a species, we cannot experience the entire spectrum of our own cognitive abilities, let alone replicate them in a machine.

Moreover, animals also demonstrate diverse dimensions of intelligence. Squirrels, for instance, have the ability to remember the locations of hundreds of hidden nuts for months, a remarkable feat of spatial memory that far exceeds typical human ability. This raises the question: if human intelligence is but one type of intelligence among many, how can we claim that a machine designed to replicate human intelligence would be superior or more advanced? The multi-dimensional nature of intelligence suggests that AGI, if achievable, would be different from human intelligence, not necessarily superior.

Machine Weaknesses in the Face of Human Adaptability

Additionally, while machines can outperform humans in specific tasks, such as playing chess or Go, these victories often reveal limitations rather than true intelligence. In 2016, the AlphaGo program famously defeated world champion Go player Lee Sedol. However, subsequent amateur players were able to defeat programs with AlphaGo-like capabilities by exploiting specific weaknesses in their algorithms. This suggests that even in domains where machines demonstrate superhuman capabilities, human ingenuity and adaptability can expose flaws in machine reasoning.

Despite these limitations, the multi-dimensionality of intelligence has not prevented humans from achieving dominance as a species. Homo sapiens, for instance, have contributed the most to the global biomass among mammals, showcasing that intelligence — even in its specialized, fragmented form — can still lead to unparalleled success. Thus, while machines may achieve narrow forms of superintelligence, the unique structure of human intelligence may prevent the development of AGI that mirror or surpasses the multi-faceted nature of human cognition.

Intelligence is Not the Solution to All Problems

The second major argument against AGI is the notion that intelligence alone is insufficient to solve the world’s most complex problems. Intelligence, as traditionally defined, is often seen as the key to unlocking solutions to any challenge, but in practice, this is not always the case. Even the most advanced AI systems today struggle with tasks requiring the discovery of new knowledge through experimentation, rather than merely analyzing existing data.

Limitations in Problem-Solving

For instance, while AI has made significant strides in fields such as medical diagnostics and drug discovery, it has yet to discover a cure for diseases like cancer. A machine, no matter how intelligent, cannot generate new knowledge in fields like biology or physics without experimentation, trial and error, and creative insight. Machines excel at analyzing vast amounts of data and detecting patterns, but these abilities are insufficient when novel, unpredictable problems arise.

Nevertheless, intelligence can enhance the quality of experimentation. More intelligent machines can design better experiments, optimize variables, and analyze results more effectively, potentially accelerating the pace of discovery. Historical trends in research productivity demonstrate that more advanced tools and better experimental design have led to more breakthroughs. However, these advances also face diminishing returns. As simpler problems like Newtonian motion are solved, humanity encounters harder challenges, such as quantum mechanics, that demand increasingly sophisticated approaches. Intelligence alone cannot overcome the intrinsic complexity of nature.

Diminishing Returns and Hard Problems

Furthermore, it is important to recognize that more intelligence does not guarantee more progress. In some cases, as problems become more complex, the returns on additional intelligence diminish. Discoveries in fields like physics and medicine have become increasingly difficult to achieve as researchers delve deeper into areas of uncertainty. Machines may excel at solving well-defined problems with clear parameters, but when confronted with the chaotic, unpredictable nature of reality, even the most advanced systems may fall short.

The diminishing returns on intelligence also highlight a critical distinction: intelligence, while important, is not synonymous with creativity, intuition, or insight. These uniquely human traits often play a crucial role in solving the most complex problems, and it remains unclear whether machines can ever replicate them. As such, intelligence alone may not be the ultimate key to solving all problems, further casting doubt on the feasibility of AGI.

AGI and the Complexity of Modeling the Human Brain

The third argument against AGI hinges on the sheer complexity of the human brain. While the Church-Turing thesis, proposed in 1950, suggests that any computational machine can, in theory, simulate the functioning of the human brain, this would require access to infinite memory and time — resources that are clearly impossible to achieve.

The Church-Turing Hypothesis and Its Limitations

The Church-Turing hypothesis asserts that any computational problem solvable by a human brain can also be solved by a sufficiently advanced machine. However, this theoretical assertion depends on ideal conditions: infinite memory and infinite time. In practical terms, this means that while the human brain might be modeled by a machine, doing so would require an unrealistic amount of computational power.

Most computer scientists believe that it is possible to model the brain with finite resources, but they also acknowledge that this belief lacks mathematical proof. As it stands, our understanding of the brain is too limited to precisely determine its computational capabilities. The brain’s neural architecture, synaptic connections, and plasticity make it an extraordinarily complex system. Despite decades of research, we have yet to develop a machine capable of fully replicating even a fraction of the brain’s processes.

The Challenge of Building AGI

The launch of large language models like ChatGPT in recent years has sparked excitement about the potential for AI to achieve human-like fluency and adaptability. ChatGPT, for instance, has demonstrated impressive language generation capabilities and reached millions of users in a short period. Yet, despite its ability to produce coherent text, it still suffers from fundamental flaws, including a lack of logical understanding, contextual awareness, and the ability to reason abstractly.

This example underscores the current limitations of AI. While machines have made remarkable progress in specific domains, they remain far from achieving the generalized, flexible intelligence that characterizes human cognition. The inability to model the full complexity of the brain, combined with the limitations of current AI systems, suggests that the dream of AGI may remain out of reach.

The goal of AGI, while a captivating aspiration, faces significant obstacles rooted in the multi-dimensional nature of intelligence, the limitations of intelligence in solving all problems, and the immense complexity of modeling the human brain. Human intelligence is diverse, specialized, and shaped by both cognitive and emotional factors that machines may never fully replicate. Moreover, intelligence alone may not be the ultimate key to solving complex problems, and even if we could model the brain, we lack the necessary resources and understanding to do so at present.

As AI continues to evolve, it will undoubtedly become more powerful and capable, but it may never achieve the level of general intelligence that characterizes humans. In the end, the limitations of both technology and our understanding of the human mind may prevent us from reaching the ultimate goal of AGI.