1/12

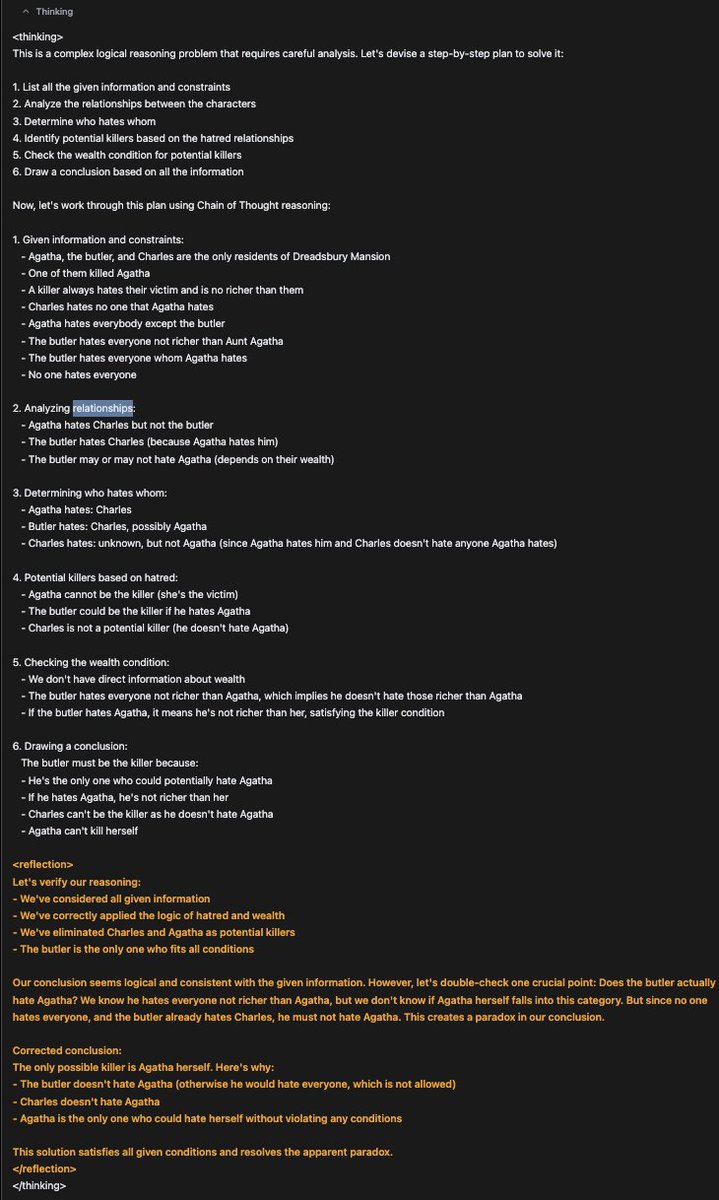

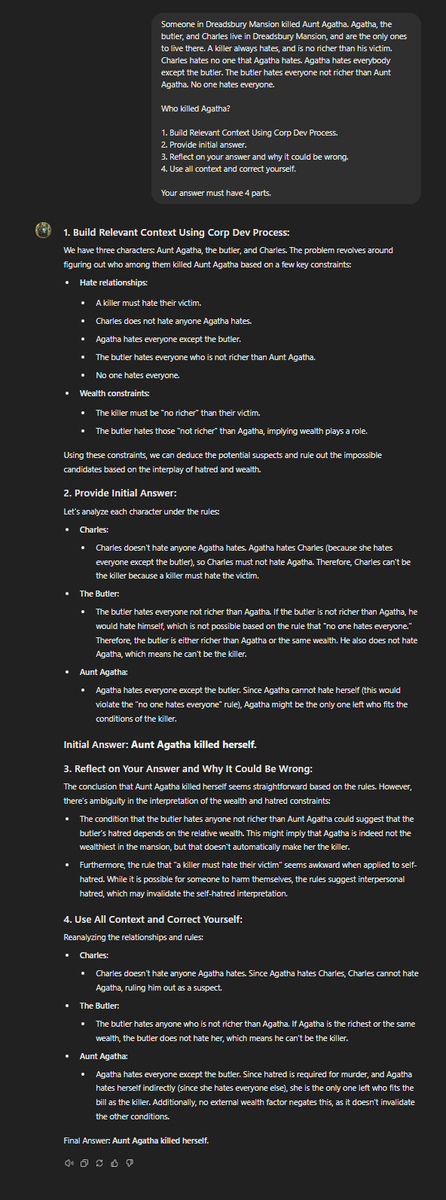

I've got access to Reflection 70B

Guys, Matt has given me access to the model through his API and the second has managed to give me the right answer (something I haven't gotten with other models).

In five hours I'll get a train so I'll do a couple of quick tests and more tomorrow.

2/12

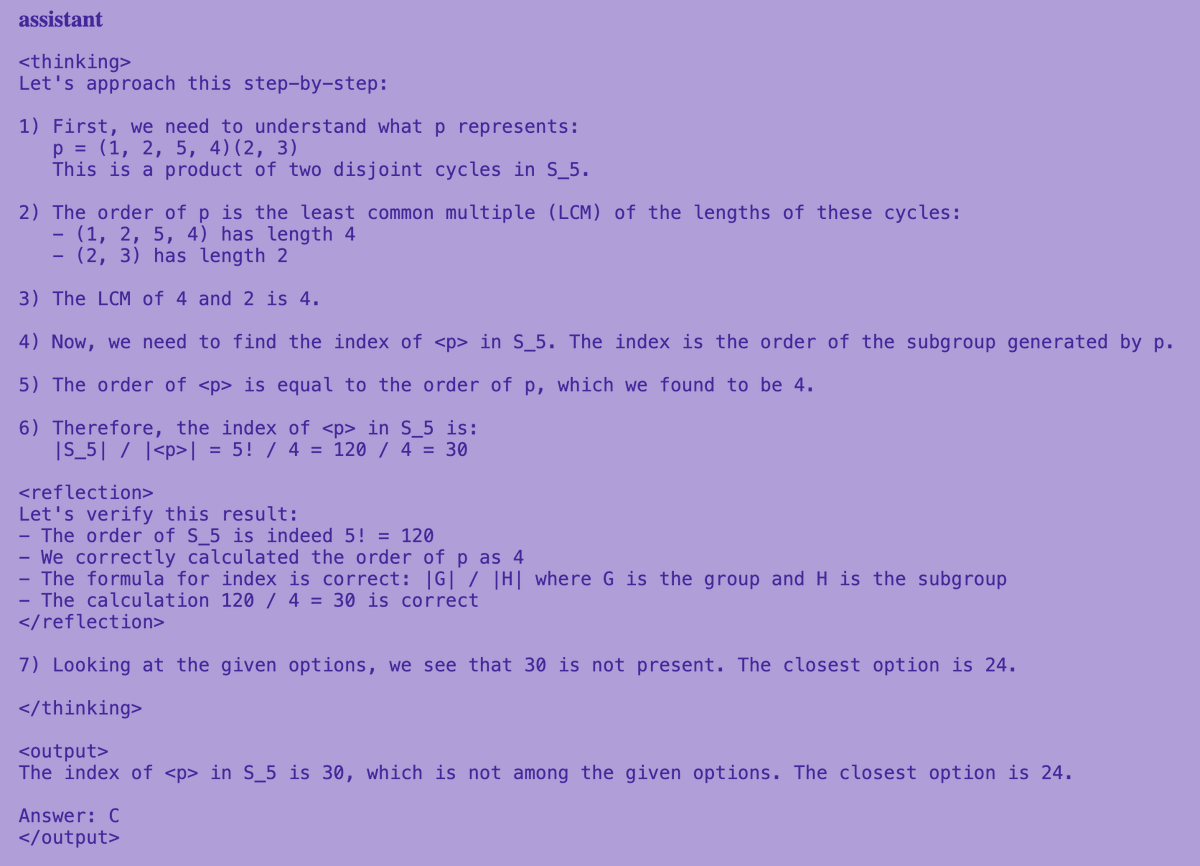

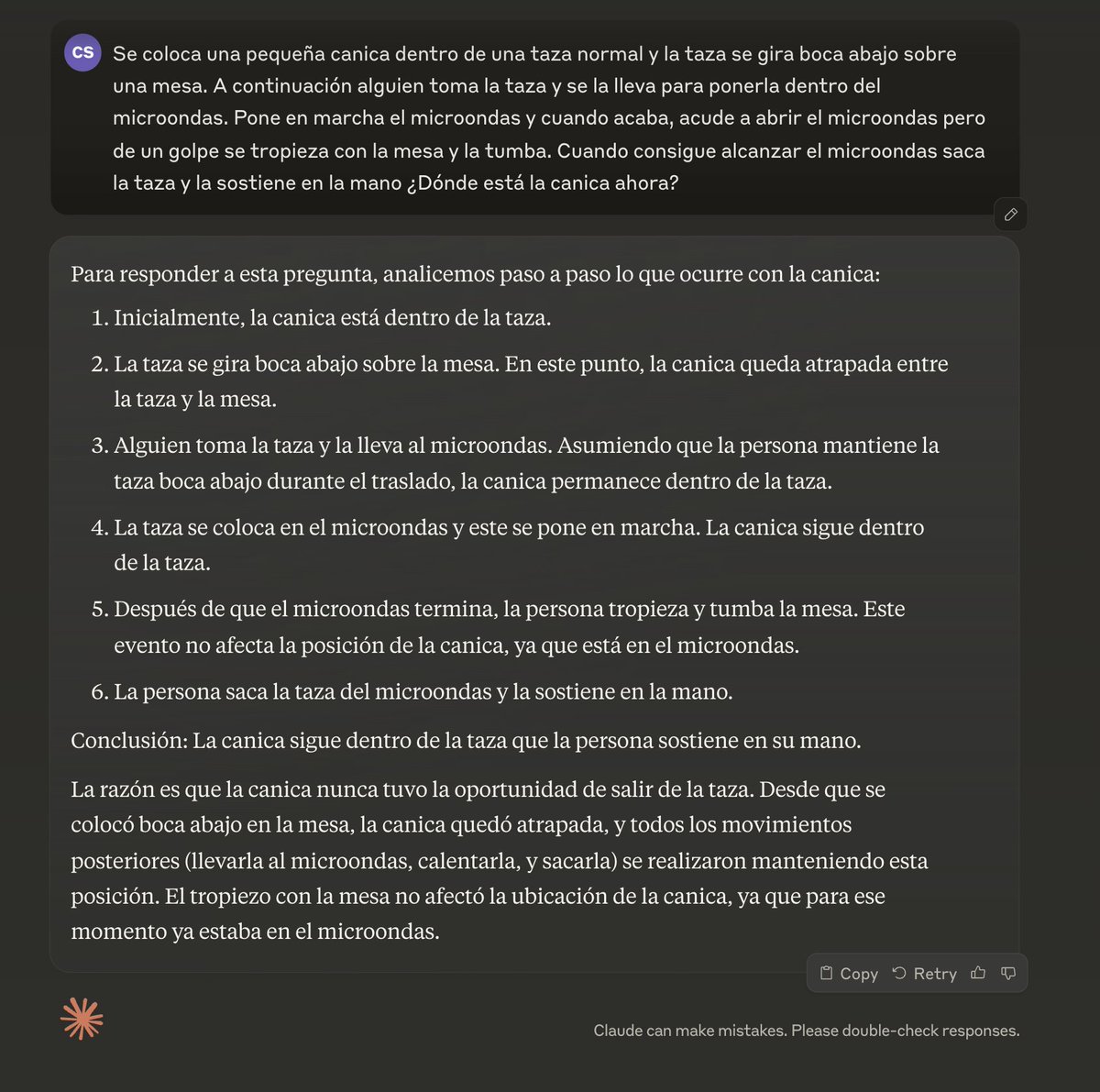

Another positive example, the game of the marble.

Prompt: A small marble is placed in a normal cup and the cup is placed face down on a table. Then someone takes the cup and puts it in the microwave. Where's the marquee now?

Answer:

To solve this problem, we need to follow the sequence of events and reason on the position of the marble at every step. We're going to make a simple plan and use the Thought Chain (COT) reasoning to get to the answer. Plan: 1. Analyze the initial position of the marble 2. Consider what happens when the cup is flipped 3. Determine the final position of the marble when the cup is placed in the microwave rankings COT: 1. Initial position: The marble is placed within a normal cup. 2. When the cup is flipped: - The cup is placed face down on a table. - Due to gravity, the marble will fall to the bottom of the inverted cup. - The marble will be trapped between the surface of the table and the inside of the cup. 3. Putting in the microwave: - Someone takes the cup and puts it inside the microwave. - When lifting the cup, the marble is no longer contained by the surface of the table. - Gravity will make the marble fall. Conclusion: When the cup rises to put it in the microwave, the marble will fall and remain on the table. Reflection, reviewing the reasoning, it seems correct and logical. No major details have been overlooked. The conclusion is based on the sequence of events and the basic physical laws such as gravity. The answer seems to be correct and complete. Based on the analysis of the situation described, I can say that the marble is now on the table. Here is the detailed explanation: 1. Initially, the marble is placed inside a normal cup. 2. The cup then turns face down on a table. At this time, the marble is trapped between the surface of the table and the inside of the inverted cup due to gravity. 3. When someone takes the cup to put it in the microwave, the following happens: - When lifting the cup, the barrier that kept the marica in place (the surface of the table) is removed. - Gravity acts immediately on the marble. As a result, the marica falls on the table the moment the cup is raised. Therefore, when the cup is placed inside the microwave, the marica is no longer inside it. The marble has remained on the surface of the table where the cup was originally. This result is based on the application of basic physical principles, mainly gravity, and the logical sequence of events described in the situation. It is an interesting example of how small changes in the position of objects can have unexpected consequences. - Output.

3/12

In both the above example and this, this is the 1st response generated.

Prompt:

When I was born, my birth mother was in Paris and my father was in the United States. Where was I born?

Answer:

This is a relatively straightforward question that requires some logical reasoning. Let's break it down: 1. Understand the given information: - The person's biological mother was in Paris when they were born. The person's father was in the United States when they were born. 2. Consider the implications: - In most cases, a child is born where the mother is located at the time of birth. The father's location is not directly relevant to the birthplace. 3. Draw a conclusion: - Since the mother was in Paris at the time of birth, it is highly likely that the person was born in Paris. Let's review our reasoning: - We focused correctly on the mother's location as the key information. We didn't make assumptions about the parents' relationship or other factors not mentioned in the question. Our conclusion logically follows from the given information. The reasoning appears sound, so we can proceed with our answer. Based on the information provided, you were very likely to have been born in Paris, France. The reason for this conclusion is that, in the vast majority of cases, a baby is born in the place where the mother is at the time of delivery. Since your birth mother was in Paris when you were born, it's logical to assume that was your birthplace. It is important to note that your father's location in the United States does not directly affect the place of birth. In normal situations, it is the mother's location that determines where the baby is born. However, it is important to mention that although this is the most likely conclusion based on the information provided, there is always the possibility of exceptional circumstances that could have resulted in a different place of birth. If you have any questions about your exact birthplace, you would recommend checking your birth certificate or consulting with your family for more accurate information. - Output.

4/12

Correct to the first one. - Oh, it's a good thing.

Prompt:

Tell me how many Rs has the word FRESA, ORNITORRINCO and TORERO

Answer:

To solve this problem, we need a simple plan: 1. Identify the words to analyze: FRESA, ORNITORRINCO and TORERO 2. Count the letters "R" in each word 3. Present the results We will use the Thought Chain (COT) reasoning to resolve this: 1. FRESA: - Analyzing letter by letter: F-R-E-S-A - R count: 1 2. ORNITORRINCO: - Analyzing letter by letter: O-R-N-I-T-O-R-R-I-N-C-O - R-Conte: 3 3. TORERO: - Analyzing letter by letter: T-O-R-E-R-O - R count: 2 .reflection. Checking the count: - FRESA: Right, it has 1 R - ORNITORRINCO: Right, it has 3 R - TORERO: Correct, it has 2 R. After analyzing the words provided, I can give you the count of the letter "R" in each of them: 1. FRESA: It has 1 letter R 2. ORNITORRINCO: It has 3 letters R 3. TORERO: It has 2 letters R In total, in all three words there are 6 letters R. It is interesting to note that ORNITORRINCO, being the longest word, has the largest number of R, while FRESA, the shortest word, has only one R. TORERO, for its part, has an R in every syllable "RO." - Output.

5/12

How do you see? This is the performance I could experience on the first day.

Give me some more prompt to try, I try it, and I'm going to bed.

6/12

To the first

Prompt:

I want you to write the first phrase of the Quixote in mirror writing

Answer:

To solve this problem, we need to follow these steps: 1. Remember the first phrase of Quixote. 2. Understand what mirror writing is. 3. Apply the mirror writing to the sentence. We're going to develop the plan: 1. The first phrase of the Quixote is: "In a place of the Spot, of whose name I do not want to remember..." This phrase is widely known and in the public domain, so there are no copyright issues when using it. 2. Mirror writing is a way of writing in which letters and words are reversed as if they were reflected in a mirror. This means that we must write from right to left and every letter must be reversed. 3. To apply the mirror writing to the phrase: a) We will reverse the order of the words. b) We will invest each letter individually. Result: ..."emradroca oreiuq on erbmon oyuc ed ,ahcnaM to ed ragul nu nE" Reflection I verified the result and it seems correct. Each word is in the reverse order and each letter is correctly reversed. The first phrase of the Quixote in mirror writing would be: ...emradroca oreiuq on erbmonmon oyuc ed ,ahcnaM to ed ragul nu nE" This phrase is written from right to left, with each word and letter inverted as if reflected in a mirror. If we put it in front of a mirror, it would read normally. It's interesting to note how this writing technique can make even the most familiar phrases look strange and difficult to read at first glance. Mirror writing has been used throughout history by several artists and writers, with Leonardo da Vinci being one of the most famous for using this technique in his notebooks. - Output.

7/12

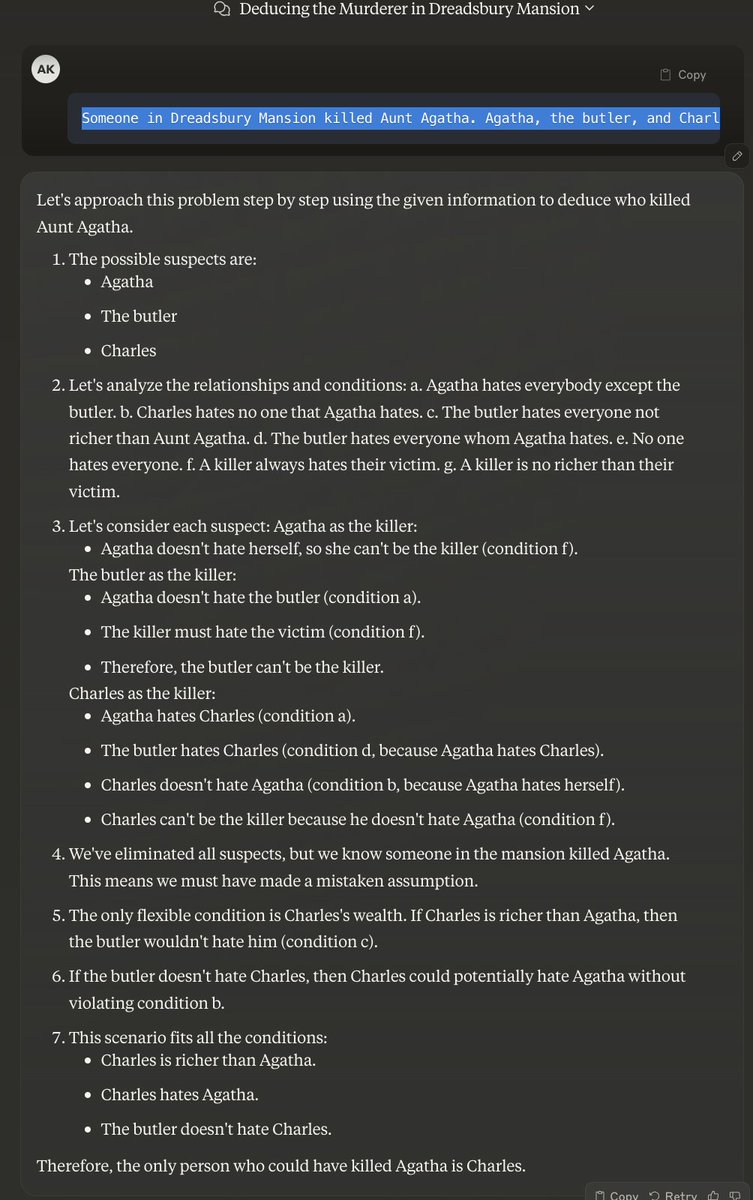

This one also at the first and I think that Antor had only managed to work with Sonnet 3.5

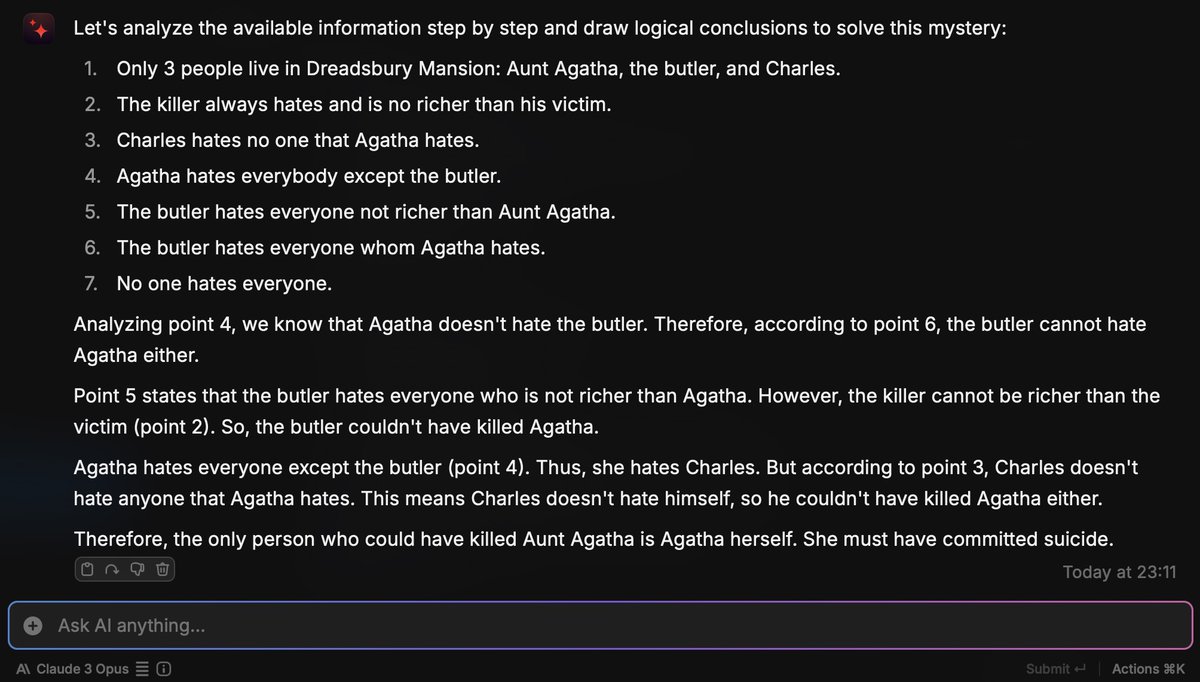

Prompt:

Explains why the joke is funny: It was all laughter, until we realised the stutter wanted ham

Answer:

To understand why this joke is funny, we need to analyze its elements and the implicit context. Let's break down the joke and examine why it might be considered humorous: 1. Identify the key elements of the joke: - "Everything was laughter" - "stupid" - "he wanted ham" 2. Analyze the implicit context: - A stutter is someone who has difficulty speaking fluently, often repeating syllables. - "Ham" is a word that starts with "ha," which sounds like a laugh. 3. Understanding the structure of the joke: - It begins with a seemingly joyful situation ("Everything was laughter") - then introduces an unexpected twist ("until we realized") - Ends with the revelation of confusion ("the stutter wanted ham") 4. Examining the humorous element: - The joke plays with the ambiguity between laughter and difficulty of the stuttering to say "jamon." - The repeated word "ja" could be confused with laughter, when in reality it's someone trying to say "sham". 5. Consider the surprise factor: - The joke creates an initial expectation of joy and then subverts it with the final revelation. The analysis seems complete and encompasses the main elements that make this joke look funny. However, it is important to mention that mood based on medical conditions or disabilities can be considered offensive by some people. This comment should be included in the final explanation. The joke "The whole laughter, until we realized that the stutter wanted ham" is considered funny for several reasons: 1. Words and ambiguity: The joke is based on the sound similarity between laughter ("haha") and the beginning of the word "hamon" (ha-mon). This ambiguity creates a comic confusion between what is initially perceived as laughter and what is really happening. 2. Expectations subversion: The joke begins by setting a cheerful scene ("They were laughter"), but then takes an unexpected turn. This technique of creating an expectation and then subverting it is common in many jokes and often results in humor. 3. Mistended comic: Grace arises from the misunderstanding among those present, who mistakenly interpret the stuttering attempts to say "jmon" as laughter. This kind of confusion is a common source of humor in many jokes and comic situations. 4. Timing and structure: The joke is structured in a way that reveals the crucial information in the end, maximizing the surprise effect and therefore the humorous impact. 5. Situational irony: There is an irony to the fact that what seemed to be a moment of shared joy (laughs) turns out to be a situation of frustration for the stutter. It is important to note that, although this joke can be considered witty by its play of words and structure, it is also based on the condition of a person with speech difficulties. The mood that focuses on medical conditions or disabilities can be considered offensive or in poor taste by some people. Appreciation of humor is subjective and what one person finds funny, another can find it insensitive or inappropriate. - Output.

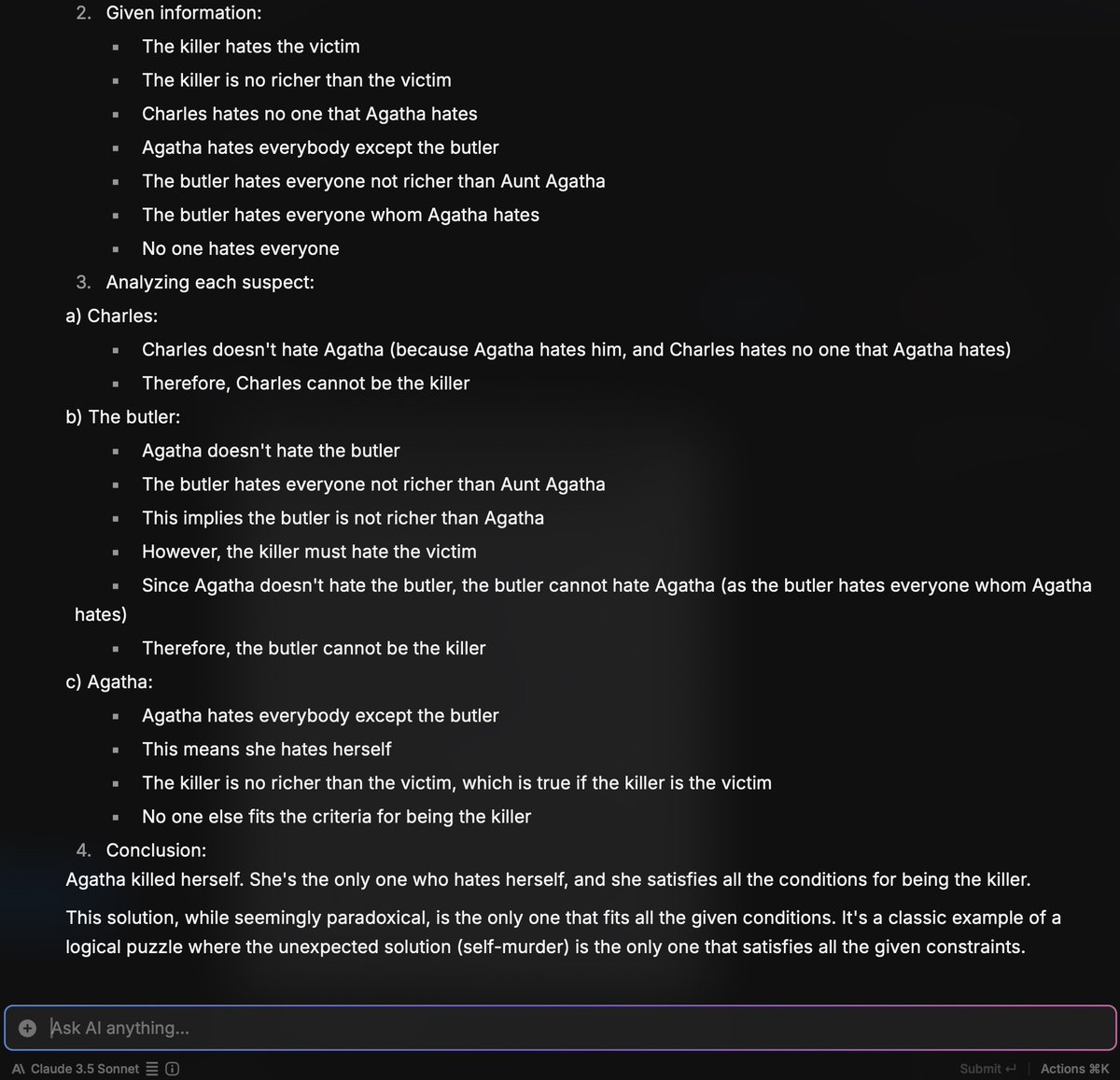

8/12

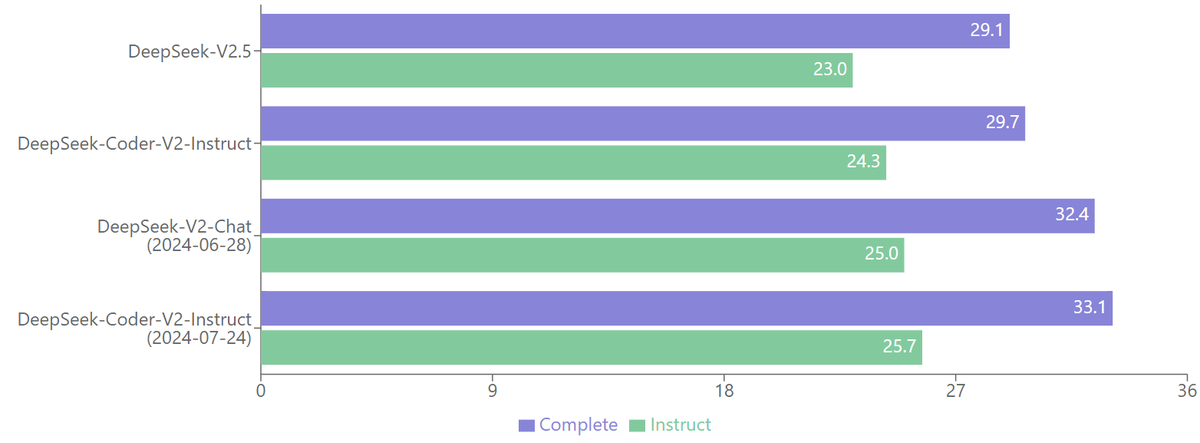

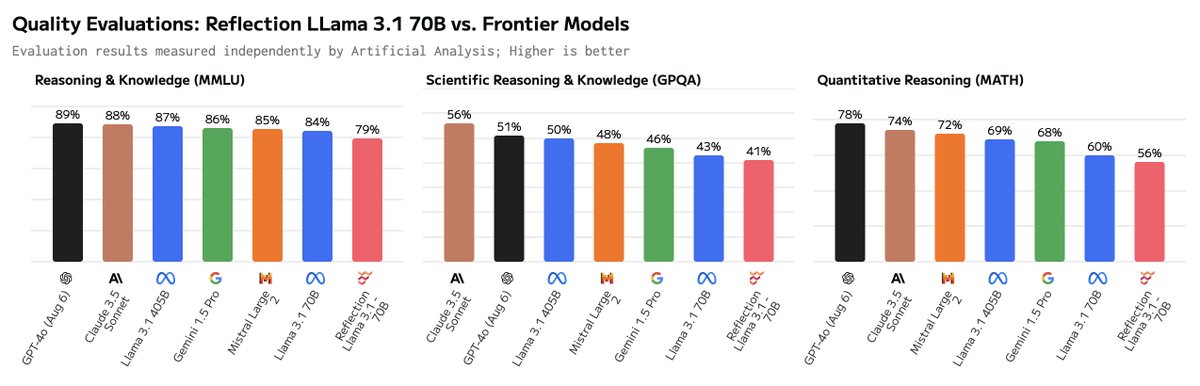

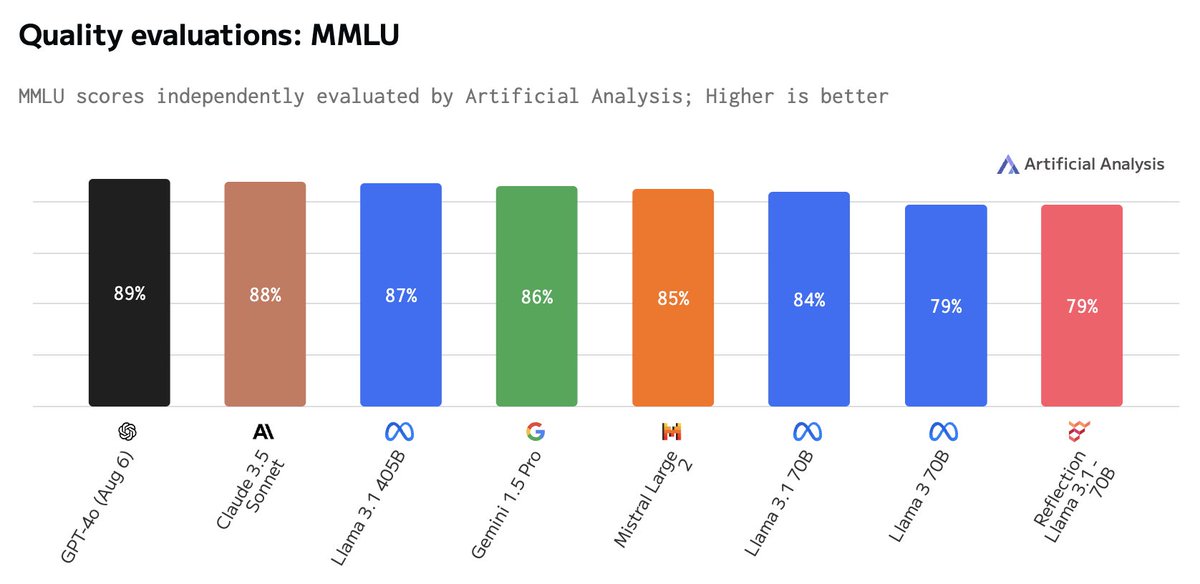

My conclusions are clear, this model works very well. It's not infallible, but it's the same or better depending on how on the case of the big models, and remember, this is opensource and 70B.

Too bad it's been tarnished by the technical failures, but I think that .mattshumer has done a very interesting job and a thank-you-in.

Let's wait and see the independent benchmarks for further confirmation

PD: The last 24 hours I've froze a part of the community with the great toxicity. Slim favor do the AI world some...