techcrunch.com

After AgentGPT’s success, Reworkd pivots to web-scraping AI agents

Maxwell Zeff

8:00 AM PDT • July 24, 2024

Image Credits: Reworkd

Reworkd’s founders went viral on GitHub last year with AgentGPT, a free tool to build AI agents that acquired more than 100,000 daily users in a week. This earned them a spot in Y Combinator’s summer 2023 cohort, but the co-founders quickly realized building general AI agents was too broad. So now Reworkd is a web-scraping company, specifically building AI agents to extract structured data from the public web.

AgentGPT provided a simple interface in a browser where users could create autonomous AI agents. Soon, everyone was raving about how agents were the future of computing.

When the tool took off, Asim Shrestha, Adam Watkins, and Srijan Subedi were still living in Canada and Reworkd didn’t exist. The massive user influx caught them off guard; Subedi, now Reworkd’s COO, said the tool was costing them $2,000 a day in API calls. For that reason, they had to create Reworkd and get funded fast. One of the most popular use cases for AgentGPT was creating web scrapers, a relatively simple but high-volume task, so Reworkd made this its singular focus.

Web scrapers have become invaluable in the AI era. The number one reason organizations use public web data in 2024 is to build AI models, according to Bright Data’s latest report. The problem is that web scrapers are traditionally built by humans and must be customized for specific web pages, making them expensive. But Reworkd’s AI agents can scrape more of the web with fewer humans in the loop.

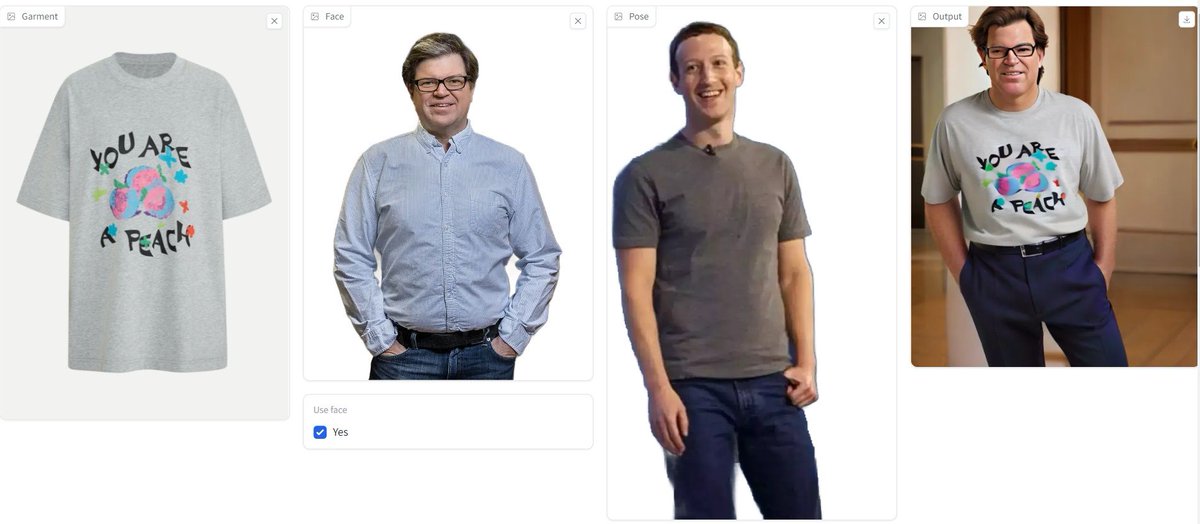

Customers can give Reworkd a list of hundreds, or even thousands, of websites to scrape and then specify the types of data they’re interested in. Then Reworkd’s AI agents use multimodal code generation to turn this into structured data. Agents generate unique code to scrape each website and extract that data for customers to use as they please.

For example, say you want stats on every NFL player, but every team’s website has a different layout. Instead of building a scraper for each website, Reworkd’s agents do that for you given just links and a description of the data you want to extract. With 32 teams, that could save you hours — but if there were 1,000 teams, it could save you weeks.
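To make the idea concrete, here is a minimal Python sketch of what "one extractor per site, one shared output format" can look like. It is an illustration only, not Reworkd's code: the two page snippets, the site names, the `PlayerStat` fields and the hand-written extractor functions are all hypothetical stand-ins for the site-specific code an agent would generate.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class PlayerStat:
    """Shared output schema (hypothetical fields chosen for illustration)."""
    name: str
    position: str


# Two made-up team pages that present the same facts with different markup.
PAGE_A = '<tr><td class="name">Alex Doe</td><td class="pos">QB</td></tr>'
PAGE_B = '<li data-player="Sam Roe" data-position="WR"></li>'


def extract_a(html: str) -> list[PlayerStat]:
    # Site A keeps players in table cells.
    pattern = r'<td class="name">(.*?)</td><td class="pos">(.*?)</td>'
    return [PlayerStat(n, p) for n, p in re.findall(pattern, html)]


def extract_b(html: str) -> list[PlayerStat]:
    # Site B keeps players in data attributes on list items.
    pattern = r'data-player="(.*?)" data-position="(.*?)"'
    return [PlayerStat(n, p) for n, p in re.findall(pattern, html)]


# Each site maps to its page content and its own extractor (assumed names).
SITES = {
    "team-a.example": (PAGE_A, extract_a),
    "team-b.example": (PAGE_B, extract_b),
}


def scrape_all() -> list[PlayerStat]:
    """Run every site's extractor and merge results into one uniform list."""
    records: list[PlayerStat] = []
    for _site, (html, extractor) in SITES.items():
        records.extend(extractor(html))
    return records


if __name__ == "__main__":
    for record in scrape_all():
        print(record)
```

The manual cost in this sketch is the pair of `extract_*` functions: every new layout needs another one, which is the work the article says Reworkd's agents take over.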

Reworkd raised a fresh $2.75 million in seed funding from Paul Graham, AI Grant (Nat Friedman and Daniel Gross’ startup accelerator), SV Angel, General Catalyst and Panache Ventures, among others, the startup exclusively told TechCrunch. Combined with a $1.25 million pre-seed investment last year from Panache Ventures and Y Combinator, this brings Reworkd’s total funding raised to date to $4 million.

AI that can use the internet

Shortly after forming Reworkd and moving to San Francisco, the team hired Rohan Pandey as a founding research engineer. He currently lives in AGI House SF, one of the Bay Area’s most popular hacker houses for the AI era. One investor described Pandey as a “one person research lab within Reworkd.”

“We see ourselves as the culmination of this 30-year dream of the Semantic Web,” said Pandey in an interview with TechCrunch, referring to a vision of World Wide Web inventor Tim Berners-Lee in which computers can read the entire internet. “Even though some websites don’t have markup, LLMs can understand the websites in the same ways that humans can, in such that we can expose basically any website as an API. So in some sense, Reworkd is like the universal API layer for the internet.”

Reworkd says it’s able to capture the long tail end of customer data needs, meaning its AI agents are specifically good for scraping thousands of smaller public websites that large competitors often skip over. Others, such as Bright Data, have scrapers for large websites like LinkedIn or Amazon already built out, but it may not be worth the trouble for a human to build a scraper for every small website. Reworkd addresses this concern, but potentially raises others.

What exactly is “public” web data?

Though web scrapers have existed for decades, they have attracted controversy in the AI era. Unfettered scraping of huge swathes of data has thrown OpenAI and Perplexity into legal trouble: News and media organizations allege the AI companies extracted intellectual property from behind a paywall, reproducing it widely without payment. Reworkd is taking precautions to avoid these issues.

“We look at it as uplifting the accessibility of publicly available information,” said Shrestha, co-founder and CEO of Reworkd, in an interview with TechCrunch. “We’re only allowing information that’s publicly available; we’re not going through sign-in walls or anything like that.”

To go a step further, Reworkd says it’s avoiding scraping news altogether, and being selective about who it works with. Watkins, the company’s CTO, says there are better tools for aggregating news content elsewhere, and it is not the company’s focus.

As an example of what is, Reworkd described its work with Axis, a company that helps policy teams comply with government regulations. Axis uses Reworkd’s AI to extract data from thousands of government regulation documents for many countries across the European Union. Axis then trains and fine-tunes an AI model based on this data and offers it to clients as a product.

Starting a web-scraping company these days could be considered wading into dangerous territory, according to Aaron Fiske, partner at Silicon Valley-based law firm Gunderson Dettmer. The landscape is somewhat fluid right now, and the jury is still out on how “public” web data really is for AI models. However, Fiske says Reworkd’s approach, where customers decide what websites to scrape, may insulate them from legal liability.

“It’s like they invented the copying machine, and there’s this one use case for making copies that turned out to be hugely economically valuable, but also legally, really questionable,” said Fiske in an interview with TechCrunch. “It’s not like web scrapers servicing AI companies is necessarily risky, but working with AI companies that are really interested in harvesting copyrighted content is maybe an issue.”

That’s why Reworkd is being careful about who it works with. Web scrapers have escaped much of the blame in potential copyright infringement cases related to AI thus far. In the OpenAI case, Fiske points out that The New York Times did not sue the web scraper that collected its articles, but rather the company that allegedly reproduced its work. But even there, it’s yet to be decided if what OpenAI did was truly copyright infringement.

There’s more evidence that web scrapers are legally in the clear during the AI boom. A court recently ruled in favor of Bright Data after it scraped Facebook and Instagram profiles via the web. One example in the court case was a dataset of 615 million records of Instagram user data, which Bright Data sells for $860,000. Meta sued the company, alleging this violated its terms of service. But a court ruled that this data is public and therefore available to scrape.

Investors think Reworkd scales with the big guys

Reworkd has attracted big names as early investors, from Y Combinator and Paul Graham to Daniel Gross and Nat Friedman. Some investors say this is because Reworkd’s technology stands to improve, and get cheaper, alongside new models. The startup says OpenAI’s GPT-4o is currently the best for its multimodal code generation and that a lot of Reworkd’s technology wasn’t possible until just a few months ago.

“If you try to compete with the rate of technology progress — not building on top of it — then I think that you’ll have a hard time as a founder,” General Catalyst’s Viet Le told TechCrunch. “Reworkd has the mindset of basing its solution on the rate of progress.”

Reworkd is creating AI agents that address a particular gap in the market; companies need more data because AI is advancing quickly. As more companies build custom AI models specific to their business, Reworkd stands to gain more customers. Fine-tuning models necessitates quality, structured data, and lots of it.

Reworkd says its approach is “self-healing,” meaning that its web scrapers won’t break down due to a web page update. The startup claims to avoid hallucination issues traditionally associated with AI models because Reworkd’s agents are generating code to scrape a website. It’s possible the AI could make a mistake and grab the wrong data from a website, but Reworkd’s team created Banana-lyzer, an open source evaluation framework, to regularly assess its accuracy.
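One simple way to check whether a scraper grabbed the right data is to compare its output with hand-verified records, field by field. The Python sketch below shows that general idea only. It does not use or describe Banana-lyzer's actual interface; the function name `field_accuracy` and the sample records are hypothetical.

```python
def field_accuracy(extracted: list[dict], expected: list[dict]) -> float:
    """Return the fraction of expected fields that the scraper got exactly right.

    Records are compared position by position. A record missing from
    `extracted` counts every one of its expected fields as wrong.
    """
    total = 0
    correct = 0
    for index, want in enumerate(expected):
        got = extracted[index] if index < len(extracted) else {}
        for key, value in want.items():
            total += 1
            if got.get(key) == value:
                correct += 1
    # With nothing to check, report a perfect score rather than divide by zero.
    return correct / total if total else 1.0


if __name__ == "__main__":
    # Made-up ground truth and scraper output for illustration.
    expected = [
        {"name": "Alex Doe", "position": "QB"},
        {"name": "Sam Roe", "position": "WR"},
    ]
    extracted = [
        {"name": "Alex Doe", "position": "QB"},
        {"name": "Sam Roe", "position": "TE"},  # one wrong field
    ]
    print(field_accuracy(extracted, expected))  # 0.75
```

Run on a schedule, a score like this would drop when a site redesign breaks an extractor, which is the kind of signal a "self-healing" system would need before regenerating its code.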

Reworkd doesn’t have a large payroll — the team is just four people — but it does have to take on considerable inference costs for running its AI agents. The startup expects its pricing to get increasingly competitive as these costs trend downward.

OpenAI just released GPT-4o mini, a smaller version of its industry-leading model with competitive benchmarks. Innovations like these could make Reworkd more competitive.

Paul Graham and AI Grant did not respond to TechCrunch’s request for comment.