1/8

SuperCoder 2.0 achieves a 33.66% success rate on SWE-bench Lite, ranking #3 globally and #1 among all open-source coding systems.

Read our Technical Report on SuperCoder's results here: SuperCoder 2.0 achieves 33.66% success rate in SWE-bench Lite, ranking #3 globally & #1 among all open-source coding systems - SuperAGI

2/8

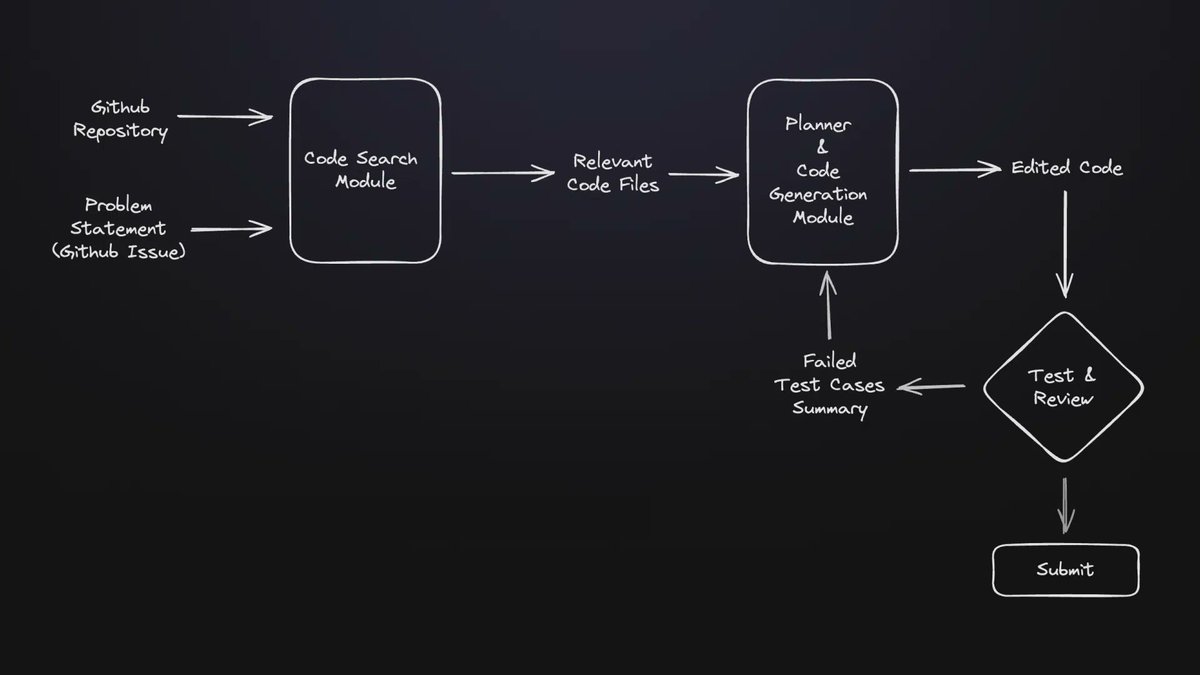

SuperCoder 2.0 combines GPT-4o and Claude 3.5 Sonnet to refine our two-part approach of Code Search and Code Generation. By pinpointing bug locations and then generating code patches for them, the system streamlines debugging in large codebases.

3/8

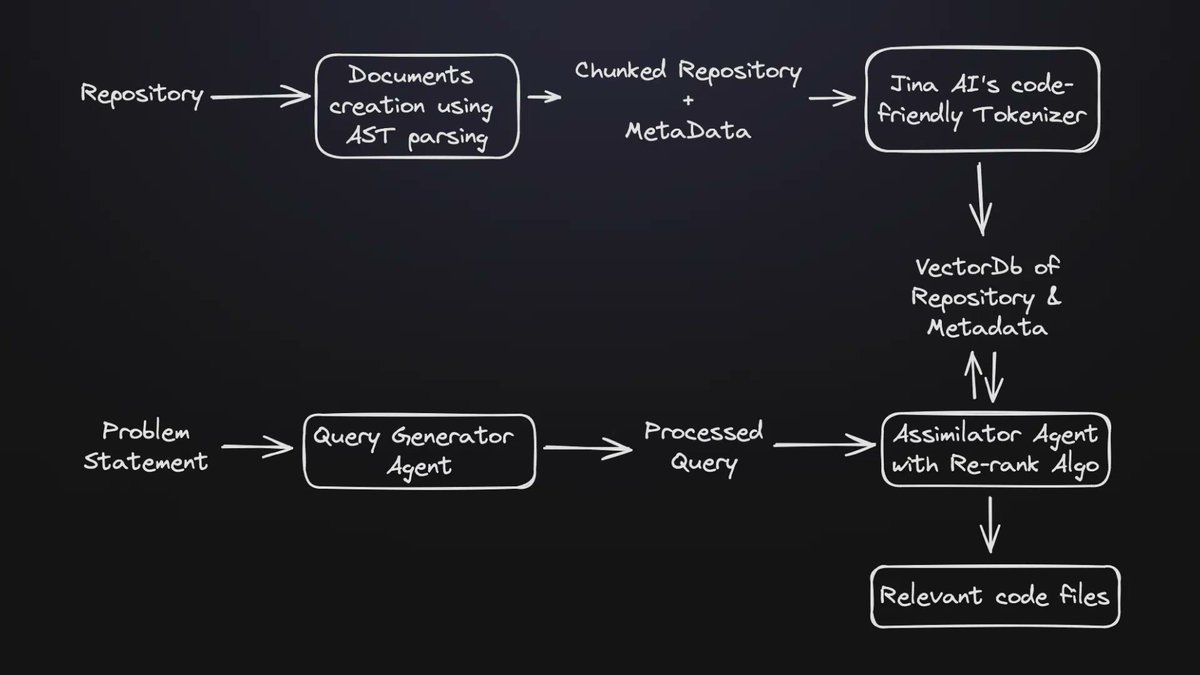

Our approach extracts detailed metadata from the codebase and stores it in a vector database to power code search. This lets us locate the code behind an issue accurately and apply patches more reliably.

4/8

We handle Python's indentation sensitivity by regenerating entire method bodies. New or revised code then integrates cleanly without introducing syntax errors, which is crucial for keeping the code functional after a patch.
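The whole-method idea can be sketched in Python as follows. This is an illustration under assumptions, not SuperCoder's code: `replace_function` is a hypothetical helper, and it swaps the full `def` block (signature and body) for a regenerated one, re-indents it to match the original, and re-parses the result to catch syntax errors.

```python
import ast
import textwrap


def replace_function(source: str, name: str, new_code: str) -> str:
    """Swap the function `name` in `source` for `new_code`, keeping its indentation."""
    target = next(
        (n for n in ast.walk(ast.parse(source))
         if isinstance(n, (ast.FunctionDef, ast.AsyncFunctionDef)) and n.name == name),
        None,
    )
    if target is None:
        raise ValueError(f"function {name!r} not found")

    lines = source.splitlines()
    start = target.lineno - 1  # index of the `def` line; decorators above it stay
    # Reuse the original leading whitespace so tabs or spaces are preserved.
    indent = lines[start][:target.col_offset]
    new_lines = textwrap.indent(
        textwrap.dedent(new_code).strip("\n"), indent
    ).splitlines()

    patched = "\n".join(lines[:start] + new_lines + lines[target.end_lineno:]) + "\n"
    ast.parse(patched)  # raises SyntaxError if the patch broke the file
    return patched
```

Because the replacement covers the entire definition, the generated code only has to be internally consistent; the helper handles alignment with the enclosing class or module.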

5/8

Try SuperCoder now: SuperAGI - Open-source Autonomous Software Systems - SuperAGI

6/8

Impressive results! I'll take a look at the technical report to learn more about SuperCoder 2.0's success in SWE-Bench Lite. Keep up the great work!

Let's connect.

7/8

An impressive achievement for SuperCoder 2.0!

8/8

Hello, would you like to promote your account? We can promote your account and increase your followers, and we help you build a strong, effective account at a very attractive price. Send me a private message for prices.

#HisMan3 #Schoof #ssmonth24 #Bitcoin

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196
