Exclusive: OpenAI working on new reasoning technology under code name ‘Strawberry’

By Anna Tong and Katie Paul

July 12, 2024, 5:23 PM EDT

OpenAI logo is seen in this illustration taken May 20, 2024. REUTERS/Dado Ruvic/Illustration/File Photo

July 12 - ChatGPT maker OpenAI is working on a novel approach to its artificial intelligence models in a project code-named “Strawberry,” according to a person familiar with the matter and internal documentation reviewed by Reuters.

The project, details of which have not been previously reported, comes as the Microsoft-backed startup races to show that the types of models it offers are capable of delivering advanced reasoning capabilities.

Teams inside OpenAI are working on Strawberry, according to a copy of a recent internal OpenAI document seen by Reuters in May. Reuters could not ascertain the precise date of the document, which details a plan for how OpenAI intends to use Strawberry to perform research. The source described the plan to Reuters as a work in progress. The news agency could not establish how close Strawberry is to being publicly available.

How Strawberry works is a tightly kept secret even within OpenAI, the person said.

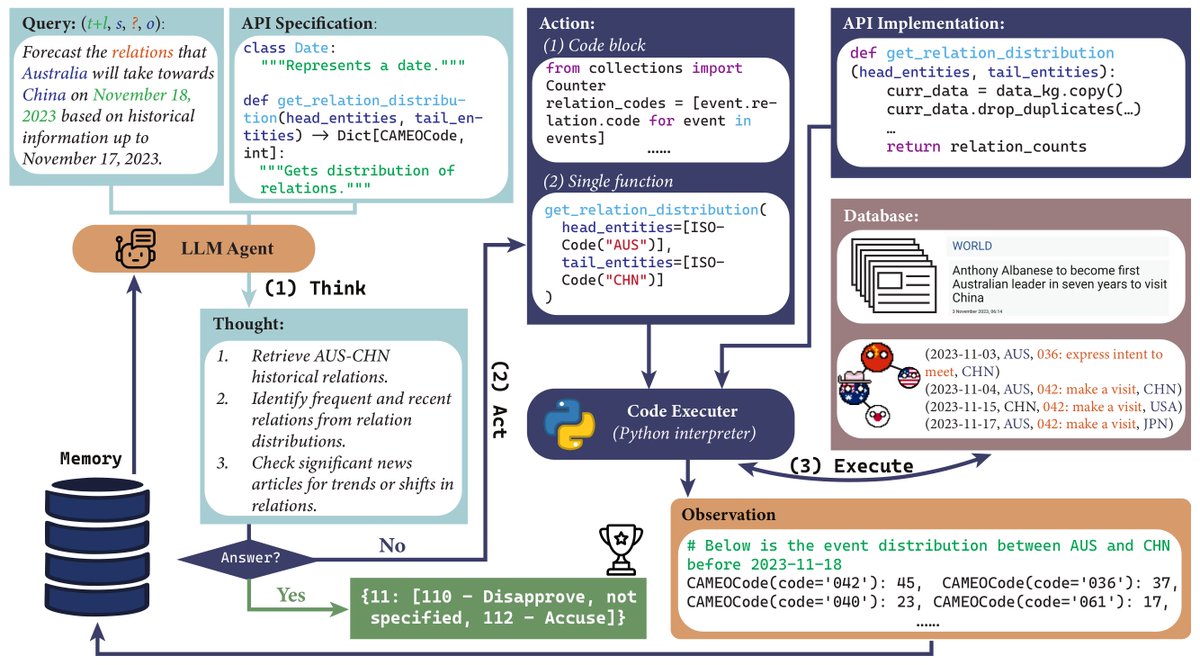

The document describes a project that uses Strawberry models with the aim of enabling the company’s AI to not just generate answers to queries but to plan ahead enough to navigate the internet autonomously and reliably to perform what OpenAI terms “deep research,” according to the source.

This is something that has eluded AI models to date, according to interviews with more than a dozen AI researchers.

Asked about Strawberry and the details reported in this story, an OpenAI company spokesperson said in a statement: “We want our AI models to see and understand the world more like we do. Continuous research into new AI capabilities is a common practice in the industry, with a shared belief that these systems will improve in reasoning over time.”

The spokesperson did not directly address questions about Strawberry.

The Strawberry project was formerly known as Q*, which Reuters reported last year was already seen inside the company as a breakthrough.

Two sources described viewing earlier this year what OpenAI staffers told them were Q* demos, capable of answering tricky science and math questions out of reach of today’s commercially available models.

On Tuesday at an internal all-hands meeting, OpenAI showed a demo of a research project that it claimed had new human-like reasoning skills, according to Bloomberg. An OpenAI spokesperson confirmed the meeting but declined to give details of the contents. Reuters could not determine if the project demonstrated was Strawberry.

OpenAI hopes the innovation will improve its AI models’ reasoning capabilities dramatically, the person familiar with it said, adding that Strawberry involves a specialized way of processing an AI model after it has been pre-trained on very large datasets.

Researchers Reuters interviewed say that reasoning is key to AI achieving human or super-human-level intelligence.

While large language models can already summarize dense texts and compose elegant prose far more quickly than any human, the technology often falls short on common sense problems whose solutions seem intuitive to people, like recognizing logical fallacies and playing tic-tac-toe. When the model encounters these kinds of problems, it often “hallucinates” bogus information.

AI researchers interviewed by Reuters generally agree that reasoning, in the context of AI, involves the formation of a model that enables AI to plan ahead, reflect how the physical world functions, and work through challenging multi-step problems reliably.

Improving reasoning in AI models is seen as the key to unlocking the ability for the models to do everything from making major scientific discoveries to planning and building new software applications.

OpenAI CEO Sam Altman said earlier this year that in AI “the most important areas of progress will be around reasoning ability.”

Other companies like Google, Meta and Microsoft are likewise experimenting with different techniques to improve reasoning in AI models, as are most academic labs that perform AI research. Researchers differ, however, on whether large language models (LLMs) are capable of incorporating ideas and long-term planning into how they do prediction. For instance, one of the pioneers of modern AI, Yann LeCun, who works at Meta, has frequently said that LLMs are not capable of humanlike reasoning.

AI CHALLENGES

Strawberry is a key component of OpenAI’s plan to overcome those challenges, the source familiar with the matter said. The document seen by Reuters described what Strawberry aims to enable, but not how.

In recent months, the company has privately been signaling to developers and other outside parties that it is on the cusp of releasing technology with significantly more advanced reasoning capabilities, according to four people who have heard the company’s pitches. They declined to be identified because they are not authorized to speak about private matters.

Strawberry includes a specialized way of what is known as “post-training” OpenAI’s generative AI models, or adapting the base models to hone their performance in specific ways after they have already been “trained” on reams of generalized data, one of the sources said.

The post-training phase of developing a model involves methods like “fine-tuning,” a process used on nearly all language models today that comes in many flavors, such as having humans give feedback to the model based on its responses and feeding it examples of good and bad answers.

Strawberry has similarities to a method developed at Stanford in 2022 called “Self-Taught Reasoner” or “STaR,” one of the sources with knowledge of the matter said. STaR enables AI models to “bootstrap” themselves into higher intelligence levels by iteratively creating their own training data, and in theory could be used to get language models to transcend human-level intelligence, one of its creators, Stanford professor Noah Goodman, told Reuters.

“I think that is both exciting and terrifying…if things keep going in that direction we have some serious things to think about as humans,” Goodman said. Goodman is not affiliated with OpenAI and is not familiar with Strawberry.
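For readers who want a concrete picture of the bootstrapping idea behind STaR, here is a toy sketch in Python. It is an illustration only: it says nothing about how Strawberry works, which the document seen by Reuters does not describe. The "model" is a hypothetical probability table over two made-up reasoning strategies rather than a language model, and "fine-tuning" is reduced to re-estimating that table from the attempts that reached the right answer. The published STaR method also includes a step that hints the model with the correct answer, which this sketch leaves out. All names (`STRATEGIES`, `star_iteration`, `run_star`) are invented for the example.

```python
import random

# Hypothetical "reasoning strategies" standing in for model-written rationales.
STRATEGIES = {
    "add": lambda a, b: a + b,               # sound reasoning for a sum
    "concat": lambda a, b: int(f"{a}{b}"),   # flawed reasoning (glues digits)
}


def sample_strategy(weights, rng):
    """Sample one strategy name according to the current model weights."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def star_iteration(weights, problems, rng, samples_per_problem=4):
    """One bootstrap round: generate attempts, keep the ones whose final
    answer is correct, then 're-train' on the kept attempts."""
    kept = []
    for a, b, answer in problems:
        for _ in range(samples_per_problem):
            strategy = sample_strategy(weights, rng)
            if STRATEGIES[strategy](a, b) == answer:
                kept.append(strategy)  # self-generated training example

    # Toy "fine-tuning": re-estimate weights from kept examples,
    # with add-one smoothing so no strategy drops to exactly zero.
    counts = {name: 1.0 for name in weights}
    for strategy in kept:
        counts[strategy] += 1.0
    total = sum(counts.values())
    return {name: c / total for name, c in counts.items()}, len(kept)


def run_star(rounds=3, seed=0):
    """Run several rounds and record the weight of the sound strategy."""
    rng = random.Random(seed)
    problems = [(2, 3, 5), (10, 7, 17), (4, 4, 8)]  # (a, b, a + b)
    weights = {"add": 0.5, "concat": 0.5}
    history = [weights["add"]]
    for _ in range(rounds):
        weights, _ = star_iteration(weights, problems, rng)
        history.append(weights["add"])
    return history


if __name__ == "__main__":
    print(run_star())
```

Because only attempts that reach the correct answer are kept, the weight on the sound strategy never falls below its starting value in this toy setup. That is the sense in which the model creates its own training data from one round to the next.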

One of the capabilities OpenAI is targeting with Strawberry is performing long-horizon tasks (LHT), the document says. The term refers to complex tasks that require a model to plan ahead and perform a series of actions over an extended period of time, the first source explained.

To do so, OpenAI is creating, training and evaluating the models on what the company calls a “deep-research” dataset, according to the OpenAI internal documentation. Reuters was unable to determine what is in that dataset or how long an extended period would mean.

OpenAI specifically wants its models to use these capabilities to conduct research by browsing the web autonomously with the assistance of a “CUA,” or a computer-using agent, that can take actions based on its findings, according to the document and one of the sources. OpenAI also plans to test its capabilities on doing the work of software and machine learning engineers.

Reporting by Anna Tong in San Francisco and Katie Paul in New York; editing by Ken Li and Claudia Parsons