The A.I Megathread (LLM , GPT , Development)

That's one wild ass quote

that old fool will be in the ground by the time agi becomes an existential threat

Well, if we're to believe most experts in the field, AGI will be developed in 2029. I hope it isn't an existential threat. I'm more worried about how humans will use it than I am about some Skynet/Terminator situation.

OpenAI is expected to release a 'materially better' GPT-5 for its chatbot mid-year, sources say

The model that underlies the popular ChatGPT generative AI tool is said to be soon getting an upgrade.

Kali Hays and Darius Rafieyan, Mar 19, 2024, 6:35 PM EDT

Sam Altman (Justin Sullivan/Getty Images)

- OpenAI's main product is the popular generative AI tool ChatGPT.

- Since its last major model upgrade to GPT-4, the tool has run into performance issues.

- The next version of the model, GPT-5, is set to come out soon. It's said to be "materially better."

OpenAI is poised to release in the coming months the next version of its model for ChatGPT, the generative AI tool that kicked off the current wave of AI projects and investments.

The generative AI company helmed by Sam Altman is on track to put out GPT-5 sometime mid-year, likely during summer, according to two people familiar with the company. Some enterprise customers have recently received demos of the latest model and its related enhancements to the ChatGPT tool, another person familiar with the process said. These people, whose identities Business Insider has confirmed, asked to remain anonymous so they could speak freely.

"It's really good, like materially better," said one CEO who recently saw a version of GPT-5. OpenAI demonstrated the new model with use cases and data unique to his company, the CEO said. He said the company also alluded to other as-yet-unreleased capabilities of the model, including the ability to call AI agents being developed by OpenAI to perform tasks autonomously.

The company does not yet have a set release date for the new model, meaning current internal expectations for its release could change.

OpenAI is still training GPT-5, one of the people familiar said. After training is complete, it will be safety tested internally and further "red teamed," a process where employees and typically a selection of outsiders challenge the tool in various ways to find issues before it's made available to the public. There is no specific timeframe when safety testing needs to be completed, one of the people familiar noted, so that process could delay any release date.

Spokespeople for the company did not respond to an email requesting comment.

Sales to enterprise customers, which pay OpenAI for an enhanced version of ChatGPT for their work, are the company's main revenue stream as it builds out its business and Altman builds his growing AI empire.

OpenAI released its last major update to ChatGPT a year ago. GPT-4 was billed as being much faster and more accurate in its responses than its previous model GPT-3. OpenAI later in 2023 released GPT-4 Turbo, part of an effort to cure an issue sometimes referred to as "laziness" because the model would sometimes refuse to answer prompts.

Large language models like those of OpenAI are trained on massive sets of data scraped from across the web to respond to user prompts in an authoritative tone that evokes human speech patterns. That tone, along with the quality of the information it provides, can degrade depending on what training data is used for updates or other changes OpenAI may make in its development and maintenance work.

Several forums on Reddit have been dedicated to complaints of GPT-4 degradation and worse outputs from ChatGPT. People inside OpenAI hope GPT-5 will be more reliable and will impress the public and enterprise customers alike, one of the people familiar said.

Much of the most crucial training data for AI models is technically owned by copyright holders. OpenAI, along with many other tech companies, has argued against updated federal rules for how LLMs access and use such material.

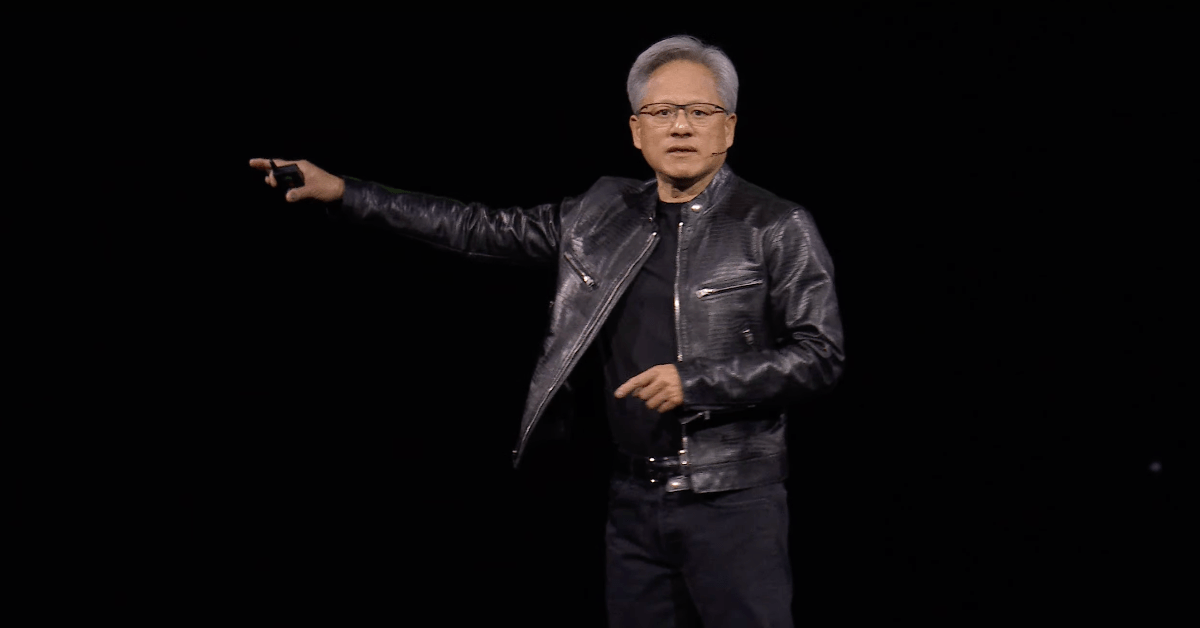

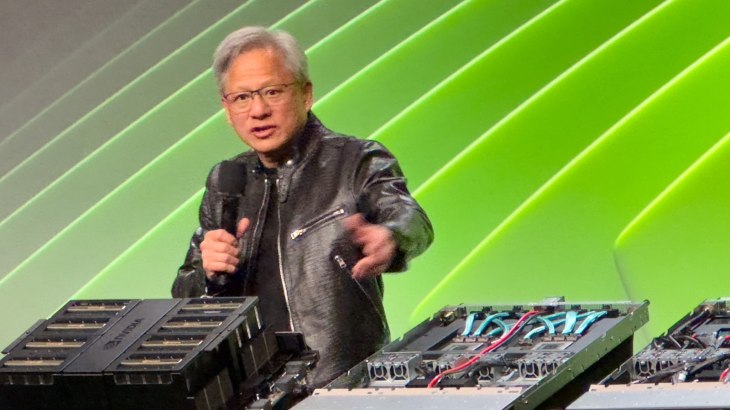

Nvidia's Jensen Huang says AI hallucinations are solvable, artificial general intelligence is 5 years away | TechCrunch

Haje Jan Kamps @Haje / 5:13 PM EDT•March 19, 2024

Image Credits: Haje Jan Kamps

Artificial general intelligence (AGI) — often referred to as “strong AI,” “full AI,” “human-level AI” or “general intelligent action” — represents a significant future leap in the field of artificial intelligence. Unlike narrow AI, which is tailored for specific tasks, such as detecting product flaws, summarizing the news, or building you a website, AGI will be able to perform a broad spectrum of cognitive tasks at or above human levels. Addressing the press this week at Nvidia’s annual GTC developer conference, CEO Jensen Huang appeared to be getting really bored of discussing the subject — not least because he finds himself misquoted a lot, he says.

The frequency of the question makes sense: The concept raises existential questions about humanity’s role in and control of a future where machines can outthink, outlearn and outperform humans in virtually every domain. The core of this concern lies in the unpredictability of AGI’s decision-making processes and objectives, which might not align with human values or priorities (a concept explored in-depth in science fiction since at least the 1940s). There’s concern that once AGI reaches a certain level of autonomy and capability, it might become impossible to contain or control, leading to scenarios where its actions cannot be predicted or reversed.

When sensationalist press asks for a timeframe, it is often baiting AI professionals into putting a timeline on the end of humanity — or at least the current status quo. Needless to say, AI CEOs aren’t always eager to tackle the subject.

Huang, however, spent some time telling the press what he does think about the topic. Predicting when we will see a passable AGI depends on how you define AGI, Huang argues, and draws a couple of parallels: Even with the complications of time zones, you know when New Year happens and 2025 rolls around. If you’re driving to the San Jose Convention Center (where this year’s GTC conference is being held), you generally know you’ve arrived when you can see the enormous GTC banners. The crucial point is that we can agree on how to measure that you’ve arrived, whether temporally or geospatially, where you were hoping to go.

“If we specified AGI to be something very specific, a set of tests where a software program can do very well — or maybe 8% better than most people — I believe we will get there within 5 years,” Huang explains. He suggests that the tests could be a legal bar exam, logic tests, economic tests or perhaps the ability to pass a pre-med exam. Unless the questioner is able to be very specific about what AGI means in the context of the question, he’s not willing to make a prediction. Fair enough.

AI hallucination is solvable

In Tuesday’s Q&A session, Huang was asked what to do about AI hallucinations: the tendency for some AIs to make up answers that sound plausible but aren’t based in fact. He appeared visibly frustrated by the question, and suggested that hallucinations are easily solvable by making sure that answers are well-researched. “Add a rule: For every single answer, you have to look up the answer,” Huang says, referring to this practice as “retrieval-augmented generation,” describing an approach very similar to basic media literacy: Examine the source and the context. Compare the facts contained in the source to known truths, and if the answer is factually inaccurate, even partially, discard the whole source and move on to the next one. “The AI shouldn’t just answer; it should do research first to determine which of the answers are the best.”

For mission-critical answers, such as health advice or similar, Nvidia’s CEO suggests that perhaps checking multiple resources and known sources of truth is the way forward. Of course, this means that the generator that is creating an answer needs to have the option to say, “I don’t know the answer to your question,” or “I can’t get to a consensus on what the right answer to this question is,” or even something like “Hey, the Super Bowl hasn’t happened yet, so I don’t know who won.”
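The "look it up first, and be allowed to say I don't know" idea described above can be sketched in a few lines. This is only a toy illustration, not how Nvidia or any production retrieval-augmented system works: the `SOURCES` list, the `retrieve` and `answer` helpers, and the `min_sources` / `min_agreement` thresholds are all hypothetical names made up for this example, and a real system would query a search index and feed the retrieved passages to a language model.

```python
from collections import Counter

# Hypothetical in-memory "sources"; a real system would query a search index.
SOURCES = [
    {"id": "almanac", "question": "capital of france", "answer": "Paris"},
    {"id": "atlas", "question": "capital of france", "answer": "Paris"},
    {"id": "blog", "question": "capital of france", "answer": "Lyon"},
    {"id": "atlas", "question": "capital of australia", "answer": "Canberra"},
]

DONT_KNOW = "I don't know the answer to your question."
NO_CONSENSUS = "I can't get to a consensus on what the right answer is."


def retrieve(question):
    """Return every source entry whose question matches (toy exact match)."""
    key = question.lower().strip(" ?")
    return [s for s in SOURCES if s["question"] == key]


def answer(question, min_sources=2, min_agreement=0.6):
    """Answer only when enough retrieved sources agree; otherwise abstain."""
    hits = retrieve(question)
    if len(hits) < min_sources:
        # Too little evidence was found: abstain instead of guessing.
        return DONT_KNOW
    votes = Counter(h["answer"] for h in hits)
    best, count = votes.most_common(1)[0]
    if count / len(hits) < min_agreement:
        # Sources disagree too much to trust any single answer.
        return NO_CONSENSUS
    return best


print(answer("Capital of France?"))
print(answer("Who won the Super Bowl?"))
```

Raising `min_agreement` (say to 0.9) makes the France question fall back to the no-consensus reply, which is the stricter behavior suggested for mission-critical answers.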

1/4

GPT4 was trained on only about 10T tokens!

30 billion quadrillion == 3e25

Note: 3e25 BFloat16 FLOPS at 40% MFU on H100s is about 7.5e10sec ie 21M H100 hours. This is about 1300h on 16k H100s (less than 2 months)
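For anyone who wants to check the GPU-time note, here is a quick back-of-envelope script. Two inputs are my assumptions rather than anything stated in the post: the dense BF16 peak of roughly 989 TFLOP/s per H100, and reading "16k" as 16,000 GPUs. The function name `training_time` is also just for illustration.

```python
# Back-of-envelope check of the GPU-time estimate quoted in the post.
TOTAL_FLOPS = 3e25        # training compute quoted in the post
H100_PEAK_BF16 = 989e12   # ASSUMPTION: dense BF16 peak per H100, in FLOP/s
MFU = 0.40                # model FLOPs utilization quoted in the post
NUM_GPUS = 16_000         # ASSUMPTION: "16k" read as 16,000 GPUs


def training_time(total_flops, peak_flops, mfu, num_gpus):
    """Return (GPU-seconds, GPU-hours, wall-clock hours) for a training run."""
    gpu_seconds = total_flops / (peak_flops * mfu)
    gpu_hours = gpu_seconds / 3600
    wall_hours = gpu_hours / num_gpus
    return gpu_seconds, gpu_hours, wall_hours


gpu_seconds, gpu_hours, wall_hours = training_time(
    TOTAL_FLOPS, H100_PEAK_BF16, MFU, NUM_GPUS
)
print(f"GPU-seconds: {gpu_seconds:.2e}")
print(f"GPU-hours:   {gpu_hours / 1e6:.1f}M")
print(f"Wall clock:  {wall_hours:.0f} h (~{wall_hours / 24:.0f} days)")
```

Under those assumptions the numbers land close to the post's 7.5e10 seconds, 21M H100 hours and roughly 1300 hours of wall-clock time.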

Token math:

Previous leaks have verified that GPT4 is a 8x topk=2 MoE

Assume attention uses 1/2 the params of each expert

params per attn block: ppab + 8x (2x ppab) = 1.8e12 => ppab = 105.88e9

per tok params used in fwd pass: p_fwd = ppab + 2x (2x ppab) = 529.4e9

FLOPs per tok = 3 * 2 * p_fwd = 3.2e12

3e25 FLOPS / 3.2e12 FLOPS/tok = 9.4e12 tok (rounds to 10T tok)
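The token math above is easy to replay in code. This sketch only restates the post's own assumptions (8 experts, top-2 routing, attention at half the size of one expert, 6 FLOPs per active parameter per token); the function and variable names are mine, and none of this is a confirmed description of GPT-4.

```python
# Replays the token-count estimate from the post under its stated assumptions.
TOTAL_PARAMS = 1.8e12       # parameter count quoted from Jensen Huang
TOTAL_FLOPS = 3e25          # training compute quoted from Jensen Huang
NUM_EXPERTS = 8             # post's assumption: 8-expert MoE
TOP_K = 2                   # post's assumption: 2 experts active per token
EXPERT_TO_ATTN_RATIO = 2    # post's assumption: attention = 1/2 of an expert


def estimate_tokens(total_params, total_flops, num_experts, top_k, ratio):
    """Return (ppab, p_fwd, flops_per_token, tokens) for the MoE estimate."""
    # total = attention + num_experts * expert, with expert = ratio * attention
    ppab = total_params / (1 + num_experts * ratio)
    # Only top_k experts run per token, plus the shared attention params.
    p_fwd = ppab * (1 + top_k * ratio)
    # 2 FLOPs per active param for the forward pass, times 3 for fwd + bwd.
    flops_per_token = 3 * 2 * p_fwd
    tokens = total_flops / flops_per_token
    return ppab, p_fwd, flops_per_token, tokens


ppab, p_fwd, flops_per_token, tokens = estimate_tokens(
    TOTAL_PARAMS, TOTAL_FLOPS, NUM_EXPERTS, TOP_K, EXPERT_TO_ATTN_RATIO
)
print(f"ppab            = {ppab:.4e}")
print(f"p_fwd           = {p_fwd:.4e}")
print(f"FLOPs per token = {flops_per_token:.2e}")
print(f"tokens          = {tokens:.2e}")
```

The result is sensitive to the assumed attention-to-expert split, so treat the roughly 10T figure as an order-of-magnitude estimate.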

2/4

Jensen Huang: OpenAI's latest model has 1.8 trillion parameters and required 30 billion quadrillion FLOPS to train

3/4

@allen_ai, @soldni dolma 10T when?

1/4

Presenting Starling-LM-7B-beta, our cutting-edge 7B language model fine-tuned with RLHF!

Also introducing Starling-RM-34B, a Yi-34B-based reward model trained on our Nectar dataset, surpassing our previous 7B RM in all benchmarks.

We've fine-tuned the latest Openchat model with the 34B reward model, achieving MT Bench score of 8.12 while being much better at hard prompts compared to Starling-LM-7B-alpha in internal benchmarks. Testing will soon be available on @lmsysorg. Please stay tuned!

HuggingFace links:

Starling-LM-7B-beta: Nexusflow/Starling-LM-7B-beta · Hugging Face

Starling-RM-34B: Nexusflow/Starling-RM-34B · Hugging Face

Discord Link: Join the Nexusflow Discord server!

Since the release of Starling-LM-7B-alpha, we've received numerous requests to make the model commercially viable. Therefore, we're licensing all models and datasets under Apache-2.0, with the condition that they are not used to compete with OpenAI. Enjoy!

2/4

Thank you! I guess larger model as RM naturally has some advantage. But you’ll see some rigorous answer very soon on twitter ;)

3/4

Yes, sorry we delayed that a bit since we are refactoring the code. But hopefully the code and paper will be out soon!

4/4

Yes, please stay tuned!

1/1

Exciting Update: Moirai is now open source!

Foundation models for time series forecasting are gaining momentum! If you’ve been eagerly awaiting the release of Moirai since our paper (Unified Training of Universal Time Series Forecasting Transformers) dropped, here’s some great news!

Today, we’re excited to share that the code, data, and model weights for our paper have been officially released. Let’s fuel the advancement of foundation models for time series forecasting together!

Code: GitHub - SalesforceAIResearch/uni2ts: Unified Training of Universal Time Series Forecasting Transformers

LOTSA data: Salesforce/lotsa_data · Datasets at Hugging Face (of course via @huggingface)

Paper: [2402.02592] Unified Training of Universal Time Series Forecasting Transformers

Blog post: Moirai: A Time Series Foundation Model for Universal Forecasting

Model: Moirai-R models - a Salesforce Collection

@woo_gerald @ChenghaoLiu15 @silviocinguetta @CaimingXiong @doyensahoo

1/4

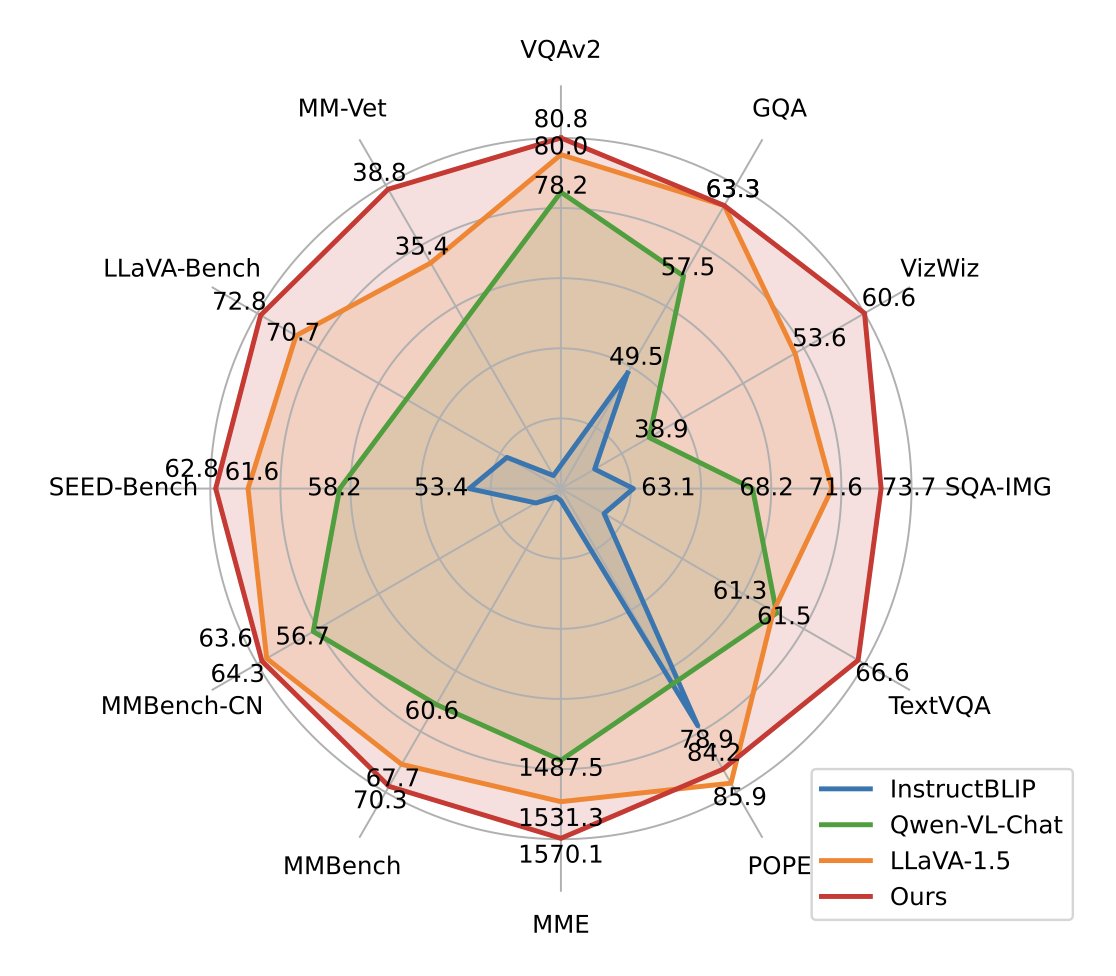

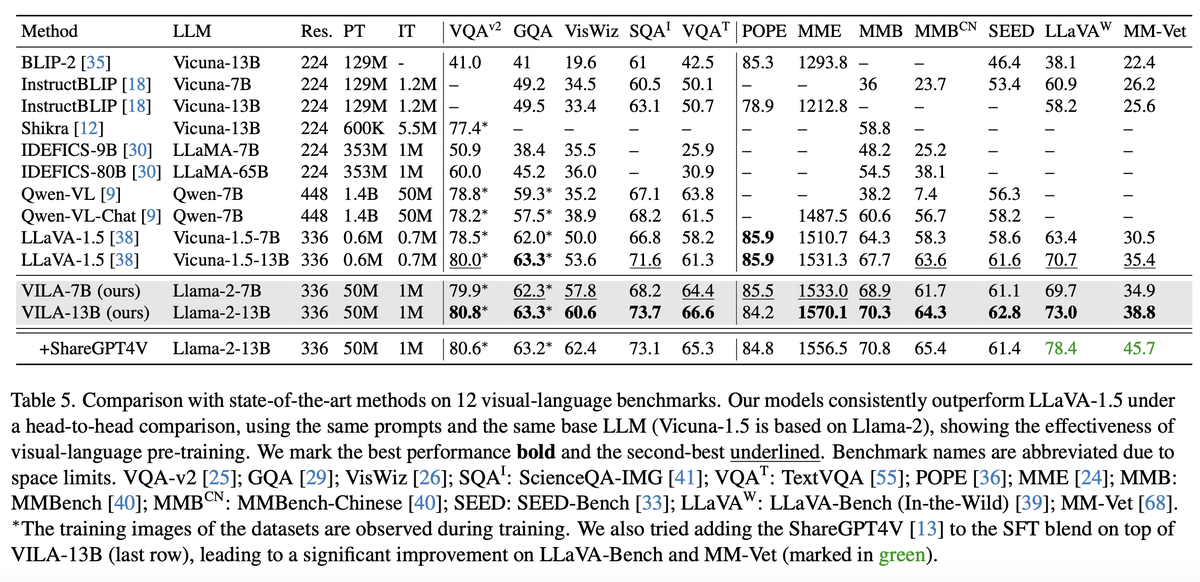

VILA by @NVIDIAAI & @MIT

> 13B, 7B and 2.7B model checkpoints.

> Beats the current SoTA models like QwenVL.

> Interleaved Vision + Text pre-training.

> Followed by joint SFT.

> Works with AWQ for 4-bit inference.

Models on the Hugging Face Hub: https://huggingface.co/collections/...anguage-models-65d8022a3a52cd9bcd62698e

2/4

All the model checkpoints here:

3/4

The benchmarks look quite strong:

4/4

Paper for those interested:
