1/2

today in AI:

1/ Gemini 1.5 Pro is now open to all in Google’s AI Studio. It’s soon coming to API as well. This is @Google’s model with 1M context length.

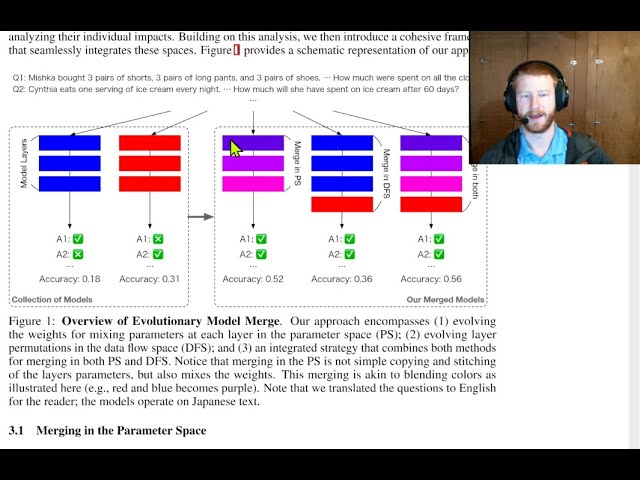

2/ Sakana AI releases its report on merging foundation models with an approach inspired by natural evolution. Sakana AI was co-founded by former Google researchers, including Llion Jones, a co-author of Google’s transformer paper. It is focused on making nature-inspired LLMs.

2/2

We're interviewing people who use AI at work, and this week @bentossell interviewed a solo founder building an AI company, with AI.

It’s fascinating to see how efficient people can be by leveraging AI

Full story:

1/7

Everyone can now try Google Gemini 1.5 Pro in Google AI Studio. There's no more waiting list.

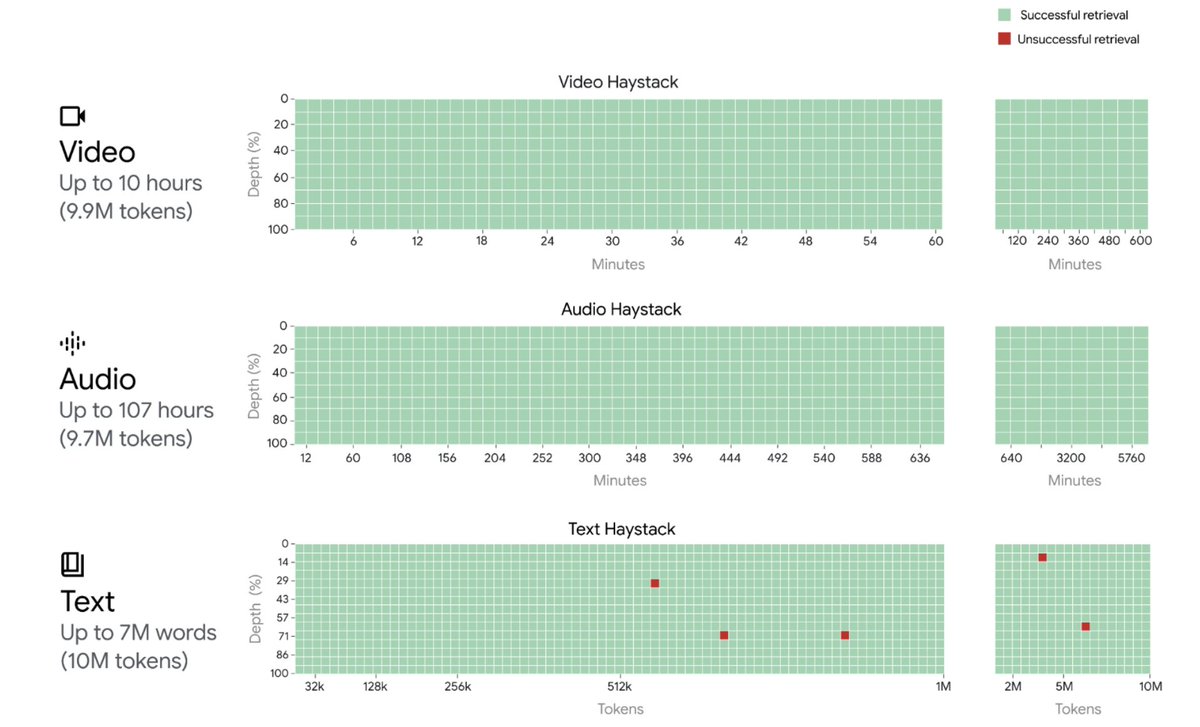

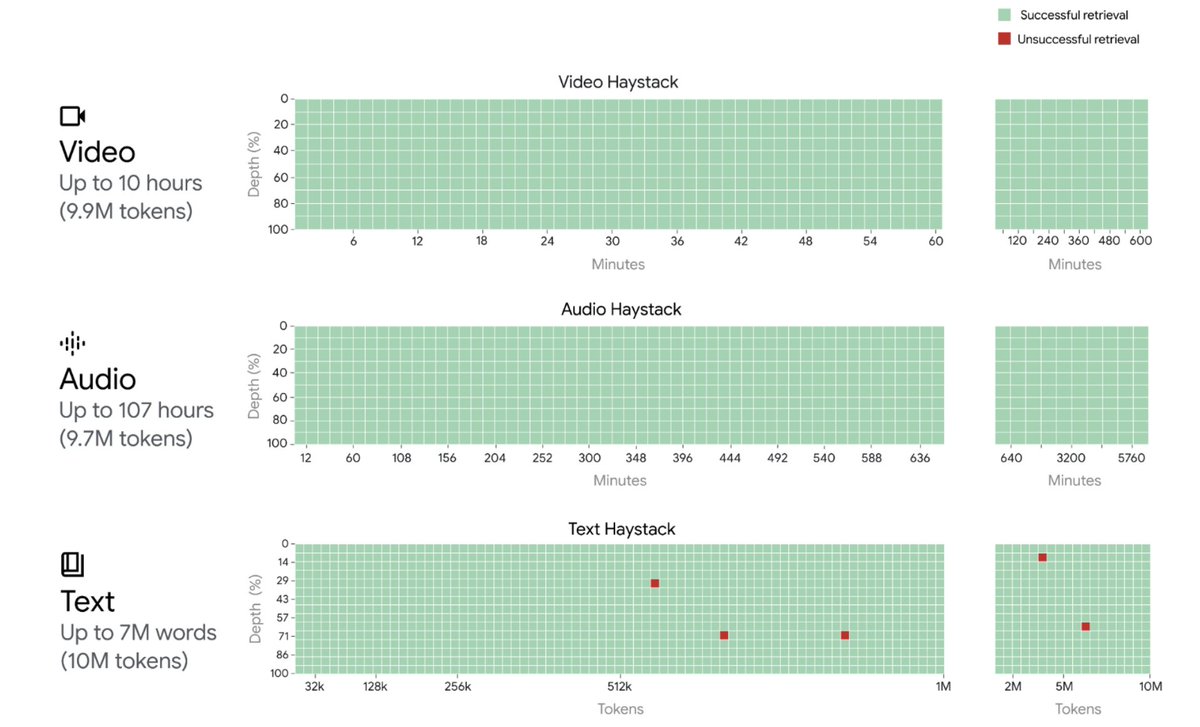

Also, Gemini 1.5 Pro can handle 10M tokens on all modalities.

h/t: Oriol Vinyals.

2/7

We are rolling out Gemini 1.5 Pro API so that you can keep building amazing stuff on top of the model like we've seen in the past few weeks.

Also, if you just want to play with Gemini 1.5, we removed the waitlist: http://aistudio.google.com Last, but not least, we pushed the model…

3/7

Go and enjoy now..

4/7

Damn.. More like regional issue.. They have lifted the waitlist.

5/7

Sad, man.. I dunno what the problem is, but in some regions it's not enabled, maybe due to govt regulation.

6/7

Okay, my bad.. I mean, if the product itself is not available, then how can you use it..

7/7

Lol they already did once recently

1/1

Gemini 1.5 pro with 1m tokens is now available for free at Google AI Studio let's goooo

I just thought "haha stupid AI, there's nothing like this word for machine in the grammar" and it... outplayed me
