You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

The A.I Megathread (LLM , GPT , Development)

More options

Who Replied?

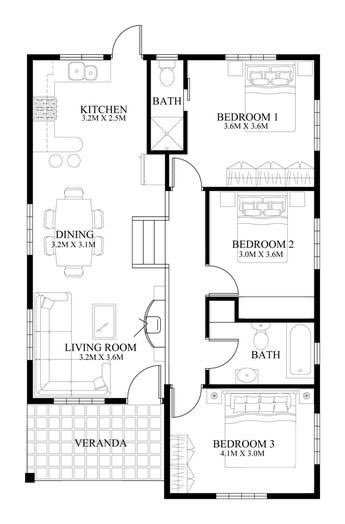

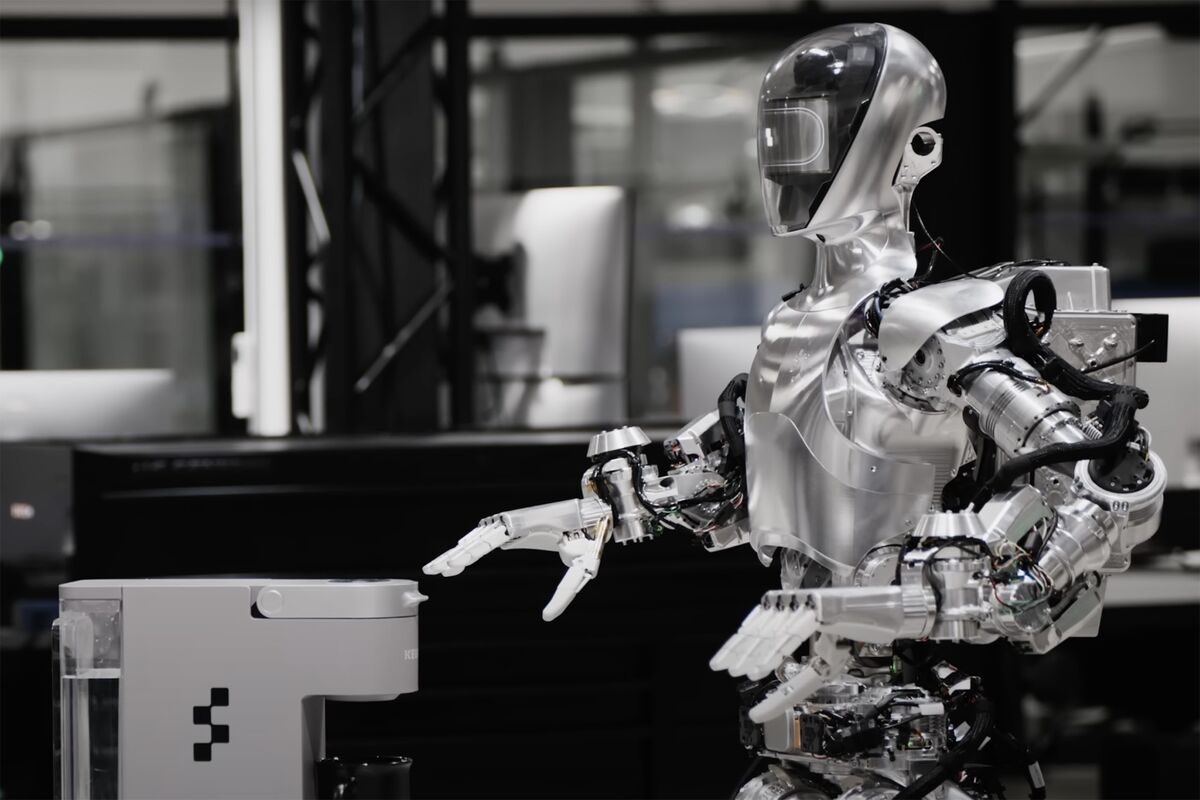

Bezos, Nvidia Join OpenAI in Funding Humanoid Robot Startup

Jeff Bezos, Nvidia Corp. and other big technology names are investing in a business that’s developing human-like robots, according to people with knowledge of the situation, part of a scramble to find new applications for artificial intelligence.

Bezos, Nvidia Join OpenAI in Funding Humanoid Robot Startup

Figure AI also gets investments from Intel, Samsung and Amazon

Company, valued at roughly $2 billion, is raising $675 million

Source: Figure AI Inc.

In this Article

FIGURE AI INC

Private Company

MICROSOFT CORP

410.34USD

–0.32%

By Mark Gurman and Gillian Tan

February 23, 2024 at 12:56 PM EST

Updated on

February 23, 2024 at 1:20 PM EST

Jeff Bezos, Nvidia Corp. and other big technology names are investing in a business that’s developing human-like robots, according to people with knowledge of the situation, part of a scramble to find new applications for artificial intelligence.

The startup Figure AI Inc. — also backed by OpenAI and Microsoft Corp. — is raising about $675 million in a funding round that carries a pre-money valuation of roughly $2 billion, said the people, who asked not to be identified because the matter is private. Through his firm Explore Investments LLC, Bezos has committed $100 million. Microsoft is investing $95 million, while Nvidia and an Amazon.com Inc.-affiliated fund are each providing $50 million.

Robots have emerged as a critical new frontier for the AI industry, letting it apply cutting-edge technology to real-world tasks. At Figure, engineers are working on a robot that looks and moves like a human. The company has said it hopes its machine, called Figure 01, will be able to perform dangerous jobs that are unsuitable for people and that its technology will help alleviate labor shortages.

Other technology companies are involved as well. Intel Corp.’s venture capital arm is pouring in $25 million, and LG Innotek is providing $8.5 million. Samsung’s investment group, meanwhile, committed $5 million. Backers also include venture firms Parkway Venture Capital, which is investing $100 million, and Align Ventures, which is providing $90 million.

The ARK Venture Fund is participating as well, putting in $2.5 million, while Aliya Capital Partners is investing $20 million. Other investors include Tamarack, at $27 million; Boscolo Intervest Ltd., investing $15 million; and BOLD Capital Partners, at $2.5 million.

OpenAI, which at one point considered acquiring Figure, is investing $5 million. Bloomberg News reported in January on the funding round, which kicked off with Microsoft and OpenAI as the initial lead investors. Those big names helped attract the influx of cash from the other entities. The $675 million raised is a significant increase over the $500 million initially sought by Figure.

Representatives for Figure and its investors declined to comment or didn’t immediately respond to requests for comment.

People with knowledge of the matter expect the investors to wire the funds to Figure AI and sign formal agreements on Monday, but the numbers could change as final details are worked out. The roughly $2 billion valuation is pre-money, meaning it doesn’t account for the capital that Figure is raising.

Last May, Figure raised $70 million in funding round led by Parkway. At the time, Chief Executive Officer Brett Adcock said, “We hope that we’re one of the first groups to bring to market a humanoid that can actually be useful and do commercial activities.”

The AI robotics industry has been busy lately. Earlier this year, OpenAI-backed Norwegian robotics startup 1X Technologies AS raised $100 million. Vancouver-based Sanctuary AI is developing a humanoid robot called Phoenix. And Tesla Inc. is working on a robot called Optimus, with Elon Musk calling it one of his most important projects.

Agility Robotics, which Amazon backed in 2022, has bots in testing at one of the retailer’s warehouses. Bezos — the world’s second-richest person, according to the Bloomberg Billionaires Ranking — was Amazon’s chief executive officer until 2021 and remains chairman. His net worth is estimated at $197.1 billion.

— With assistance from Matt Day

(Updates with more on Bezos in final paragraph. A previous version of the story corrected a figure to show it was in millions.)

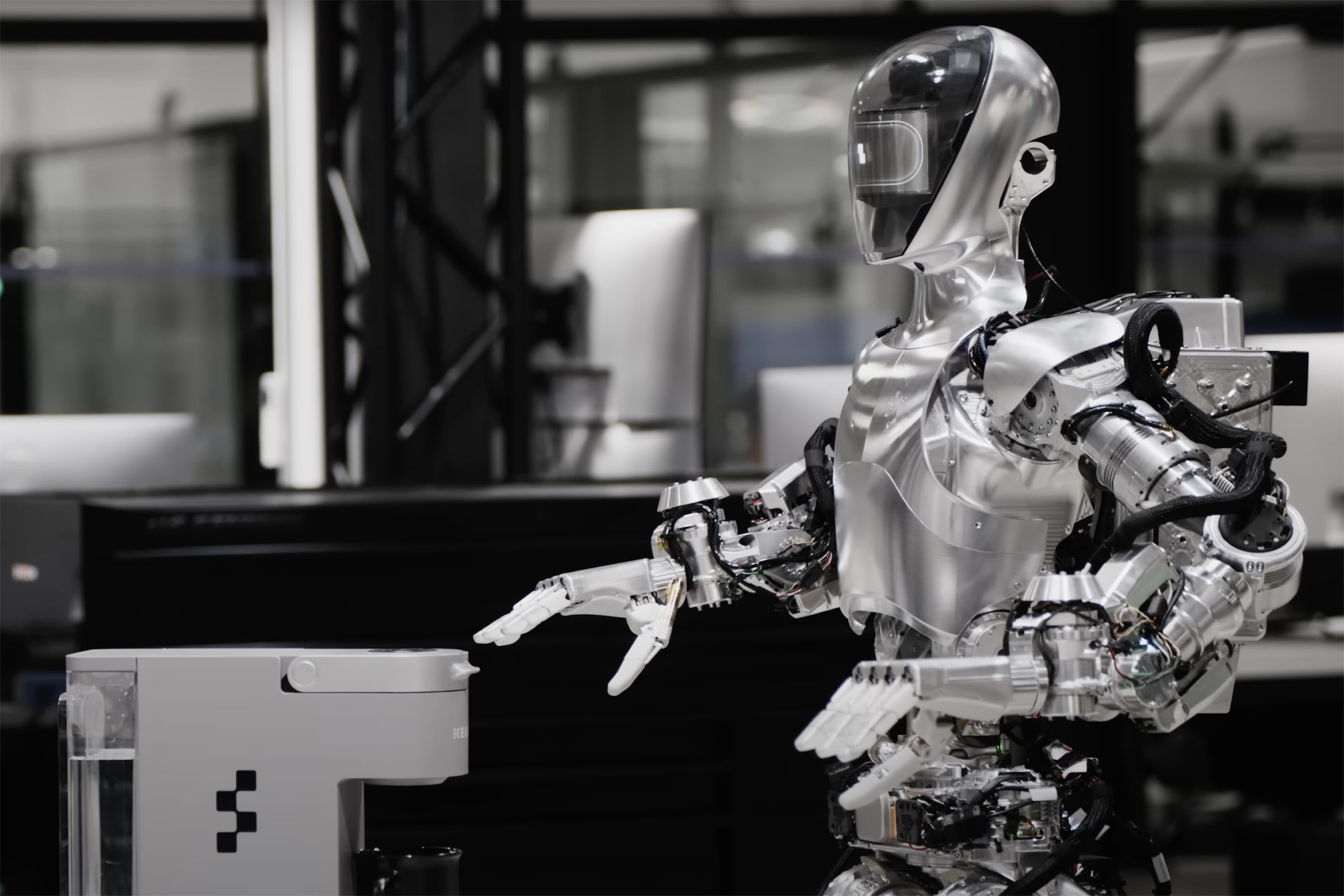

AI solves nuclear fusion puzzle for near-limitless clean energy

Nuclear fusion breakthough overcomes key barrier to grid-scale adoption

AI solves nuclear fusion puzzle for near-limitless clean energy

Nuclear fusion breakthough overcomes key barrier to grid-scale adoption

Anthony Cuthbertson5 days ago

*video from another source

What is the difference between nuclear fission and fusion?

Scientists have used artificial intelligence to overcome a huge challenge for producing near-limitless clean energy with nuclear fusion.

A team from Princeton University in the US figured out a way to use an AI model to predict and prevent instabilities with plasma during fusion reactions.

Nuclear fusion has been hailed as the “holy grail” of clean energy for its potential to produce vast amounts of energy without requiring any fossil fuels or leaving behind any hazardous waste.

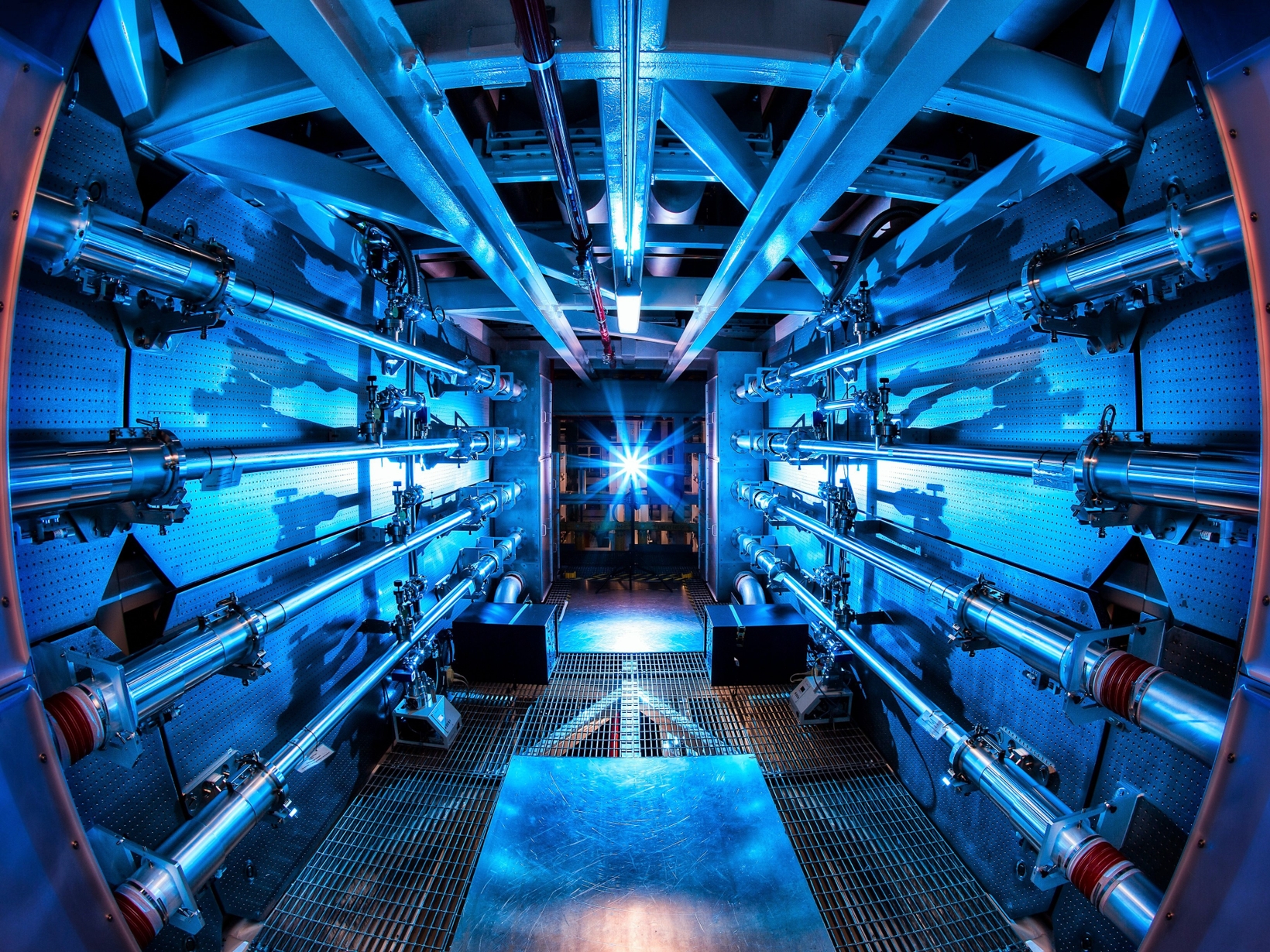

A visualisation of a nuclear fusion reactor

(iStock/ Getty Images)

The process mimics the same natural reactions that occur within the Sun, however harnessing nuclear fusion energy has proved immensely difficult.

In 2022, a team from the Lawrence Livermore National Laboratory in California achieved the first ever net energy gain with nuclear fusion, meaning they were able to produce more energy than was input for the reaction.

It was only a small amount – roughly enough to boil a kettle – but it represented a major milestone towards achieving it at scale.

Lawrence Livermore National Laboratory announced a major breakthrough with nuclear fusion on 13 December, 2022

(US Department of Energy)

The latest success means another significant obstacle has been passed, with the AI capable of recognising plasma instabilities 300 milliseconds before they happen – enough time to make modifications to keep the plasma under control.

The new understanding could lead to grid-scale adoption of nuclear fusion energy, according to the researchers.

“By learning from past experiments, rather than incorporating information from physics-based models, the AI could develop a final control policy that supported a stable, high-powered plasma regime in real time, at a real reactor,” said research leader Egemen Kolemen, who works as a physicist at the Princeton Plasma Physics Laboratory where the breakthrough was made.

The latest research was published in the scientific journal Nature on Wednesday in a paper titled ‘Avoiding fusion plasma tearing instability with deep reinforcement learning’.

“Being able to predict instabilities ahead of time can make it easier to run these reactions than current approaches, which are more passive,” said SanKyeun Kim, who co-authored the study.

“We no longer have to wait for the instabilities to occur and then take quick corrective action before the plasma becomes disrupted.”

Large World Models

largeworldmodel.github.io

largeworldmodel.github.io

World Model on Million-Length Video and

Language with RingAttention

Hao Liu*, Wilson Yan*, Matei Zaharia, Pieter Abbeel

UC berkeley

Abstract

Current language models fall short in understanding aspects of the world not easily described in words, and struggle with complex, long-form tasks. Video sequences offer valuable temporal information absent in language and static images, making them attractive for joint modeling with language. Such models could develop a understanding of both human textual knowledge and the physical world, enabling broader AI capabilities for assisting humans. However, learning from millions of tokens of video and language sequences poses challenges due to memory constraints, computational complexity, and limited datasets. To address these challenges, we curate a large dataset of diverse videos and books, utilize the RingAttention technique to scalably train on long sequences, and gradually increase context size from 4K to 1M tokens. This paper makes the following contributions: (a) Largest context size neural network: We train one of the largest context size transformers on long video and language sequences, setting new benchmarks in difficult retrieval tasks and long video understanding. (b) Solutions for overcoming vision-language training challenges, including using masked sequence packing for mixing different sequence lengths, loss weighting to balance language and vision, and model-generated QA dataset for long sequence chat. (c) A highly-optimized implementation with RingAttention, masked sequence packing, and other key features for training on millions-length multimodal sequences. (d) Fully open-sourced a family of 7B parameter models capable of processing long text documents (LWM-Text, LWM-Text-Chat) and videos (LWM, LWM-Chat) of over 1M tokens. This work paves the way for training on massive datasets of long video and language to develop understanding of both human knowledge and the multimodal world, and broader capabilities.

EMO

EMO: Emote Portrait Alive - Generating Expressive Portrait Videos with Audio2Video Diffusion Model under Weak Conditions

EMO: Emote Portrait Alive - Generating Expressive Portrait Videos with Audio2Video Diffusion Model under Weak Conditions

Linrui Tian, Qi Wang, Bang Zhang, Liefeng Bo

Institute for Intelligent Computing, Alibaba Group

GitHub arXiv

Institute for Intelligent Computing, Alibaba Group

GitHub arXiv

Character: Audrey Kathleen Hepburn-Ruston

Vocal Source: Ed Sheeran - Perfect. Covered by Samantha Harvey

Character: AI Lady from SORA

Vocal Source: Where We Go From Here with OpenAI's Mira Murati

Vocal Source: Ed Sheeran - Perfect. Covered by Samantha Harvey

Character: AI Lady from SORA

Vocal Source: Where We Go From Here with OpenAI's Mira Murati

Abstract

We proposed EMO, an expressive audio-driven portrait-video generation framework. Input a single reference image and the vocal audio, e.g. talking and singing, our method can generate vocal avatar videos with expressive facial expressions, and various head poses, meanwhile, we can generate videos with any duration depending on the length of input video.

Method

Overview of the proposed method. Our framework is mainly constituted with two stages. In the initial stage, termed Frames Encoding, the ReferenceNet is deployed to extract features from the reference image and motion frames. Subsequently, during the Diffusion Process stage, a pretrained audio encoder processes the audio embedding. The facial region mask is integrated with multi-frame noise to govern the generation of facial imagery. This is followed by the employment of the Backbone Network to facilitate the denoising operation. Within the Backbone Network, two forms of attention mechanisms are applied: Reference-Attention and Audio-Attention. These mechanisms are essential for preserving the character's identity and modulating the character's movements, respectively. Additionally, Temporal Modules are utilized to manipulate the temporal dimension, and adjust the velocity of motion.

Various Generated Videos

Singing

Make Portrait Sing

Input a single character image and a vocal audio, such as singing, our method can generate vocal avatar videos with expressive facial expressions, and various head poses, meanwhile, we can generate videos with any duration depending on the length of input audio. Our method can also persist the characters' identifies in a long duration.

Character: AI Mona Lisa generated by dreamshaper XL

Vocal Source: Miley Cyrus - Flowers. Covered by YUQI

Character: AI Lady from SORA

Vocal Source: Dua Lipa - Don't Start Now

Different Language & Portrait Style

Our method supports songs in various languages and brings diverse portrait styles to life. It intuitively recognizes tonal variations in the audio, enabling the generation of dynamic, expression-rich avatars.

Character: AI Girl generated by ChilloutMix

Vocal Source: David Tao - Melody. Covered by NINGNING (mandarin)

Character: AI Ymir from AnyLora & Ymir Fritz Adult

Vocal Source: 『衝撃』Music Video【TVアニメ「進撃の巨人」The Final Season エンディングテーマ曲】 (Japanese)

Character: Leslie Cheung Kwok Wing

Vocal Source: Eason Chan - Unconditional. Covered by AI (Cantonese)

Character: AI girl generated by WildCardX-XL-Fusion

Vocal Source: JENNIE - SOLO. Cover by Aiana (Korean)

Rapid Rhythm

The driven avatar can keep up with fast-paced rhythms, guaranteeing that even the swiftest lyrics are synchronized with expressive and dynamic character animations.

Character: Leonardo Wilhelm DiCaprio

Vocal Source: EMINEM - GODZILLA (FT. JUICE WRLD) COVER

Character: KUN KUN

Vocal Source: Eminem - Rap God

Talking

Talking With Different Characters

Our approach is not limited to processing audio inputs from singing, it can also accommodate spoken audio in various languages. Additionally, our method has the capability to animate portraits from bygone eras, paintings, and both 3D models and AI generated content, infusing them with lifelike motion and realism.

Character: Audrey Kathleen Hepburn-Ruston

Vocal Source: Interview Clip

Character: AI Chloe: Detroit Become Human

Vocal Source: Interview Clip

Character: Mona Lisa

Vocal Source: Shakespeare's Monologue II As You Like It: Rosalind "Yes, one; and in this manner."

Character: AI Ymir from AnyLora & Ymir Fritz Adult

Vocal Source: NieR: Automata

Cross-Actor Performance

Explore the potential applications of our method, which enables the portraits of movie characters delivering monologues or performances in different languages and styles. we can expanding the possibilities of character portrayal in multilingual and multicultural contexts.

Character: Joaquin Rafael Phoenix - The Jocker - 《Jocker 2019》

Vocal Source: 《The Dark Knight》 2008

Character: SongWen Zhang - QiQiang Gao - 《The Knockout》

Vocal Source: Online courses for legal exams

Character: AI girl generated by xxmix_9realisticSDXL

Vocal Source: Videos published by itsjuli4.

Last edited:

Gemini image generation got it wrong. We'll do better.

An explanation of how the issues with Gemini’s image generation of people happened, and what we’re doing to fix it.

GEMINI

Gemini image generation got it wrong. We'll do better.

Feb 23, 2024

2 min read

We recently made the decision to pause Gemini’s image generation of people while we work on improving the accuracy of its responses. Here is more about how this happened and what we’re doing to fix it.

Prabhakar Raghavan

Senior Vice President

Three weeks ago, we launched a new image generation feature for the Gemini conversational app (formerly known as Bard), which included the ability to create images of people.

It’s clear that this feature missed the mark. Some of the images generated are inaccurate or even offensive. We’re grateful for users’ feedback and are sorry the feature didn't work well.

We’ve acknowledged the mistake and temporarily paused image generation of people in Gemini while we work on an improved version.

What happened

The Gemini conversational app is a specific product that is separate from Search, our underlying AI models, and our other products. Its image generation feature was built on top of an AI model called Imagen 2.When we built this feature in Gemini, we tuned it to ensure it doesn’t fall into some of the traps we’ve seen in the past with image generation technology — such as creating violent or sexually explicit images, or depictions of real people. And because our users come from all over the world, we want it to work well for everyone. If you ask for a picture of football players, or someone walking a dog, you may want to receive a range of people. You probably don’t just want to only receive images of people of just one type of ethnicity (or any other characteristic).

However, if you prompt Gemini for images of a specific type of person — such as “a Black teacher in a classroom,” or “a white veterinarian with a dog” — or people in particular cultural or historical contexts, you should absolutely get a response that accurately reflects what you ask for.

So what went wrong? In short, two things. First, our tuning to ensure that Gemini showed a range of people failed to account for cases that should clearly not show a range. And second, over time, the model became way more cautious than we intended and refused to answer certain prompts entirely — wrongly interpreting some very anodyne prompts as sensitive.

These two things led the model to overcompensate in some cases, and be over-conservative in others, leading to images that were embarrassing and wrong.

Next steps and lessons learned

This wasn’t what we intended. We did not want Gemini to refuse to create images of any particular group. And we did not want it to create inaccurate historical — or any other — images. So we turned the image generation of people off and will work to improve it significantly before turning it back on. This process will include extensive testing.One thing to bear in mind: Gemini is built as a creativity and productivity tool, and it may not always be reliable, especially when it comes to generating images or text about current events, evolving news or hot-button topics. It will make mistakes. As we’ve said from the beginning, hallucinations are a known challenge with all LLMs — there are instances where the AI just gets things wrong. This is something that we’re constantly working on improving.

Gemini tries to give factual responses to prompts — and our double-check feature helps evaluate whether there’s content across the web to substantiate Gemini’s responses — but we recommend relying on Google Search, where separate systems surface fresh, high-quality information on these kinds of topics from sources across the web.

I can’t promise that Gemini won’t occasionally generate embarrassing, inaccurate or offensive results — but I can promise that we will continue to take action whenever we identify an issue. AI is an emerging technology which is helpful in so many ways, with huge potential, and we’re doing our best to roll it out safely and responsibly.