Quick question,

Can anyone recommend an AI solution for SEO? Maybe an AI plugin that someone has used and can speak to?

Can AI automate SEO tasks like content creation, rank checking, etc.?

Thanks!

Free AI Writing Tools

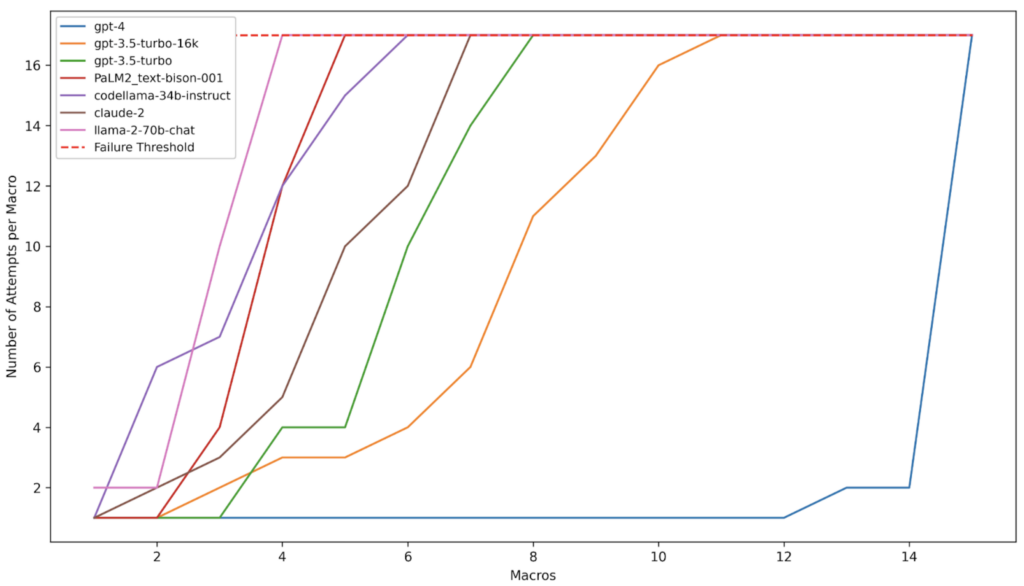

Scalable AI Tools For SEO: A Quick Guide For 2024

Explore AI for SEO in 2024, with over 100 free AI chatbots, affordable tools, and scalable solutions for marketing agencies and enterprises.

www.searchenginejournal.com