GitHub - baaivision/Emu: Emu Series: Generative Multimodal Models from BAAI


BAAI/Emu2-Gen · Hugging Face


Generative Multimodal Models are In-Context Learners


Quan Sun1*, Yufeng Cui1*, Xiaosong Zhang1*, Fan Zhang1*, Qiying Yu2,1*, Zhengxiong Luo1, Yueze Wang1, Yongming Rao1, Jingjing Liu2, Tiejun Huang1,3, Xinlong Wang1†

1Beijing Academy of Artificial Intelligence, 2Tsinghua University, 3Peking University

*equal contribution †project lead


Abstract

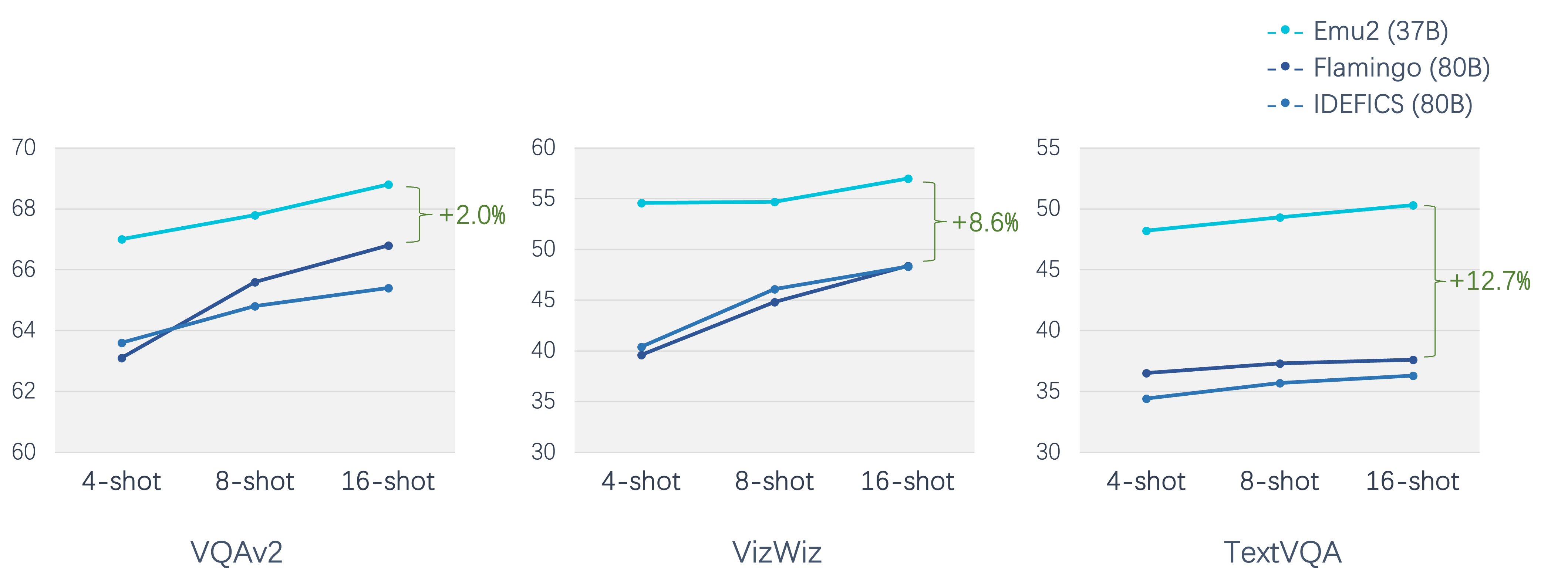

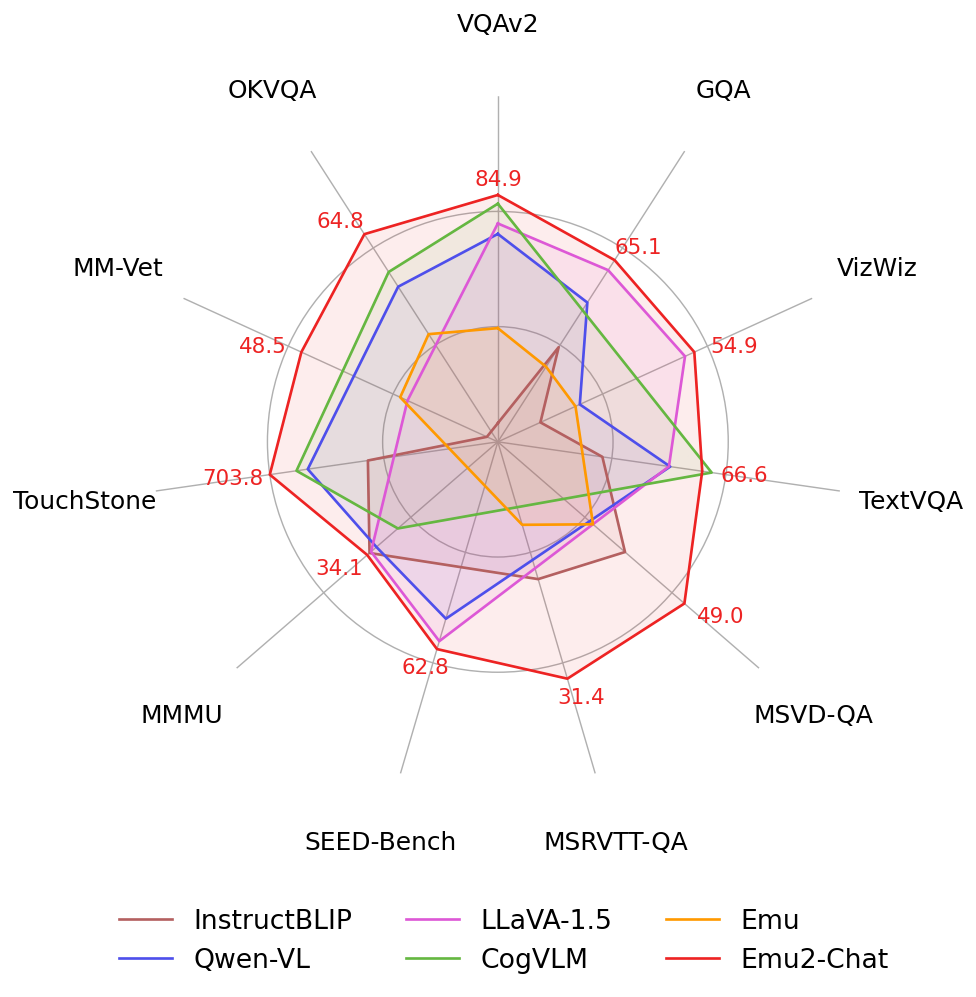

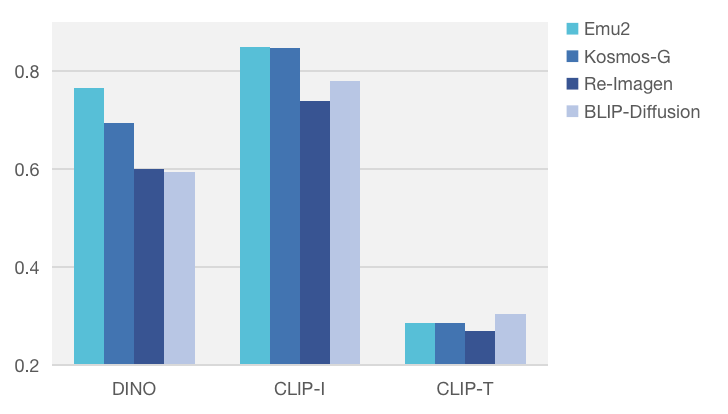

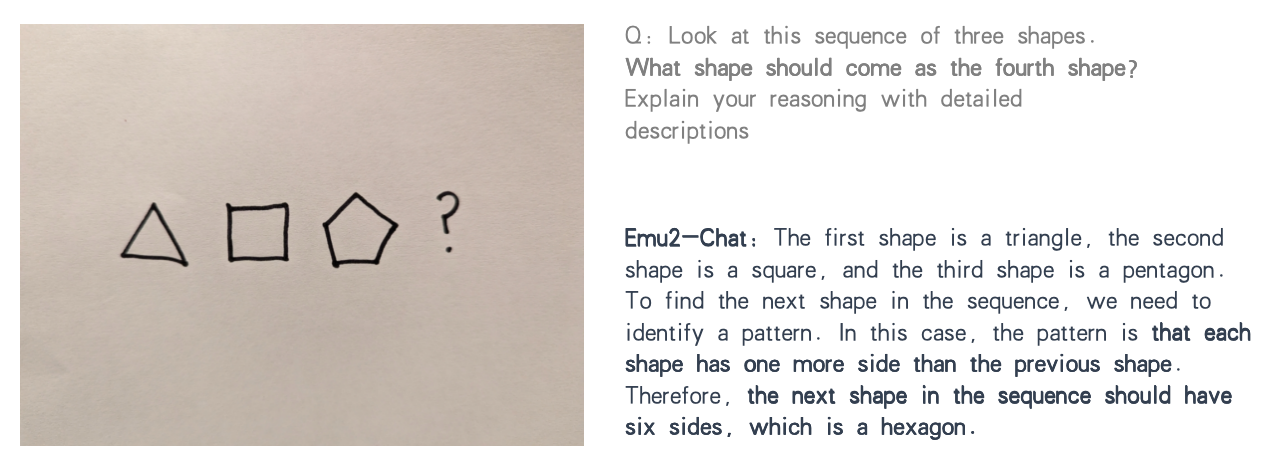

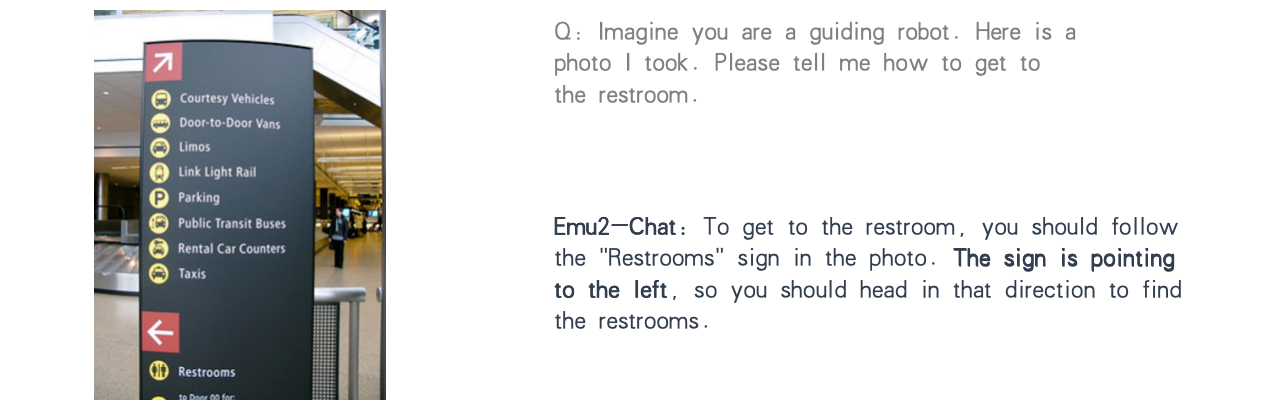

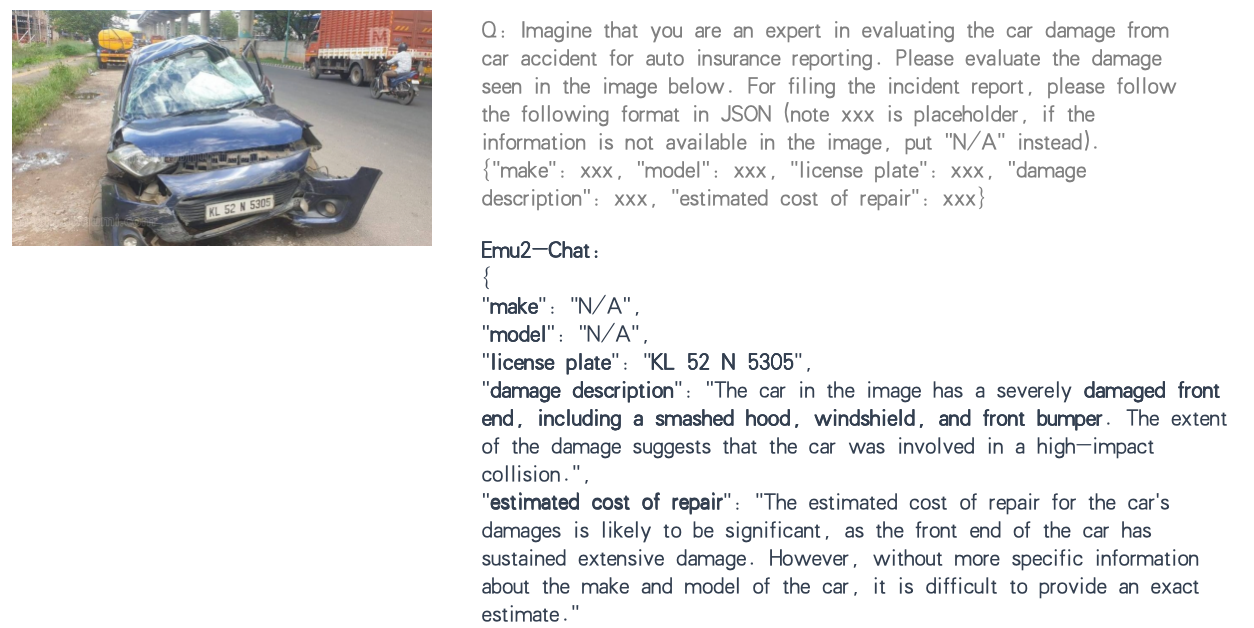

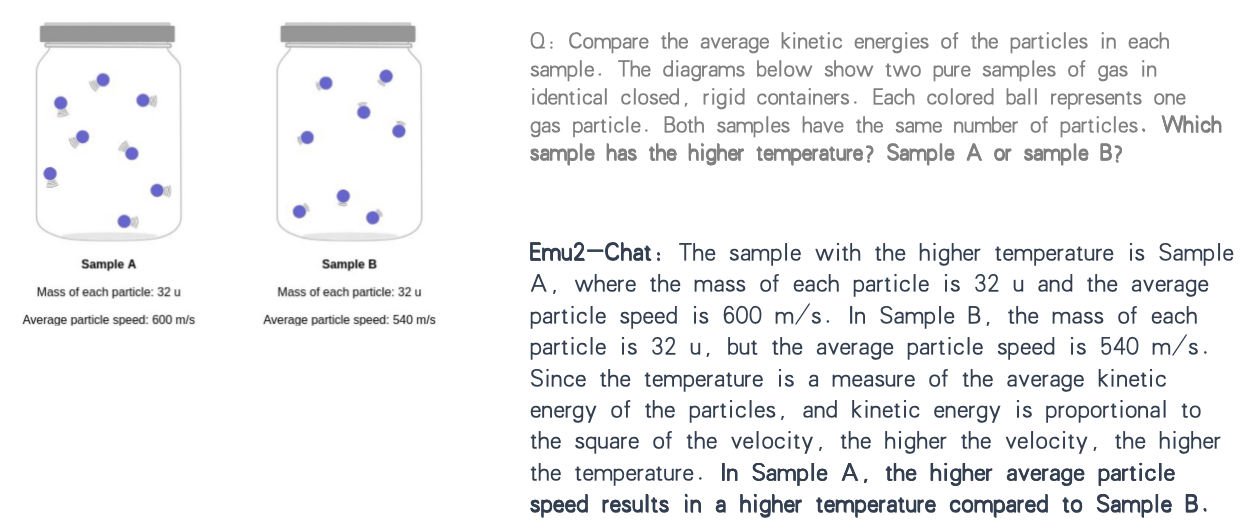

The human ability to easily solve multimodal tasks in context (i.e., with only a few demonstrations or simple instructions), is what current multimodal systems have largely struggled to imitate. In this work, we demonstrate that the task-agnostic in-context learning capabilities of large multimodal models can be significantly enhanced by effective scaling-up. We introduce Emu2, a generative multimodal model with 37 billion parameters, trained on large-scale multimodal sequences with a unified autoregressive objective. Emu2 exhibits strong multimodal in-context learning abilities, even emerging to solve tasks that require on-the-fly reasoning, such as visual prompting and object-grounded generation. The model sets a new record on multiple multimodal understanding tasks in few-shot settings. When instruction-tuned to follow specific instructions, Emu2 further achieves new state-of-the-art on challenging tasks such as question answering benchmarks for large multimodal models and open-ended subject-driven generation. These achievements demonstrate that Emu2 can serve as a base model and general-purpose interface for a wide range of multimodal tasks. Code and models are publicly available to facilitate future research.

A strong multimodal few-shot learner

An impressive multimodal generalist

A skilled painter

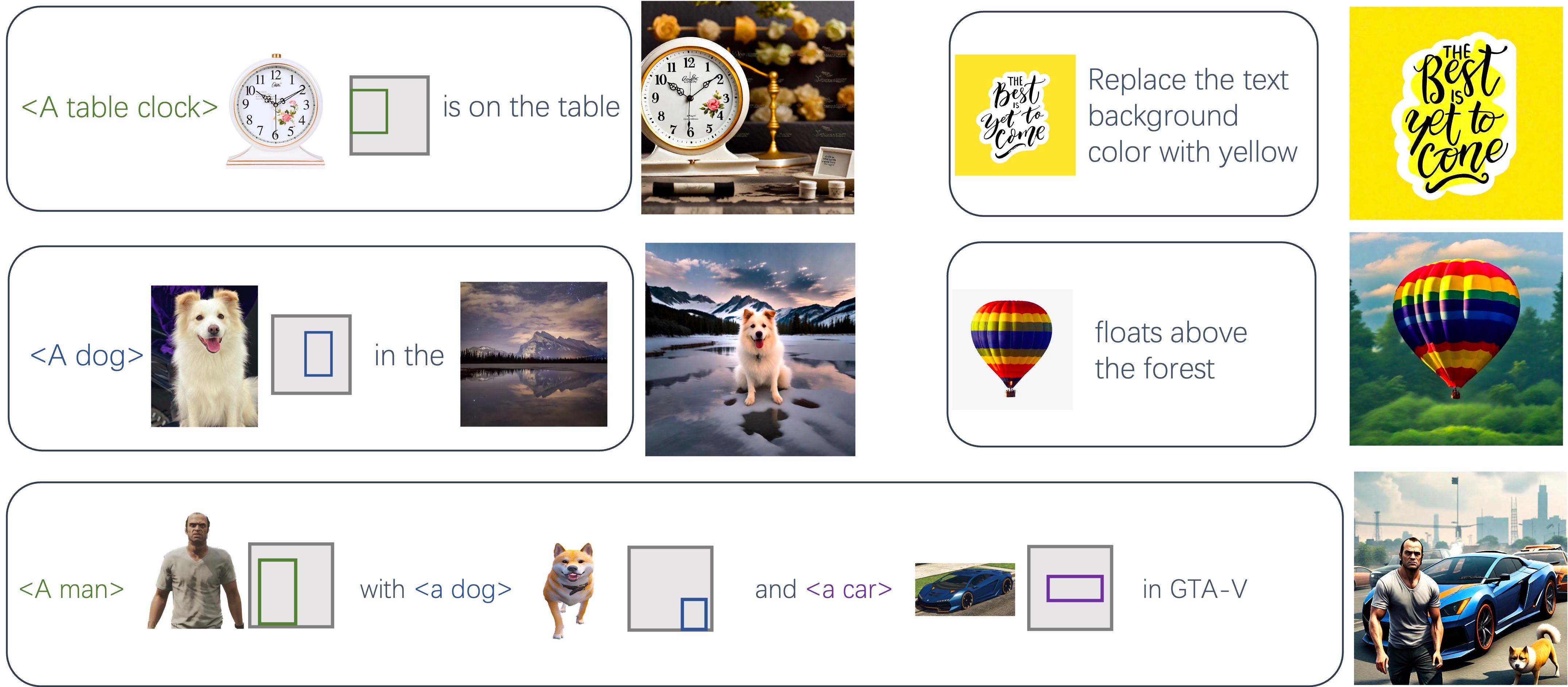

Zero-shot subject-driven generation

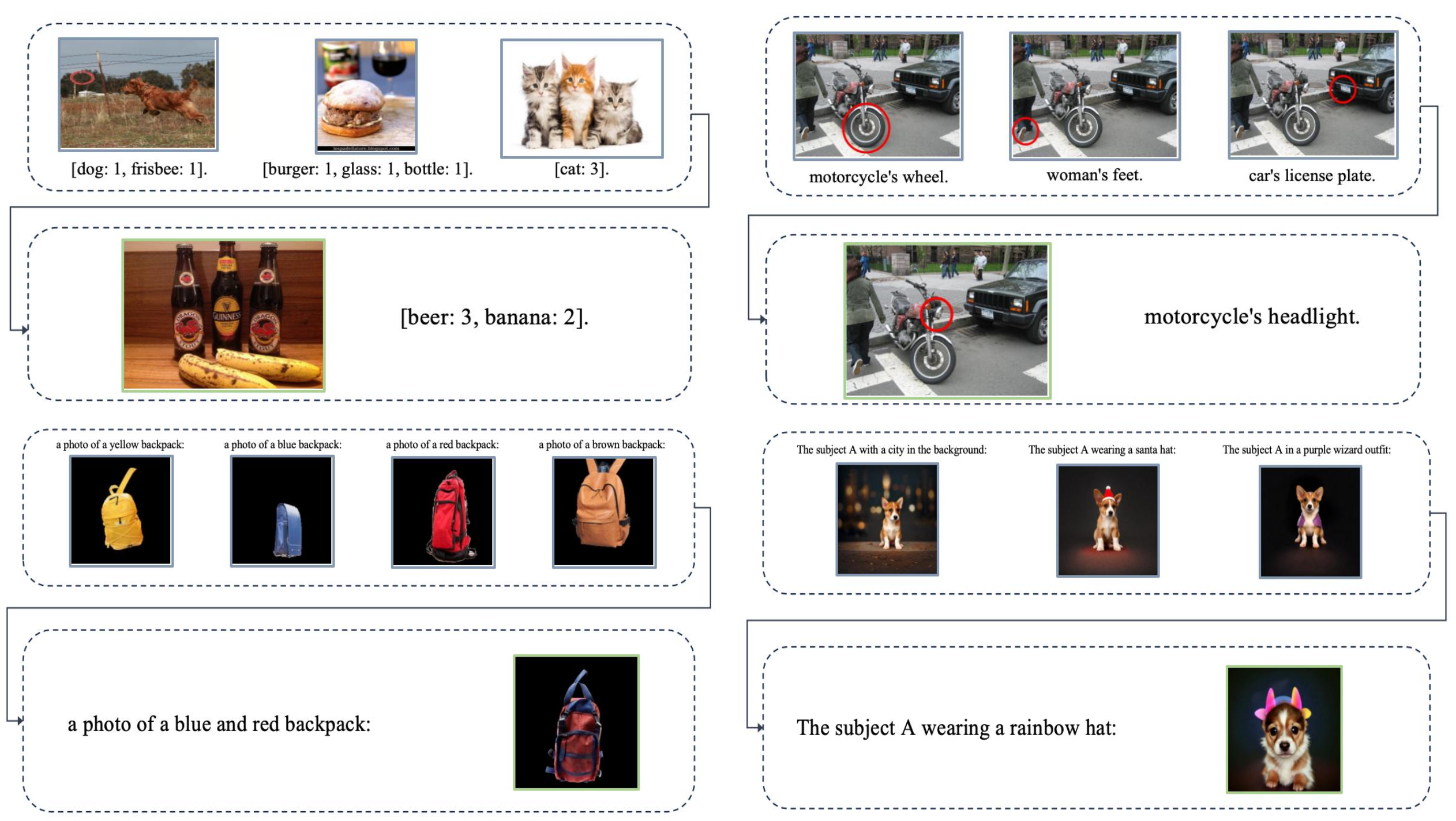

Multimodal in-context learning

Strong multimodal understanding

Generate image from any prompt sequence

Generate video from any prompt sequence

AI-generated explanation:

Here is the abstract broken down into simpler terms, with some examples:

- Multimodal tasks in context: Tasks that involve more than one type of data (such as text, images, and video) and that must be solved "in context", i.e., from only a few demonstrations or a simple instruction supplied with the input, without any retraining. For example, after seeing two photos each paired with a short label, a person can label a third photo the same way. Humans do this easily, but current multimodal systems have largely struggled to.

- In-context learning capabilities of large multimodal models: The ability of a large model to pick up a task from examples or instructions placed in its input at inference time, with no change to its weights. "Task-agnostic" means this ability is not tied to any one task. The paper's claim is that scaling the model up significantly strengthens it.

- Emu2: This is the name of the new AI model introduced in the paper. It’s a large model with 37 billion parameters, which means it has a lot of capacity to learn from data.

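- Unified autoregressive objective: Emu2 is trained on interleaved sequences of images and text to predict the next element from everything that came before it (much like predicting the next word in a sentence). The objective is "unified" because the same next-element prediction is used whether that element is text or part of an image.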

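- Visual prompting and object-grounded generation: These are examples of tasks Emu2 can solve on the fly, without being trained for them specifically. In visual prompting, part of the prompt is itself visual, for example a circle or arrow drawn on an image to direct the model to a particular region. In object-grounded generation, the model generates a new image containing specific objects supplied as input, for example placing a particular dog from a reference photo into a new scene.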

- Few-shot settings: Emu2 is given only a few examples of a task inside its prompt. For instance, if you show it a few images with their labels followed by a new image, it can label the new image the same way without being retrained. The paper reports record results on several multimodal understanding benchmarks in this setting.

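- Instruction-tuned: Emu2 is further fine-tuned on examples of instructions paired with desired responses, so that it follows user instructions such as answering a question about an image or generating an image of a given subject. The instruction-tuned variants are released as Emu2-Chat and Emu2-Gen.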

- Code and models are publicly available: The authors have released their code and trained model weights, so other researchers can use and build upon them.
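To make the "unified autoregressive objective" concrete, here is a minimal toy sketch in Python of a next-element loss over an interleaved image-text sequence. It assumes, based on a reading of the Emu papers rather than their code, that text positions are scored with a classification (cross-entropy) loss and image positions with a regression loss on visual embeddings, weighted equally. The class and function names are hypothetical, and a real model computes this over tensors rather than Python lists.

```python
import math
from dataclasses import dataclass
from typing import List, Union


# Hypothetical toy element types (not from the Emu codebase):
# a sequence element is either a text token id or a visual embedding.
@dataclass
class TextToken:
    token_id: int


@dataclass
class VisualEmbedding:
    vector: List[float]


Element = Union[TextToken, VisualEmbedding]


def cross_entropy(logits: List[float], target: int) -> float:
    """Negative log of the softmax probability assigned to `target`."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def mse(pred: List[float], target: List[float]) -> float:
    """Mean squared error between a predicted and a target embedding."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def next_element_loss(sequence: List[Element], predictions: List[List[float]]) -> float:
    """Average next-element loss over an interleaved image-text sequence.

    predictions[i] is the model output after reading sequence[0..i], i.e. its
    guess for sequence[i + 1]: vocabulary logits if the next element is text,
    a predicted vector if it is a visual embedding. Equal weighting of the two
    loss types is an assumption of this sketch.
    """
    if len(sequence) < 2:
        raise ValueError("need at least two elements to predict a next one")
    if len(predictions) != len(sequence) - 1:
        raise ValueError("need one prediction per next-element position")
    losses = []
    for i, pred in enumerate(predictions):
        target = sequence[i + 1]
        if isinstance(target, TextToken):
            losses.append(cross_entropy(pred, target.token_id))  # classification
        else:
            losses.append(mse(pred, target.vector))  # regression
    return sum(losses) / len(losses)


# Toy example: a text token, then an "image", then another text token.
seq = [TextToken(0), VisualEmbedding([1.0, 0.0]), TextToken(1)]
preds = [[1.0, 0.0], [0.0, 0.0]]  # perfect image guess; uniform text logits
print(next_element_loss(seq, preds))  # equals (0 + ln 2) / 2
```

The point of the sketch is that one training loop handles both modalities: only the loss applied at each position depends on what kind of element comes next.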
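The in-context and few-shot points can likewise be illustrated by how a few-shot prompt is assembled. This sketch is hypothetical: `IMAGE_MARKER`, `build_few_shot_prompt`, and the file names are made up for illustration and are not the Emu2 API (the official model card documents the real input format). It shows that the task is specified entirely by the demonstrations placed in the input.

```python
from typing import List, Tuple

# Hypothetical marker for where an image's visual embeddings would be spliced in;
# the real Emu2 input format may differ.
IMAGE_MARKER = "<image>"


def build_few_shot_prompt(
    demos: List[Tuple[str, str]], query_image: str
) -> Tuple[str, List[str]]:
    """Interleave (image, answer) demonstrations and end with the query image.

    The answer after the final marker is left blank for the model to generate.
    Returns the prompt text and the image paths in the order they appear.
    """
    parts: List[str] = []
    images: List[str] = []
    for image_path, answer in demos:
        parts.append(f"{IMAGE_MARKER}{answer}\n")
        images.append(image_path)
    parts.append(IMAGE_MARKER)  # the query: no answer yet
    images.append(query_image)
    return "".join(parts), images


prompt, images = build_few_shot_prompt(
    [("cat1.jpg", "a cat"), ("dog1.jpg", "a dog")], "query.jpg"
)
print(repr(prompt))
print(images)
```

Nothing about the model changes between tasks; only the demonstrations in the prompt do, which is why in-context learning is cheap to apply to a new task.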
