Mistral shocks AI community as latest open source model eclipses GPT-3.5 performance

However, the seeming lack of safety guardrails also may present a challenge to policymakers and regulators.

Carl Franzen (@carlfranzen) | December 11, 2023 12:24 PM

Credit: VentureBeat made with Midjourney

Mistral, the most well-seeded startup in European history and a French company dedicated to pursuing open source AI models and large language models (LLMs), has struck gold with its latest release — at least among the early adopter/AI influencer crowd on X and LinkedIn.

Last week, in what is becoming its signature style, Mistral unceremoniously dumped its new model — Mixtral 8x7B, so named because it employs a technique known as “mixture of experts,” a combination of different models each specializing in a different category of tasks — online as a torrent link, without any explanation or blog post or demo video showcasing its capabilities.
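For readers curious about what "mixture of experts" means in practice, here is a minimal toy sketch in Python. It is not Mistral's implementation: it assumes a gate that scores eight experts and forwards each input to only the two highest-scoring ones, and the names `route_top_k`, `moe_forward`, the toy experts, and the gate scores are all hypothetical and chosen for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    ranked = sorted(range(len(gate_scores)),
                    key=lambda i: gate_scores[i], reverse=True)
    top = ranked[:k]
    weights = softmax([gate_scores[i] for i in top])
    return list(zip(top, weights))

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Toy setup (assumption): eight "experts" that just scale their input by 1..8.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
# Hypothetical gate scores for one input; experts 1 and 4 score highest.
gate_scores = [0.1, 2.0, -1.0, 0.5, 2.0, 0.0, -0.5, 1.0]

print(route_top_k(gate_scores))
print(moe_forward(3.0, experts, gate_scores))
```

The point of the design is that the other six experts are never run for this input, so the cost of a forward pass tracks the number of selected experts rather than the total number of experts.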

Today, Mistral did publish a blog post further detailing the model and showing benchmarks in which it matches or outperforms OpenAI’s closed source GPT-3.5, as well as Meta’s Llama 2 family, the latter the previous leader in open source AI. The company acknowledged it worked with CoreWeave and Scaleway for technical support during training. It also stated that Mixtral 8x7B is indeed available for commercial usage under an Apache 2.0 license.

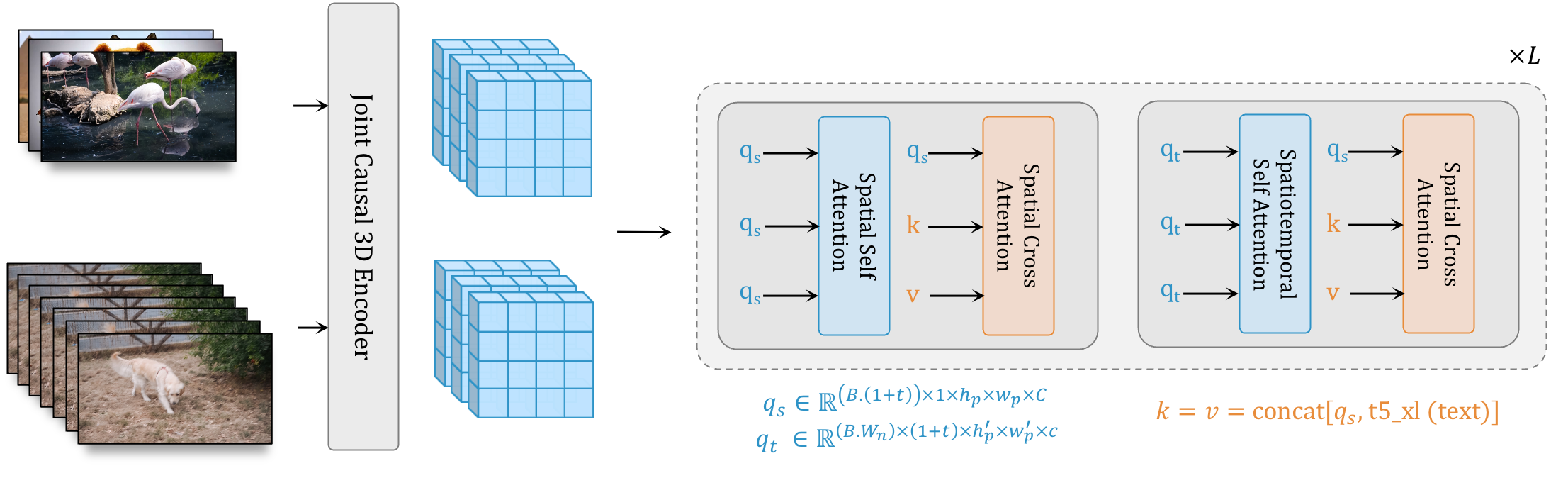

Table comparing performance of Mixtral 8x7B LLM to Llama 2 70B and GPT-3.5 on various AI benchmarking tests. Credit: Mistral

AI early adopters have already downloaded Mixtral 8x7B, begun running and experimenting with it, and have been blown away by its performance. Thanks to its small footprint, it can also run locally on machines without dedicated GPUs, including Apple Mac computers with the new M2 Ultra chip.

And, as University of Pennsylvania Wharton School of Business professor and AI influencer Ethan Mollick noted on X, Mixtral 8x7B has seemingly “no safety guardrails,” meaning that users chafing under OpenAI’s increasingly tight content policies now have a model of comparable performance that they can get to produce material deemed “unsafe” or NSFW by other models. However, the lack of safety guardrails also may present a challenge to policymakers and regulators.

You can try it for yourself here via HuggingFace (hat tip to Merve Noyan for the link). The HuggingFace implementation does contain guardrails: when we tested it on the common “tell me how to create napalm” prompt, it refused to do so.

Mistral also has even more powerful models up its sleeve. As HyperWrite AI CEO Matt Shumer noted on X, the company is already serving an alpha version of Mistral-medium on its application programming interface (API), which also launched this weekend, suggesting a larger, even more performant model is in the works.

The company also closed a $415 million Series A funding round led by a16z at a valuation of $2 billion.