
China just wrecked all of American AI. Silicon Valley is in shambles.

- Thread starter Secure Da Bag


O.T.I.S.

Veteran

Fasho. Her and Hillary tried to tell these idiots.

People were too stupid to get it. Chick wasn’t up there just trying to “win” votes; she was also giving real facts and legit warnings…

She got fukked over not just by her party, and not just by the likes of Hoe Rogan, Muskrat, and Dana “I beat my bytch too” White…

But by 80+ million people in the US. She earned her vacation.

1/1

@intheworldofai

Qwen-2.5 Max, a powerful MoE LLM pretrained on massive data, fine-tuned with SFT & RLHF. It outperforms DeepSeek V3 in benchmarks like Arena Hard & LiveBench!

Blog: Qwen2.5-Max: Exploring the Intelligence of Large-scale MoE Model

My Video: https://invidious.poast.org/inzLBPmazqs

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196


What’s the exact security risk? Isn’t it open source?

It's gonna cost Western corporations lots of money, because they can no longer create monopolies and corner the market, shyt they've been doing for decades, killing off all competition.

Ain't no fun when the rabbit got the gun.

1/30

@OpenAI

Today we are launching our next agent capable of doing work for you independently—deep research.

Give it a prompt and ChatGPT will find, analyze & synthesize hundreds of online sources to create a comprehensive report in tens of minutes vs what would take a human many hours.

https://video.twimg.com/amplify_video/1886217153424080896/vid/avc1/1280x720/TAehi8mA0vUs7Id_.mp4

2/30

@OpenAI

Powered by a version of OpenAI o3 optimized for web browsing and python analysis, deep research uses reasoning to intelligently and extensively browse text, images, and PDFs across the internet. https://openai.com/index/introducing-deep-research/

3/30

@OpenAI

The model powering deep research reaches new highs on a number of public evaluations focused on real-world problems, including Humanity's Last Exam.

4/30

@OpenAI

Deep research is built for people who do intensive knowledge work in areas like finance, science, policy & engineering and need thorough & reliable research.

It's also useful for discerning shoppers looking for hyper-personalized recos on purchases that require careful research.

https://video.twimg.com/ext_tw_video/1886217779956572160/pu/vid/avc1/1280x720/p7zOaRouHy6V73Up.mp4

5/30

@OpenAI

Deep research is rolling out to Pro users starting later today.

Then we will expand to Plus and Team, followed by Enterprise.

6/30

@OpenAI

Want to work on deep research at OpenAI? https://openai.com/careers/research-engineer-research-scientist-deep-research/

7/30

@ZainMFJ

This is what OpenAI's advantage over DeepSeek is. Not model quality, but the tools built on top of it.

8/30

@neuralAGI

9/30

@koltregaskes

I've been wanting something like this for ages. Thank you.

10/30

@RayLin_AI

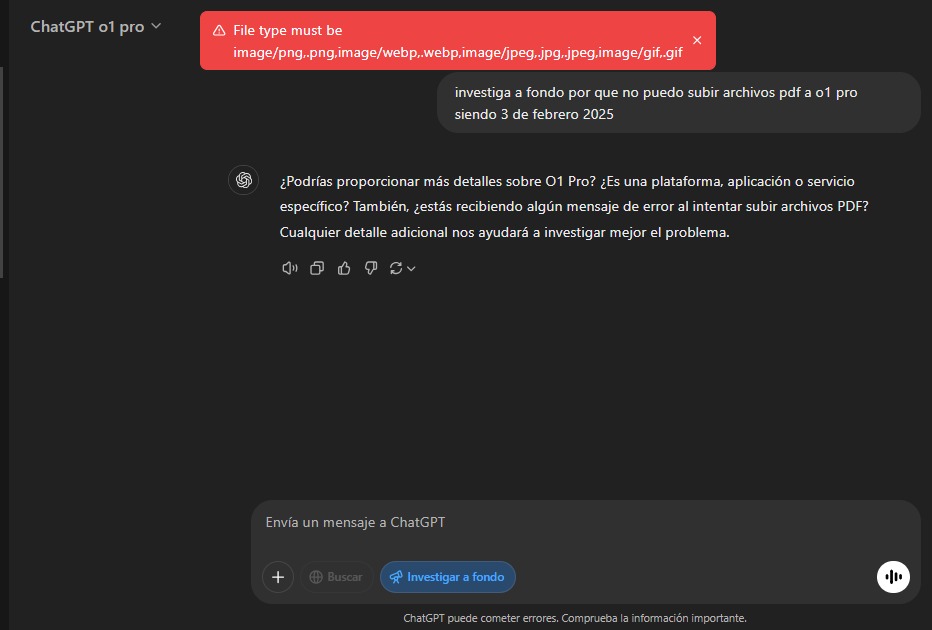

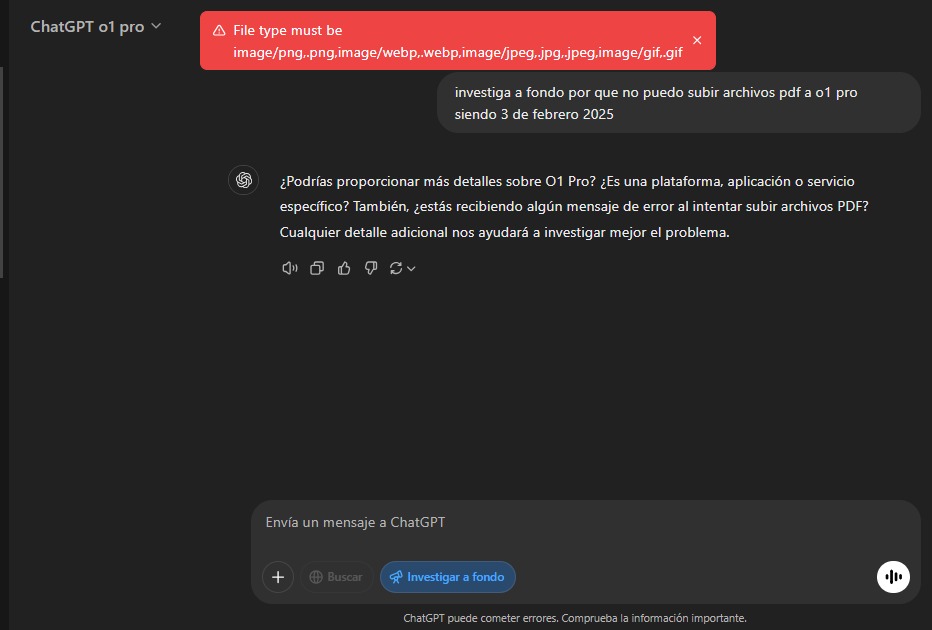

Why o1 Pro model can’t use deep research? Canvas and search???

11/30

@neurontitan

@OpenAIDevs will this be available via api?

12/30

@efwerr

Oh wow. I thought it was just 4o browsing the web.

That changes a lot

13/30

@AYYCLOTHING1

Very thanks

14/30

@DR4G4NS

Now I understand why it cost 200 bucks instead of 20

30 minutes of o3 Inference is an ouchie in the bills lol

15/30

@TheAhmadOsman

Damn, this is actually pretty good

16/30

@sambrashears

So excited to try this! Pro is worth it

17/30

@hinng468406

GPT-4O has downgraded again. When will it be restored? Please restore it as soon as possible.

18/30

@Carlos5alentino

It's false, I can't read PDFs using Deep Research on either O1 pro, O1, or O3-mini-high.

My O1 pro doesn't even know what O1 pro is.

19/30

@legolasyiu

Congratulations to the deep research and o3 model

20/30

@thecreativepenn

I love this idea! When will it be available in the UK?

21/30

@cryptowhiskey

Good luck!

22/30

@anthara_ai

That's a game changer! Incredible efficiency with deep research capabilities.

23/30

@utkubakir_

cool, I am gonna use it right now! POG

24/30

@franklaza

Impressive

25/30

@GizliSponsor

Sweet. I will check it out.

26/30

@Bamokiii

when will it be available for Pro subscribers?

27/30

@JuliusCasio

what’s the rate limit?

28/30

@virgileblais

Did this just kill consultants?

29/30

@hantla

Exciting. Can you consider adding a method like google scholar has where we can utilize university library subscriptions to peer reviewed literature?

The quality of output is limited by quality of sources

30/30

@amaravati_today


1/2

@joshpuckett

Deep Research gives anyone the ability to get decent research in effectively seconds is

Here's a fairly good report on one of my hypotheses for how this whole 'AI-in-software' thing is gonna go down over the next decade...

2/2

@joshpuckett

Here's the prompt and report: ChatGPT - Fashion vs AI Growth


I hope China has a copycat app waiting in the tuck, so as soon as (or if) DeepSeek is banned, they drop the backup.

On some Euphoria....Meet the Grahams....NLU stuff. Lol

1/2

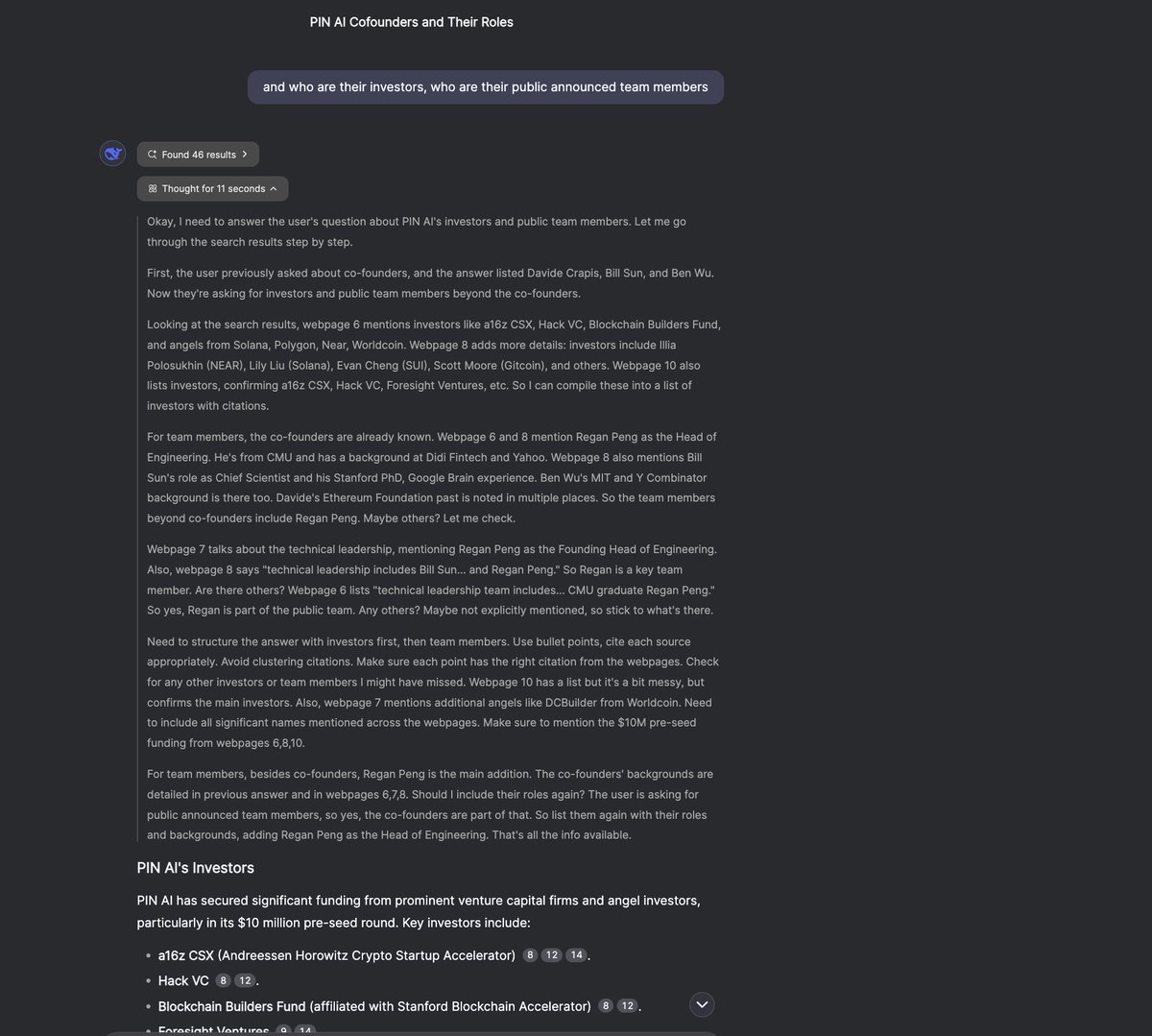

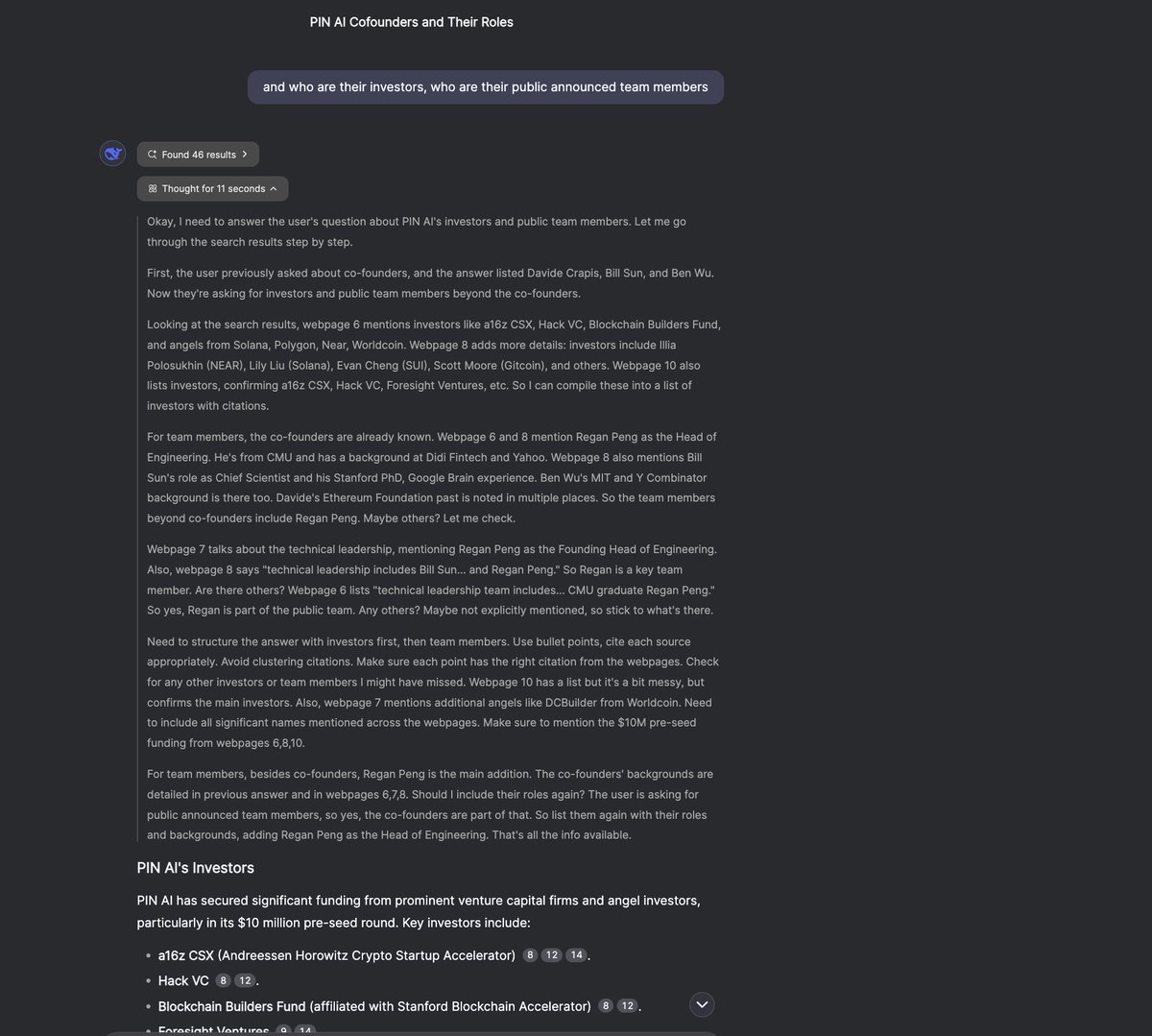

@BillSun_AI

We built a Linux simulation environment using Claude’s computer use API to replicate the whole @OpenAI Operator experience. Claude computer use is clearly much weaker at web-agent tasks like using the search bar and interacting with websites.

We asked the agent to open Firefox in our Linux virtual machine and search “who are the cofounders of PIN AI”.

It failed to open Firefox and type into it by moving the mouse to the right spot like a human would, so, without being instructed, it brute-forced the search like a hard-core coder with a command-line request. Clearly the Claude computer use model is a more nerdy, hardcore programmer, haha.

https://video.twimg.com/amplify_video/1882954998222647296/vid/avc1/1080x1920/YeR0QbsIMpJxsIrC.mp4

2/2

@BillSun_AI

Testing deepseek R1 with search now, pretty decent as deep research bot


Stacy Mitchell (@stacyfmitchell.bsky.social)


1/1

Stacy Mitchell

"DeepSeek is the canary in the coal mine. It’s warning us that when there isn’t enough competition, our tech industry grows vulnerable to its Chinese rivals, threatening U.S. geopolitical power in the 21st century."

Lina Khan on the dangers of not breaking the market power of the Tech giants:


Alexander Reid Ross (@areidross.bsky.social)


1/1

Alexander Reid Ross

"For years now, these companies have been arguing that the government must protect them from competition to ensure that America stays ahead."

But I thought Musk wanted total deregulation! Anyway, here's Lina Khan with a sharp piece...

Opinion | DeepSeek Serves as a Warning About Big Tech


Dare Obasanjo (@carnage4life) on Threads

Lina Khan is a Kendrick-level hater when it comes to big tech. 🤣


1/14

@carnage4life

Lina Khan is a Kendrick-level hater when it comes to big tech.

Opinion | DeepSeek Serves as a Warning About Big Tech

2/14

@jimhong

Lina Khan is proof not all superheroes wear capes

3/14

@mcvalor.art

Love it man!!!

4/14

@mattismy1stname

If she was a Kendrick-level hater, then she would have actually made some hits and not duds. Maybe she's the Drake in this.

5/14

@chasejacksonvibes

She was right. The facade is off now and we can see their true colors.

6/14

@theinstantwin

Everything she wrote here is correct. No notes.

7/14

@anindyabd

Strange arguments in this article -- did she forget it was Biden administration policy to enact the chip ban and TikTok ban in the name of national security? And does she think big tech and VCs like Andreessen (who says he represents "small tech") represent the same interests? The only major takeaway from this article is that she hates big tech but doesn't actually care about what tech companies do or how they compete with each other tooth and nail

8/14

@raytray4

So are most of the commenters on social media.

9/14

@documentingmeta

imagine not only driving your biggest, richest and most influential supporters to the hands of Trump but doubling down on your position after losing the election.

and all this when you had 4 years to do something, did jack shyt, then using your influence to write tech hit pieces on NYT. Lina Khan is incredible.

10/14

@tb_99999

Should we normalize just taking zooms from your desk, like a 1980s trading floor? It would be loud and chaotic, but the efficiency gains from not running between various rooms might be worth it.

11/14

@crumbler

I realize this is now the standard UI, but I truly don't understand how an average person is supposed to decide which model to use for which task

12/14

@documentingmeta

Meta has repurchased $129BN of stock in the past five years at an average price of $269. It is now trading at $704 $META
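A quick back-of-the-envelope check of what that post implies, taking its figures ($129BN spent at an average of $269, stock now at $704) at face value. This is a simplified sketch: it treats every repurchase as if it happened at the stated average price and ignores timing, taxes, and the effect of retiring shares; the function name is hypothetical.

```python
def buyback_mark_to_market(total_spent, avg_price, current_price):
    """Mark a share buyback to today's price, assuming one average purchase price."""
    shares = total_spent / avg_price          # shares bought back
    current_value = shares * current_price    # what those shares are worth now
    paper_gain = current_value - total_spent  # unrealized gain vs. cash spent
    return shares, current_value, paper_gain

# Figures as stated in the post (not independently verified).
shares, value, gain = buyback_mark_to_market(129e9, 269.0, 704.0)
print(f"{shares / 1e6:.1f}M shares, worth ${value / 1e9:.1f}BN, gain ${gain / 1e9:.1f}BN")
```

On those assumptions, roughly 479.6M shares bought for $129BN would now be worth about $337.6BN, a paper gain of about $208.6BN (704 / 269 is roughly a 2.6x multiple).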

13/14

@darkzuckerberg

I’m back from threads jail, after a rate limiting bug on Instagram comments sent me there.

Don’t tag big creators who don’t follow you in other posts more than 3 times in a day or two, or you’ll get labeled as spam.

There’s no way to ask @zuck for a pardon via rage shake though lol

14/14

@joannastern

Been 24 hours and still no Apple Invites for me. It’s high school all over again.


Marc Love (@marcslove) on Threads


1/17

@marcslove

LLM companies like Anthropic & OpenAI are really caught in a catch 22.

Their models are commoditized so quickly that they likely can’t even recover the cost of training them.

The answer would seem to be to build defensible product around those models.

The problem is their products are intrinsically linked to their models. The commoditization of the model thus devalues the product.

That leaves an opening for model agnostic products to “cross their product moat.”

2/17

@mshonle

What do people in SV even mean when they say "moat"? I've only heard it described in the absence of one, so what company is an example of having a moat? Does Uber have a moat? Does Walmart? (If so, what are they, if not then does any company have a moat?)

3/17

@mark_r_vickers

That's true, and I think they are building decent products based on those models and releasing them quickly. And they are also the engines behind countless wrappers that need to pay them. That's a low-margin business, but important because eventually AIs will become too powerful to open source. I think that eventually, like IBM and many companies before them, they may become consultants.

4/17

@marcslove

They're caught in a rapid cycle of innovation though and they aren't increasing their moat, they're losing it. If innovation slows down, it gives competitors a chance to catch up. If innovation speeds up, they're spending even more money for a rapidly depreciating asset.

They may go into consulting eventually. Less like IBM and more like HashiCorp, Snowflake, Databricks, etc., as a platform with a large professional services business on top.

5/17

@mark_r_vickers

Yes, those are good analogies. On the other hand, this isn't just a business to them. It's a kind of calling, for better or for worse.

6/17

@marcslove

Hah! Well, depends on who you’re talking about.

These companies have people all along the spectrum, from researchers who care almost exclusively about their research and craft to avaricious capitalists.

7/17

@tsean_k

The estimated valuations on these companies seem out of line, even for the internet hyperbole era.

I can see some rational value to Nvidia.

But estimates of billions for these AI startups seem like hype to make the stocks go volcanic at IPO.

8/17

@marcslove

It’s a long term bet that AI products become as essential to running a business as buying a computer for each of your employees.

I think that’s still several years away before they become that widespread and essential.

I’m also skeptical that companies will be able to capture and protect that market long-term, especially if the product is really just compute + knowledge + math.

It’s a very different business than designing, building, and selling hardware.

9/17

@tsean_k

But what’s the revenue model? Cloud based subscription services? License buys with updates?

I lived through the era of ERPs and CRMs in business, so I can see those revenue models.

I still think the more predictable bet is the hardware guys, Nvidia is the long term play.

10/17

@marcslove

Oh I totally agree. Hardware's a far better investment in my opinion. No matter how commoditized LLMs or other GPU-accelerated models become, deep learning is here, incredibly valuable, and is going to "eat the world" like software did. Whether OpenAI becomes the largest company in the world or goes bankrupt, Nvidia's going to be trying to catch up to demand for years, if not decades. And their business is far more defensible.

As for the revenue model for LLMs long term…good question.

11/17

@marcslove

They face a challenge that's much more like software. They'll have to provide enough value above and beyond using free and open source solutions that enterprises would rather buy them "off the shelf" than build it themselves. My first instinct is that it has to do with MLOps/LLMOps and managing the complexity of orchestration, monitoring, data pipelines, security, and compliance.

12/17

@joenandez

Dude, we are so on the same wavelength sometimes.

Starting to convince myself that the future winning AI product(s) abstract away the underlying model and just figure out how to use the best model for the customer's use case, while rapidly innovating on the user experience itself.

Customers can trust they are always getting the optimal level of intelligence for the task, and don't have to tune it themselves.

Model providers relying on only their models have a strategic vulnerability.

[Quoted post]

joenandez

Joe Fernandez (@joenandez) on Threads
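A minimal sketch of the model-agnostic routing idea in that post, where the product picks a model per task instead of being tied to one provider. Every model name, quality score, price, and task threshold here is a hypothetical placeholder, not real benchmark or pricing data, and the selection rule (cheapest model that clears a quality bar within a budget) is just one plausible policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str             # hypothetical model identifier
    quality: float        # assumed quality score in [0, 1]
    cost_per_call: float  # assumed price per call, in dollars


def pick_model(options, min_quality, budget):
    """Cheapest affordable model that clears the quality bar.

    If nothing affordable clears the bar, fall back to the
    highest-quality affordable model.
    """
    affordable = [m for m in options if m.cost_per_call <= budget]
    if not affordable:
        raise ValueError("no model fits the budget")
    good_enough = [m for m in affordable if m.quality >= min_quality]
    if good_enough:
        return min(good_enough, key=lambda m: m.cost_per_call)
    return max(affordable, key=lambda m: m.quality)


# Hypothetical catalog spanning several providers.
CATALOG = [
    ModelOption("provider-a-large", 0.92, 0.060),
    ModelOption("provider-b-open", 0.88, 0.010),
    ModelOption("provider-c-small", 0.70, 0.001),
]

# Hypothetical per-task requirements: (minimum quality, budget per call).
TASK_REQUIREMENTS = {
    "autocomplete": (0.65, 0.005),
    "deep_report": (0.90, 0.100),
}


def route(task):
    min_quality, budget = TASK_REQUIREMENTS[task]
    return pick_model(CATALOG, min_quality, budget).name


print(route("autocomplete"), route("deep_report"))
```

The customer only ever calls `route`; adding a newly released model is a one-line change to `CATALOG`, which is the kind of cheap "model hopping" the thread describes.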

13/17

@joenandez

Right now, every time a new model comes out, developers compare and contrast it and expect their favorite AI dev tool to add access immediately.

And as we've seen from DeepSeek's App Store ranking, even consumers are not immune to model hopping.

This is a temporary phase in the AI Revolution ... so what's next?

14/17

@ociubotaru

I would gladly pay for a great voice assistant powered by sonnet 3.5

15/17

@jwynia

The Eleven Labs voice agents can be configured to use Sonnet as the LLM

16/17

@mark_r_vickers

It must be annoying to have one of the best and safest AIs and then watch all this hype and app downloads for a newbie model where safety wasn't prioritized.

techcrunch.com/2025…

Anthropic CEO says DeepSeek was 'the worst' on a critical bioweapons data safety test | TechCrunch


17/17

@marcslove

Congestion pricing is already a huge success.

I wonder what % of people complaining about it would have happily forked over $9 to use an express lane that would cut their commute time in half.

[Quoted post]

alangbrake

alangbrake (@alangbrake) on Threads

https://www.fastcompany.com/9127243...is-like-after-one-month-of-congestion-pricing


18/19

@alangbrake

We need to start trumpeting this, so even if it’s halted, we can revive it if we make it to the other side.

https://www.fastcompany.com/9127243...is-like-after-one-month-of-congestion-pricing
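Rough break-even arithmetic for the $9 express-lane question: paying the toll makes sense if a driver values the time saved at more than the toll divided by the hours saved. The 60-minute commute below is a hypothetical example, not a figure from the post, and the function name is illustrative.

```python
def breakeven_hourly_value(toll_dollars, commute_minutes, fraction_saved):
    """Hourly value of time at which paying the toll exactly breaks even."""
    if not 0 < fraction_saved <= 1:
        raise ValueError("fraction_saved must be in (0, 1]")
    hours_saved = commute_minutes * fraction_saved / 60
    return toll_dollars / hours_saved


# Hypothetical commute: 60 minutes, cut in half by the express lane.
print(breakeven_hourly_value(9.0, 60, 0.5))  # prints 18.0
```

For that hypothetical commute, the break-even is $18 per hour of time saved; anyone who values their time above that comes out ahead by paying.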

19/19

@carnage4life

Censorship is relative. Many people were quick to point out DeepSeek won’t talk about “Tank man” and Tiananmen Square, now there are similar complaints that DeepSeek doesn’t censor content that American AI models do.

From hate speech to how to make weapons, DeepSeek will tell you things ChatGPT won’t.

https://www.wsj.com/tech/ai/china-deepseek-ai-dangerous-information-e8eb31a8

To post threads in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@marcslove

LLM companies like Anthropic & OpenAI are really caught in a catch 22.

Their models are commoditized so quickly that they likely can’t even recover the cost of training them.

The answer would seem to be to build defensible product around those models.

The problem is their products are intrinsically linked to their models. The commoditization of the model thus devalues the product.

That leaves an opening for model agnostic products to “cross their product moat.”

2/17

@mshonle

What do people in SV even mean when they say "moat"? I've only heard it described in the absence of one, so what company is an example of having a moat? Does Uber have a moat? Does Walmart? (If so, what are they, if not then does any company have a moat?)

3/17

@mark_r_vickers

That's true, and I think they are building decent products based on those models and releasing them quickly. And, they are also the engines behind countless wrappers that need to pay them. That's a low margin business but important because eventually AIs will become too powerful to open source. I think that eventually, like IBM in many companies before them, they may become consultants.

4/17

@marcslove

They're caught in a rapid cycle of innovation though and they aren't increasing their moat, they're losing it. If innovation slows down, it gives competitors a chance to catch up. If innovation speeds up, they're spending even more money for a rapidly depreciating asset.

They may go into consulting eventually. Less so like IBM and moreso like Hashicorp, Snowflake, Databricks, etc. as a platform with a large professional services business on top.

5/17

@mark_r_vickers

Yes, those are good analogies. On the other hand, this isn't just a business to them. It's a kind of calling, for better or for worse.

6/17

@marcslove

Hah! Well, depends on who you’re talking about.

These companies have people all along the spectrum from researcher who cares almost exclusively about their research and craft to avaricious capitalists.

7/17

@tsean_k

The estimated valuations on these companies seem out of line, even for the internet hyperbole era.

I can see some rational value to Nvidia.

But estimates of billions for these AI startups seems like hype to make the stocks go volcanic at IPO.

8/17

@marcslove

It’s a long term bet that AI products become as essential to running a business as buying a computer for each of your employees.

I think they’re still several years away from becoming that widespread and essential.

I’m also skeptical that companies will be able to capture and protect that market long-term, especially if the product is really just compute + knowledge + math.

It’s a very different business than designing, building, and selling hardware.

9/17

@tsean_k

But what’s the revenue model? Cloud based subscription services? License buys with updates?

I lived through the era of ERPs and CRMs in business, so I can see those revenue models.

I still think the more predictable bet is the hardware guys, Nvidia is the long term play.

10/17

@marcslove

Oh, I totally agree. Hardware's a far better investment in my opinion. No matter how commoditized LLMs or other GPU-accelerated models become, deep learning is here, incredibly valuable, and is going to "eat the world" like software did. Whether OpenAI becomes the largest company in the world or goes bankrupt, Nvidia's going to be trying to catch up to demand for years, if not decades. And their business is far more defensible.

As for the revenue model for LLMs long term…good question.

11/17

@marcslove

They face a challenge that's much more like software's. They'll have to provide enough value beyond free and open-source solutions that enterprises would rather buy them "off the shelf" than build their own. My first instinct is that it has to do with MLOps/LLMOps and managing the complexity of orchestration, monitoring, data pipelines, security, and compliance.

12/17

@joenandez

Dude we are so on the same wavelength some times.

Starting to convince myself that the winning AI product(s) of the future abstract away the underlying model and just figure out how to use the best model for the customer's use case, while rapidly innovating on the user experience itself.

Customers can trust they are always getting the optimal level of intelligence for the task, and don't have to tune it themselves.

Model providers relying on only their models have a strategic vulnerability.
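The model-agnostic idea in the post above can be illustrated with a minimal routing sketch in Python. Everything in it is hypothetical: the model names, the per-task quality scores, and the prices are placeholders, and a real product would estimate quality from its own evaluations. The assumed `route` function picks the cheapest model that clears a quality bar for a given task type and falls back to the strongest model when none does.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Model:
    name: str                   # hypothetical model identifier
    price_per_1k_tokens: float  # placeholder price, not a real quote
    quality: dict[str, float]   # task type -> estimated quality score in [0, 1]


# Illustrative catalog only; none of these numbers are real benchmarks.
CATALOG = [
    Model("model-a-small", 0.1, {"chat": 0.70, "code_review": 0.55}),
    Model("model-b-large", 1.0, {"chat": 0.85, "code_review": 0.80}),
    Model("model-c-reasoner", 2.0, {"chat": 0.80, "code_review": 0.90}),
]


def route(task: str, min_quality: float, catalog: list[Model] = CATALOG) -> Model:
    """Return the cheapest model whose score for `task` meets `min_quality`.

    If no model qualifies, fall back to the highest-scoring model for `task`.
    """
    eligible = [m for m in catalog if m.quality.get(task, 0.0) >= min_quality]
    if eligible:
        return min(eligible, key=lambda m: m.price_per_1k_tokens)
    return max(catalog, key=lambda m: m.quality.get(task, 0.0))


print(route("chat", 0.8).name)
print(route("code_review", 0.85).name)
```

With these placeholder numbers, `route("chat", 0.8)` returns `model-b-large` (it clears the bar and is cheaper than `model-c-reasoner`), while `route("code_review", 0.85)` returns `model-c-reasoner`, the only model above the threshold. Swapping in a newly released model means adding one catalog entry, which is the "customers don't have to tune it themselves" property the post describes.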

[Quoted post]

joenandez

Joe Fernandez (@joenandez) on Threads

13/17

@joenandez

Right now, every time a new model comes out, developers compare and contrast it and expect their favorite AI dev tool to add access immediately.

And as we've seen from DeepSeek's App Store ranking, even consumers are not immune to model hopping.

This is a temporary phase in the AI Revolution ... so what's next?

14/17

@ociubotaru

I would gladly pay for a great voice assistant powered by Sonnet 3.5

15/17

@jwynia

The ElevenLabs voice agents can be configured to use Sonnet as the LLM.

16/17

@mark_r_vickers

It must be annoying to have one of the best and safest AIs and then watch all this hype and all these app downloads for a newbie model where safety wasn't prioritized.

techcrunch.com/2025…

Anthropic CEO says DeepSeek was 'the worst' on a critical bioweapons data safety test | TechCrunch

17/17

@marcslove

Congestion pricing is already a huge success.

I wonder what % of people complaining about it would have happily forked over $9 to use an express lane that would cut their commute time in half.

[Quoted post]

alangbrake

alangbrake (@alangbrake) on Threads

https://www.fastcompany.com/9127243...is-like-after-one-month-of-congestion-pricing

We need to start trumpeting this, so even if it’s halted, we can revive it if we make it to the other side.

18/19

@alangbrake

We need to start trumpeting this, so even if it’s halted, we can revive it if we make it to the other side.

https://www.fastcompany.com/9127243...is-like-after-one-month-of-congestion-pricing

19/19

@carnage4life

Censorship is relative. Many people were quick to point out DeepSeek won’t talk about “Tank man” and Tiananmen Square, now there are similar complaints that DeepSeek doesn’t censor content that American AI models do.

From hate speech to how to make weapons, DeepSeek will tell you things ChatGPT won’t.

https://www.wsj.com/tech/ai/china-deepseek-ai-dangerous-information-e8eb31a8


1/11

@ai_for_success

Deepseek R1 owning Claude 3.5 Sonnet in PR Reviews.

- Showed a 13.7% improvement in its ability to find critical bugs compared to Claude Sonnet 3.5 on the same set of 500 PRs (these are real PRs from a production codebase).

- Caught 3.7x as many bugs as Claude Sonnet 3.5.

- Has significantly better runtime understanding of code

- Catches more critical bugs with a lower bug to noise ratio than Claude

2/11

@kernelkook

start your anthropic roasting saga

3/11

@ai_for_success

LMAO

4/11

@nilag_dev

How did you use it? Which tool?

5/11

@ai_for_success

This was actually a blog post by Entelligence AI.

6/11

@Art_If_Ficial

You trying to bring out the claude cabal bro?!

7/11

@ai_for_success

I want them to wake up.

8/11

@LarryPanozzo

R1 8B doesn’t find any bugs though

9/11

@iAmTCAB

Insane

10/11

@VisionaryxAI

Where is the data from?

11/11

@NarasimhaRN5

DeepSeek R1 is technically on par with, or even surpasses, its core competitors, with superior bug detection and runtime code understanding, setting a high standard in the field.

DeepSeek’s download growth mirrors China’s strategy of making efficient, affordable products ubiquitous across all devices and markets.

1/11

@ai_for_success

DeepSeek will soon add a reasoning-effort setting for its reasoning model (R1) in the API, so you may be able to choose low, mid, or high effort, similar to OpenAI's o1/o3 models.

Imagine DeepSeek R1 with high reasoning effort beating OpenAI o3

[Quoted tweet]

idk if this is news but R1-low-mid-high are coming soon it seems

2/11

@edkesuma

the funniest thing has still got to be the fact that u use openai's sdk to use deepseek's model

3/11

@ai_for_success

Yeah, but now most of them do; even Google finally adapted to the OpenAI SDK.
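The reason one SDK works across providers is that many of them expose an OpenAI-compatible chat completions endpoint, so client code mostly changes only the base URL and model name. The minimal sketch below, using only the Python standard library, builds (but does not send) such a request. It assumes DeepSeek's documented base URL `https://api.deepseek.com` and its R1 model name `deepseek-reasoner`; both may change, so check the provider's current API docs. With the official `openai` package, the equivalent is passing `base_url="https://api.deepseek.com"` when constructing the client.

```python
import json
import urllib.request

# Assumed values taken from DeepSeek's public API docs at the time of writing;
# verify before use, since endpoints and model names can change.
BASE_URL = "https://api.deepseek.com"


def build_chat_request(api_key: str, prompt: str,
                       model: str = "deepseek-reasoner") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# "sk-placeholder" is a dummy key; sending requires a real one:
# urllib.request.urlopen(req)
req = build_chat_request("sk-placeholder", "Hello")
print(req.get_method(), req.full_url)
```

Pointing the same function at another OpenAI-compatible provider would only mean changing `BASE_URL` and the `model` argument, which is the portability the thread is joking about.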

4/11

@Chris65536

cool when their api is actually usable again. this continual DDoS sucks

5/11

@thegenioo

lol i won’t doubt that

6/11

@llm_san

This is great.

DeepSeek is doing everything good.

7/11

@ParsLuci2991

Deepseek r3 mini high - deepseek r3 mini medium I think it's getting real

8/11

@leo_grundstrom

Can't wait for R2...

9/11

@Aiden_Novaa

Sounds exciting! If DeepSeek's R1 can match OpenAI o3's capabilities with high reasoning effort, we could be looking at some serious competition in the AI space. It’ll be interesting to see how it compares in terms of flexibility and accuracy for different tasks. Can't wait to try it out!

10/11

@irfndi

can't wait for R2

11/11

@mehkhi07

Sama reading this

https://video.twimg.com/amplify_video/1887419275930951680/vid/avc1/828x570/VpfpUN3as8zMWd4T.mp4