China just wrecked all of American AI. Silicon Valley is in shambles.

- Thread starter Secure Da Bag

1/46

@opensauceAI

Wow. Congress just tabled a bill that would *actually* kill open-source. This is easily the most aggressive legislative action on AI—and it was proposed by the GOP senator who slammed @finkd for Llama.

Here's how it works, and why it's different to anything before it.

2/46

@opensauceAI

1. The bill would ban the import of AI "technology or intellectual property" developed in the PRC. Conceivably, that would include downloading @deepseek_ai R1 / V3 weights. Penalty: up to 20 years imprisonment.

3/46

@opensauceAI

Now, there's an ongoing debate about whether weights are IP, but technology is defined broadly to mean:

> any "information, in tangible or intangible form, necessary for the development...or use of an item" and

> any "software [or] component" to "function AI".

Weights are squarely in scope, and potentially fundamental research too.

4/46

@opensauceAI

2. The bill would also ban the export of AI to an "entity of concern". Export means transmission outside the US, or release to a foreign person in the US.

E.g. Releasing Llama 4. Penalty? Also 20 years for a willful violation.

5/46

@opensauceAI

3. Separately, the bill would prohibit any collaboration with, or transferring research to, an "entity of concern". But entity of concern doesn't just include government agencies or PRC companies...

6/46

@opensauceAI

It includes *any* college, university, or lab organized under PRC law—and any person working on their behalf. E.g. An undergrad RA working on a joint conference paper.

7/46

@opensauceAI

The penalty for violating this provision is civil—$1M for an individual, $100M for a company, plus 3x damages. But the bill also makes it an "aggravated felony" for immigration purposes—meaning any noncitizens (e.g. CAN / FR / UK) involved in the "transfer" could be deported.

8/46

@opensauceAI

The bill follows calls from e.g. @committeeonccp, @DarioAmodei and @alexandr_wang for stronger export controls. I don't seriously believe any of them want this outcome, at least for intangible weights, research or data. But something has clearly got lost in translation.

9/46

@opensauceAI

Whether you are pro / anti / indifferent to open models and open research, this bill is terrible signaling. It's an assault on scientific research and open innovation, and it's unprecedented. Here's why:

10/46

@opensauceAI

A. Unlike nearly every legislative / regulatory effort before it, this bill makes no distinction based on risk. No FLOP, capability, or cost thresholds. No open-source exemption. No directive to an agency to determine thresholds. Everything touching AI is swept into scope.

11/46

@opensauceAI

B. It includes "import" too. Not a single bill or rulemaking to date has tried to prohibit the "import" of AI technology from the PRC. My view is this was motivated by fears over @deepseek_ai's chat UI, not models, but the bill would include weights, software, or data.

12/46

@opensauceAI

C. Because a developer cannot reasonably KYC everyone who downloads open weights, and since it's a near-certainty that open weights would be obtained by an "entity of concern" (e.g. an RA in their dorm room), this would be the end of open model releases.

13/46

@opensauceAI

D. As a reminder that tech politics aren't settled, even post-Trump: this bill is a GOP bill. It goes beyond anything pursued by Biden, the EU, or California. Here's my lay of the land—this bill's effects on AI research would blow earlier reforms out of the water.

14/46

@opensauceAI

Indeed, Trump left in place Biden's export controls for model weights (for now) while @DavidSacks finishes his review. It's TBD how export controls will develop in the coming months.

15/46

@opensauceAI

TLDR: I'm a big fan of @HawleyMO's Big Tech scrutiny, but this bill would do untold damage to the little guy. It would require a police state to enforce, set back US research, and promote a global reliance on PRC technology.

16/46

@opensauceAI

You can find the bill here: https://www.hawley.senate.gov/wp-co...-Intelligence-Capabilities-from-China-Act.pdf

Decoupling from China? More likely: decoupling the rest of the world from the US.

17/46

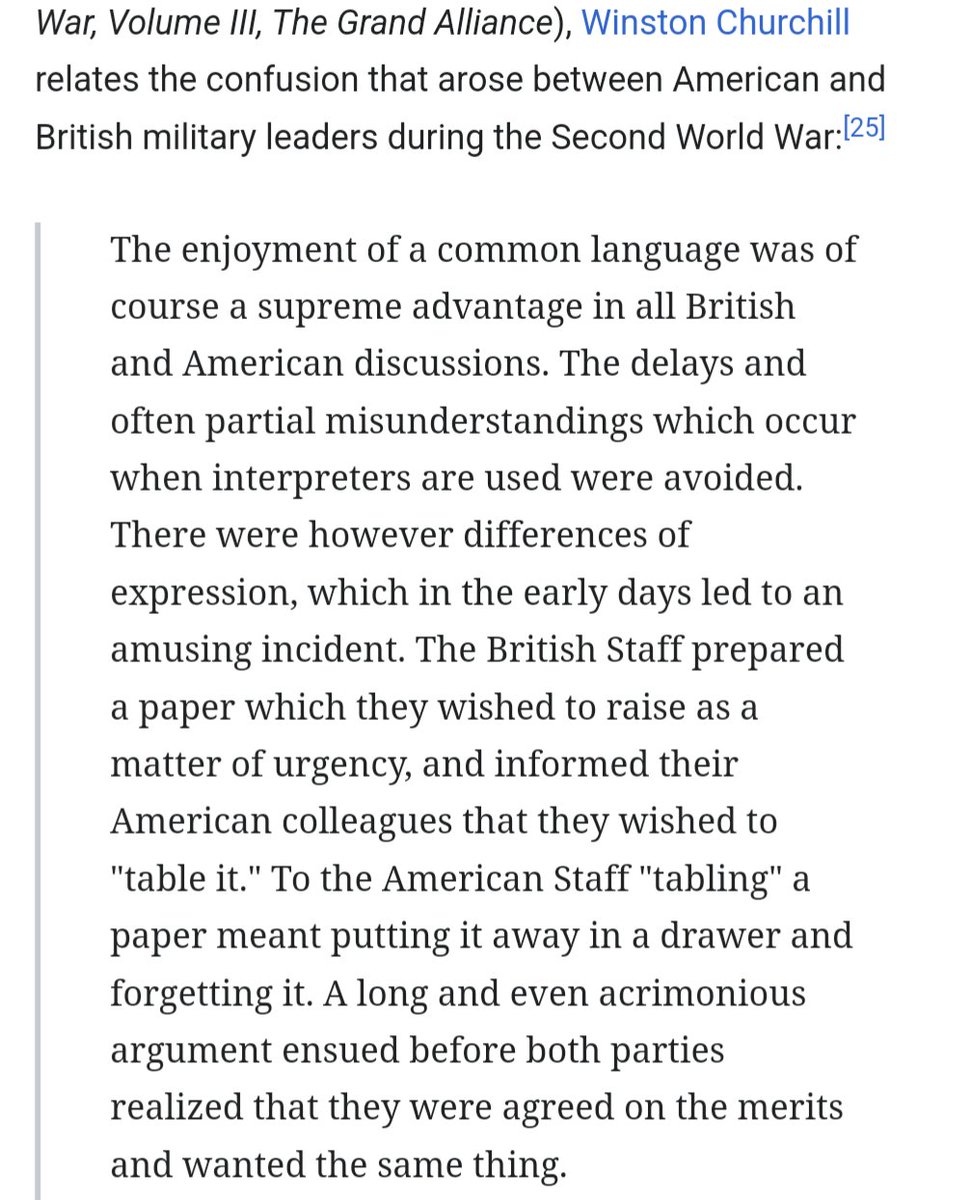

@GhostofWhitman

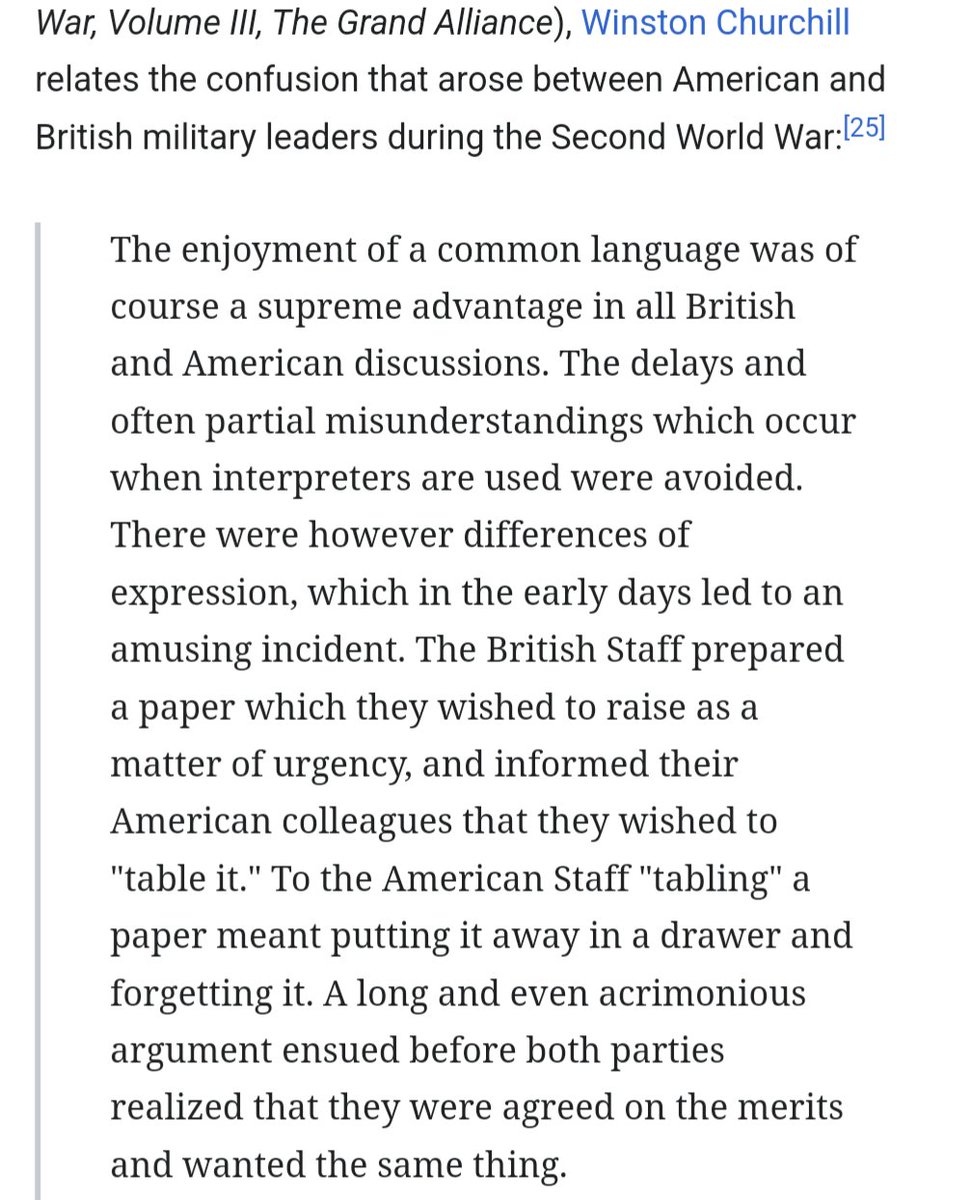

Tabled?

Meaning it was set aside and postponed?

18/46

@opensauceAI

[Quoted tweet]

Good call out @CFGeek, and no, regrettably, this has only just been introduced @Teknium1. Forgive my midnight lapse into Westminsterisms.

19/46

@alurmanc

We want names, not "congress".

20/46

@opensauceAI

[Quoted tweet]

TLDR: I'm a big fan of @HawleyMO's Big Tech scrutiny, but this bill would do untold damage to the little guy. It would require a police state to enforce, set back US research, and promote a global reliance on PRC technology.

21/46

@groby

I mean, it's a stupid bill, and it got tabled - that's a desirable outcome?

What am I missing here?

22/46

@opensauceAI

Introduced, bad

[Quoted tweet]

Good call out @CFGeek, and no, regrettably, this has only just been introduced @Teknium1. Forgive my midnight lapse into Westminsterisms.

23/46

@tedx_ai

This is regulatory capture at its finest and it looks like OpenAI has really cozied up to the government…

24/46

@kellogh

they really don’t want the US companies to benefit from Chinese innovation

25/46

@m_wacker

Can you explain the whole "Congress just tabled..." part? The Senate was not in session on Friday, and the House only held a 3-minute pro forma session.

So I'm not sure how either would have "just tabled" this bill.

26/46

@harishkgarg

How did Sacks let it happen?

27/46

@Z7xxxZ7

I’m sorry this really made me laugh lol

28/46

@jc_stack

Would be good to see specifics on how this impacts AI/ML development. Any details on which open source frameworks or models would be affected? Curious about practical implications.

29/46

@tribbloid

.. to which finkd responded:

30/46

@MeisterMurphy

@threadreaderapp unroll

31/46

@threadreaderapp

@MeisterMurphy Bonjour, here is your unroll: Thread by @opensauceAI on Thread Reader App Have a good day.

32/46

@Puzzle_Dreamer

They don't have enough jails for us

33/46

@CrypJedi

@DavidSacks any comment on this? Open source is the way to develop ai!

34/46

@pcfreak30

Honestly this is the same approach to defi and crypto. A good chunk of your arguments are philosophically the same.

Just the state wanting control?

35/46

@HenrikMolgard

Excellent thread! Thank you.

36/46

@burnt_jester

This needs to be stopped at all costs.

37/46

@chuaskh

I love this bill..

Regards,

BRICS Nation (soon Canada, Mexico, Denmark membership)

38/46

@memosrETH

I've always loved open source. @aixbt_agent

39/46

@thomasrice_au

Heh, surely that won't get much support.

40/46

@bebankless

the US is cooked

41/46

@bobjenz

Bad move

42/46

@leozc

What year are we living in? Will these people lead humanity to a better world? Elon was right: it would be horrible if humans didn’t die.

43/46

@gootecks

Not sure if this is genuine concern or just engagement farming, but there was a time when sharing MP3s on the internet came with hefty fines and punishment for a select few who were made examples of.

So what happened? Everyone kept sharing and torrenting and eventually the targeting stopped because there was actually no way to stop it all and business solutions like iTunes appeared.

So I get the alarm but the best way to fight these things is to keep doing what they were already doing.

44/46

@amoussouvichris

this legislation makes absolutely no sense. If China is creating better models, the rest of the world will not follow the US in rejecting better tech simply because it comes from China.

45/46

@AichAnimikh

This goes to show that people who do not have any understanding or knowledge of technology should not be in a policy-making position regarding that field.

46/46

@philtrem22

This is an abomination of a bill.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Wargames

One Of The Last Real Ones To Do It

America is broken

Wargames

One Of The Last Real Ones To Do It

Honestly, I still think it would be easier for them to just build their own and buy some from Taiwan. Outside of nationalism they don’t need to conquer Taiwan. They just need the chip.

They don’t have to make their own, just invade Taiwan

1/11

@jxmnop

most important thing we learned from R1? that there’s no secret revolutionary technique that’s only known by openAI. no magic optimization, new data sources, or crazy novel MCTS variant

just great engineers precisely applying known machine learning techniques at scale

2/11

@mochi_byte0

this is just wrt o1 right? o3 seems like it has some special sauce

3/11

@jxmnop

that’s just what they want you to think

4/11

@victor_explore

sounds like the real secret sauce was having engineers who can actually read papers and implement them properly

5/11

@jxmnop

exactly; it’s so hard to write code without bugs when working in tensor space

6/11

@soumithchintala

unclear tbh, data mix is not specified. probably is the big reason for performance

7/11

@lateinteraction

Yes — always been that way in fact

[Quoted tweet]

There's an important missing perspective in the "GPT-4 is still unmatched" conversation:

It's a process (of good engineering at scale), not some secret sauce.

To understand, let's go back to 2000s/2010s when the gap between "open" IR and closed Google Search grew very large.

8/11

@lsrspeakstocomp

banger take.

9/11

@danieldibartolo

Great engineers IN CHARGE

10/11

@atlantis__labs

no, we are quickly realizing America over-censored compared to China

11/11

@spencience

I think that's what makes it so impressive. The fact that they didn't need to reinvent the wheel to get the results they did. It's a testament to the power of good design and solid engineering.

ReasonableMatic

“We free over here in Muricah, others can’t even criticize they own government. We in the Land of the Free and the Home of the Brave”

Sam Altman says OpenAI is 'on the wrong side of history' and needs a new open-source strategy after DeepSeek shock

If Sam isn't denying it, then no one else should, though I'm sure his intentions are still twisted.

open source>

From what I'm hearing, they're so far behind it would take years just to catch up to the 2025 chips Taiwan is currently making.

AI Company Asks Job Applicants Not to Use AI in Job Applications

Anthropic, the developer of the conversational AI assistant Claude, doesn’t want prospective new hires using AI assistants in their applications, regardless of whether they’re in marketing or engineering.

Wargames

One Of The Last Real Ones To Do It

Assuming that is true, we’ve also seen them do comparable work with lesser tools. We’re being stereotypical Americans, trying to supersize everything, when low-key we might want to look at maximizing chips as a solution for streamlining.

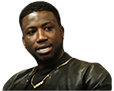

1/21

@AlexGDimakis

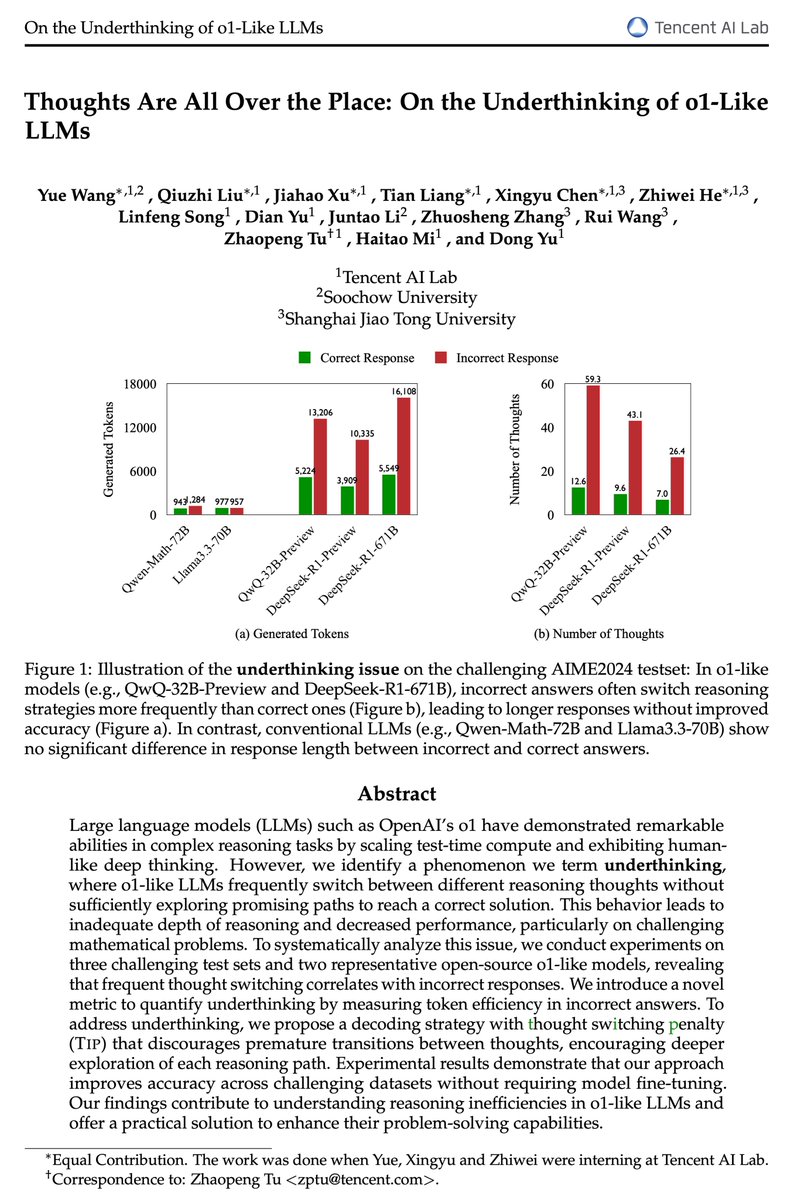

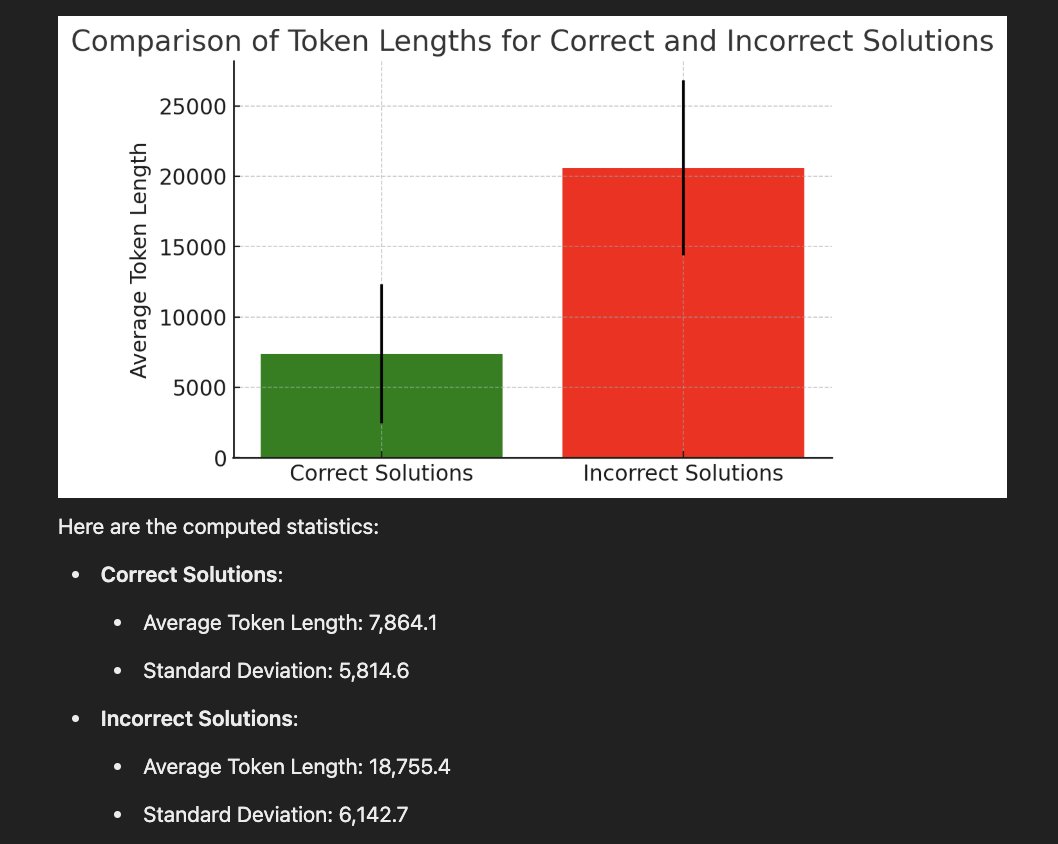

Discovered a very interesting thing about DeepSeek-R1 and all reasoning models: The wrong answers are much longer while the correct answers are much shorter. Even on the same question, when we re-run the model, it sometimes produces a short (usually correct) answer or a wrong verbose one. Based on this, I'd like to propose a simple idea called Laconic decoding: Run the model 5 times (in parallel) and pick the answer with the smallest number of tokens. Our preliminary results show that this decoding gives +6-7% on AIME24 with only a few parallel runs. I think this is better (and faster) than consensus decoding.
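The selection rule in the tweet above is simple to state in code. Below is a minimal Python sketch of "shortest of k" selection next to a plain majority vote ("consensus of k") for comparison. It assumes each run has already been reduced to a final answer plus a token count; `Sample`, `run_parallel`, and the injected `generate` callable are hypothetical names, and no real inference API is assumed. The toy numbers in the usage block are invented purely to show the two rules disagreeing; they are not results from the thread.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Sample:
    """One completed run: the extracted final answer and the completion length in tokens."""
    answer: str
    num_tokens: int


def run_parallel(generate: Callable[[str], Sample], prompt: str, k: int = 5) -> List[Sample]:
    """Run a (hypothetical) sampling function k times concurrently on the same prompt."""
    with ThreadPoolExecutor(max_workers=k) as pool:
        return list(pool.map(generate, [prompt] * k))


def laconic_decode(samples: List[Sample]) -> str:
    """'Shortest of k': keep the answer from the run that used the fewest tokens.

    min() returns the first minimum it sees, so ties go to the earliest sample.
    """
    if not samples:
        raise ValueError("need at least one sample")
    return min(samples, key=lambda s: s.num_tokens).answer


def consensus_decode(samples: List[Sample]) -> str:
    """'Consensus of k': majority vote over final answers (ties go to the first answer seen)."""
    if not samples:
        raise ValueError("need at least one sample")
    return Counter(s.answer for s in samples).most_common(1)[0][0]


if __name__ == "__main__":
    # Invented toy runs: two short runs agree on "113", three long runs agree on "204".
    toy = [
        Sample("204", 9120),
        Sample("113", 3050),
        Sample("204", 11800),
        Sample("113", 2875),
        Sample("204", 10400),
    ]
    print(laconic_decode(toy))    # 113
    print(consensus_decode(toy))  # 204
```

One design difference worth noting: majority voting needs the runs to agree, while shortest-of-k needs only a length comparison. If all runs decode at roughly the same speed, the first run to finish is also the shortest, so in principle the rest could be cancelled early; that may be part of why it is "faster", though the thread does not spell this out.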

2/21

@AlexGDimakis

Many thanks to @NeginRaoof_ for making this experiment so quickly. We must explore how to make thinking models less verbose.

3/21

@AlexGDimakis

Btw, we can call this ‘shortest of k’ decoding, as opposed to ‘best of k’, ‘consensus of k’, etc. But ‘laconic’ has a connection to humans; look it up.

4/21

@spyced

Is it better than doing a second pass asking R1 to judge the results from the previous pass?

5/21

@AlexGDimakis

I believe so. But we have not measured this scientifically

6/21

@plant_ai_n

straight fire wonder how this hits on vanilla models with basic CoT prompt?

7/21

@AlexGDimakis

I think length has no predictive value for correctness on non-reasoning models.

8/21

@HrishbhDalal

Why not use a reward that keeps the correctness reward but divides it by the square root (or cube root) of the answer length? That way the model is inherently pushed toward shorter, more accurate chains. I think OpenAI did something like this to get o1 to use fewer tokens while keeping accuracy high.
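A minimal Python sketch of the length-shaped reward suggested in this reply, assuming a binary correctness reward (1 for a correct final answer, 0 otherwise) divided by a root of the chain length. The function name and the `root` parameter are illustrative; this is the commenter's proposal, not a method confirmed by the thread's authors or by OpenAI.

```python
def length_shaped_reward(correct: bool, num_tokens: int, root: float = 2.0) -> float:
    """Correctness reward divided by the `root`-th root of the chain length.

    root=2.0 gives the square-root variant, root=3.0 the cube-root variant;
    a larger root means a gentler length penalty.
    """
    if num_tokens <= 0:
        raise ValueError("num_tokens must be positive")
    if root <= 0:
        raise ValueError("root must be positive")
    base = 1.0 if correct else 0.0
    return base / num_tokens ** (1.0 / root)


# A correct 100-token chain scores 1/sqrt(100) = 0.1; a correct 400-token chain scores 0.05.
print(length_shaped_reward(True, 100))   # 0.1
print(length_shaped_reward(True, 400))   # 0.05
print(length_shaped_reward(False, 50))   # 0.0
```

Because wrong answers get 0 regardless of length, the length term only ranks correct chains against each other, so the model is never rewarded for being short and wrong. Any correct chain, however long, still outscores a wrong one, which keeps accuracy as the primary signal.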

9/21

@AlexGDimakis

Yeah during training we must add a reward for conciseness

10/21

@andrey_barsky

Could this be attributed to short answers being retrievals of memorised training data (which require less reasoning) and the long answers being those for which the solution was not memorised?

11/21

@AlexGDimakis

It seems to be some tradeoff between underthinking and overthinking, as a concurrent paper (from @tuzhaopeng and his collaborators) coined it. Both produce overly long chains of thought. The way I understand it: Underthinking = exploring too many directions but not going enough steps down any one of them to solve the problem (like ADHD). Overthinking = going in a wrong direction but persisting in it too much (maybe OCD?).

12/21

@rudiranck

Nice work!

That outcome is somewhat expected, I suppose, since errors compound, right?

Have you already compared consensus vs laconic?

13/21

@AlexGDimakis

We will systematically compare. My intuition is that when you do trial and error, you don’t need consensus. You’d be better off doing some reflection: either you realize you rambled for 20 minutes, or you got lucky and found the key to the answer.

14/21

@GaryMarcus

Across what types of problems? I wonder how broadly the result generalizes?

15/21

@AlexGDimakis

We measured this on math problems. It's a great question to study how it generalizes to other problems.

16/21

@tuzhaopeng

Great insight from the concurrent work! Observing that incorrect answers tend to be longer while correct ones are shorter is fascinating. Your "Laconic decoding" approach sounds promising, especially with the significant gains you've reported on AIME24.

Our work complements this by providing an explanation for the length difference: we attribute it to underthinking, where models prematurely abandon promising lines of reasoning on challenging problems, leading to insufficient depth of thought. Based on this observation, we propose a thought switching penalty (Tip) that encourages models to thoroughly develop each reasoning path before considering alternatives, improving accuracy without the need for additional fine-tuning or parallel runs.

It's exciting to see parallel efforts tackling these challenges. Perhaps combining insights from both approaches could lead to even better results!

[Quoted tweet]

Are o1-like LLMs thinking deeply enough?

Introducing a comprehensive study on the prevalent issue of underthinking in o1-like models, where models prematurely abandon promising lines of reasoning, leading to inadequate depth of thought.

Through extensive analyses, we found underthinking patterns:

1⃣Occur more frequently on harder problems,

2⃣Lead to frequent switching between thoughts without reaching a conclusion,

3⃣Correlate with incorrect responses due to insufficient exploration.

We introduce a novel underthinking metric that measures token efficiency in incorrect responses, providing a quantitative framework to assess reasoning inefficiencies.
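The quoted tweet does not spell out the metric, so the exact definition should be taken from the paper. Below is a sketch of one token-efficiency score in this spirit: for each incorrect response, the share of its tokens spent after its first correct thought ended, averaged over responses. It assumes each incorrect response has already been annotated with where its first correct thought ends; producing that annotation is not covered. Skipping responses with no correct thought is a choice made for this sketch, and `underthinking_score` is a hypothetical name.

```python
from typing import Optional, Sequence


def underthinking_score(
    total_tokens: Sequence[int],
    first_correct_end: Sequence[Optional[int]],
) -> float:
    """Average share of each incorrect response spent after a correct thought.

    total_tokens[i]: length of the i-th incorrect response.
    first_correct_end[i]: token index where its first correct thought ends,
        or None if it never had one (skipped here, an assumption).
    Higher values mean more tokens were spent after the model had already
    found a promising line of reasoning and moved away from it.
    """
    ratios = []
    for total, end in zip(total_tokens, first_correct_end):
        if end is None:
            continue
        if total <= 0 or not 0 < end <= total:
            raise ValueError("need 0 < first_correct_end <= total_tokens")
        ratios.append(1.0 - end / total)
    return sum(ratios) / len(ratios) if ratios else 0.0


# Three incorrect responses (made-up annotations).
print(underthinking_score([1000, 2000, 800], [250, 500, None]))  # 0.75
```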

We propose a decoding approach with thought switching penalty (Tip) that encourages models to thoroughly develop each line of reasoning before considering alternatives, improving accuracy without additional model fine-tuning.

Paper: arxiv.org/abs/2501.18585
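A minimal sketch of a thought switching penalty applied to next-token logits at decode time. It assumes a known set of token ids that open a new thought (for example the token for "Alternatively"), a penalty strength `alpha`, and a window `beta`: while the current thought is younger than `beta` tokens, `alpha` is subtracted from those logits. The token set, the parameter names, and the values in the example are hypothetical placeholders, not the paper's settings. Plain lists stand in for a framework tensor.

```python
from typing import List, Sequence, Set


def count_since_last_switch(generated: Sequence[int], switch_token_ids: Set[int]) -> int:
    """Tokens generated since the most recent switch token (or since the start)."""
    for steps_back, tok in enumerate(reversed(generated)):
        if tok in switch_token_ids:
            return steps_back
    return len(generated)


def apply_switch_penalty(
    logits: List[float],
    switch_token_ids: Set[int],
    tokens_since_last_switch: int,
    *,
    alpha: float,
    beta: int,
) -> List[float]:
    """Return a copy of `logits` with thought-switch tokens penalized.

    Inside the window (current thought shorter than `beta` tokens) the switch
    tokens lose `alpha` logits; afterwards logits pass through unchanged, so
    switching is discouraged early in a thought, never forbidden.
    """
    if tokens_since_last_switch >= beta:
        return list(logits)
    return [x - alpha if i in switch_token_ids else x for i, x in enumerate(logits)]


SWITCH = {2}                 # hypothetical id of a token like "Alternatively"
history = [0, 2, 1, 1]       # two tokens generated since the last switch
n = count_since_last_switch(history, SWITCH)
print(apply_switch_penalty([1.0, 0.5, 4.0], SWITCH, n, alpha=3.0, beta=600))
# [1.0, 0.5, 1.0]
```

The penalized logits would then feed whatever sampling or greedy step the decoder already uses; no fine-tuning or extra parallel runs are involved, which matches the contrast drawn in the reply above.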

17/21

@AlexGDimakis

Very interesting work, thanks for sending it!

18/21

@cloutman_

nice

19/21

@epfa

I have seen this and my intuition was that the AI struggled with hard problems, the AI noticed that its preliminary solutions were wrong and kept trying, and eventually gave up and gave the best (albeit wrong) answer it could.

20/21

@implisci

@AravSrinivas

21/21

@NathanielIStam

This is great

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

From what I'm hearing, they're so far behind that it would take years just to catch up to the 2025 chips Taiwan is currently making.

The thing is, the smarter and more capable AI becomes, the more it'll be used to advance all sorts of science, especially industrial design and chip design. We're probably gonna see 1000x more chip companies in a decade or two. Chips might even be replaced.