China’s open-source embrace upends conventional wisdom around artificial intelligence


Published Mon, Mar 24 2025 2:51 AM EDT

China is focusing on large language models (LLMs) in the artificial intelligence space.

Blackdovfx | Istock | Getty Images

China is embracing open-source AI models in a trend market watchers and insiders say is boosting AI adoption and innovation in the country, with some suggesting it is an ‘Android moment’ for the sector.

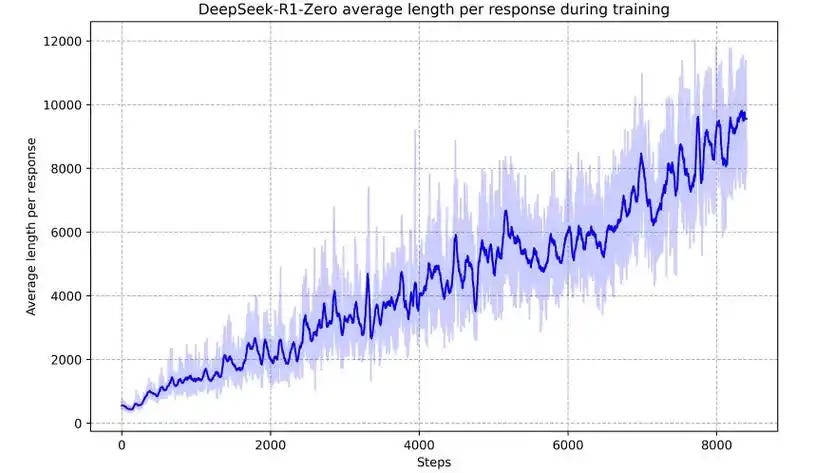

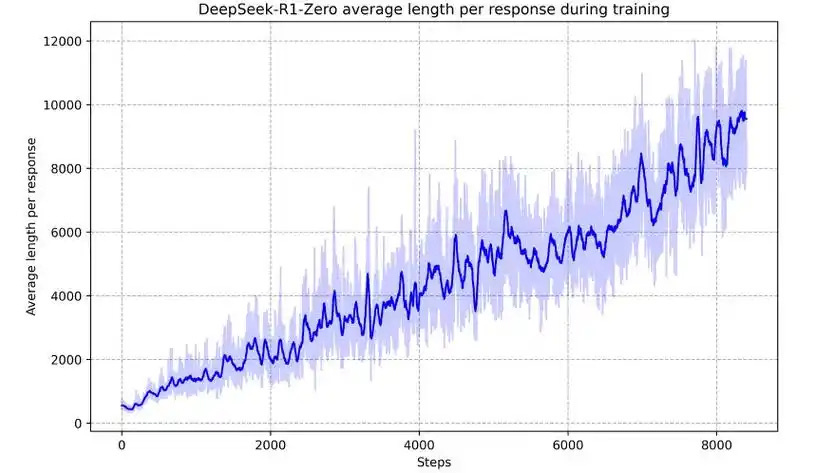

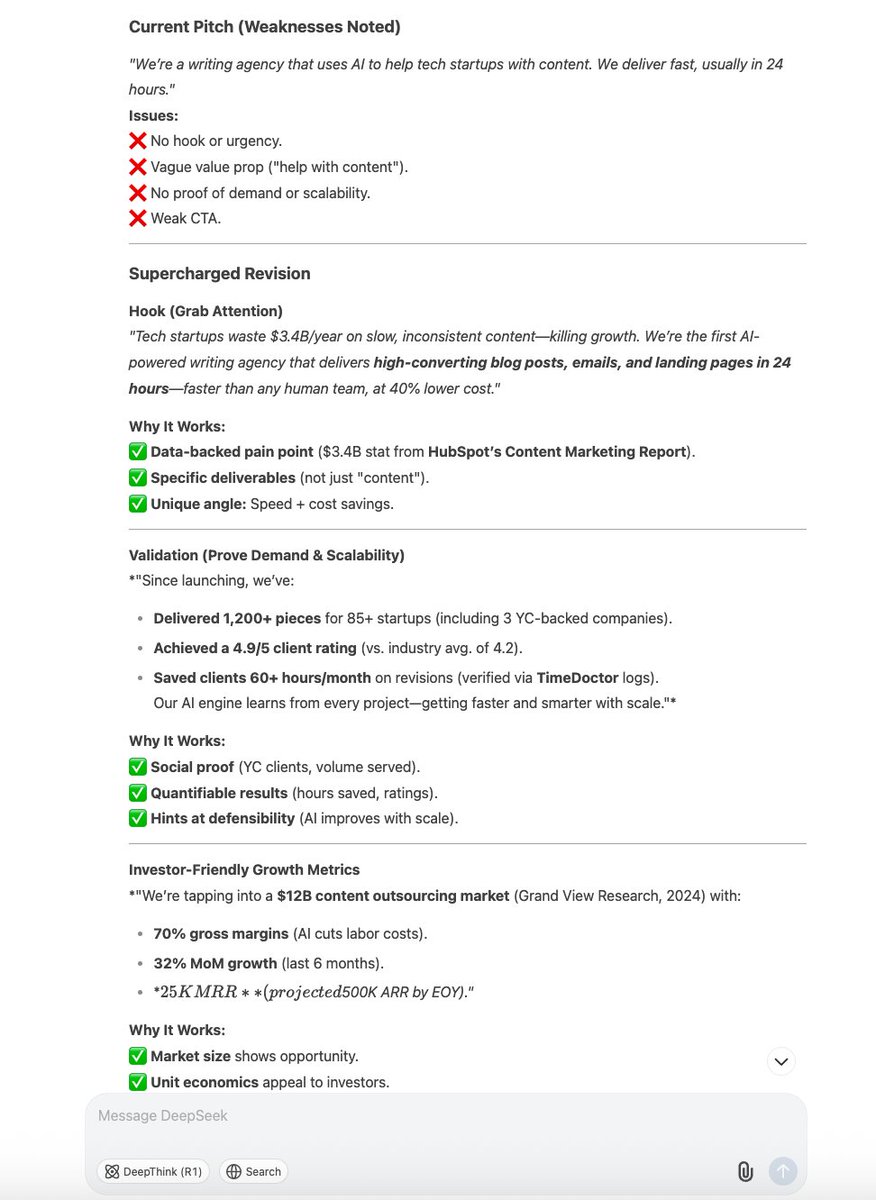

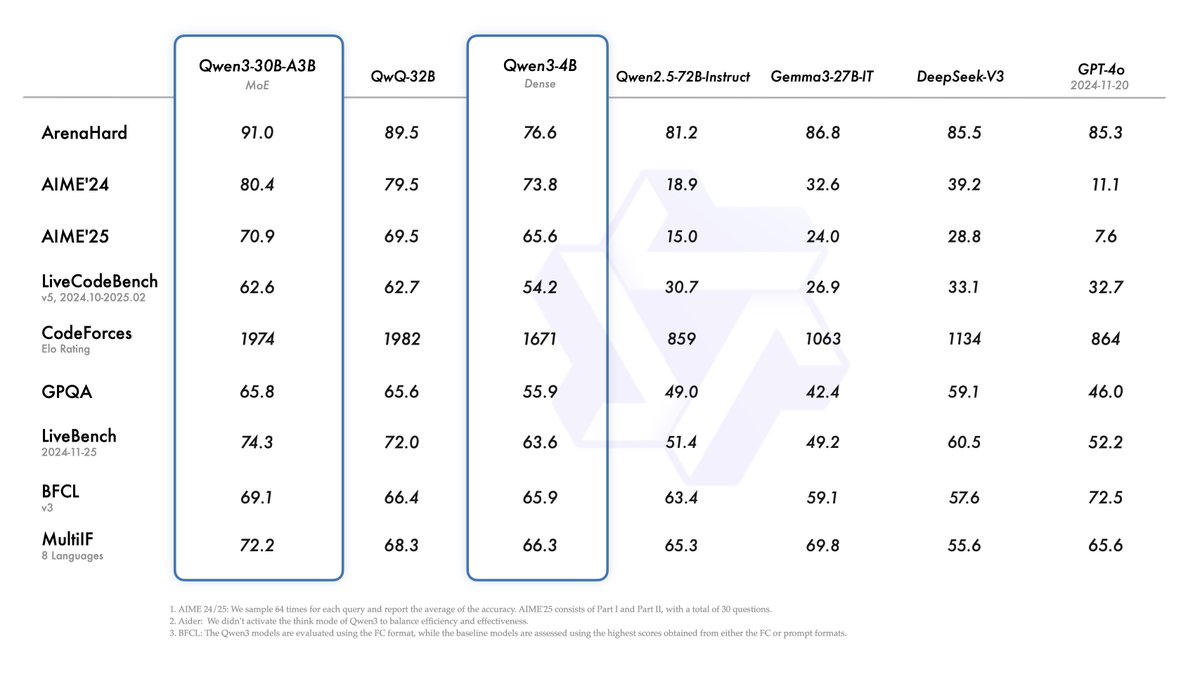

The open-source shift has been spearheaded by AI startup DeepSeek, whose R1 model, released earlier this year, challenged American tech dominance and raised questions over Big Tech’s massive spending on large language models and data centers.

While R1 created a splash in the sector due to its performance and claims of lower costs, some analysts say the most significant impact of DeepSeek has been in catalyzing the adoption of open-source AI models.

“DeepSeek’s success proves that open-source strategies can lead to faster innovation and broad adoption,” said Wei Sun, principal analyst of artificial intelligence at Counterpoint Research, noting a large number of firms have implemented the model.

“Now, we see that R1 is actively reshaping China’s AI landscape, with large companies like Baidu moving to open source their own LLMs in a strategic response,” she added.

On March 16, Baidu released the latest version of its AI model, Ernie 4.5, as well as a new reasoning model, Ernie X1, making them free for individual users.

Baidu also plans to make the Ernie 4.5 model series open source starting at the end of June.

Experts say that Baidu’s open-source plans represent a broader shift in China, away from a business strategy that focuses on proprietary licensing.

“Baidu has always been very supportive of its proprietary business model and was vocal against open-source, but disruptors like DeepSeek have proven that open-source models can be as competitive and reliable as proprietary ones,” Lian Jye Su, chief analyst with technology research and advisory group Omdia, previously told CNBC.

Open-source vs proprietary models

Open-source generally refers to software in which the source code is made freely available on the web for possible modification and redistribution.

AI models billed as open source existed before the emergence of DeepSeek, with Meta’s Llama and Google’s Gemma being prime examples in the U.S. However, some experts argue that these models aren’t truly open source, because their licenses restrict certain uses and modifications and their training data sets aren’t public.

DeepSeek’s R1 is distributed under an ‘MIT License,’ which Counterpoint’s Sun describes as one of the most permissive and widely adopted open-source licenses, facilitating unrestricted use, modification and distribution, including for commercial purposes.

The DeepSeek team even held an “Open-Source Week” last month, which saw it release more technical details about the development of its R1 model.

While DeepSeek’s model itself is free, the startup charges for access to its application programming interface (API), which enables the integration of AI models and their capabilities into other companies’ applications. However, its API prices are advertised as far cheaper than those of OpenAI’s and Anthropic’s latest offerings.

OpenAI and Anthropic also generate revenue by charging individual users and enterprises to access some of their models. These models are considered “closed-source,” as their datasets and algorithms are not open to public access.

China opens up

In addition to Baidu, other Chinese tech giants such as Alibaba Group and Tencent have increasingly been providing their AI offerings for free and are making more models open source.

For example, Alibaba Cloud said last month it was open-sourcing its AI models for video generation, while Tencent released five new open-source models earlier this month with the ability to convert text and images into 3D visuals.

Smaller players are also furthering the trend. ManusAI, a Chinese AI firm that recently unveiled an AI agent that claims to outperform OpenAI’s Deep Research, has said it would shift towards open source.

“This wouldn’t be possible without the amazing open-source community, which is why we’re committed to giving back,” co-founder Ji Yichao said in a product demo video. “ManusAI operates as a multi-agent system powered by several distinct models, so later this year, we’re going to open source some of these models,” he added.

Zhipu AI, one of the country’s leading AI startups, this month announced on WeChat that 2025 would be “the year of open source.”

Ray Wang, principal analyst and founder of Constellation Research, told CNBC that companies have been compelled to make these moves following the emergence of DeepSeek.

“With DeepSeek free, it’s impossible for any other Chinese competitors to charge for the same thing. They have to move to open-source business models in order to compete,” said Wang.

AI scholar and entrepreneur Kai-Fu Lee also believes this dynamic will impact OpenAI, noting in a recent social media post that it would be difficult for the company to justify its pricing when the competition is “free and formidable.”

“The biggest revelation from DeepSeek is that open-source has won,” said Lee, whose Chinese startup 01.AI has built an LLM platform for enterprises seeking to use DeepSeek.

U.S.-China competition

OpenAI, which kicked off the AI frenzy when it released its ChatGPT bot in November 2022, has not signaled that it plans to shift from its proprietary business model. The company, which started as a nonprofit in 2015, is moving toward a for-profit structure.

Sun says that OpenAI and DeepSeek represent very different ends of the AI space. She adds that the sector could continue to see a division between open-source players that innovate off one another and closed-source companies that have come under pressure to maintain costly cutting-edge models.

The open-source trend has called into question the massive funds raised by companies such as OpenAI. Microsoft has invested $13 billion in the company. OpenAI is in talks to raise up to $40 billion in a funding round that would lift its valuation to as high as $340 billion, CNBC confirmed at the end of January.

In September, CNBC confirmed that the company expected about $5 billion in losses, with revenue pegged at $3.7 billion. OpenAI CFO Sarah Friar has also said that $11 billion in revenue is “definitely in the realm of possibility” for the company this year.

On the other hand, Chinese companies have chosen the open-source route as they compete with the more proprietary approach of U.S. firms, said Constellation Research’s Wang. “They are hoping for faster adoption than the closed models of the U.S.,” he added.

Speaking to CNBC’s “Street Signs Asia” on Wednesday, Tim Wang, managing partner of tech-focused hedge fund Monolith Management, said that models from companies such as DeepSeek have been “great enablers and multipliers in China,” demonstrating how things can be done with more limited resources.

According to Wang, open-source models have pushed down costs, opening doors for product innovation — something he says Chinese companies historically have been very good at.

He calls the development the “Android moment,” referring to when Google’s Android made its operating system source code freely available, fostering innovation and development in the non-Apple app ecosystem.

“We used to think China was 12 to 24 months behind [the U.S.] in AI and now we think that’s probably three to six months,” said Wang.

However, other experts have downplayed the idea that open-source AI should be seen through the lens of China-U.S. competition. In fact, several U.S. companies have integrated DeepSeek’s R1 and benefited from it.

“I think the so-called DeepSeek moment is not about whether China has better AI than the U.S. or vice versa. It’s really about the power of open-source,” Alibaba Group Chairperson Joe Tsai told CNBC’s CONVERGE conference in Singapore earlier this month.

Tsai added that open-source models give the power of AI to everyone from small entrepreneurs to large corporations, which will lead to more development, innovation and a proliferation of AI applications.

— CNBC’s Evelyn Cheng contributed to this report
