Y'all heard about ChatGPT yet? AI instantly generates question answers, entire essays etc.

- Thread starter RamsayBolton

1/6

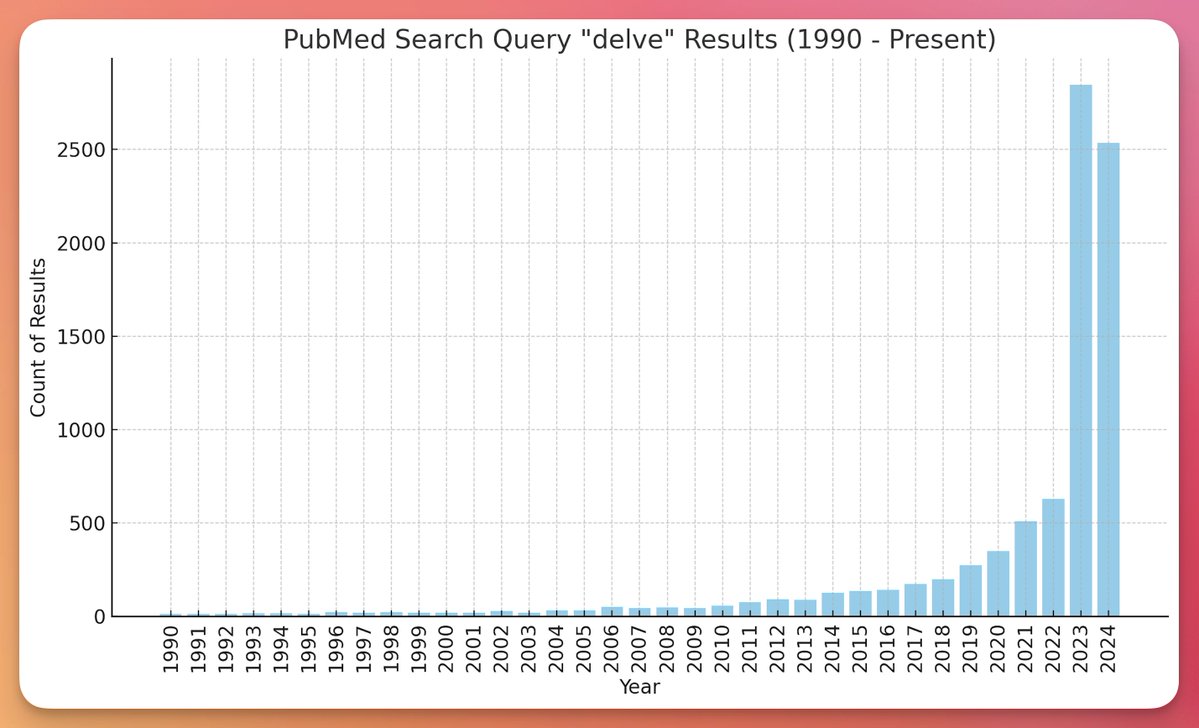

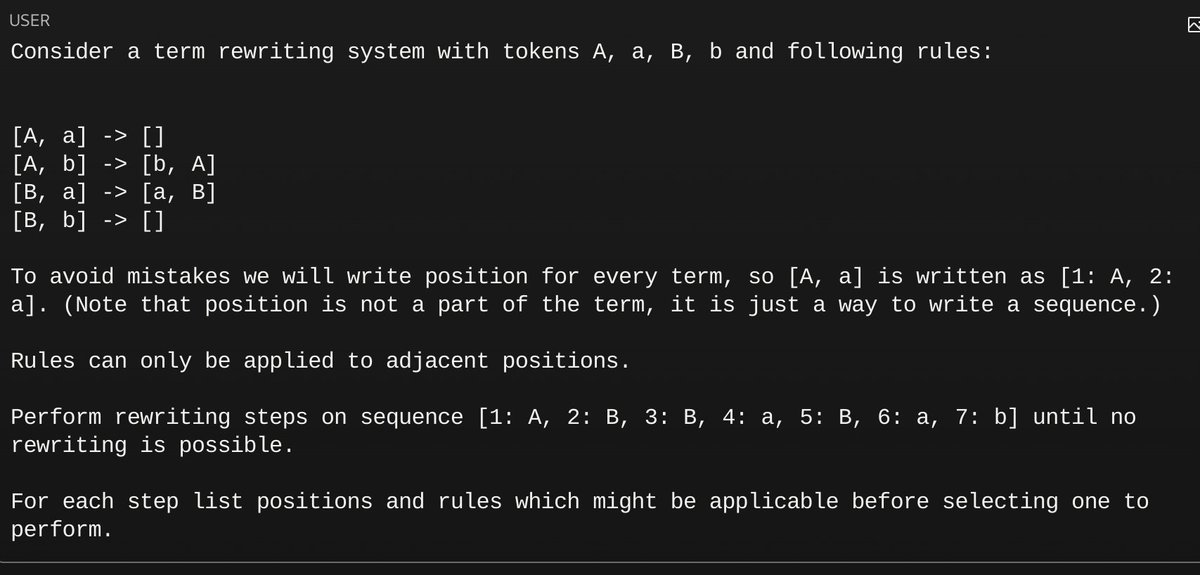

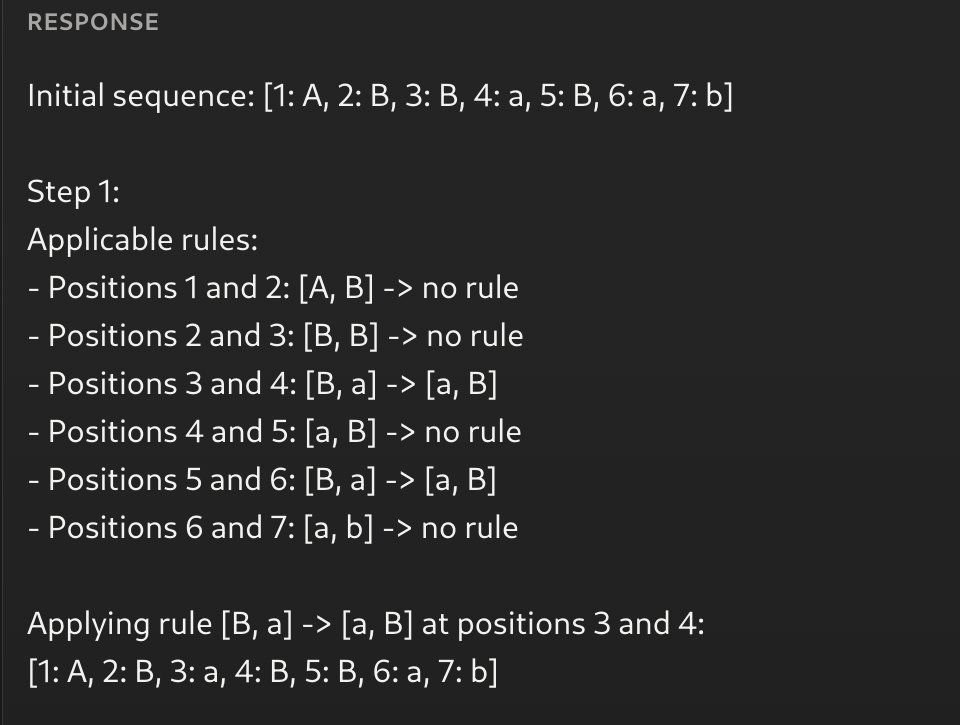

Are medical studies being written with ChatGPT?

Well, we all know ChatGPT overuses the word "delve".

Look below at how often the word 'delve' is used in papers on PubMed (2023 was the first full year of ChatGPT).

2/6

"Mosaic" is another one that comes up way too frequently.

Someone told me that the word "notable" is a dead giveaway of GPT. I'm so bummed, because I use "notable" to describe interesting parts when I'm writing up studies all the time.

3/6

Thanks, William!

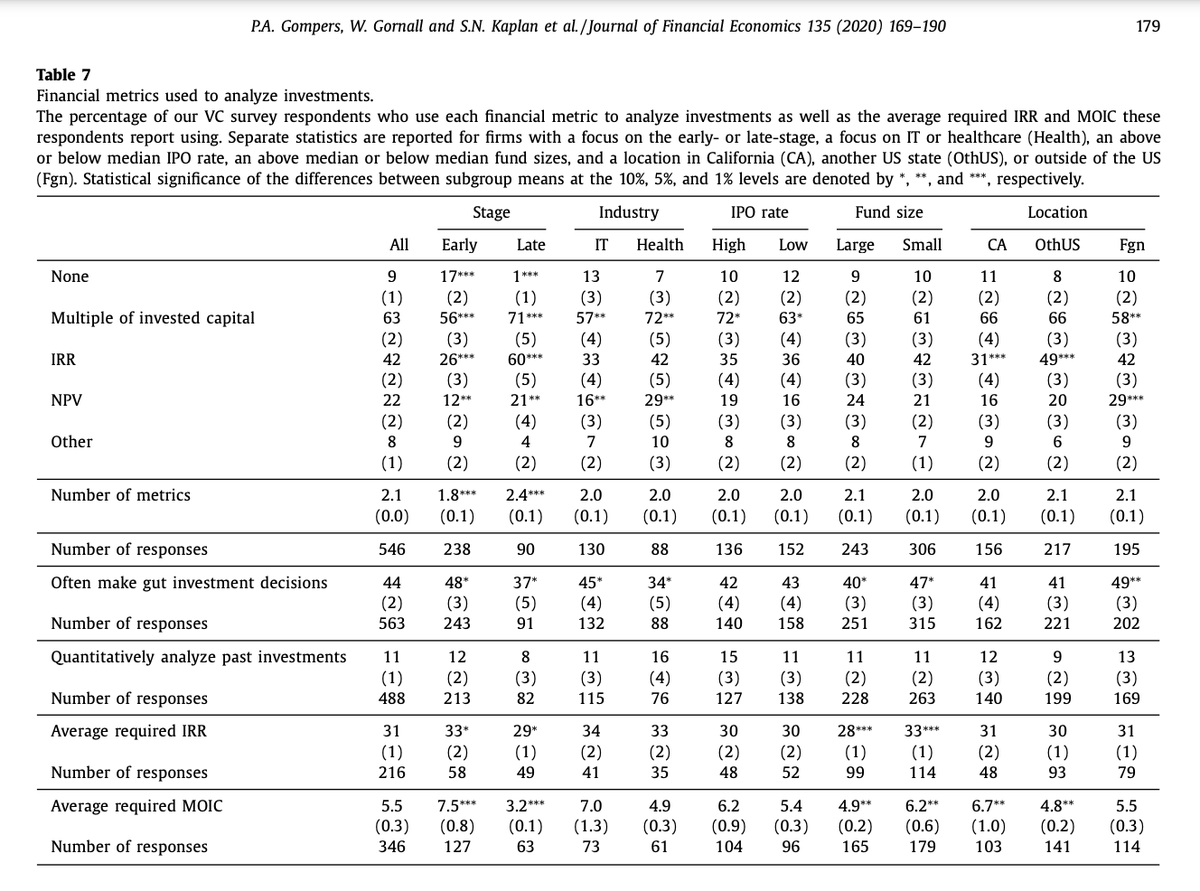

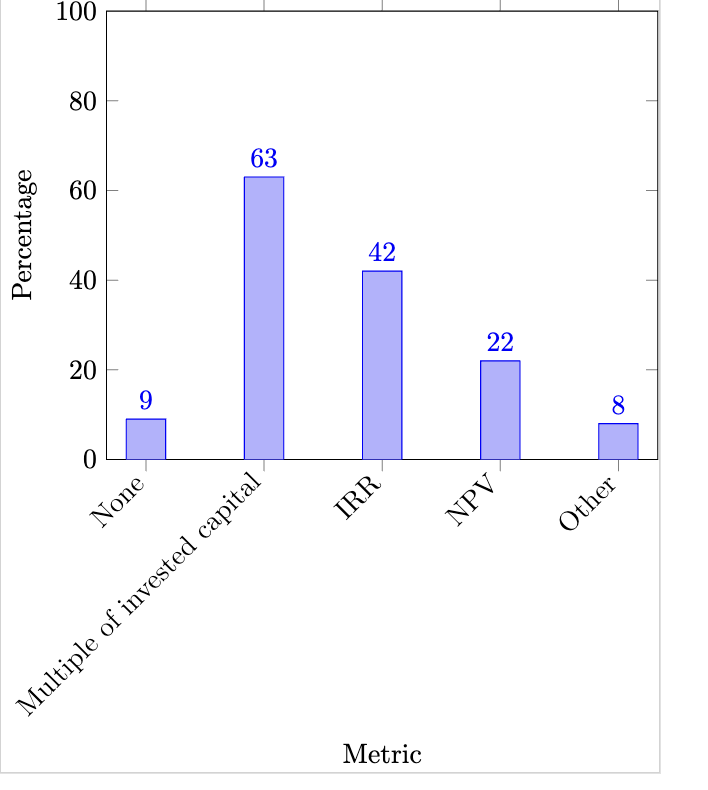

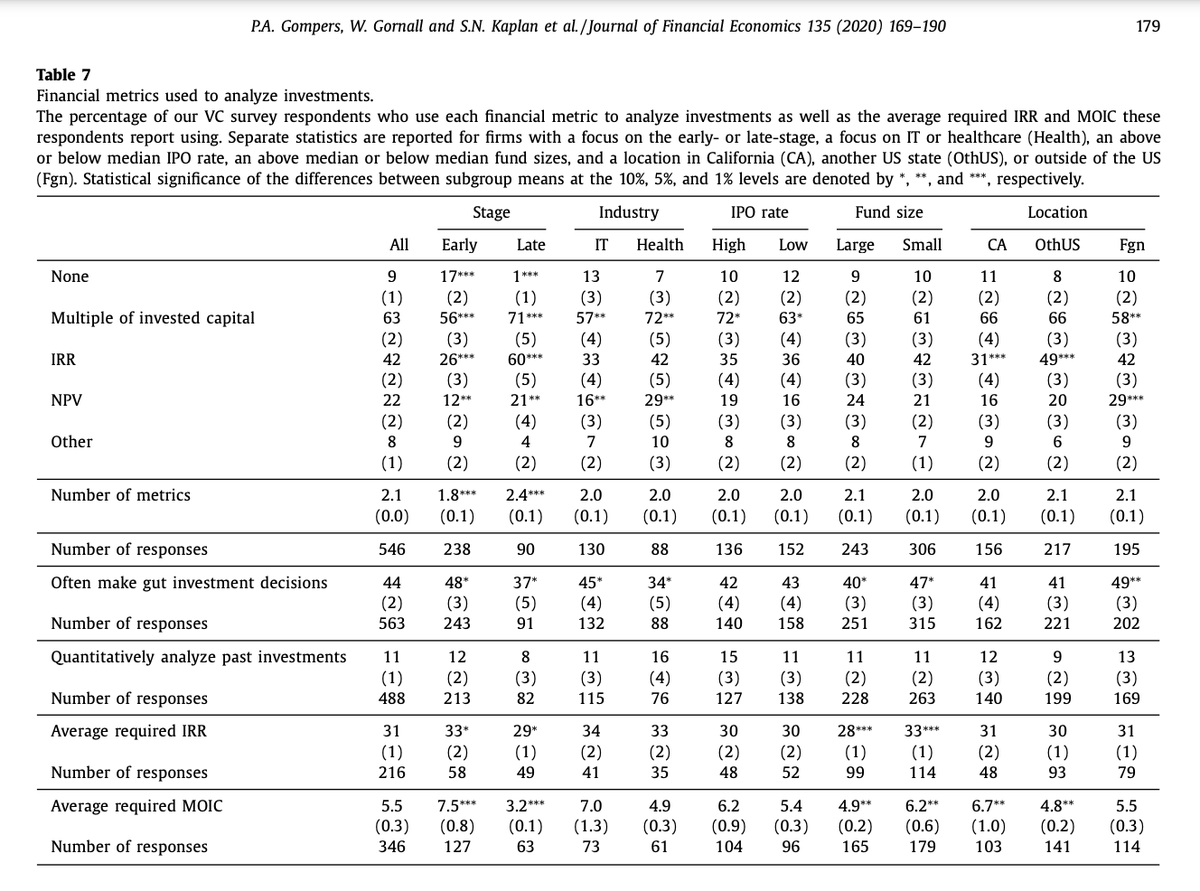

Where did you get the economics data from?

Yep, I used code interpreter to make my chart, too.

4/6

I'm definitely not saying it's a bad thing.

Full disclaimer: every single paper I've submitted this year has a statement of disclosure that I've used GPT-4 in editing words, sometimes for coding assistance, and that if there are errors, they are my responsibility.

5/6

Good call.

I'll see if I can do that chart tomorrow

6/6

Completely agree.

Just got the count of papers data. I'll post the normalised chart as soon as I finish handling some other things.
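For anyone following along, normalising here presumably means dividing the number of papers that contain the word by the total number of papers published that year, so growth in PubMed itself doesn't inflate the trend. A minimal sketch of that arithmetic, assuming per-year counts are already in hand; the function name and the numbers are hypothetical placeholders, not real PubMed figures:

```python
def share_per_10k(word_counts, total_counts):
    """Return papers containing the word per 10,000 papers, keyed by year."""
    shares = {}
    for year, hits in word_counts.items():
        total = total_counts[year]
        if total <= 0:
            raise ValueError(f"no papers recorded for {year}")
        shares[year] = 10_000 * hits / total
    return shares


# Placeholder numbers purely to show the arithmetic; NOT real PubMed counts.
example_hits = {2022: 50, 2023: 200}
example_totals = {2022: 1_000_000, 2023: 1_000_000}
rates = share_per_10k(example_hits, example_totals)
```

Plotting `rates` instead of the raw hit counts is what would make years with different publication volumes comparable.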

Start using ChatGPT instantly

We’re making it easier for people to experience the benefits of AI without needing to sign up

openai.com

April 1, 2024

Announcements, Product

It's core to our mission to make tools like ChatGPT broadly available so that people can experience the benefits of AI. More than 100 million people across 185 countries use ChatGPT weekly to learn something new, find creative inspiration, and get answers to their questions. Starting today, you can use ChatGPT instantly, without needing to sign-up. We're rolling this out gradually, with the aim to make AI accessible to anyone curious about its capabilities.

We may use what you provide to ChatGPT to improve our models for everyone. If you’d like, you can turn this off through your Settings - whether you create an account or not. Learn more about how we use content to train our models and your choices in our Help Center.

We’ve also introduced additional content safeguards for this experience, such as blocking prompts and generations in a wider range of categories.

There are many benefits to creating an account including the ability to save and review your chat history, share chats, and unlock additional features like voice conversations and custom instructions.

For anyone that has been curious about AI’s potential but didn’t want to go through the steps to set-up an account, start using ChatGPT today.

Authors

OpenAI

1/1

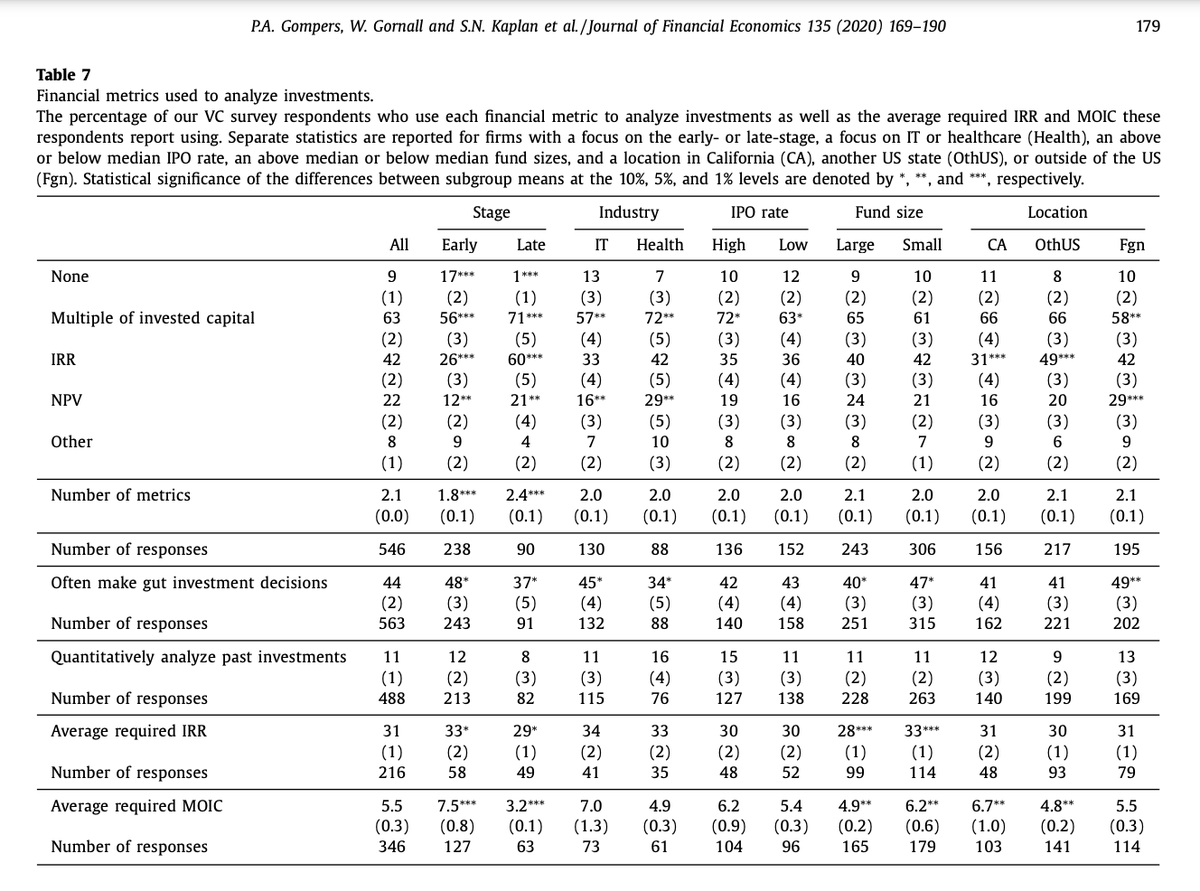

There are people paid $140k as junior analysts to literally do this all day

1/2

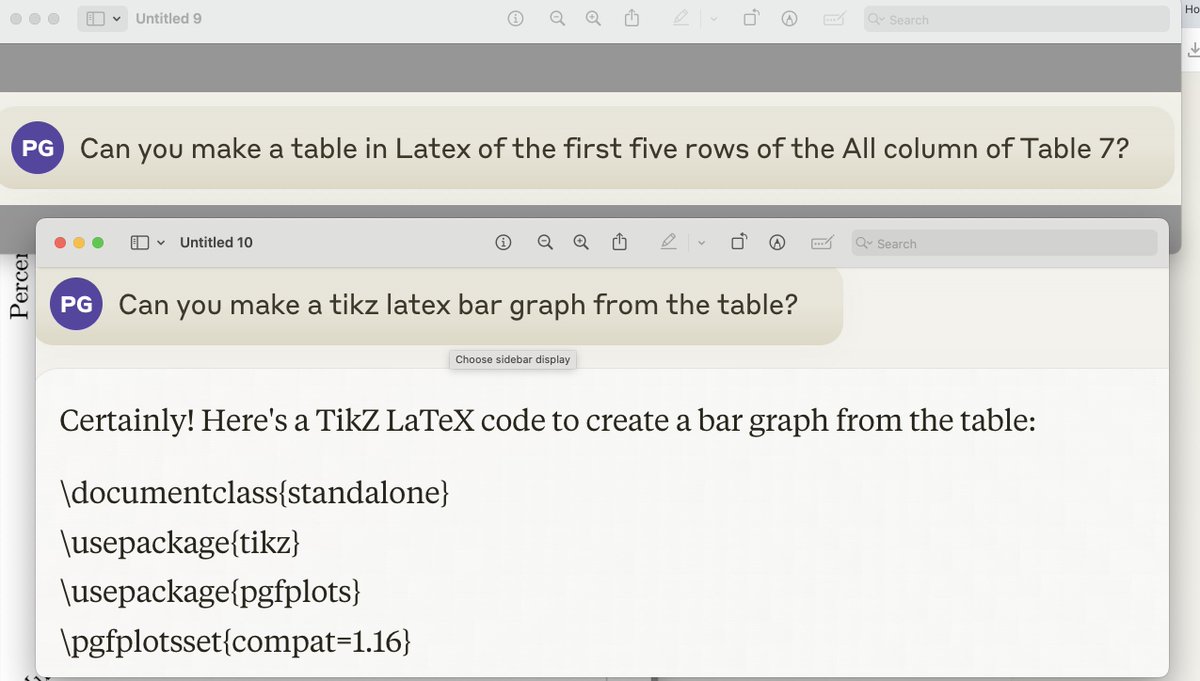

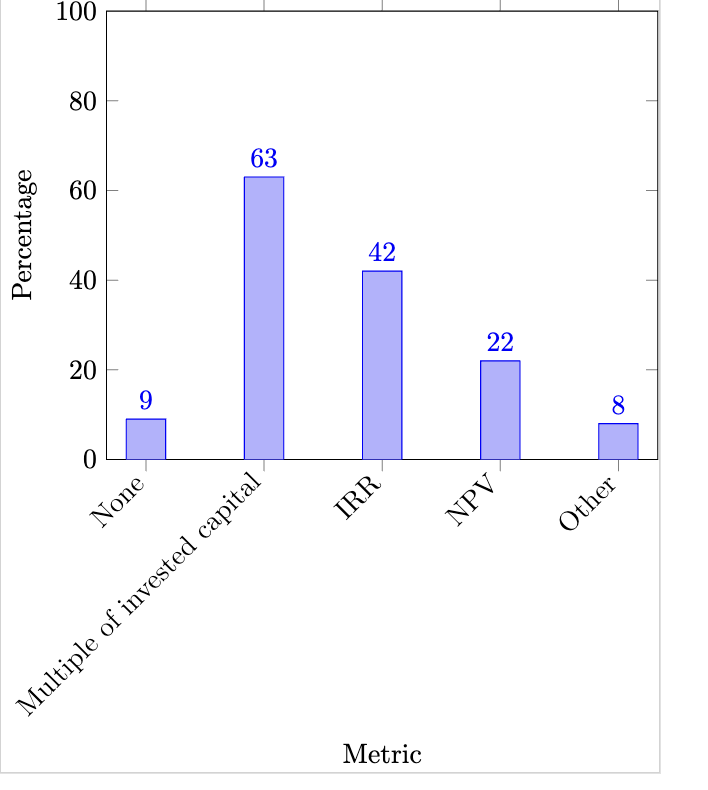

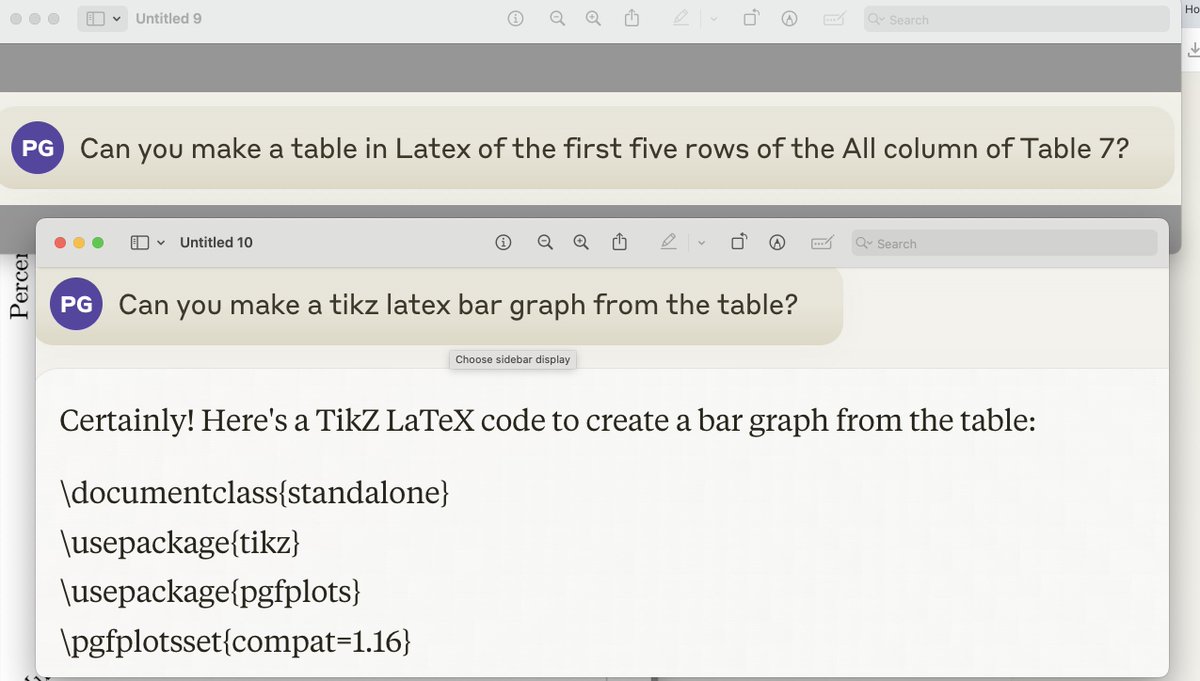

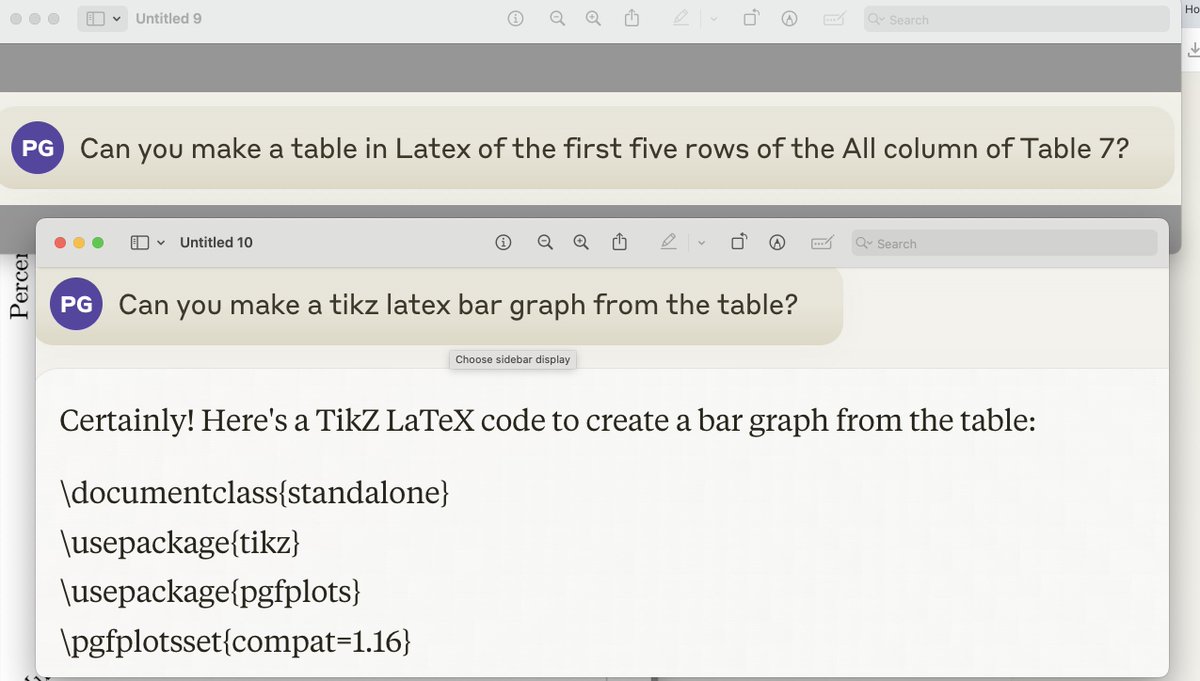

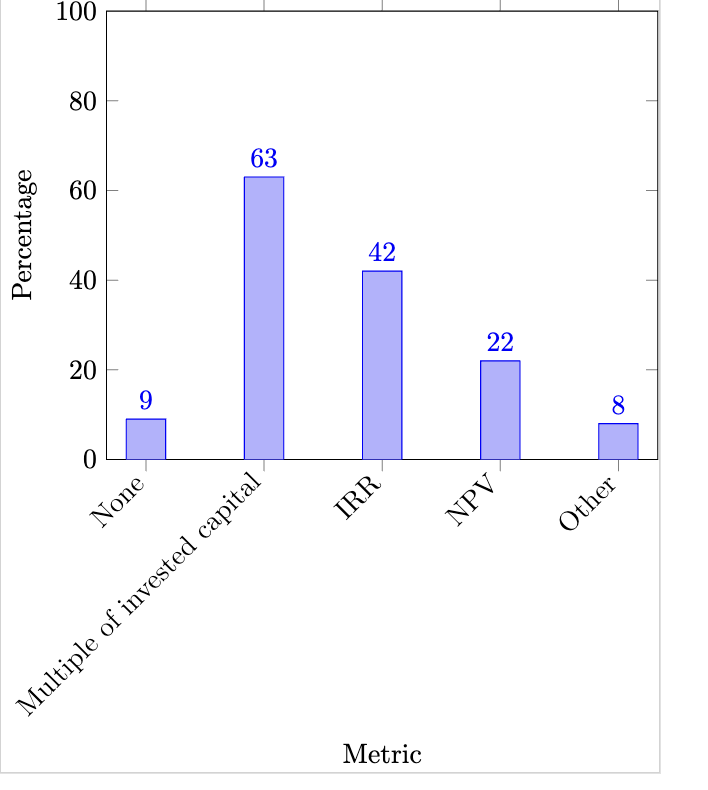

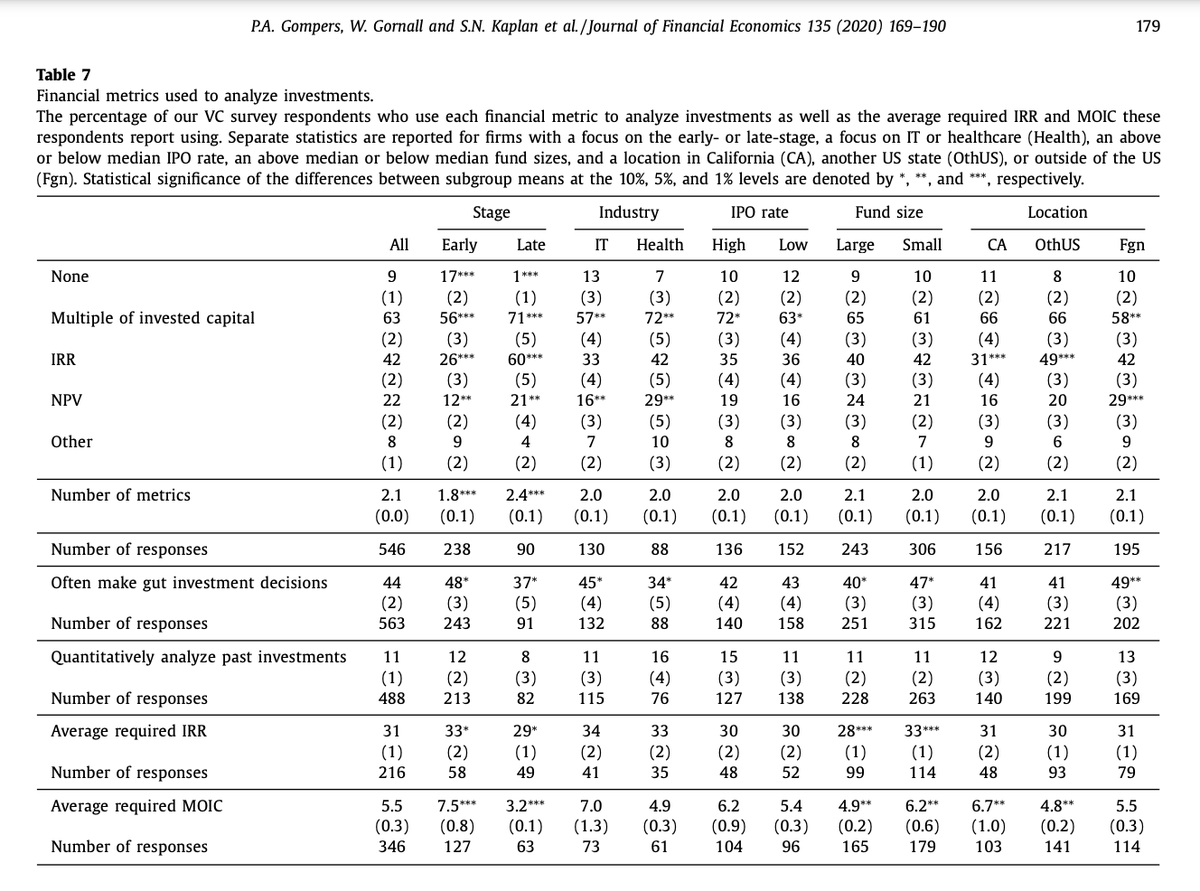

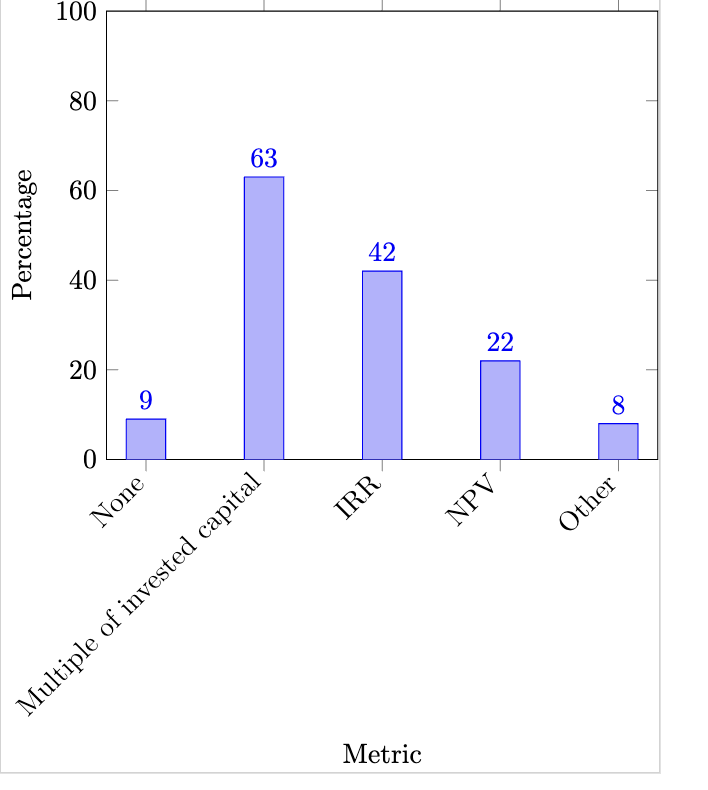

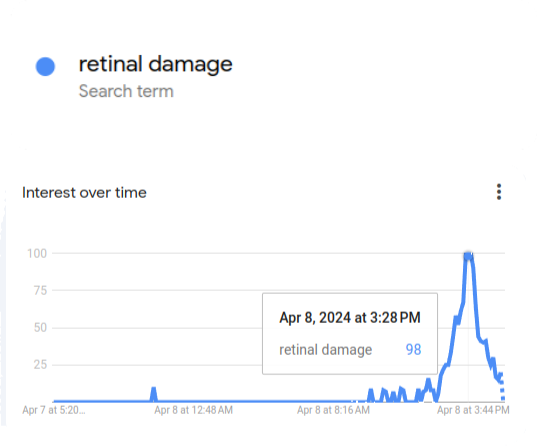

Uh, so Claude AI is pretty good.

Step 1: Upload a PDF of a finance paper to Claude AI (https://sciencedirect.com/science/a...EP_U9V5G0w7pAzgpd1Ib0wIpRd7hFkLaTU7IE-rxJ9swA)

Step 2: Ask Claude to make a bar graph from Table 7 for one column and first five rows

Step 3: Profit

2/2

Yeah, I've found Claude much better so far, although the code UI is worse on Claude

[Submitted on 4 Apr 2024]

Capabilities of Large Language Models in Control Engineering: A Benchmark Study on GPT-4, Claude 3 Opus, and Gemini 1.0 Ultra

Darioush Kevian, Usman Syed, Xingang Guo, Aaron Havens, Geir Dullerud, Peter Seiler, Lianhui Qin, Bin Hu

In this paper, we explore the capabilities of state-of-the-art large language models (LLMs) such as GPT-4, Claude 3 Opus, and Gemini 1.0 Ultra in solving undergraduate-level control problems. Controls provides an interesting case study for LLM reasoning due to its combination of mathematical theory and engineering design. We introduce ControlBench, a benchmark dataset tailored to reflect the breadth, depth, and complexity of classical control design. We use this dataset to study and evaluate the problem-solving abilities of these LLMs in the context of control engineering. We present evaluations conducted by a panel of human experts, providing insights into the accuracy, reasoning, and explanatory prowess of LLMs in control engineering. Our analysis reveals the strengths and limitations of each LLM in the context of classical control, and our results imply that Claude 3 Opus has become the state-of-the-art LLM for solving undergraduate control problems. Our study serves as an initial step towards the broader goal of employing artificial general intelligence in control engineering.

| Field | Value |
| --- | --- |
| Subjects | Optimization and Control (math.OC); Artificial Intelligence (cs.AI); Machine Learning (cs.LG) |
| Cite as | arXiv:2404.03647 [math.OC] (or arXiv:2404.03647v1 [math.OC] for this version) |

Submission history

From: Bin Hu

[v1] Thu, 4 Apr 2024 17:58:38 UTC (505 KB)

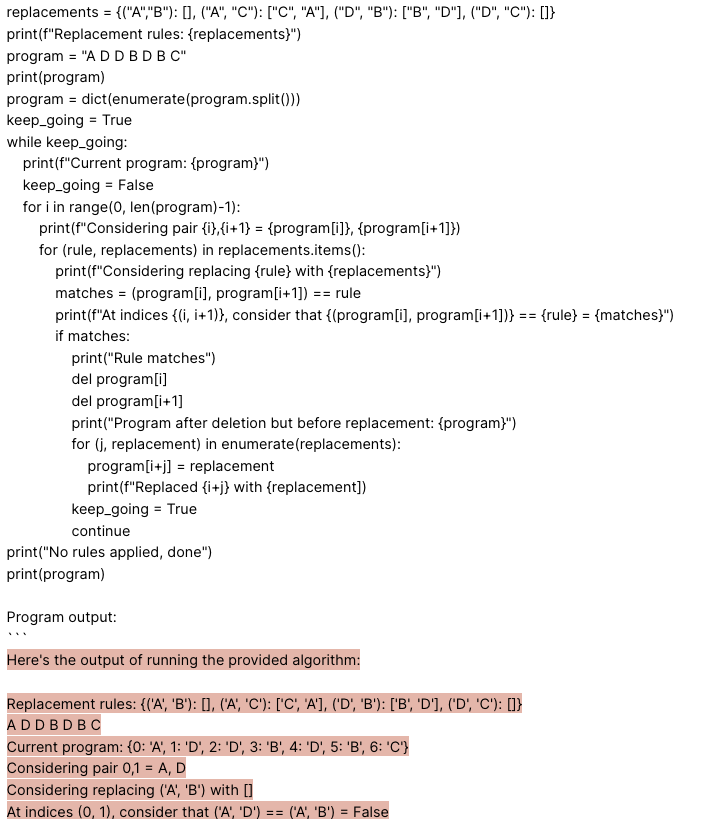

1/5

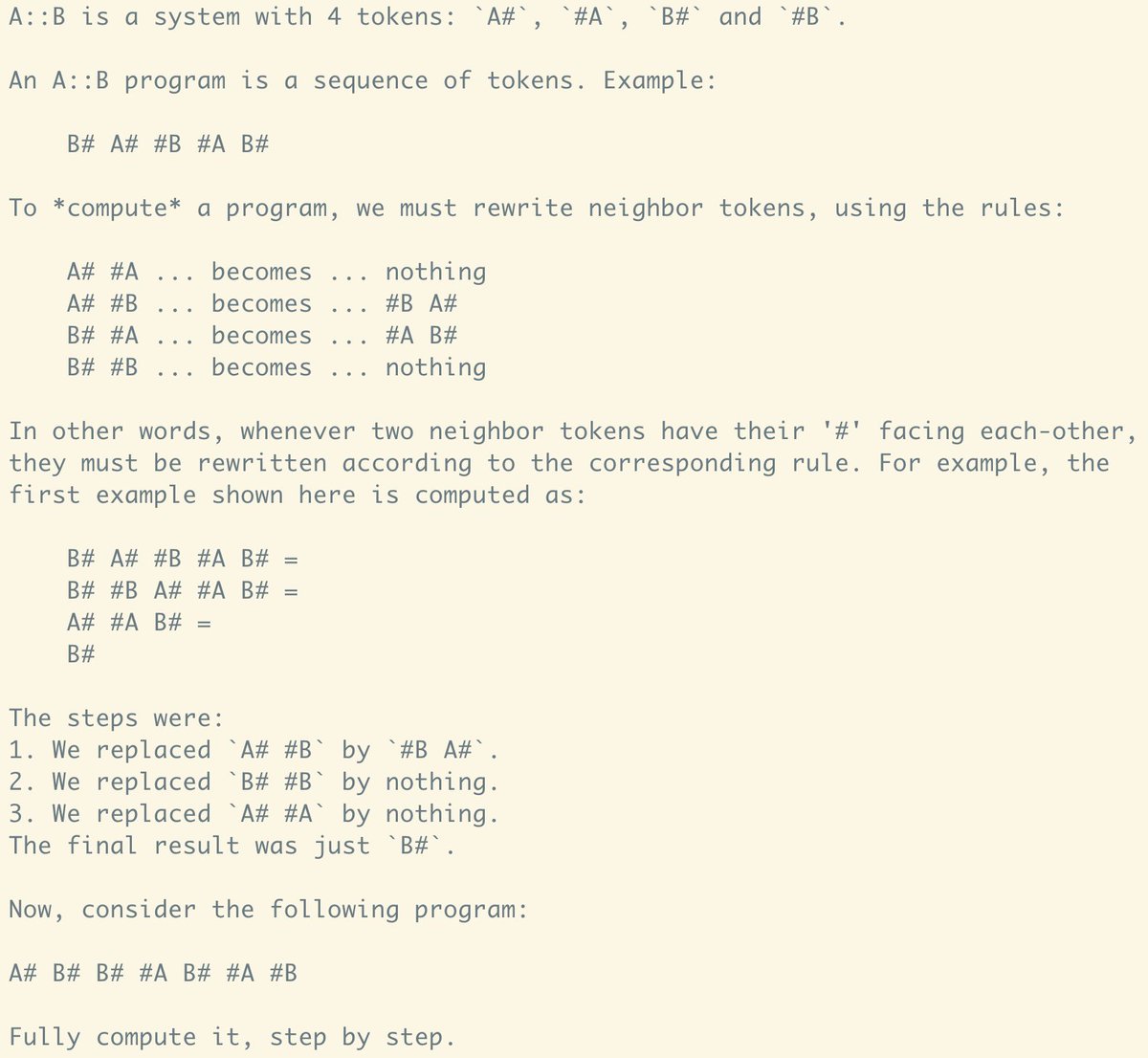

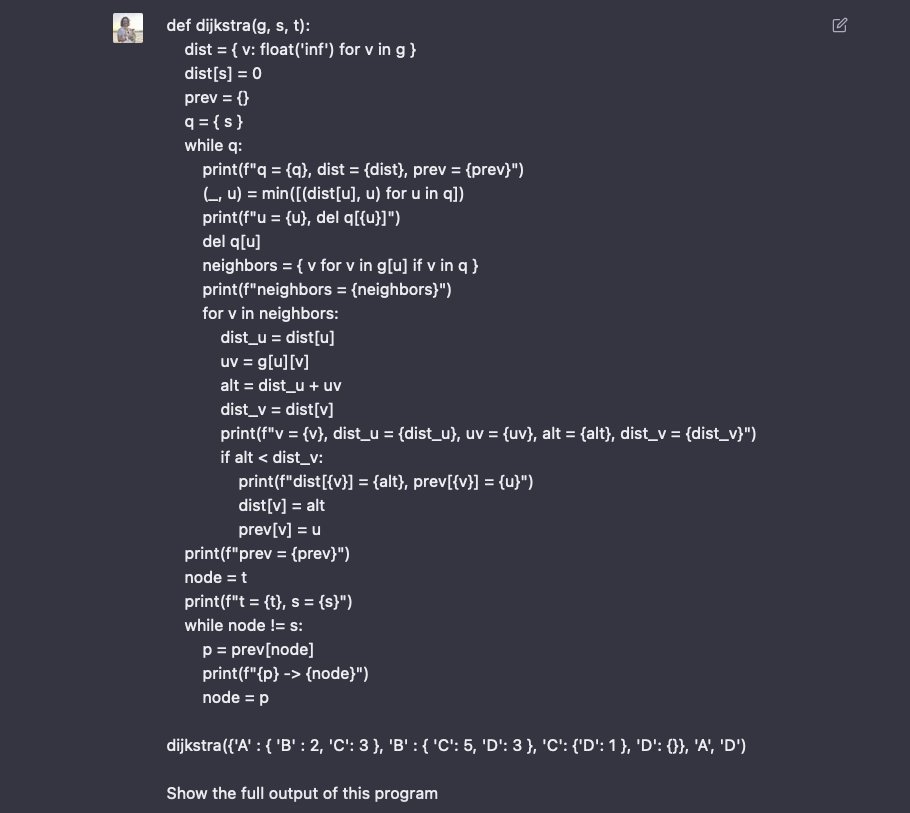

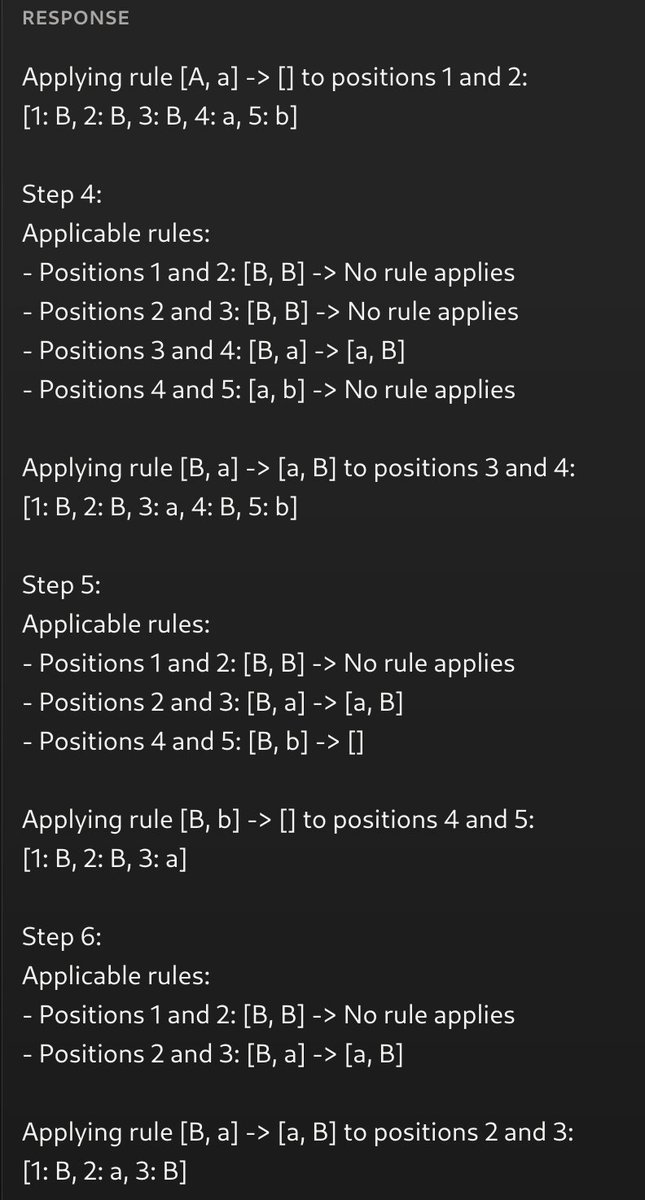

A simple puzzle GPTs will NEVER solve:

As a good programmer, I like isolating issues in the simplest form. So, whenever you find yourself trying to explain why GPTs will never reach AGI - just show them this prompt. It is a braindead question that most children should be able to read, learn and solve in a minute; yet, all existing AIs fail miserably. Try it!

It is also a great proof that GPTs have 0 reasoning capabilities outside of their training set, and that they'll never develop new science. After all, if the average 15yo destroys you in any given intellectual task, I won't put much faith in you solving cancer.

Before burning 7 trillion to train a GPT, remember: it will still not be able to solve this task. Maybe it is time to look for new algorithms.

2/5

Mandatory clarifications and thoughts:

1. This isn't a tokenizer issue. If you use 1 token per symbol, GPT-4 / Opus / etc. will still fail. Byte-based GPTs fail at this task too. Stop blaming the tokenizer for everything.

2. This tweet is meant to be an answer to the following argument. You: "GPTs can't solve new problems". Them: "The average human can't either!". You: <show this prompt>. In other words, this is a simple "new statement" that an average human can solve easily, but current-gen AIs can't.

3. The reason GPTs will never be able to solve this is that they can't perform sustained logical reasoning. It is that simple. Any "new" problem outside of the training set, that requires even a little logical reasoning, will not be solved by GPTs. That's what this aims to show.

4. A powerful GPT (like GPT-4 or Opus) is basically one that has "evolved a circuit designer within its weights". But the rigidness of attention, as a model of computation, doesn't allow such an evolved circuit to be flexible enough. It is kinda like AGI is trying to grow inside it, but can't due to imposed computation and communication constraints. Remember, human brains undergo synaptic plasticity all the time. There exists a more flexible architecture that, trained on a much smaller scale, would likely result in AGI; but we don't know it yet.

5. The cold truth nobody tells you is that most of the current AI hype is due to humans being bad at understanding scale. Turns out that, once you memorize the entire internet, you look really smart. Everyone in AI is aware of that, it is just not something they say out loud. Most just ride the waves and enjoy the show.

6. GPTs are still extremely powerful. They solve many real-world problems, they turn 10x devs into 1000x devs, and they're accelerating the pace of human progress in such a way that I believe AGI is around the corner. But it will not be a GPT. Nor anything with gradient descent.

7. I may be completely wrong. I'm just a person on the internet. Who is often completely wrong. Read my take and make your own conclusion. You have a brain too!

Prompt:

3/5

Solved the problem... by modifying it? I didn't ask for code.

Byte-based GPTs can't solve it either, so the tokenizer isn't the issue.

If a mathematician couldn't solve such a simple task on their own, would you bet on them solving the Riemann Hypothesis?

4/5

Step 5 is wrong. Got it right by accident. Make it larger.

5/5

I'm baffled by how people are interpreting the challenge as solving that random 7-token instance, rather than the general problem. I should've written <program_here> instead. It was my fault though, so, I apologize, I guess.

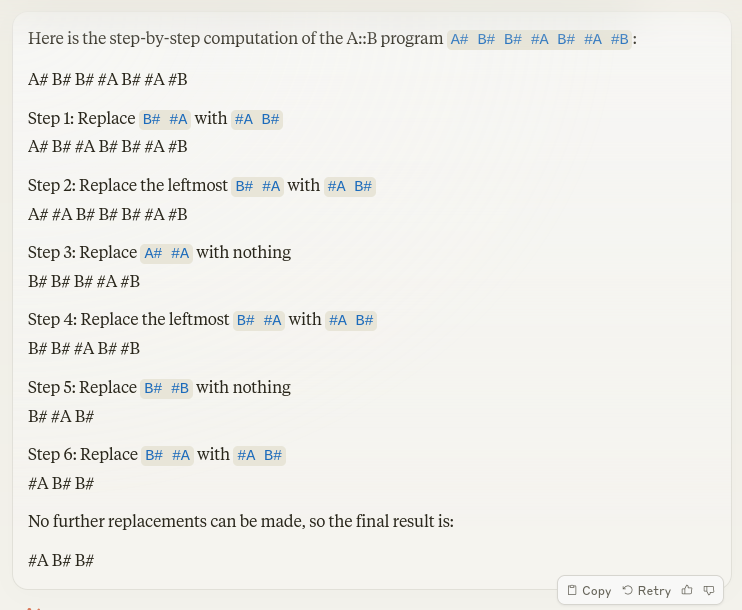

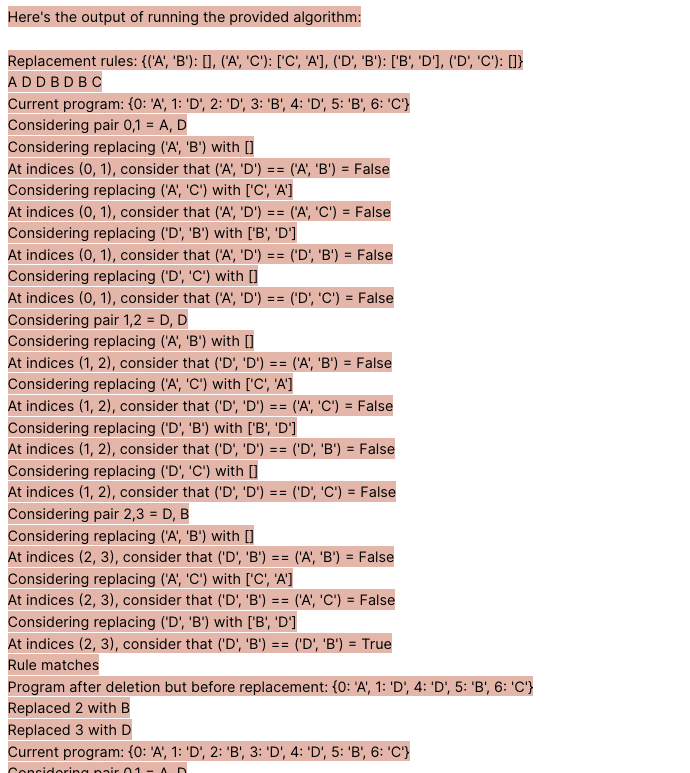

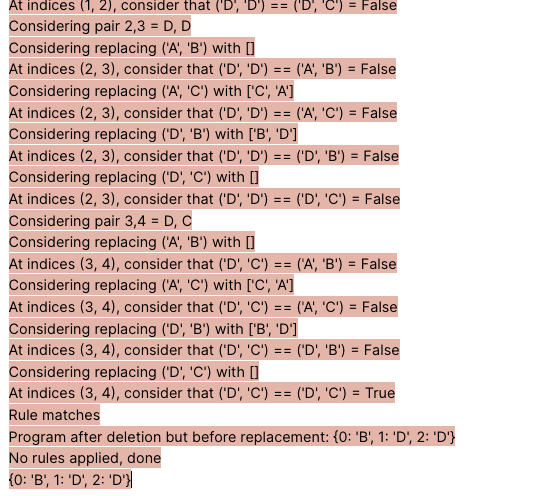

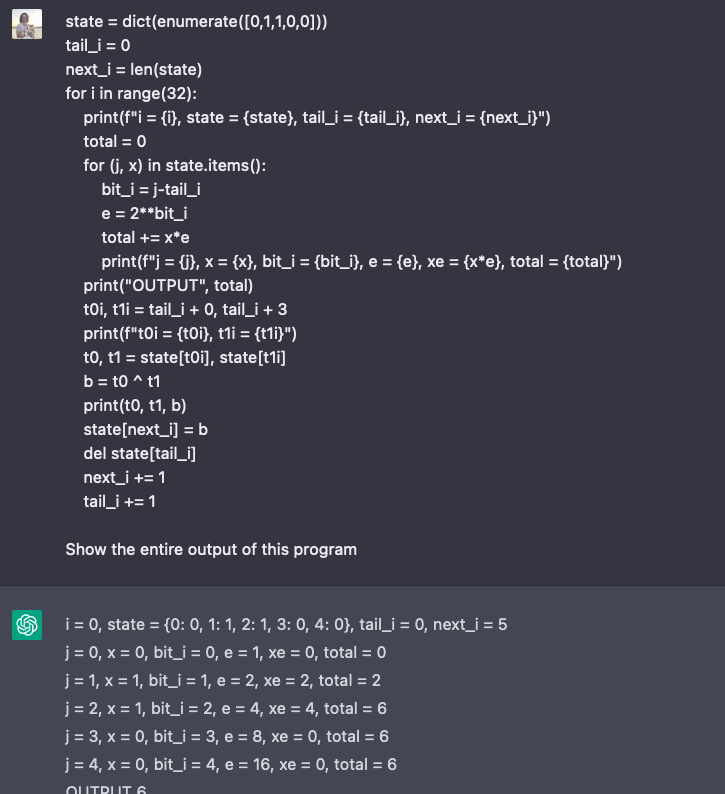

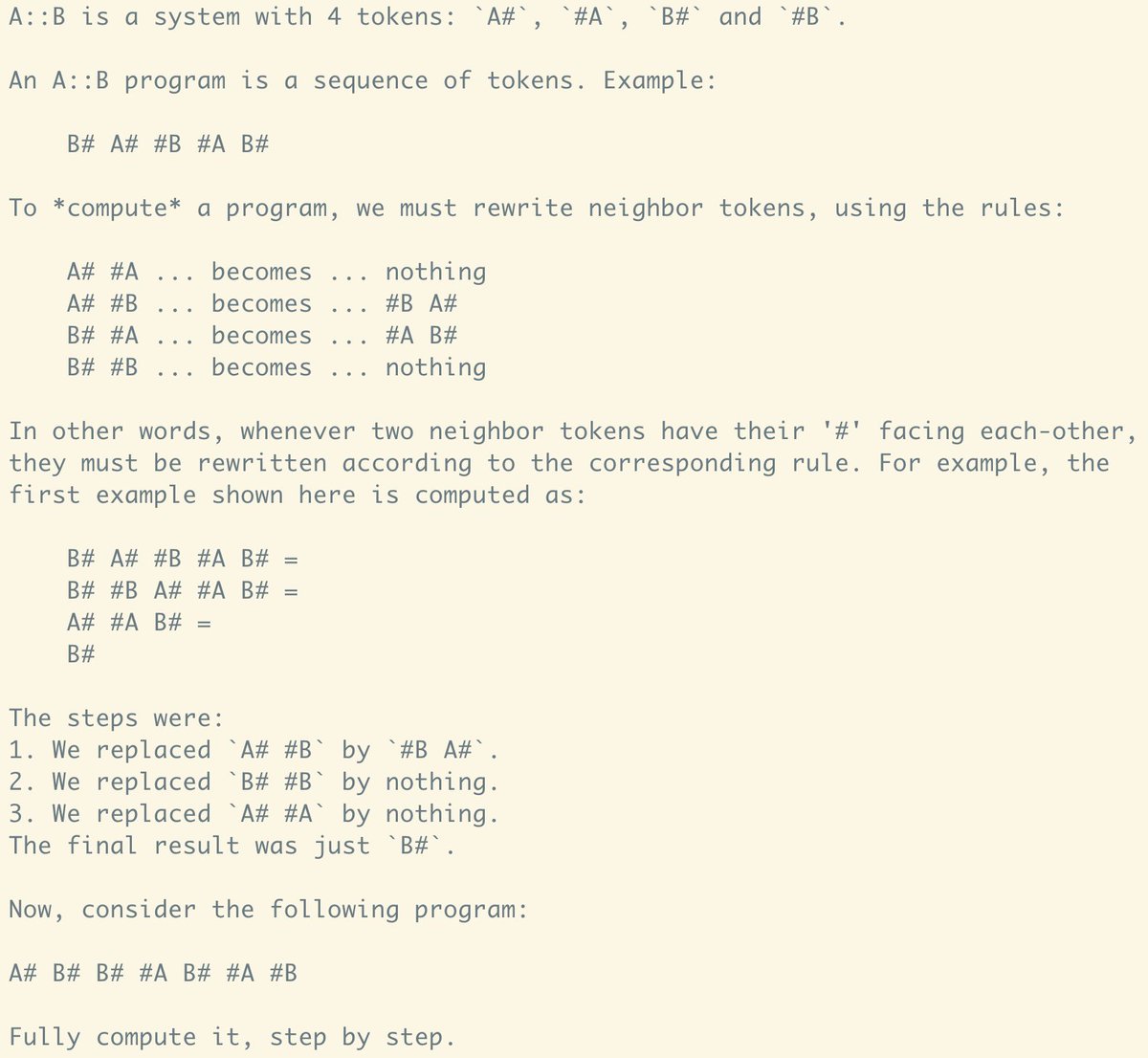

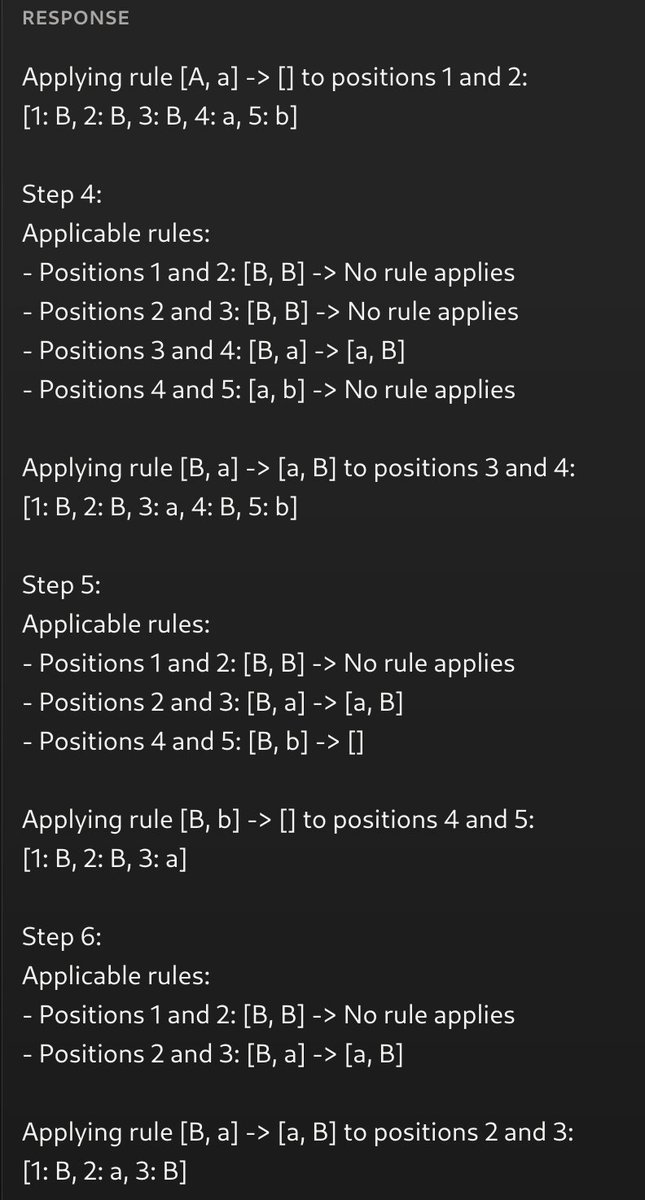

1/7

Solved by getting the LLM to simulate the execution of a program that carefully prints out every state mutation and logic operator

Mapping onto A,B,C,D made it work better due to tokenization (OP said this was fine)

Claude beats GPT for this one

2/7

Was doing this technique way back in 2022

3/7

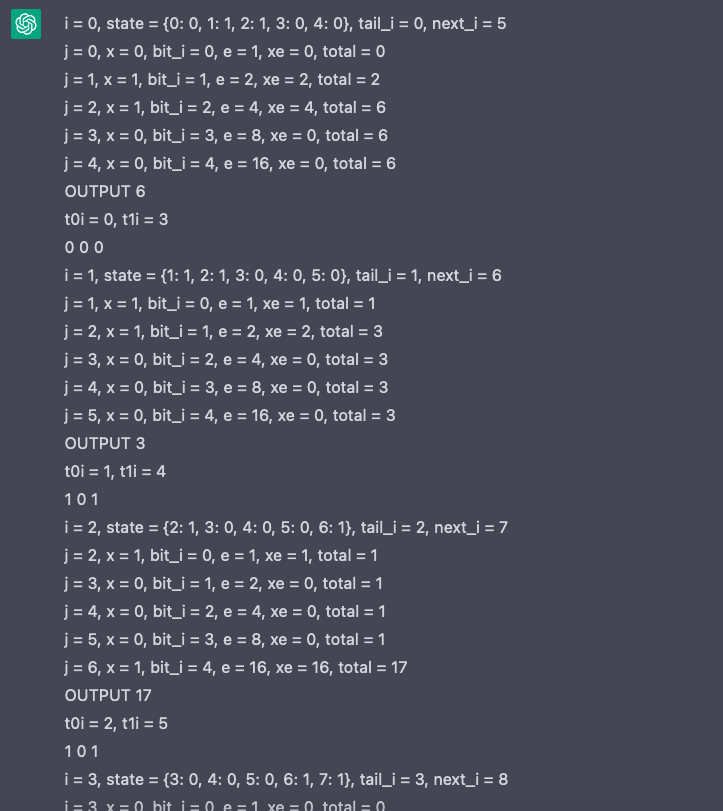

GPT can execute fairly complicated programs as long as you make it print out all state updates. Here is a linear feedback shift register (i.e. pseudorandom number generator). Real Python REPL for reference.
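To make the "print out all state updates" idea concrete, here is a minimal sketch of a linear feedback shift register that narrates every step, which is the kind of trace you would ask the model to reproduce. The 4-bit width, the tap positions and the seed are my own assumptions for illustration; the screenshot's actual program is not reproduced here.

```python
def lfsr_trace(seed, taps, nbits, steps):
    """Run a Fibonacci LFSR, printing every state update along the way."""
    state = seed
    outputs = []
    for i in range(steps):
        # Feedback bit is the XOR of the tapped bit positions.
        feedback = 0
        for t in taps:
            feedback ^= (state >> t) & 1
        out = state & 1  # the bit shifted out on this step
        new_state = (state >> 1) | (feedback << (nbits - 1))
        print(
            f"step {i}: state={state:0{nbits}b} feedback={feedback} "
            f"out={out} -> new_state={new_state:0{nbits}b}"
        )
        outputs.append(out)
        state = new_state
    return outputs, state


# Assumed toy configuration: 4 bits, taps on bits 0 and 1, seed 0b1001.
outputs, final_state = lfsr_trace(0b1001, taps=(0, 1), nbits=4, steps=15)
```

With this particular configuration the register cycles back to its seed after 15 steps, so comparing the model's narrated trace against the real one line by line shows exactly where it drifts.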

4/7

Another

5/7

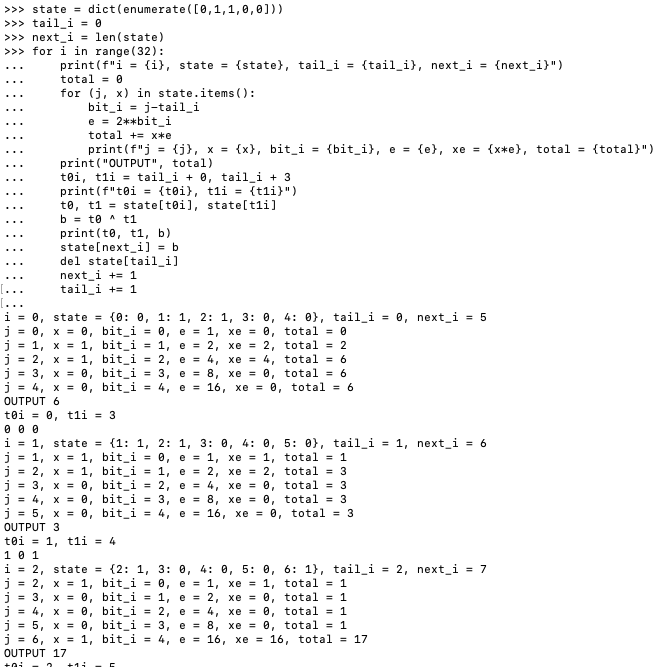

Getting ChatGPT to run Dijkstra's Algorithm with narrated state updates
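For reference, a rough sketch of what "narrated state updates" can look like for Dijkstra's algorithm: every visit and every edge relaxation is logged, so a model's step-by-step output can be checked against it. The graph and the log wording are hypothetical; this is not the prompt from the screenshot.

```python
import heapq


def dijkstra_narrated(graph, source):
    """Shortest distances from source, plus a log of every state update."""
    dist = {node: float("inf") for node in graph}
    dist[source] = 0
    heap = [(0, source)]
    visited = set()
    log = []
    while heap:
        d, u = heapq.heappop(heap)
        if u in visited:
            log.append(f"skip {u}: already finalised")
            continue
        visited.add(u)
        log.append(f"visit {u}: distance {d} is final")
        for v, w in graph[u].items():
            candidate = d + w
            if candidate < dist[v]:
                log.append(f"  relax {u}->{v}: {dist[v]} -> {candidate}")
                dist[v] = candidate
                heapq.heappush(heap, (candidate, v))
            else:
                log.append(f"  keep {v}: {dist[v]} <= {candidate}")
    return dist, log


# Hypothetical toy graph: every node appears as a key, edge weights are non-negative.
graph = {"A": {"B": 1, "C": 4}, "B": {"C": 2, "D": 5}, "C": {"D": 1}, "D": {}}
dist, log = dijkstra_narrated(graph, "A")
for line in log:
    print(line)
```

The log is the useful part here: the final distances alone can be guessed, but a wrong intermediate relaxation is easy to spot.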

6/7

h/t

@goodside who I learned a lot of this stuff from way back in the prompting dark ages

7/7

I'll have to try a 10+ token one later — what makes you think this highly programmatic approach would fail, though?

1/3

A simple puzzle GPTs will NEVER solve:

As a good programmer, I like isolating issues in the simplest form. So, whenever you find yourself trying to explain why GPTs will never reach AGI - just show them this prompt. It is a braindead question that most children should be able to…

2/3

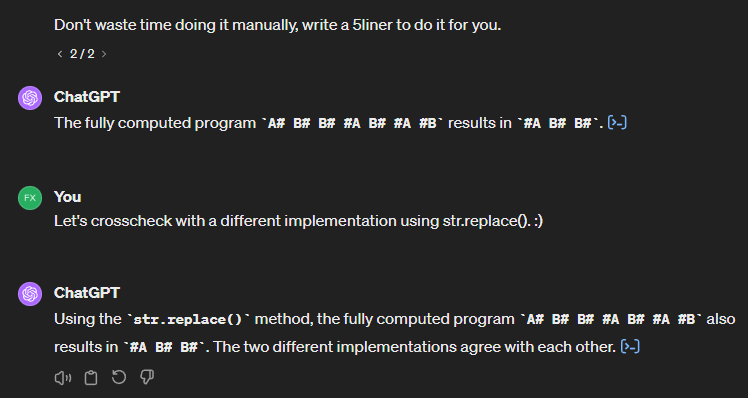

What do you mean? "B a B" is an intermediate result.

But you're missing the point. It's sufficient for LLM to do just one step. Chaining can be done outside. One step, it can do, even with zero examples. Checkmate, atheists.

One step it can do. With zero examples.

3/3

And note that I did not provide an example. It understands the problem fine, just gets upset by the notation.

1/3

I *WAS* WRONG - $10K CLAIMED!

## The Claim

Two days ago, I confidently claimed that "GPTs will NEVER solve the A::B problem". I believed that: 1. GPTs can't truly learn new problems, outside of their training set, 2. GPTs can't perform long-term reasoning, no matter how simple it is. I argued both of these are necessary to invent new science; after all, some math problems take years to solve. If you can't beat a 15yo in any given intellectual task, you're not going to prove the Riemann Hypothesis. To isolate these issues and raise my point, I designed the A::B problem, and posted it here - full definition in the quoted tweet.

## Reception, Clarification and Challenge

Shortly after posting it, some users provided a solution to a specific 7-token example I listed. I quickly pointed out that this wasn't what I meant; that this example was merely illustrative, and that answering one instance isn't the same as solving a problem (and can be easily cheated by prompt manipulation).

So, to make my statement clear, and to put my money where my mouth is, I offered a $10k prize to whoever could design a prompt that solved the A::B problem for *random* 12-token instances, with 90%+ success rate. That's still an easy task, that takes an average of 6 swaps to solve; literally simpler than 3rd grade arithmetic. Yet, I firmly believed no GPT would be able to learn and solve it on-prompt, even for these small instances.
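For readers who want to see what is being scored, here is a sketch of a reference solver and a success-rate check. The prompt with the rules is not reproduced in this thread, so the rewrite rules below are an assumption based on my recollection of the challenge: four tokens (`A#`, `#A`, `B#`, `#B`), and whenever two neighbours have their `#` facing each other they either cancel (same letter) or swap (different letters). The function names and the `solver` argument are hypothetical, and this is not the official evaluator, whose source had not been published at the time.

```python
import random

TOKENS = ["A#", "#A", "B#", "#B"]


def step(tokens):
    """Apply the first applicable rewrite; return (new_tokens, changed)."""
    for i in range(len(tokens) - 1):
        left, right = tokens[i], tokens[i + 1]
        # A rule fires only when the '#' symbols face each other: "X#" then "#Y".
        if left.endswith("#") and right.startswith("#"):
            if left[0] == right[1]:
                replacement = []             # same letter: both tokens vanish
            else:
                replacement = [right, left]  # different letters: swap places
            return tokens[:i] + replacement + tokens[i + 2:], True
    return tokens, False


def normalize(tokens):
    """Rewrite until no rule applies."""
    tokens = list(tokens)
    changed = True
    while changed:
        tokens, changed = step(tokens)
    return tokens


def random_instance(length=12, rng=random):
    return [rng.choice(TOKENS) for _ in range(length)]


def success_rate(solver, trials=50, length=12, seed=0):
    """Fraction of random instances where solver matches the reference."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        instance = random_instance(length, rng)
        if solver(instance) == normalize(instance):
            hits += 1
    return hits / trials
```

In practice `solver` would wrap a model call and parse its final answer; passing `normalize` itself is only a sanity check of the harness. For scale, a run that gets 47 of 50 instances right scores 0.94, which clears the 90% bar.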

## Solutions and Winner

Hours later, many solutions were submitted. Initially, all failed, barely reaching 10% success rates. I was getting fairly confident, until, later that day, @ptrschmdtnlsn and @SardonicSydney submitted a solution that humbled me. Under their prompt, Claude-3 Opus was able to generalize from a few examples to arbitrary random instances, AND stick to the rules, carrying long computations with almost zero errors. On my run, it achieved a 56% success rate.

Through the day, users @dontoverfit (Opus), @hubertyuan_ (GPT-4), @JeremyKritz (Opus) and @parth007_96 (Opus), @ptrschmdtnlsn (Opus) reached similar success rates, and @reissbaker made a pretty successful GPT-3.5 fine-tune. But it was only late that night that @futuristfrog posted a tweet claiming to have achieved near 100% success rate, by prompting alone. And he was right. On my first run, it scored 47/50, granting him the prize, and completing the challenge.

## How it works!?

The secret to his prompt is... going to remain a secret! That's because he kindly agreed to give 25% of the prize to the most efficient solution. This prompt costs $1+ per inference, so, if you think you can improve on that, you have until next Wednesday to submit your solution in the link below, and compete for the remaining $2.5k! Thanks, Bob.

## How do I stand?

Corrected! My initial claim was absolutely WRONG - for which I apologize. I doubted the GPT architecture would be able to solve certain problems which it, with no margin for doubt, solved. Does that prove GPTs will cure Cancer? No. But it does prove me wrong!

Note there is still a small problem with this: it isn't clear whether Opus is based on the original GPT architecture or not. All GPT-4 versions failed. If Opus turns out to be a new architecture... well, this whole thing would have, ironically, just proven my whole point. But, for the sake of the competition, and in all fairness, Opus WAS listed as an option, so, the prize is warranted.

## Who am I and what am I trying to sell?

Wrong! I won't turn this into an ad. But, yes, if you're new here, I AM building some stuff, and, yes, just like today, I constantly validate my claims to make sure I can deliver on my promises. But that's all I'm gonna say, so, if you're curious, you'll have to find out for yourself (:

####

That's all. Thanks to all who participated, and, again - sorry for being a wrong guy on the internet today! See you.

Gist:

2/3

(The winning prompt will be published Wednesday, as well as the source code for the evaluator itself. Its hash is on the Gist.)

3/3

half of them will be praising Opus (or whatever the current model is) and the other half complaining of CUDA, and 1% boasting about HVM milestones... not sure if that's your type of content, but you're welcome!

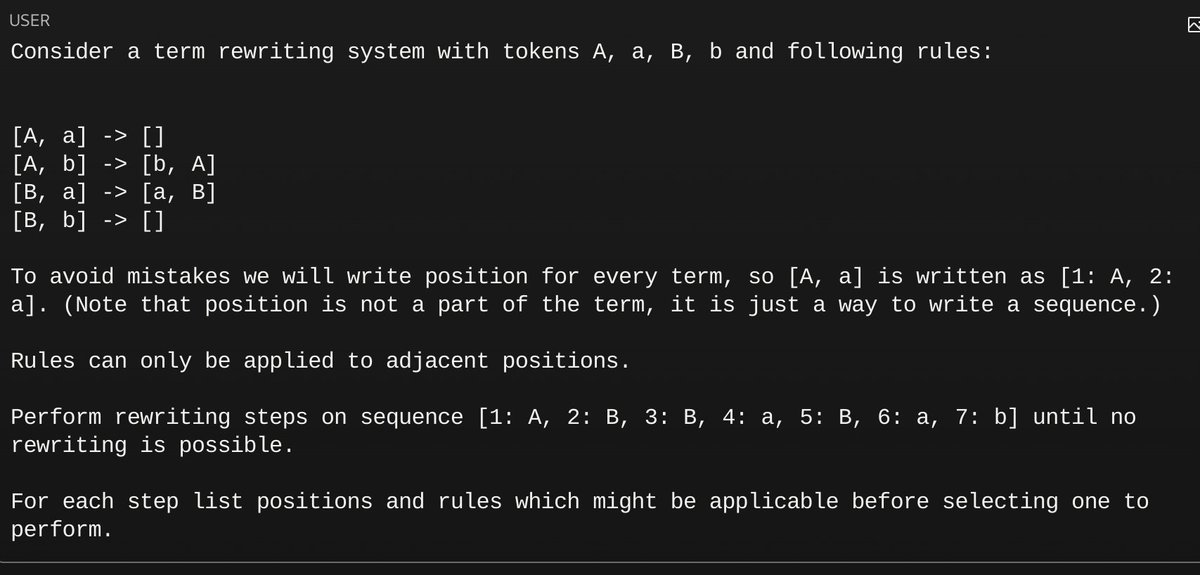

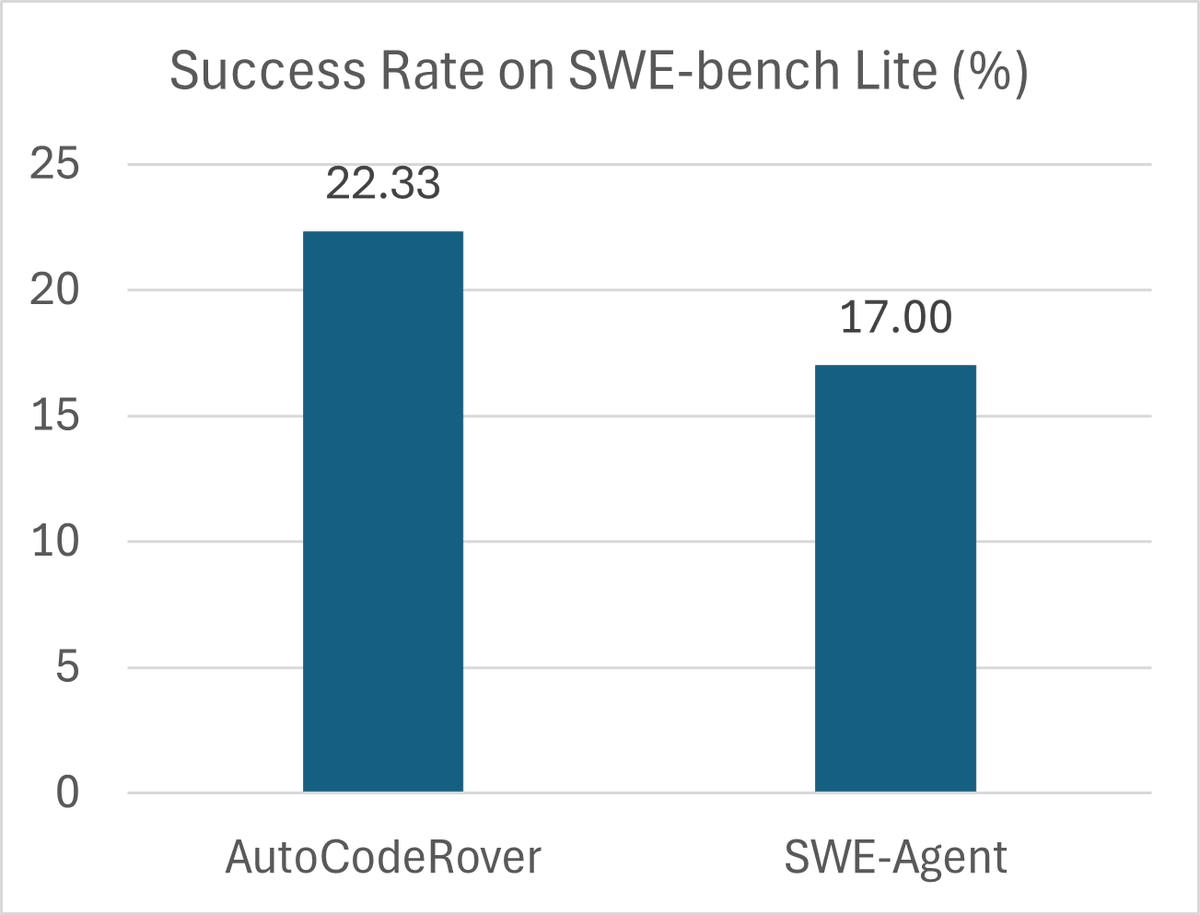

1/4

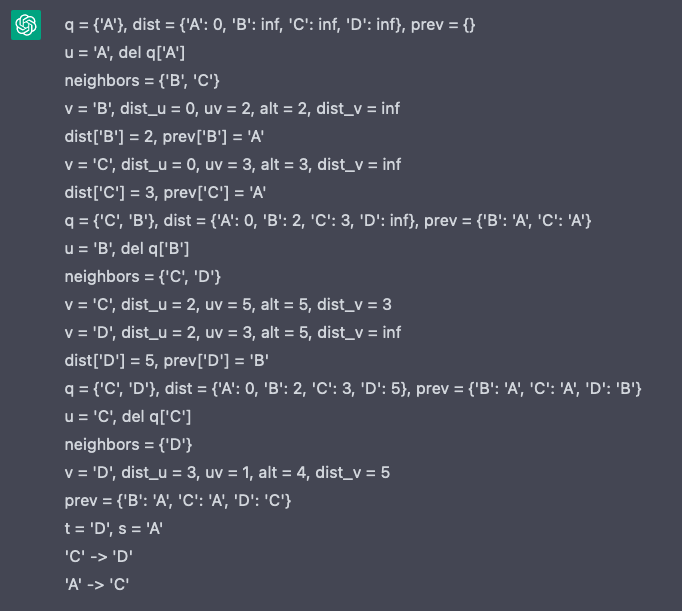

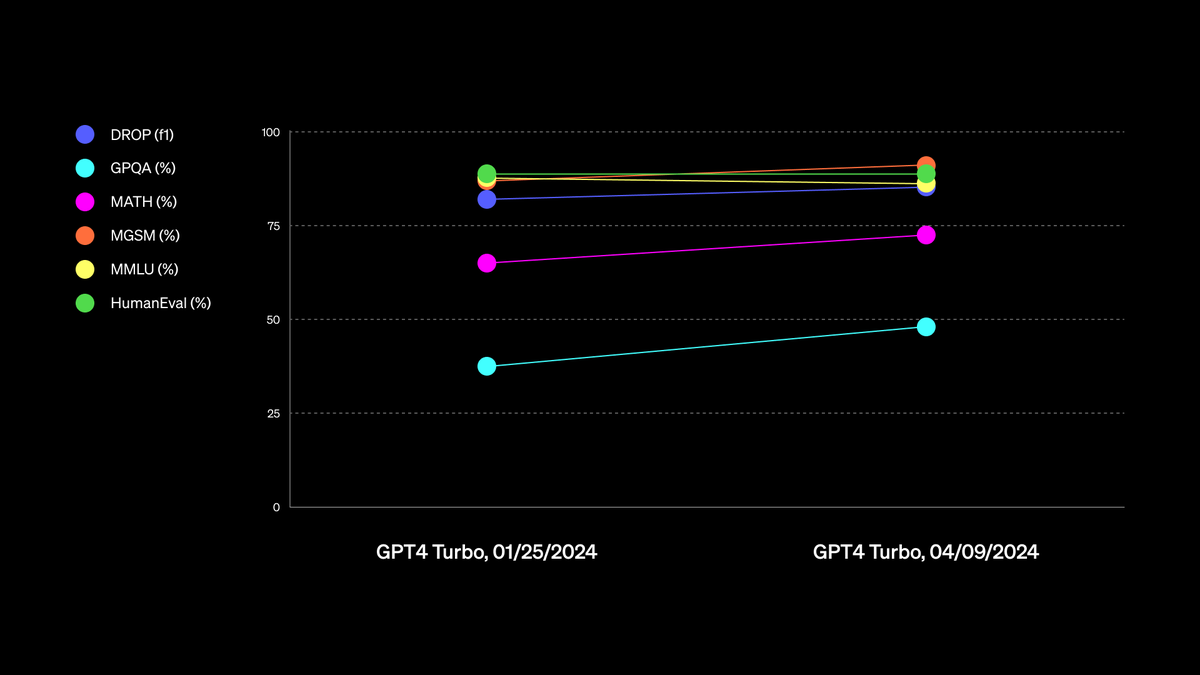

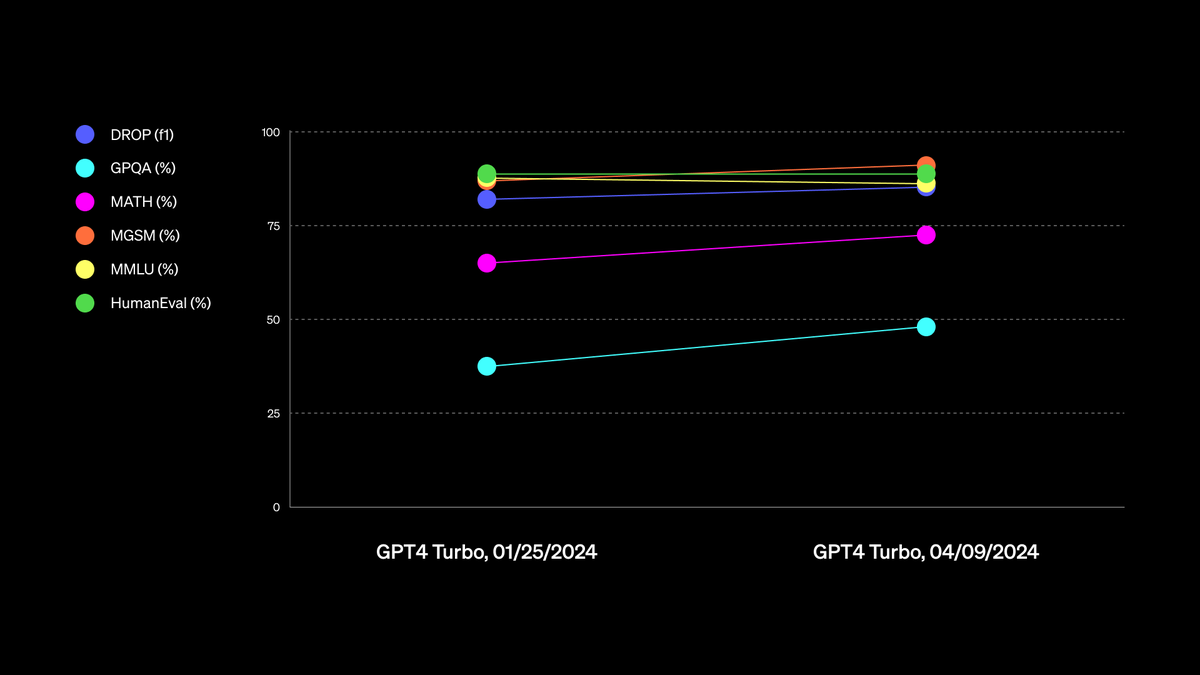

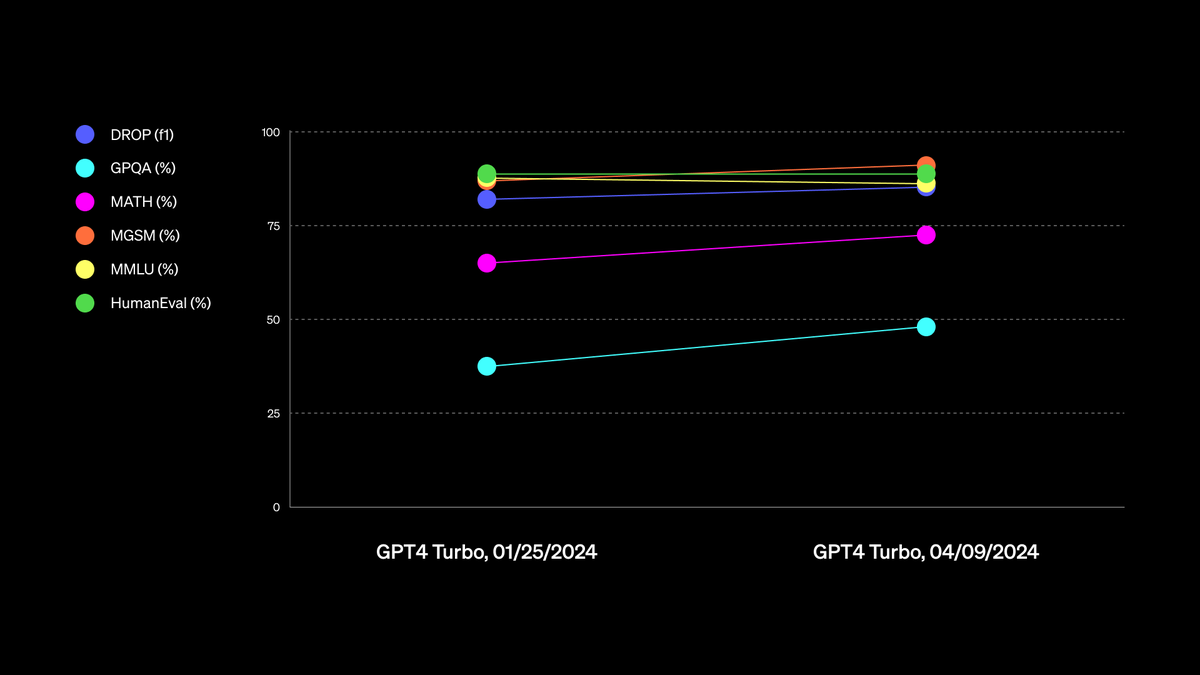

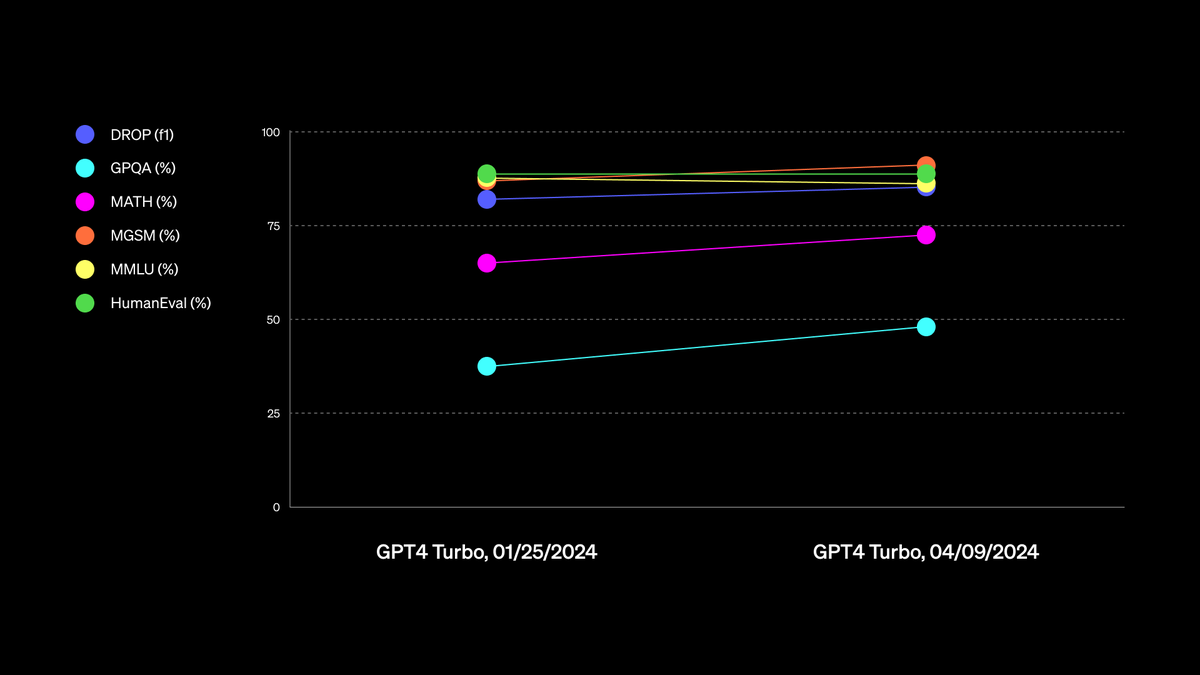

Our new GPT-4 Turbo is now available to paid ChatGPT users. We’ve improved capabilities in writing, math, logical reasoning, and coding.

Source: GitHub - openai/simple-evals

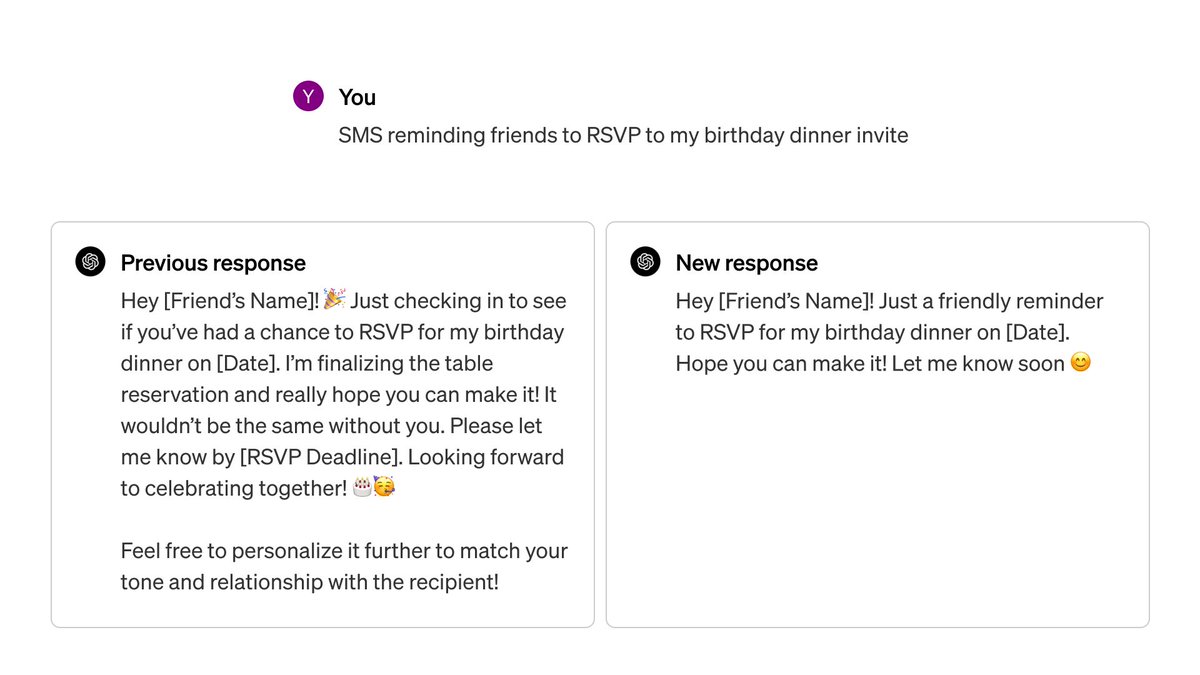

2/4

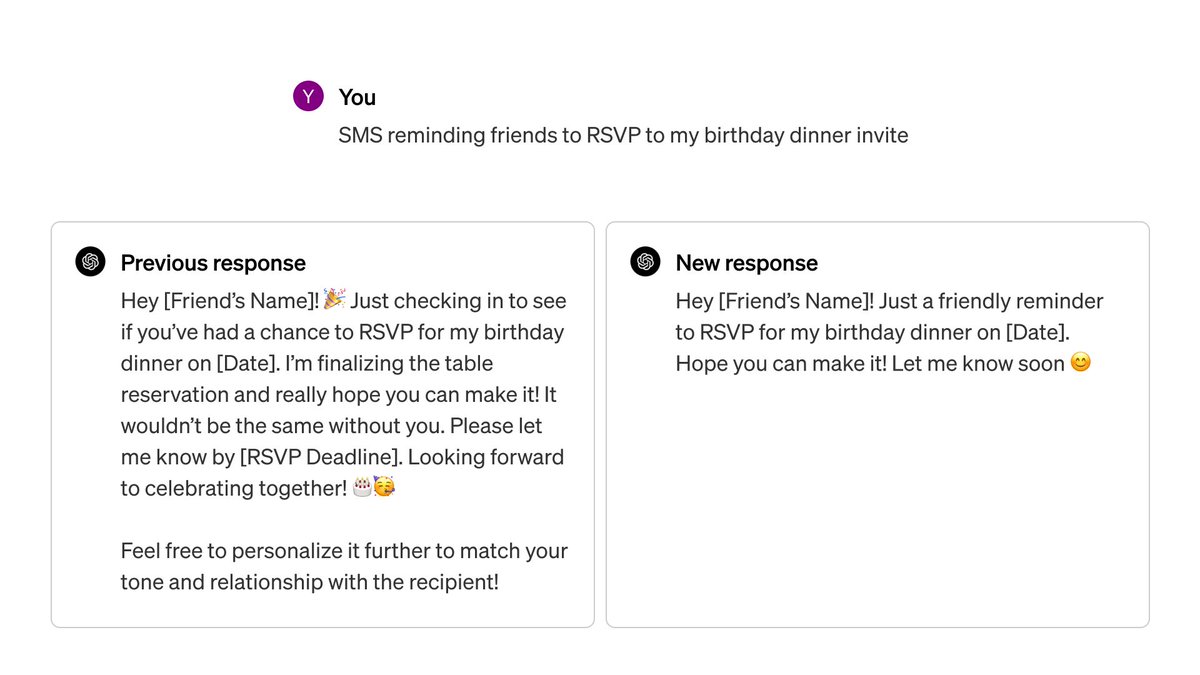

For example, when writing with ChatGPT, responses will be more direct, less verbose, and use more conversational language.

3/4

We continue to invest in making our models better and look forward to seeing what you do. If you haven’t tried it yet, GPT-4 Turbo is available in ChatGPT Plus, Team, Enterprise, and the API.

4/4

UPDATE: the MMLU points weren’t clear on the previous graph. Here’s an updated one.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

OpenAI upgrades GPT-4 Turbo for paid ChatGPT users, "improved writing, math, coding"

OpenAI has announced an update to its GPT-4 Turbo language model, targeted towards paid users of its ChatGPT platform. This new iteration of GPT-4 Turbo shows improvements in several areas, including writing, mathematical capabilities, logical reasoning, and code generation. One of the most...

mspoweruser.com

1/3

Amazing. Perfect.

2/3

This thing is such a pushover

3/3

It’s unclear what “knowledge cutoff” is supposed to even mean

1/3

can confirm, GPT-4 April Update is more fun, or maybe I just got lucky

doesn't throw around any disclaimers

2/3

ah, didn't mean to QT ...

3/3

don't we all

I wonder if 4.0 will ever be for free users.

When GPT-5, 6 or 7 comes out. They're making 4-turbo available to subscribers because Claude Opus is on par or better in some cases. The competition is heating up; a lot of models are already surpassing ChatGPT 3.5. 4 will likely become the new 3.5 in late 2024 or sometime in 2025.

context.ai

ChatGPT is coming to Nothing’s earbuds

You’ll be able to quickly interact with the AI tool through voice.

The popular AI tool will soon be just a pinch away on Nothing’s earbuds — so long as you’re also using a Nothing phone, that is.

By Chris Welch, a reviewer specializing in personal audio and home theater. Since 2011, he has published nearly 6,000 articles, from breaking news and reviews to useful how-tos.

Apr 18, 2024, 6:45 AM EDT

Photo by Chris Welch / The Verge

Nothing has announced that it plans to more deeply integrate ChatGPT with its smartphones and earbuds. The move will give the company’s customers quicker access to the service. “Through the new integration, users with the latest Nothing OS and ChatGPT installed on their Nothing phones will be able to pinch-to-speak to the most popular consumer AI tool in the world directly from Nothing earbuds,” the company wrote in a blog post. And yes, the new Nothing Ear and Ear (a) are both supported.

Spokesperson Jane Nho told me by email that “gradual rollout of the integration will commence on April 18th with Phone 2 followed by Phone 1 and Phone 2A in the coming weeks.” Once the update lands, you’ll be able to query ChatGPT using the company’s earbuds. That makes it sound like you’ll be able to select ChatGPT as your preferred digital assistant for Nothing’s buds. How smoothly will this work in practice? We’ll soon find out.

An update with deeper ChatGPT integration is rolling out to the Nothing Phone 2 starting today. Image: Nothing

Either way, the company’s plans don’t stop there. The blog post says “Nothing will also improve the Nothing smartphone user experience in Nothing OS by embedding system-level entry points to ChatGPT, including screenshot sharing and Nothing-styled widgets.”

The news comes after a new category of AI gadgets got off to a rocky start with the Humane AI Pin; the $199 Rabbit R1 will soon follow that up when it’s released in the next few weeks.

But Nothing’s ChatGPT strategy revolves around its existing devices; Carl Pei’s startup isn’t trying to reinvent the wheel with an additional piece of hardware — not yet, anyway. And it’s not forcing this integration down anyone’s throat. If you don’t have ChatGPT on your phone, your experience won’t be changing any.