You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

The A.I Megathread (LLM , GPT , Development)

More options

Who Replied?Preparing for the era of 32K context: Early learnings and explorations

Jul 28Written By Together

Today, we’re releasing LLaMA-2-7B-32K, a 32K context model built using Position Interpolation and Together AI’s data recipe and system optimizations, including FlashAttention-2. Fine-tune the model for targeted, long-context tasks—such as multi-document understanding, summarization, and QA—and run inference and fine-tune on 32K context with up to 3x speedup.

LLaMA-2-7B-32K making completions of a book in the Together Playground. Try it yourself at api.together.ai.

In the last few months, we have witnessed the rapid progress of the open-source ecosystem for LLMs — from the original LLaMA model that triggered the “LLaMA moment”, to efforts such as RedPajama, MPT, Falcon, and the recent LLaMA-2 release, open-source models have been catching up with closed-source models. We believe the upcoming opportunity for open-source models is to extend the context length of open models to the regime of 32K-128K, matching that of state-of-the-art closed-source models. We have already seen some exciting efforts here such as MPT-7B-8K and LLongMA-2 (8K).

Today, we’re sharing with the community some recent learnings and explorations at Together AI in the direction of building long-context models with high quality and efficiency. Specifically:

- LLaMA-2-7B-32K: We extend LLaMA-2-7B to 32K long context, using Meta’s recipe of interpolation and continued pre-training. We share our current data recipe, consisting of a mixture of long context pre-training and instruction tuning data.

- Examples of building your own long-context models: We share two examples of how to fine-tune LLaMA-2-7B-32K to build specific applications, including book summarization and long-context question answering.

- Software support: We updated both the inference and training stack to allow for efficient inference and fine-tuning with 32K context, using the recently released FlashAttention-2 and a range of other optimizations. This allows one to create their own 32K context model and conduct inference efficiently.

- Try it yourself:

- Go to Together API and run LLaMA-2-7B-32K for inference.

- Use OpenChatKit to fine-tune a 32K model over LLaMA-2-7B-32K for your own long context applications.

- Go to HuggingFace and try out LLaMA-2-7B-32K.

Extending LLaMA-2 to 32K context

LLaMA-2 has a context length of 4K tokens. To extend it to 32K context, three things need to come together: modeling, data, and system optimizations.On the modeling side, we follow Meta’s recent paper and use linear interpolation to extend the context length. This provides a powerful way to extend the context length for models with rotary positional embeddings. We take the LLaMA-2 checkpoint, and continue pre-training/fine-tuning it with linear interpolation for 1.5B tokens.

But this alone is not enough. What data should we use in improving the base model? Instead of simply fine-tuning using generic language datasets such as Pile and RedPajama as in Meta’s recent recipe, we realize that there are two important factors here and we have to be careful about both. First, we need generic long-context language data for the model to learn how to handle the interpolated positional embeddings; and second, we need instruction data to encourage the models to actually take advantagement of the information in the long context. Having both seems to be the key.

Our current data recipe consists of the following mixture of data:

- In the first phase of continued pre-training, our data mixture contains 25% RedPajama Book, 25% RedPajama ArXiv (including abstracts), 25% other data from RedPajama, and 25% from the UL2 Oscar Data, which is a part of OIG (Open-Instruction-Generalist), asking the model to fill in missing chunks, or complete the text. To enhance the long-context capabilities, we exclude sequences shorter than 2K tokens. The UL2 Oscar Data encourages the model to model long-range dependencies.

- We then fine-tune the model to focus on its few shot capacity with long contexts, including 20% Natural Instructions (NI), 20% Public Pool of Prompts (P3), 20% the Pile. To mitigate forgetting, we further incorporate 20% RedPajama Book and 20% RedPajama ArXiv with abstracts. We decontaminated all data against HELM core scenarios (see a precise protocol here). We teach the model to leverage the in-context examples by packing as many examples as possible into one 32K-token sequence.

| Model | 2K | 4K | 8K | 16K | 32K |

|---|---|---|---|---|---|

| LLaMA-2 | 1.759 | 1.747 | N/A | N/A | N/A |

| LLaMA-2-7B-32K | 1.768 | 1.758 | 1.750 | 1.746 | 1.742 |

| LLaMA-2-7B | LLaMA-2-7B-32K | |

|---|---|---|

| AVG | 0.489 | 0.522 |

| MMLU - EM | 0.435 | 0.435 |

| BoolQ - EM | 0.746 | 0.784 |

| NarrativeQA - F1 | 0.483 | 0.548 |

| NaturalQuestions (closed-book) - F1 | 0.322 | 0.299 |

| NaturalQuestions (open-book) - F1 | 0.622 | 0.692 |

| QuAC - F1 | 0.355 | 0.343 |

| HellaSwag - EM | 0.759 | 0.748 |

| OpenbookQA - EM | 0.570 | 0.533 |

| TruthfulQA - EM | 0.29 | 0.294 |

| MS MARCO (regular) - RR@10 | 0.25 | 0.419 |

| MS MARCO (TREC) - NDCG@10 | 0.469 | 0.71 |

| CNN/DailyMail - ROUGE-2 | 0.155 | 0.151 |

| XSUM - ROUGE-2 | 0.144 | 0.129 |

| IMDB - EM | 0.951 | 0.965 |

| CivilComments - EM | 0.577 | 0.601 |

| RAFT - EM | 0.684 | 0.699 |

togethercomputer/LLaMA-2-7B-32K · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

huggingface.co

Open LLM Leaderboard - a Hugging Face Space by HuggingFaceH4

Discover amazing ML apps made by the community

huggingface.co

RT-2: New model translates vision and language into action

Introducing Robotic Transformer 2 (RT-2), a novel vision-language-action (VLA) model that learns from both web and robotics data, and translates this knowledge into generalised instructions for robot…

RT-2: New model translates vision and language into action

July 28, 2023

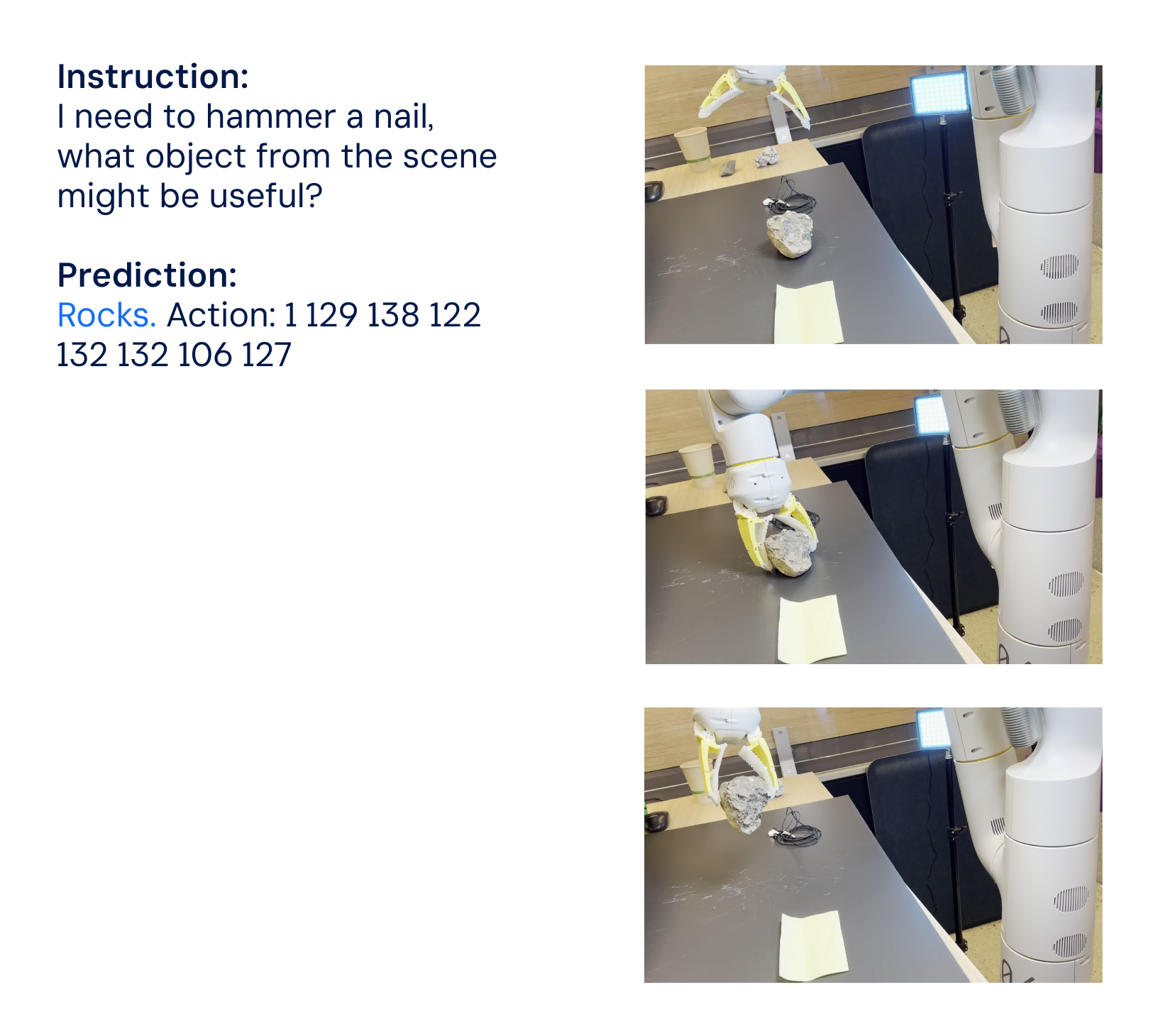

Robotic Transformer 2 (RT-2) is a novel vision-language-action (VLA) model that learns from both web and robotics data, and translates this knowledge into generalised instructions for robotic control.

High-capacity vision-language models (VLMs) are trained on web-scale datasets, making these systems remarkably good at recognising visual or language patterns and operating across different languages. But for robots to achieve a similar level of competency, they would need to collect robot data, first-hand, across every object, environment, task, and situation.

In our paper, we introduce Robotic Transformer 2 (RT-2), a novel vision-language-action (VLA) model that learns from both web and robotics data, and translates this knowledge into generalised instructions for robotic control, while retaining web-scale capabilities.

A visual-language model (VLM) pre-trained on web-scale data is learning from RT-1 robotics data to become RT-2, a visual-language-action (VLA) model that can control a robot.

This work builds upon Robotic Transformer 1 (RT-1), a model trained on multi-task demonstrations, which can learn combinations of tasks and objects seen in the robotic data. More specifically, our work used RT-1 robot demonstration data that was collected with 13 robots over 17 months in an office kitchen environment.

RT-2 shows improved generalisation capabilities and semantic and visual understanding beyond the robotic data it was exposed to. This includes interpreting new commands and responding to user commands by performing rudimentary reasoning, such as reasoning about object categories or high-level descriptions.

We also show that incorporating chain-of-thought reasoning allows RT-2 to perform multi-stage semantic reasoning, like deciding which object could be used as an improvised hammer (a rock), or which type of drink is best for a tired person (an energy drink).

Adapting VLMs for robotic control

RT-2 builds upon VLMs that take one or more images as input, and produces a sequence of tokens that, conventionally, represent natural language text. Such VLMs have been successfully trained on web-scale data to perform tasks, like visual question answering, image captioning, or object recognition. In our work, we adapt Pathways Language and Image model (PaLI-X) and Pathways Language model Embodied (PaLM-E) to act as the backbones of RT-2.

To control a robot, it must be trained to output actions. We address this challenge by representing actions as tokens in the model’s output – similar to language tokens – and describe actions as strings that can be processed by standard natural language tokenizers, shown here:

Representation of an action string used in RT-2 training. An example of such a string could be a sequence of robot action token numbers, e.g.“1 128 91 241 5 101 127 217”.

The string starts with a flag that indicates whether to continue or terminate the current episode, without executing the subsequent commands, and follows with the commands to change position and rotation of the end-effector, as well as the desired extension of the robot gripper.

We use the same discretised version of robot actions as in RT-1, and show that converting it to a string representation makes it possible to train VLM models on robotic data – as the input and output spaces of such models don’t need to be changed.

RT-2 architecture and training: We co-fine-tune a pre-trained VLM model on robotics and web data. The resulting model takes in robot camera images and directly predicts actions for a robot to perform.

Generalisation and emergent skills

We performed a series of qualitative and quantitative experiments on our RT-2 models, on over 6,000 robotic trials. Exploring RT-2’s emergent capabilities, we first searched for tasks that would require combining knowledge from web-scale data and the robot’s experience, and then defined three categories of skills: symbol understanding, reasoning, and human recognition.

Each task required understanding visual-semantic concepts and the ability to perform robotic control to operate on these concepts. Commands such as “pick up the bag about to fall off the table” or “move banana to the sum of two plus one” – where the robot is asked to perform a manipulation task on objects or scenarios never seen in the robotic data – required knowledge translated from web-based data to operate.

Examples of emergent robotic skills that are not present in the robotics data and require knowledge transfer from web pre-training.

Across all categories, we observed increased generalisation performance (more than 3x improvement) compared to previous baselines, such as previous RT-1 models and models like Visual Cortex (VC-1), which were pre-trained on large visual datasets.

Success rates of emergent skill evaluations: our RT-2 models outperform both previous robotics transformer (RT-1) and visual pre-training (VC-1) baselines.

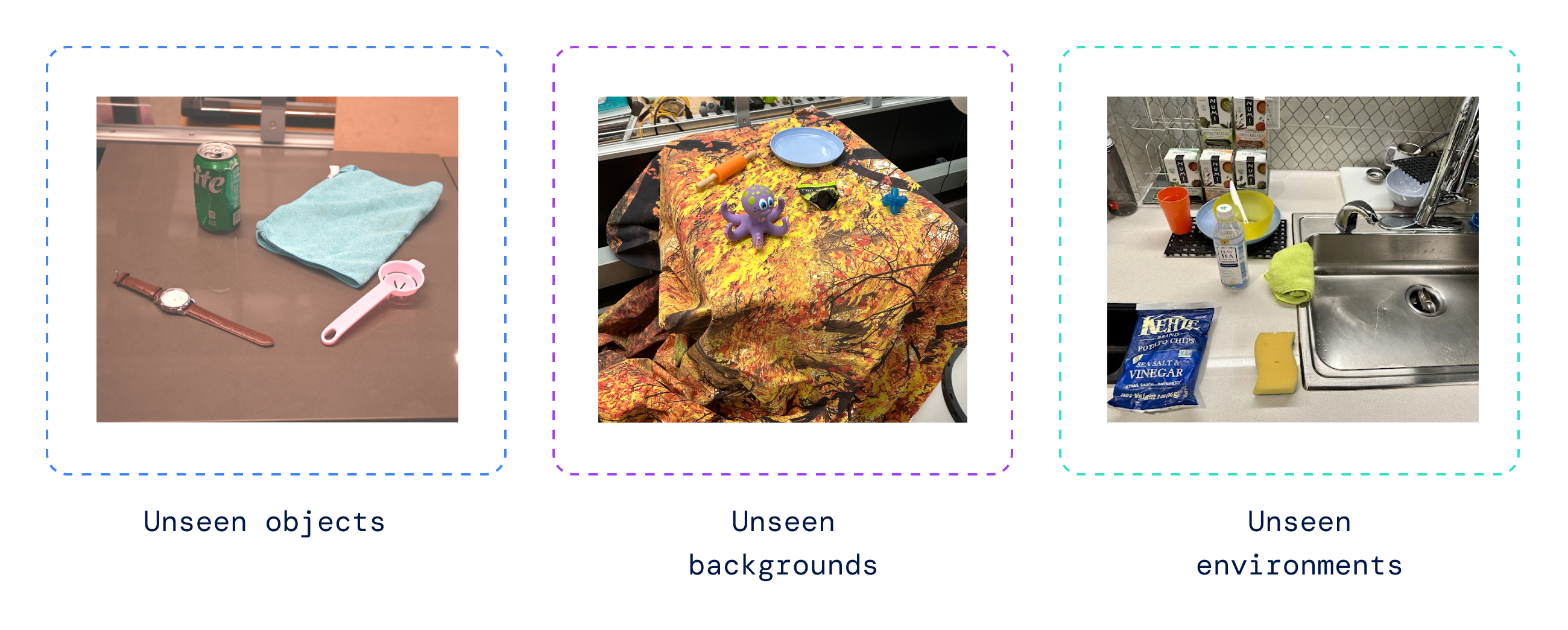

We also performed a series of quantitative evaluations, beginning with the original RT-1 tasks, for which we have examples in the robot data, and continued with varying degrees of previously unseen objects, backgrounds, and environments by the robot that required the robot to learn generalisation from VLM pre-training.

Examples of previously unseen environments by the robot, where RT-2 generalises to novel situations.

RT-2 retained the performance on the original tasks seen in robot data and improved performance on previously unseen scenarios by the robot, from RT-1’s 32% to 62%, showing the considerable benefit of the large-scale pre-training.

Additionally, we observed significant improvements over baselines pre-trained on visual-only tasks, such as VC-1 and Reusable Representations for Robotic Manipulation (R3M), and algorithms that use VLMs for object identification, such as Manipulation of Open-World Objects (MOO).

RT-2 achieves high performance on seen in-distribution tasks and outperforms multiple baselines on out-of-distribution unseen tasks.

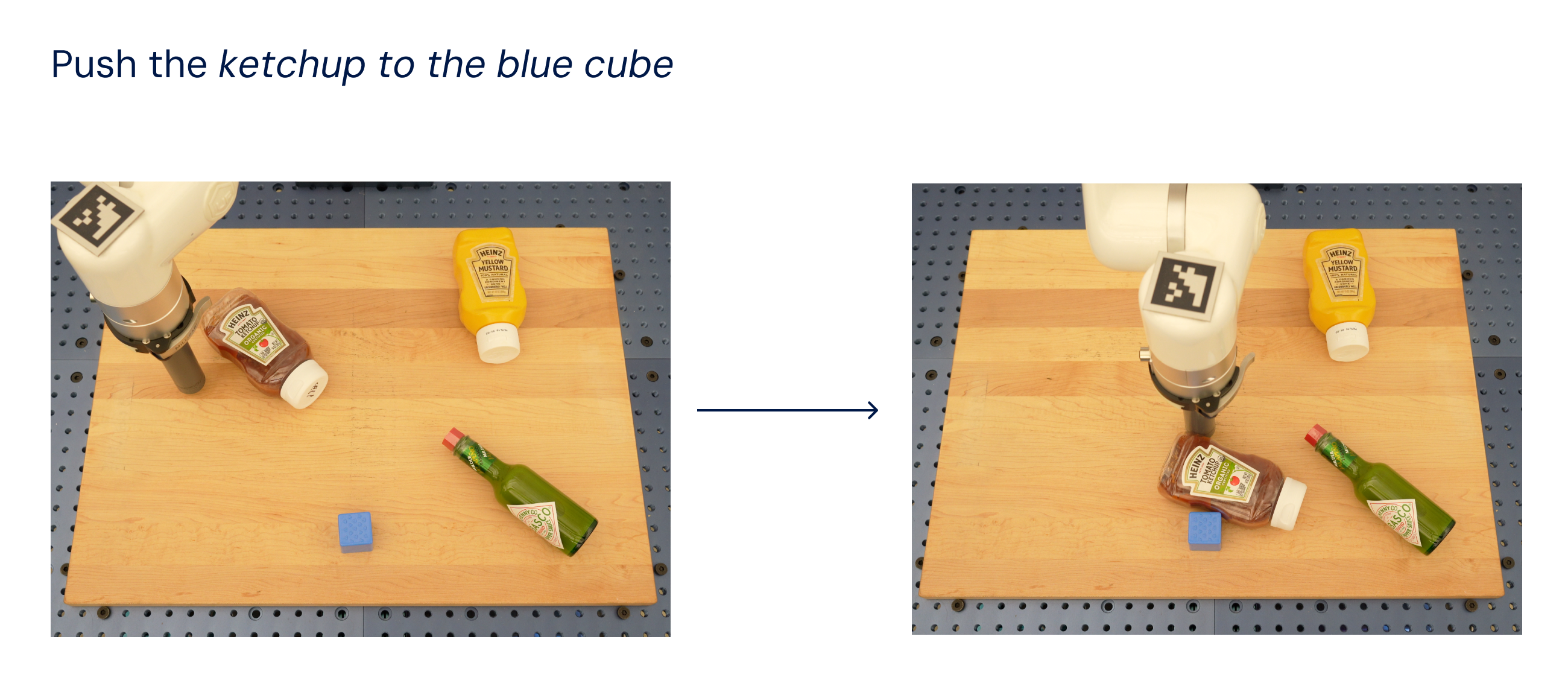

Evaluating our model on the open-source Language Table suite of robotic tasks, we achieved a success rate of 90% in simulation, substantially improving over the previous baselines including BC-Z (72%), RT-1 (74%), and LAVA (77%).

Then we evaluated the same model in the real world (since it was trained on simulation and real data), and demonstrated its ability to generalise to novel objects, as shown below, where none of the objects except the blue cube were present in the training dataset.

RT-2 performs well on real robot Language Table tasks. None of the objects except the blue cube were present in the training data.

Inspired by chain-of-thought prompting methods used in LLMs, we probed our models to combine robotic control with chain-of-thought reasoning to enable learning long-horizon planning and low-level skills within a single model.

In particular, we fine-tuned a variant of RT-2 for just a few hundred gradient steps to increase its ability to use language and actions jointly. Then we augmented the data to include an additional “Plan” step, first describing the purpose of the action that the robot is about to take in natural language, followed by “Action” and the action tokens. Here we show an example of such reasoning and the robot’s resulting behaviour:

Chain-of-thought reasoning enables learning a self-contained model that can both plan long-horizon skill sequences and predict robot actions.

With this process, RT-2 can perform more involved commands that require reasoning about intermediate steps needed to accomplish a user instruction. Thanks to its VLM backbone, RT-2 can also plan from both image and text commands, enabling visually grounded planning, whereas current plan-and-act approaches like SayCan cannot see the real world and rely entirely on language.

Advancing robotic control

RT-2 shows that vision-language models (VLMs) can be transformed into powerful vision-language-action (VLA) models, which can directly control a robot by combining VLM pre-training with robotic data.

With two instantiations of VLAs based on PaLM-E and PaLI-X, RT-2 results in highly-improved robotic policies, and, more importantly, leads to significantly better generalisation performance and emergent capabilities, inherited from web-scale vision-language pre-training.

RT-2 is not only a simple and effective modification over existing VLM models, but also shows the promise of building a general-purpose physical robot that can reason, problem solve, and interpret information for performing a diverse range of tasks in the real-world.

GitHub - khoj-ai/khoj: An AI personal assistant for your digital brain

An AI personal assistant for your digital brain. Contribute to khoj-ai/khoj development by creating an account on GitHub.

Khoj is a desktop application to search and chat with your notes, documents and images.

It is an offline-first, open source AI personal assistant accessible from your Emacs, Obsidian or Web browser.

It works with jpeg, markdown, notion, org-mode, pdf files and github repositories.

It is an offline-first, open source AI personal assistant accessible from your Emacs, Obsidian or Web browser.

It works with jpeg, markdown, notion, org-mode, pdf files and github repositories.

Black Artists Say A.I. Shows Bias, With Algorithms Erasing Their History

Tech companies acknowledge machine-learning algorithms can perpetuate discrimination and need improvement.

Black Artists Say A.I. Shows Bias, With Algorithms Erasing Their History

Tech companies acknowledge machine-learning algorithms can perpetuate discrimination and need improvement.

Stephanie Dinkins at work in her Brooklyn studio. For the past seven years, she has experimented with A.I.’s ability to realistically depict Black women smiling and crying. Credit...Flo Ngala for The New York Times

By Zachary Small

July 4, 2023

The artist Stephanie Dinkins has long been a pioneer in combining art and technology in her Brooklyn-based practice. In May she was awarded $100,000 by the Guggenheim Museum for her groundbreaking innovations, including an ongoing series of interviews with Bina48, a humanoid robot.

For the past seven years, she has experimented with A.I.’s ability to realistically depict Black women, smiling and crying, using a variety of word prompts. The first results were lackluster if not alarming: Her algorithm produced a pink-shaded humanoid shrouded by a black cloak.

“I expected something with a little more semblance of Black womanhood,” she said. And although the technology has improved since her first experiments, Dinkins found herself using runaround terms in the text prompts to help the A.I. image generators achieve her desired image, “to give the machine a chance to give me what I wanted.” But whether she uses the term “African American woman” or “Black woman,” machine distortions that mangle facial features and hair textures occur at high rates.

“Improvements obscure some of the deeper questions we should be asking about discrimination,” Dinkins said. The artist, who is Black, added, “The biases are embedded deep in these systems, so it becomes ingrained and automatic. If I’m working within a system that uses algorithmic ecosystems, then I want that system to know who Black people are in nuanced ways, so that we can feel better supported.”

She is not alone in asking tough questions about the troubling relationship between A.I. and race. Many Black artists are finding evidence of racial bias in artificial intelligence, both in the large data sets that teach machines how to generate images and in the underlying programs that run the algorithms. In some cases, A.I. technologies seem to ignore or distort artists’ text prompts, affecting how Black people are depicted in images, and in others, they seem to stereotype or censor Black history and culture.

Discussion of racial bias within artificial intelligence has surged in recent years, with studies showing that facial recognition technologies and digital assistants have trouble identifying the images and speech patterns of nonwhite people. The studies raised broader questions of fairness and bias.

Major companies behind A.I. image generators — including OpenAI, Stability AI and Midjourney — have pledged to improve their tools. “Bias is an important, industrywide problem,” Alex Beck, a spokeswoman for OpenAI, said in an email interview, adding that the company is continuously trying “to improve performance, reduce bias and mitigate harmful outputs.” She declined to say how many employees were working on racial bias, or how much money the company had allocated toward the problem.

An example of the distortion Dinkins found using a prompt of “A Black woman crying”’ in 2016 using the platform Runway ML.Credit...via Stephanie Dinkins

Some of the distortions from images of “Black woman smiling” in 2020.Credit...via Stephanie Dinkins

“Black people are accustomed to being unseen,” the Senegalese artist Linda Dounia Rebeiz wrote in an introduction to her exhibition “In/Visible,” for Feral File, an NFT marketplace. “When we are seen, we are accustomed to being misrepresented.”

To prove her point during an interview with a reporter, Rebeiz, 28, asked OpenAI’s image generator, DALL-E 2, to imagine buildings in her hometown, Dakar. The algorithm produced arid desert landscapes and ruined buildings that Rebeiz said were nothing like the coastal homes in the Senegalese capital.

“It’s demoralizing,” Rebeiz said. “The algorithm skews toward a cultural image of Africa that the West has created. It defaults to the worst stereotypes that already exist on the internet.”

Last year, OpenAI said it was establishing new techniques to diversify the images produced by DALL-E 2, so that the tool “generates images of people that more accurately reflect the diversity of the world’s population.”

An artist featured in Rebeiz’s exhibition, Minne Atairu is a Ph.D. candidate at Columbia University’s Teachers College who planned to use image generators with young students of color in the South Bronx. But she now worries “that might cause students to generate offensive images,” Atairu explained.

Minne Atairu, an artist and educator, at the Armory in 2022 with works based on a data set of Black models found in vintage Black magazines.Credit...via Minne Atairu

Included in the Feral File exhibition are images from her “Blonde Braids Studies,” which explore the limitations of Midjourney’s algorithm to produce images of Black women with natural blond hair. When the artist asked for an image of Black identical twins with blond hair, the program instead produced a sibling with lighter skin.

“That tells us where the algorithm is pooling images from,” Atairu said. “It’s not necessarily pulling from a corpus of Black people, but one geared toward white people.”

She said she worried that young Black children might attempt to generate images of themselves and see children whose skin was lightened. Atairu recalled some of her earlier experiments with Midjourney before recent updates improved its abilities. “It would generate images that were like blackface,” she said. “You would see a nose, but it wasn’t a human’s nose. It looked like a dog’s nose.”

In response to a request for comment, David Holz, Midjourney’s founder, said in an email, “If someone finds an issue with our systems, we ask them to please send us specific examples so we can investigate.”

Stability AI, which provides image generator services, said it planned on collaborating with the A.I. industry to improve bias evaluation techniques with a greater diversity of countries and cultures. Bias, the A.I. company said, is caused by “overrepresentation” in its general data sets, though it did not specify if overrepresentation of white people was the issue here.

{continued}

Stability AI, which provides image generator services, said it planned on collaborating with the A.I. industry to improve bias evaluation techniques with a greater diversity of countries and cultures. Bias, the A.I. company said, is caused by “overrepresentation” in its general data sets, though it did not specify if overrepresentation of white people was the issue here.

Minne Atairu’s “Blonde Braids Study IV,” explores the limitations of Midjourney’s algorithm to produce images of Black women with blond hair. One experiment produced a twin with lighter skin instead. “It’s not necessarily pulling from a corpus of Black people, but one geared toward white people.”Credit...via Minne Atairu

Earlier this month, Bloomberg analyzed more than 5,000 images generated by Stability AI, and found that its program amplified stereotypes about race and gender, typically depicting people with lighter skin tones as holding high-paying jobs while subjects with darker skin tones were labeled “dishwasher” and “housekeeper.”

These problems have not stopped a frenzy of investments in the tech industry. A recent rosy report by the consulting firm McKinsey predicted that generative A.I. would add $4.4 trillion to the global economy annually. Last year, nearly 3,200 start-ups received $52.1 billion in funding, according to the GlobalData Deals Database.

Technology companies have struggled against charges of bias in portrayals of dark skin from the early days of color photography in the 1950s, when companies like Kodak used white models in their color development. Eight years ago, Google disabled its A.I. program’s ability to let people search for gorillas and monkeys through its Photos app because the algorithm was incorrectly sorting Black people into those categories. As recently as May of this year, the issue still had not been fixed. Two former employees who worked on the technology told The New York Times that Google had not trained the A.I. system with enough images of Black people.

This reporter’s experiments with DALL-E 2 and the prompt “Black woman smiling” produced facial distortions.

Example of facial distortion on DALL-E 2 program to the prompt “Black woman smiling,” including a five o’clock shadow.

Other experts who study artificial intelligence said that bias goes deeper than data sets, referring to the early development of this technology in the 1960s.

“The issue is more complicated than data bias,” said James E. Dobson, a cultural historian at Dartmouth College and the author of a recent book on the birth of computer vision. There was very little discussion about race during the early days of machine learning, according to his research, and most scientists working on the technology were white men.

“It’s hard to separate today’s algorithms from that history, because engineers are building on those prior versions,” Dobson said.

To decrease the appearance of racial bias and hateful images, some companies have banned certain words from text prompts that users submit to generators, like “slave” and “fascist.”

But Dobson said that companies hoping for a simple solution, like censoring the kind of prompts that users can submit, were avoiding the more fundamental issues of bias in the underlying technology.

“It’s a worrying time as these algorithms become more complicated. And when you see garbage coming out, you have to wonder what kind of garbage process is still sitting there inside the model,” the professor added.

Auriea Harvey, an artist included in the Whitney Museum’s recent exhibition “Refiguring,” about digital identities, bumped into these bans for a recent project using Midjourney. “I wanted to question the database on what it knew about slave ships,” she said. “I received a message saying that Midjourney would suspend my account if I continued.”

Stephanie Dinkins, inaugural recipient of the LG Guggenheim Award for technology-based art, in her studio in Brooklyn. She says she is not giving up on technology despite problems.Credit...Flo Ngala for The New York Times

Dinkins ran into similar problems with NFTs that she created and sold showing how okra was brought to North America by enslaved people and settlers. She was censored when she tried to use a generative program, Replicate, to make pictures of slave ships. She eventually learned to outwit the censors by using the term “pirate ship.” The image she received was an approximation of what she wanted, but it also raised troubling questions for the artist.

“What is this technology doing to history?” Dinkins asked. “You can see that someone is trying to correct for bias, yet at the same time that erases a piece of history. I find those erasures as dangerous as any bias, because we are just going to forget how we got here.”

Naomi Beckwith, chief curator at the Guggenheim Museum, credited Dinkins’s nuanced approach to issues of representation and technology as one reason the artist received the museum’s first Art & Technology award.

“Stephanie has become part of a tradition of artists and cultural workers that poke holes in these overarching and totalizing theories about how things work,” Beckwith said. The curator added that her own initial paranoia about A.I. programs replacing human creativity was greatly reduced when she realized these algorithms knew virtually nothing about Black culture.

But Dinkins is not quite ready to give up on the technology. She continues to employ it for her artistic projects — with skepticism. “Once the system can generate a really high-fidelity image of a Black woman crying or smiling, can we rest?”

Stability AI, which provides image generator services, said it planned on collaborating with the A.I. industry to improve bias evaluation techniques with a greater diversity of countries and cultures. Bias, the A.I. company said, is caused by “overrepresentation” in its general data sets, though it did not specify if overrepresentation of white people was the issue here.

Minne Atairu’s “Blonde Braids Study IV,” explores the limitations of Midjourney’s algorithm to produce images of Black women with blond hair. One experiment produced a twin with lighter skin instead. “It’s not necessarily pulling from a corpus of Black people, but one geared toward white people.”Credit...via Minne Atairu

Earlier this month, Bloomberg analyzed more than 5,000 images generated by Stability AI, and found that its program amplified stereotypes about race and gender, typically depicting people with lighter skin tones as holding high-paying jobs while subjects with darker skin tones were labeled “dishwasher” and “housekeeper.”

These problems have not stopped a frenzy of investments in the tech industry. A recent rosy report by the consulting firm McKinsey predicted that generative A.I. would add $4.4 trillion to the global economy annually. Last year, nearly 3,200 start-ups received $52.1 billion in funding, according to the GlobalData Deals Database.

Technology companies have struggled against charges of bias in portrayals of dark skin from the early days of color photography in the 1950s, when companies like Kodak used white models in their color development. Eight years ago, Google disabled its A.I. program’s ability to let people search for gorillas and monkeys through its Photos app because the algorithm was incorrectly sorting Black people into those categories. As recently as May of this year, the issue still had not been fixed. Two former employees who worked on the technology told The New York Times that Google had not trained the A.I. system with enough images of Black people.

This reporter’s experiments with DALL-E 2 and the prompt “Black woman smiling” produced facial distortions.

Example of facial distortion on DALL-E 2 program to the prompt “Black woman smiling,” including a five o’clock shadow.

Other experts who study artificial intelligence said that bias goes deeper than data sets, referring to the early development of this technology in the 1960s.

“The issue is more complicated than data bias,” said James E. Dobson, a cultural historian at Dartmouth College and the author of a recent book on the birth of computer vision. There was very little discussion about race during the early days of machine learning, according to his research, and most scientists working on the technology were white men.

“It’s hard to separate today’s algorithms from that history, because engineers are building on those prior versions,” Dobson said.

To decrease the appearance of racial bias and hateful images, some companies have banned certain words from text prompts that users submit to generators, like “slave” and “fascist.”

But Dobson said that companies hoping for a simple solution, like censoring the kind of prompts that users can submit, were avoiding the more fundamental issues of bias in the underlying technology.

“It’s a worrying time as these algorithms become more complicated. And when you see garbage coming out, you have to wonder what kind of garbage process is still sitting there inside the model,” the professor added.

Auriea Harvey, an artist included in the Whitney Museum’s recent exhibition “Refiguring,” about digital identities, bumped into these bans for a recent project using Midjourney. “I wanted to question the database on what it knew about slave ships,” she said. “I received a message saying that Midjourney would suspend my account if I continued.”

Stephanie Dinkins, inaugural recipient of the LG Guggenheim Award for technology-based art, in her studio in Brooklyn. She says she is not giving up on technology despite problems.Credit...Flo Ngala for The New York Times

Dinkins ran into similar problems with NFTs that she created and sold showing how okra was brought to North America by enslaved people and settlers. She was censored when she tried to use a generative program, Replicate, to make pictures of slave ships. She eventually learned to outwit the censors by using the term “pirate ship.” The image she received was an approximation of what she wanted, but it also raised troubling questions for the artist.

“What is this technology doing to history?” Dinkins asked. “You can see that someone is trying to correct for bias, yet at the same time that erases a piece of history. I find those erasures as dangerous as any bias, because we are just going to forget how we got here.”

Naomi Beckwith, chief curator at the Guggenheim Museum, credited Dinkins’s nuanced approach to issues of representation and technology as one reason the artist received the museum’s first Art & Technology award.

“Stephanie has become part of a tradition of artists and cultural workers that poke holes in these overarching and totalizing theories about how things work,” Beckwith said. The curator added that her own initial paranoia about A.I. programs replacing human creativity was greatly reduced when she realized these algorithms knew virtually nothing about Black culture.

But Dinkins is not quite ready to give up on the technology. She continues to employ it for her artistic projects — with skepticism. “Once the system can generate a really high-fidelity image of a Black woman crying or smiling, can we rest?”

The Novice's LLM Training Guide

Written by Alpin Inspired by /hdg/'s LoRA train rentry The Table of Content on rentry is terrible, so I'll be linking to the different main sections here: Training from Scratch Native Fine-Tuning LoRA QLoRA Training Hyperparameters Interpreting the Learning Curves The Basics Using LLMs The Transf...

rentry.org

The Novice's LLM Training Guide

Written by AlpinInspired by /hdg/'s LoRA train rentryThe Table of Content on rentry is terrible, so I'll be linking to the different main sections here:

- Training from Scratch

- Native Fine-Tuning

- LoRA

- QLoRA

- Training Hyperparameters

- Interpreting the Learning Curves

- The Basics

- Training Basics

- Training from Scratch

- Native Fine-tuning

- Low-Rank Adaptation (LoRA)

- QLoRA

- Training Hyperparameters

- Interpreting the Learning Curves

The Basics

A modern Large Language Model (LLM) is trained using the Transformers library, which leverages the power of the Transformer network architecture. This architecture has revolutionized the field of natural language processing and is widely adopted for training LLMs. Python, a high-level programming language, is commonly used for implementing LLMs, making them more accessible and easier to comprehend compared to lower-level frameworks such as OpenXLA's IREE or GGML. The intuitive nature of Python allows researchers and developers to focus on the logic and algorithms of the model without getting caught up in intricate implementation details.This rentry won't go over pre-training LLMs (training from scratch), but rather fine-tuning and low-rank adaptation (LoRA) methods. Pre-training is prohibitively expensive, and if you have the compute for it, you're likely smart enough not to need this rentry at all.

Using LLMs

There are quite a few guides for using LLMs either locally on your PC or using a cloud computing service (often for a fee). Transformers models hosted on HuggingFace are the easiest to run, and several UIs offer inference for them:The compute requirement for the model can be calculated by multiplying the amount of space your model occupies on your hard disk by a factor of 1.2. Source: Transformer Math 101

For instance, let's consider the LLaMA 7B model, which occupies approximately 13.5 GB of storage space. To estimate the required VRAM for inference, we can multiply this value by 1.2, resulting in approximately 16.2 GB. This additional 20% accounts for overhead and the memory necessary for context token processing. It's important to note that the overhead will vary significantly based on the model's context size. The estimation assumes a model with an n_ctx (context size) of 2048.

In traditional attention mechanisms, the memory usage for context scales quadratically. However, newer techniques such as Flash Attention allow for linear scaling, reducing the memory footprint.

Stable Diffusion LoRA Models: A Complete Guide (Best ones, installation, training) - AiTuts

Definitive guide to using and training LoRAs for Stable Diffusion. Learn how to use LoRAs, which LoRAs are popular, and how to create them.

Stable Diffusion LoRA Models: A Complete Guide (Best ones, installation, training)

byYubin

updated

31 Jul, 2023

7 Comments

LoRAs are smaller files (anywhere from 1MB ~ 200MB) that you combine with an existing Stable Diffusion checkpoint models to introduce new concepts to your generations.

These new concepts can be almost anything: fictional characters, real-life people, facial expressions, art styles, props like weapons, poses, objects and more.

Quick Facts:

- Finetuning Stable Diffusion, which has billions of parameters, can be slow and difficult. LoRA is a faster and more efficient way of finetuning models.

- LoRAs have taken off in popularity because their small size makes them easy to share and download.

- You can train your own LoRA with as little as 10 training images

- You must train your LoRA on top of a foundational model:

- Most realistic LoRAs today are trained on Stable Diffusion v1.5

- Most anime LoRAs today are trained on NAI Diffusion

- The new generation of LoRAs will be trained on SDXL

- AUTOMATIC1111 users can use LoRAs by downloading them, placing them in the folder stable-diffusion-webui/models/Lora and then adding the phrase <lora:LORA-FILENAME:WEIGHT> to your prompt, where LORA-FILENAME is the filename of the LoRA without the file extension, and WEIGHT (which takes a value from 0-1) is the strength of the LoRA

- Sometimes LoRAs have trigger words that you must use in the prompt in addition to the keyphrase above

- You can use as many LoRAs in the same prompt as you want

This is Part 4 of the Stable Diffusion for Beginners series:

Stable Diffusion for Beginners

Part 1: Beginner's Guide to Stable Diffusion

Part 2: Models

Part 3: Prompting

Part 4: LoRA Models

Part 5: Embeddings/Textual Inversions

Part 6: Inpainting

Part 7: Animation with Deforum

Greg Rutkowski Was Removed From Stable Diffusion, But AI Artists Brought Him Back - Decrypt

His style has been requested over 400,000 times, even surpassing legends like Picasso and Da Vinci—all without his consent.

prompt:

If I were an Al robot, prompt me to create this image in a single paragraph.

Last edited: