The A.I Megathread (LLM , GPT , Development)

tiiuae/falcon-7b · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

huggingface.co

Falcon-7B is a 7B parameters causal decoder-only model built by TII and trained on 1,500B tokens of RefinedWeb enhanced with curated corpora. It is made available under the TII Falcon LLM License.

Paper coming soon

Why use Falcon-7B?

- It outperforms comparable open-source models (e.g., MPT-7B, StableLM, RedPajama etc.), thanks to being trained on 1,500B tokens of RefinedWeb enhanced with curated corpora. See the OpenLLM Leaderboard.

- It features an architecture optimized for inference, with FlashAttention (Dao et al., 2022) and multiquery (Shazeer et al., 2019).

- It is made available under a license allowing commercial use, see the details of the TII Falcon LLM License below.
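A rough sketch of why multiquery attention helps at inference time: all query heads share a single key/value head, so the key/value cache kept per generated sequence shrinks by the number of heads. The layer count, head count, head size and sequence length below are illustrative assumptions, not figures from the model card, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor 2: each layer stores one key tensor and one value tensor.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed, illustrative model shape (not taken from the model card).
N_LAYERS, N_HEADS, HEAD_DIM, SEQ_LEN = 32, 64, 64, 2048

# Multi-head attention caches keys/values for every head.
multi_head = kv_cache_bytes(N_LAYERS, N_HEADS, HEAD_DIM, SEQ_LEN)
# Multiquery attention caches a single shared key/value head.
multi_query = kv_cache_bytes(N_LAYERS, 1, HEAD_DIM, SEQ_LEN)

print(f"multi-head:  {multi_head / 2**20:.0f} MiB")
print(f"multiquery:  {multi_query / 2**20:.0f} MiB")
```

With 16-bit values, the assumed shape gives a cache that is smaller by a factor equal to the head count; real savings depend on the actual architecture and batch size.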

tiiuae/falcon-40b · Hugging Face

Falcon-40B is a 40B parameters causal decoder-only model built by TII and trained on 1,000B tokens of RefinedWeb enhanced with curated corpora. It is made available under the TII Falcon LLM License.

Paper coming soon

Why use Falcon-40B?

- It is the best open-source model currently available. Falcon-40B outperforms LLaMA, StableLM, RedPajama, MPT, etc. See the OpenLLM Leaderboard.

- It features an architecture optimized for inference, with FlashAttention (Dao et al., 2022) and multiquery (Shazeer et al., 2019).

- It is made available under a license allowing commercial use, see the details of the TII Falcon LLM License below.
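For a quick comparison of the two model cards, the token and parameter counts they quote can be turned into a tokens-per-parameter ratio. This is only back-of-the-envelope arithmetic on the nominal, rounded sizes stated above; the dictionary layout and function name are hypothetical.

```python
# Nominal sizes as quoted in the two model cards (in billions).
MODELS = {
    "Falcon-7B": {"params_b": 7, "tokens_b": 1500},
    "Falcon-40B": {"params_b": 40, "tokens_b": 1000},
}


def tokens_per_parameter(params_b, tokens_b):
    """Training tokens seen per model parameter (both given in billions)."""
    return tokens_b / params_b


ratios = {name: tokens_per_parameter(**spec) for name, spec in MODELS.items()}
for name, ratio in ratios.items():
    print(f"{name}: about {ratio:.0f} training tokens per parameter")
```

By these figures the smaller model saw several times more data per parameter than the larger one, although it was still trained on fewer tokens per parameter than some would consider necessary to compare quality directly.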

Meet your new coding buddy!

Trained on 40k+ conversations to help you code, debug & more in over 80 languages

Creating a Coding Assistant with StarCoder

HuggingFaceH4/starchat-alpha · Hugging Face

StarChat Playground - a Hugging Face Space by HuggingFaceH4

Discover amazing ML apps made by the community

huggingface.co

Microsoft launches an AI tool to take the pain out of building websites | TechCrunch

Microsoft's new Copilot in Power Pages tool taps AI to help generate web page elements from text prompts.

Kyle Wiggers (@kyle_l_wiggers) / 11:00 AM EDT, May 23, 2023

Microsoft wants to take the pain out of designing web pages. AI is its solution.

Today marks the launch of Copilot in Power Pages in preview for U.S. customers, an AI-powered assistant for Microsoft’s low-code business website creation tool, Power Pages. Given prompts, Copilot can generate text, forms, chatbots and web page layouts as well as create and edit image and site design themes.

To create a form, for example, users can simply describe the kind of form that they need and Copilot will build it and auto-generate the necessary back-end database tables. Those tables can then be edited, added to or removed using natural language within Copilot.

“As the maker, you describe what you need in natural language and use Copilot suggestions to design web pages, create content and build complex business data forms for your website,” Sangya Singh, VP of Power Pages at Microsoft, told TechCrunch in an email interview. “You no longer need to start from a blank slate.”

Generating a website with AI isn’t exactly a novel idea — not in this day and age, at least. Tools like Jasper can handle copy, images, layouts and more, while generators like Mixo can create basic splash pages when given a short description.

But Singh paints Copilot in Power Pages as more versatile than the competing solutions out there, while stressing that it’s not a tool that could — or should — be used to generate whole spam sites.

“Power Pages now allows you to go from no code (describing the site via natural language) to low code (editing the website design and layouts using the design studio) to pro code (building advanced customization with familiar web frameworks) seamlessly,” she said. “For Power Pages, crafting Copilot experiences within Power Pages is revolutionary because enabling an AI assistant to build business data-centric sites using natural language has not been done before.”

Of course, depending on the domain and use case, adding generative AI to the mix can be a risky proposition. Even if it’s not the original intent, AI can be prompted to generate toxic content. And it can go off the rails if not closely monitored.

Singh claims that Copilot in Power Pages, though, which is powered by OpenAI’s GPT-3.5 model, has “guardrails” to protect against issues that might crop up.

“We take the website maker’s user prompts to the Copilot, get suggestions from the large language model, and do a lot of processing, like offensive content filtering, before displaying suggestions back to the maker,” Singh said. “If Copilot’s suggestions are irrelevant or inappropriate, makers can easily report the AI generated output via a thumbs-down gesture in our experience and provide additional feedback.”

What about the aforementioned chatbot, also powered by GPT-3.5, that Power Pages users can now insert into their websites? According to Singh, it’s similarly built with safeguards, including a whitelist of URLs that it’ll look through to get answers.
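The article does not say how the URL whitelist is implemented. As a minimal sketch of the general idea, assuming the safeguard is a simple host allow-list, a check could look like the following; the host names and the `is_allowed` function are hypothetical and are not Power Pages APIs.

```python
from urllib.parse import urlparse

# Hypothetical allow-list; a real site would configure its own hosts.
ALLOWED_HOSTS = {"docs.example.com", "support.example.com"}


def is_allowed(url, allowed_hosts=ALLOWED_HOSTS):
    """Return True only for http(s) URLs whose host is on the allow-list."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https"):
        return False
    # hostname is None for malformed URLs; compare the full host exactly so
    # look-alike hosts such as docs.example.com.evil.net are rejected.
    host = (parsed.hostname or "").lower()
    return host in allowed_hosts


print(is_allowed("https://docs.example.com/forms"))
print(is_allowed("https://docs.example.com.evil.net/"))
```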

“The key thing to note is that Power Pages Copilot is not an ‘automatic’ AI-pilot generating websites, but an ‘AI assistant’ to a human website maker — hence the name Copilot — where the maker can ask for suggestions on how to build different components of a business data-centric site,” she added. “Giving the makers ‘total control’ is a principle we have where the maker is always in control if they want to apply the Copilot suggestion or tweak it further or discard it.”

Rules

Chat with two anonymous models side-by-side and vote for which one is better!

You can do multiple rounds of conversations before voting.

The names of the models will be revealed after your vote. Conversations with identity keywords (e.g., ChatGPT, Bard, Vicuna) or any votes after the names are revealed will not count towards the leaderboard.
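The two exclusion rules above can be sketched as a small filter. This is an assumption about how such a rule might be applied, not the site's actual code; the keyword list only contains the three examples named in the rules, and `vote_counts` is a hypothetical name.

```python
# Only the example keywords named in the rules; the real list is not given.
IDENTITY_KEYWORDS = ("chatgpt", "bard", "vicuna")


def vote_counts(conversation_text, voted_after_reveal, keywords=IDENTITY_KEYWORDS):
    """Return True if a vote would count towards the leaderboard."""
    # Rule 1: votes cast after the model names are revealed never count.
    if voted_after_reveal:
        return False
    # Rule 2: conversations mentioning an identity keyword never count.
    lowered = conversation_text.lower()
    return not any(keyword in lowered for keyword in keywords)


print(vote_counts("Write a haiku about rain.", voted_after_reveal=False))
print(vote_counts("Are you ChatGPT?", voted_after_reveal=False))
```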

'Emergent Abilities': When AI LLMs Learn Stuff They Shouldn't Know -- Virtualization Review

Google CEO Sundar Pichai: 'Of the AI issues we talked about, the most mysterious is called emergent properties.'

- By David Ramel

- 04/21/2023

With advanced AI tech reshaping the world before our very eyes, it can be troubling to realize even the creators of these systems don't fully understand how they work.

One example of this is AI "hallucinations," where an AI system, such as a large language model (LLM) used in machine learning to back advanced systems like ChatGPT and GPT-4 from OpenAI, confidently responds to a query with false or even wholly made-up information. Nobody is exactly sure how or why they happen, though experts have proffered various explanations.

Another unexplained phenomenon that is increasingly being discussed and studied is called "emergent abilities" or "emergent properties," in which LLMs somehow learn things they shouldn't be able to know.

These capabilities were brought to the forefront in last Sunday's "60 Minutes" TV broadcast by CBS News, which interviewed Google AI leaders, as explained in the post "Is artificial intelligence advancing too quickly? What AI leaders at Google say."

Google engineers were surprised to discover that their LLM had taught itself a new language.

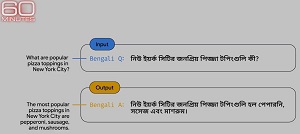

Learning Bengali (source: CBS News).

Google CEO Sundar Pichai explained that in the interview.

"Of the AI issues we talked about, the most mysterious is called emergent properties," Pichai said. "Some AI systems are teaching themselves skills that they weren't expected to have. How this happens is not well understood. For example, one Google AI program adapted, on its own, after it was prompted in the language of Bangladesh, which it was not trained to know."

Google exec James Manyika weighed in: "We discovered that with very few amounts of prompting in Bengali, it can now translate all of Bengali. So now, all of a sudden, we now have a research effort where we're now trying to get to a thousand languages."

And Pichai responded: "There is an aspect of this which we call -- all of us in the field call it as a 'black box.' You know, you don't fully understand. And you can't quite tell why it said this, or why it got wrong. We have some ideas, and our ability to understand this gets better over time. But that's where the state of the art is."

While the emergent phenomenon was brought to the mainstream public's eye in the broadcast reaching millions, it's not exactly new.

For example, it was examined back in August 2022 in the paper, "Emergent Abilities of Large Language Models."

The paper's abstract reads:

Scaling up language models has been shown to predictably improve performance and sample efficiency on a wide range of downstream tasks. This paper instead discusses an unpredictable phenomenon that we refer to as emergent abilities of large language models. We consider an ability to be emergent if it is not present in smaller models but is present in larger models. Thus, emergent abilities cannot be predicted simply by extrapolating the performance of smaller models. The existence of such emergence raises the question of whether additional scaling could potentially further expand the range of capabilities of language models.

A much more recent study examining emergent behavior, "A Survey of Large Language Models," was published on Sunday, April 16, coincidentally the same day as the "60 Minutes" broadcast.

"Despite the progress and impact, the underlying principles of LLMs are still not well explored," that paper said. "Firstly, it is mysterious why emergent abilities occur in LLMs, instead of smaller PLMs [pre-trained language models]. As a more general issue, there lacks a deep, detailed investigation of the key factors that contribute to the superior abilities of LLMs. It is important to study when and how LLMs obtain such abilities."

A site maintained by Jason Wei, an AI researcher at OpenAI who works on ChatGPT, tracks emergent abilities of LLMs, listing 137.

While emergent abilities might scare some people ("What if AI teaches itself to take control of humanity's computer systems?"), Wei thinks the new capabilities of LLMs could lead to several promising future research directions beyond simply scaling up. "Overall, the existence of emergent abilities implies that scaling further would unlock even more emergent abilities," he said. "This idea is super exciting to me." He specifically listed these potential research directions:

- Can we improve model architectures? E.g., sparsity, external memory, better objectives

- Can we improve data quality and quantity? Training for longer increases pre-training compute but not inference compute

- Better prompting. How can we extract the most performance out of an existing language model?

- Frontier tasks. What tasks are language models currently not able to perform, that we should evaluate on future language models of better quality?

- Why do emergent abilities occur, and can we predict them? E.g., do language models learn compositional abilities that enable them to solve harder problems?

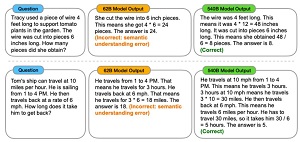

Chain of thought prompting enables sufficiently large models to solve multi-step reasoning problems. (source: Jason Wei).

"One example of an emergent prompting strategy is called 'chain-of-thought prompting,' for which the model is prompted to generate a series of intermediate steps before giving the final answer," he said. "Chain-of-thought prompting enables language models to perform tasks requiring complex reasoning, such as a multi-step math word problem. Notably, models acquire the ability to do chain-of-thought reasoning without being explicitly trained to do so." The graphic above shows an example of chain-of-thought prompting.
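To illustrate what such a prompt can look like in practice, here is a minimal sketch that builds a few-shot chain-of-thought prompt as plain text. The `Q:`/`A:` layout, the function name and the example problems are assumptions made for illustration; they are not taken from the article or its graphic, and no model is called.

```python
def build_cot_prompt(question, worked_examples):
    """Build a few-shot prompt whose examples spell out intermediate steps."""
    parts = []
    for example in worked_examples:
        # Each demonstration shows the reasoning steps before the final answer.
        parts.append(
            f"Q: {example['question']}\n"
            f"A: {example['steps']} The answer is {example['answer']}."
        )
    # The new question ends with an open "A:" for the model to continue.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Hypothetical worked example used as the demonstration.
EXAMPLE = {
    "question": "A box holds 3 red pens and 4 blue pens. Two more red pens "
                "are added. How many pens are in the box?",
    "steps": "The box starts with 3 + 4 = 7 pens. Adding 2 gives 7 + 2 = 9.",
    "answer": "9",
}

prompt = build_cot_prompt(
    "A shelf has 5 books. 3 are removed, then 6 are added. How many books remain?",
    [EXAMPLE],
)
print(prompt)
```

The resulting text would be sent to a language model, which is expected to imitate the demonstration and write out its own intermediate steps before the final answer.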

So, while AI LLM emergence may be mysterious and threatening, the phenomenon is being actively explored right now as a means to improve AI LLMs.

The fear mongers, however, were surely further inflamed by this Q&A sequence in Sunday's "60 Minutes" segment on advanced AI:

Scott Pelley: You don't fully understand how it works. And yet, you've turned it loose on society?

Sundar Pichai: Yeah. Let me put it this way. I don't think we fully understand how a human mind works either.

Stay tuned to find out if LLM emergent abilities turn out to be good or bad.

Bing Image Creator

Free, AI-powered Bing Image Creator and Bing Video Creator turn your words into stunning visuals and engaging videos in seconds. Generate images and videos quickly and easily, powered by DALL-E and Sora.

Create images from words with AI

Image Creator generates AI images based on your text.

What is Image Creator?

Image Creator is a product to help users generate AI images with DALL·E. Given a text prompt, our AI will generate a set of images matching that prompt.

Guides | NoFWL

app.nofwl.com

About

It is an unofficial project intended for personal learning and research purposes only. During the time that the ChatGPT desktop application was open-sourced, it received a lot of attention, and I would like to thank everyone for their support. However, as things have developed, there are two issues that seriously affect the project's next development plan:

- Some people have used it for repackaging and selling for profit.

- The name and icon of ChatGPT may be involved in infringement issues.

download:

Releases · lencx/ChatGPT

🔮 ChatGPT Desktop Application (Mac, Windows and Linux) - lencx/ChatGPT

ChatGPT Prompt Generator - a Hugging Face Space by merve

ChatGPT Prompt Generator

This app generates ChatGPT prompts. It is based on a BART model trained on this dataset.

Opera unveils integrated browser AI: Aria

Today we're excited to unveil Aria, our new browser AI that marks the beginning of a new kind of browsing experience.

blogs.opera.com

May 24th, 2023

Hey Opera users!

Today we unveil Aria, our new browser AI. With Aria, you’re getting access to a leading generative AI service for free. Natively built into the browser, Aria marks the beginning of a new kind of browsing experience.

Aria allows you to enhance your creativity and productivity by harnessing the power of AI. Based on our own “Composer” infrastructure, Aria connects to OpenAI’s GPT technology and is enhanced by additional capabilities such as adding live results from the web. Aria is both a web and a browser expert that allows you to collaborate with AI while looking for information on the web, generating text or code, or getting your product queries answered. For example, when it comes to customer support, Aria is knowledgeable about our whole database of support documentation and can use the company’s current product knowledge to answer your questions. Opera’s Composer infrastructure is also easily expandable. Composer allows Aria to connect to several AI models, and will in the future expand by integrating additional capabilities such as search services from multiple Opera partners. All of this will be unified into an innovative and coherent user experience.

Opera’s browser AI strategy

Aria marks the next step in our plans to integrate generative AI services in our browsers, and is a result of our collaboration with OpenAI. Earlier this year, we introduced a first implementation of ChatGPT in the sidebar of our desktop browser, along with the AI Prompts feature. We also unveiled Opera One, a redesigned version of our flagship browser, suited to accommodate more generative-AI features through its modular design. We’re now forging ahead by introducing a browser AI that will allow you to interact with the web aided by AI directly in the browser. Aria’s current form of a chat interface that communicates directly with you, the user, marks the first stage of the project. The AI-based service is set to become even more integrated into Opera in the coming versions of the browser, with the ultimate aim of being natively blended into the browser to help you perform cross-browser tasks.

Getting started

Aria is a free service with up-to-date information – which means it’s connected to the internet and not limited to content prior to 2021 – making it a more advanced offering than standard GPT-based solutions. It is shipping in more than 180 countries including the EU.

If you’re an Opera user on desktop, you can test Aria by downloading the newest version of Opera One (developer version). As an Android user, you can now test Aria in the latest beta version of the browser, downloadable in the Google Play Store. As a tester, the only thing you need to do in order to access Aria is to create an Opera account, if you do not already have one. Once done, you’ll be notified via email or inside of the product about your whitelisting status. Once your Opera Account is whitelisted, you can access Aria through the settings of Opera for Android beta or through the browser sidebar of Opera One.

To download Opera One click here

To download Opera for Android (beta) click here